Automating Bone Fragment Classification with Deep Learning

A recent study demonstrates that deep learning can feasibly and effectively automate the classification of bone fragments from Barn Owl (Tyto javanica javanica) pellets. It marks the first application of an object detection framework trained specifically to recognize skeletal fragments in owl pellets, offering an alternative to conventional pellet analysis, which relies on manual osteological examination. The manual method has limited throughput and a heightened risk of human error.

The Role of AI in Ecological Monitoring

A YOLOv12-based model addresses these limitations by enabling rapid, reproducible, and scalable detection of rodent skeletal components. Beyond streamlining classification, it directly supports conservation and pest management efforts. Researchers can photograph bone fragments throughout the year, and the Python script developed in the study can analyze hundreds of fragments in seconds. With manual methods, by contrast, collectors must retain and examine the physical specimens.
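
As a rough illustration of what the counting step after detection might look like, the sketch below tallies detected fragments per bone class. It is not the study's script: the `Detection` record layout, the class names, the file names, and the 0.5 confidence cutoff are all assumptions made for the example. In practice the records would come from the detector's output for each photograph.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Detection(NamedTuple):
    """One detected fragment (hypothetical record layout)."""
    image: str         # photograph the fragment was found in
    label: str         # predicted bone class
    confidence: float  # detector confidence in [0, 1]


def tally_fragments(detections: Iterable[Detection],
                    min_confidence: float = 0.5) -> Counter:
    """Count detections per bone class, ignoring low-confidence ones."""
    return Counter(d.label for d in detections
                   if d.confidence >= min_confidence)


# Made-up example records, for illustration only.
example = [
    Detection("pellet_01.jpg", "skull", 0.93),
    Detection("pellet_01.jpg", "femur", 0.88),
    Detection("pellet_01.jpg", "femur", 0.41),  # below the cutoff, dropped
    Detection("pellet_02.jpg", "mandible", 0.76),
]
print(tally_fragments(example))
```

The cutoff trades missed fragments against false detections, so it would need to be chosen against validation data rather than fixed at an arbitrary value.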

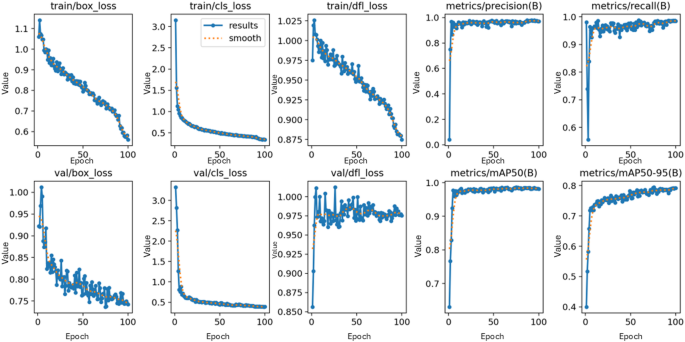

Performance Metrics and Model Efficacy

The deep learning model achieved a mean Average Precision (mAP@0.5) of 0.984, alongside precision and F1-score values of 0.90 and 0.97, respectively. These metrics are comparable to, and sometimes exceed, those of other ecological deep learning applications. One noteworthy finding is that classifying distinct bone types proved comparatively easy, yielding more consistent results than broader species recognition models, which often struggle with morphological variability.
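
For readers less familiar with these metrics, the following minimal sketch shows how precision, recall, and F1 relate to counts of true positives, false positives, and false negatives. The counts in the example are invented for illustration and are not taken from the study.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted fragments that were correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of annotated fragments that were found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Invented counts, for illustration only.
tp, fp, fn = 90, 10, 5
p = precision(tp, fp)
r = recall(tp, fn)
print(p, r, f1_score(p, r))
```

Because F1 is a harmonic mean, it always falls between precision and recall at a given confidence threshold, so summary values quoted side by side may have been read at different thresholds.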

Challenges and Mitigation Strategies

Despite its strong performance, the model struggled with background misclassification: 48% of true background instances were predicted as femurs. This points to an area for improvement in future iterations, namely tuning the objectness loss component during training to improve background discrimination. Detecting small, occluded, or degraded bone fragments also remains a persistent challenge in ecological imagery. While the model excelled with well-preserved fragments, factors such as overlapping structures and poor contrast reduced sensitivity for bones under 1 cm in length.
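
A figure like this is typically read off a confusion matrix normalized by true class. The sketch below shows that normalization under stated assumptions: the nested-dictionary layout, the class names, and the counts are all hypothetical, with the counts chosen only so that the background-to-femur share works out to 0.48.

```python
from typing import Dict

ConfusionCounts = Dict[str, Dict[str, int]]  # counts[true_label][predicted_label]


def normalize_by_true_class(counts: ConfusionCounts) -> Dict[str, Dict[str, float]]:
    """Convert raw counts into per-true-class fractions (each row sums to 1)."""
    normalized = {}
    for true_label, row in counts.items():
        total = sum(row.values())
        normalized[true_label] = {
            pred: (n / total if total else 0.0) for pred, n in row.items()
        }
    return normalized


# Hypothetical counts, for illustration only.
counts = {
    "background": {"femur": 12, "skull": 3, "mandible": 4, "pelvis": 6},
    "femur": {"femur": 40, "background": 2},
}
rates = normalize_by_true_class(counts)
print(round(rates["background"]["femur"], 2))
```

In object detection, the "true background" row usually collects detections that match no annotated object, so a large share for one class suggests that the detector tends to hallucinate that class on empty regions.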

Dual Strategy for Abundance Estimation

An innovative aspect of this study is its dual strategy for estimating rodent abundance. By using skull counts as a conservative metric and paired bones as secondary indicators, the researchers improved reliability, particularly when skulls are absent or damaged. This approach aligns with protocols established in previous pellet analysis studies and offers a non-invasive monitoring tool that minimizes disturbance to wildlife, which is particularly valuable in protected areas where ecological impact must be kept low.
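
One way such a dual estimate could be computed is sketched below. The details are assumptions rather than the study's procedure: the list of paired bones is hypothetical, each individual is assumed to contribute at most two of any paired bone, and taking the larger of the two estimates as a combined figure is a choice made here for illustration.

```python
import math
from typing import Dict, Mapping, Sequence

# Hypothetical list of paired elements; the study's classes may differ.
PAIRED_BONES = ("femur", "humerus", "tibia", "pelvis", "mandible")


def estimate_individuals(counts: Mapping[str, int],
                         paired: Sequence[str] = PAIRED_BONES) -> Dict[str, int]:
    """Estimate the minimum number of individuals from per-class bone counts."""
    skull_based = counts.get("skull", 0)
    # Each animal has two of every paired bone, so n bones imply
    # at least ceil(n / 2) individuals.
    paired_based = max(
        (math.ceil(counts.get(bone, 0) / 2) for bone in paired), default=0
    )
    return {
        "skull_based": skull_based,
        "paired_bone_based": paired_based,
        "combined": max(skull_based, paired_based),  # assumption: take the larger
    }


# Invented counts: two skulls, five femurs, three humeri.
print(estimate_individuals({"skull": 2, "femur": 5, "humerus": 3}))
```

With these invented counts the skull-based estimate is 2, while five femurs imply at least three animals, so the paired-bone estimate catches an individual whose skull was missing or too damaged to detect.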

The Importance of Non-Invasive Monitoring

Rodents are prolific agricultural pests that cause considerable damage across a range of crops. Accurate, timely monitoring of rodent populations is vital for effective pest management interventions. Traditional trapping methods are costly, time-consuming, and often impractical for large-scale operations. Pellet-based monitoring provides a cost-effective, passive alternative that reflects long-term prey presence. Adding AI-driven image analysis makes this monitoring timely and scalable, supporting integrated pest management (IPM) frameworks.

Limitations of Object Detection Frameworks

The study also notes structural limitations inherent to YOLO-based detectors, particularly the challenges of using bounding boxes for overlapping or fragmented objects—common scenarios in densely packed owl pellets. To accurately detect bone fragments, physical disaggregation of pellets is required, as intact pellets obscure important skeletal elements. Emerging segmentation models, such as Mask R-CNN and YOLOv5-seg, present an opportunity for improving spatial precision through pixel-level delineation, addressing some of these challenges.
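
The difficulty with overlapping fragments can be made concrete with intersection over union (IoU), the standard overlap measure for bounding boxes. The sketch below is a generic implementation, not code from the study; the `(x1, y1, x2, y2)` corner format and the example coordinates are assumptions.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two hypothetical bones lying across each other.
bone_a = (0.0, 0.0, 10.0, 10.0)
bone_b = (5.0, 0.0, 15.0, 10.0)
print(iou(bone_a, bone_b))
```

When two fragments of the same class overlap heavily, their boxes have a high IoU and one detection can be discarded during non-maximum suppression. Pixel-level masks avoid part of this problem because they follow each fragment's outline rather than its enclosing rectangle.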

Broader Testing and Future Directions

While the model has shown consistent results in multiple countries, further validation across different owl populations, seasons, and prey assemblages is necessary. Environmental heterogeneity can significantly influence model performance. Strategies such as domain adaptation and data augmentation could enhance the model’s applicability across varying habitats. Expanding the anatomical class diversity and incorporating more sophisticated segmentation techniques will be critical for addressing challenges related to overlapping objects.

Democratizing Access to Deep Learning Tools

This study underscores the value of cloud-based platforms, such as Roboflow and Google Colab, in making deep learning more accessible to researchers worldwide. However, practical constraints like computational limitations and reliance on internet connectivity call for lightweight, locally deployable solutions. The framework developed in this research can be adapted for additional ecological scenarios involving fragmented biological materials, including scat analysis and forensic classifications of bones.

The Versatility of the Model

The model lends itself to applications in conservation, agriculture, and ecological research where accurate species monitoring is essential. It can be modified to identify different skeletal elements or to discriminate among species, which supports ongoing research and efforts to maintain ecological balance.

Conclusion of Findings

The results of this study show that deep learning, specifically the YOLOv12 object detection framework, can effectively automate the classification of rodent bones within barn owl pellets. The performance metrics reflect the model’s reliability, even under complex biological conditions. By integrating the model with an inference pipeline for estimating rodent abundance, the research lays the groundwork for a scalable ecological monitoring tool for wildlife management and conservation. Future studies remain vital for improving the generalizability and sensitivity of the approach.