Unveiling TarFlow: Advancing Normalizing Flows in Generative Modeling

Normalizing Flows (NFs) have long been recognized as a compelling framework for density estimation and generative modeling over continuous inputs. Despite the initial excitement around them, NFs have received less attention in recent years than other generative modeling approaches. Recent work, particularly the introduction of TarFlow, is breathing new life into this modeling technique.

The Importance of Normalizing Flows

NFs offer an elegant approach to density estimation: they learn an invertible transformation between a simple distribution, such as a Gaussian, and a more complex data distribution. Because the transformation is invertible, the model can evaluate exact likelihoods through the change-of-variables formula and generate samples by running the transformation in reverse. This is particularly valuable in fields like computer vision, where data is high-dimensional and complex. Although NFs showed early promise, they often fell short in performance when compared to other generative models such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). TarFlow raises the bar for NFs considerably.
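To make the density-estimation idea concrete, here is a minimal sketch of the change-of-variables computation for a one-dimensional affine flow. The function names are illustrative only and are not taken from TarFlow; the point is simply that the flow's log-density is the base log-density of the mapped point plus the log-determinant of the map.

```python
import math

def standard_normal_logpdf(z):
    # Log-density of the base distribution N(0, 1).
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def affine_flow_logpdf(x, mu, log_sigma):
    # Model: x = mu + exp(log_sigma) * z with z ~ N(0, 1).
    z = (x - mu) * math.exp(-log_sigma)  # map data back to the latent space
    log_det = -log_sigma                 # log |dz/dx| for this affine map
    return standard_normal_logpdf(z) + log_det

def gaussian_logpdf(x, mu, sigma):
    # Closed-form reference: log-density of N(mu, sigma^2).
    return (-0.5 * ((x - mu) / sigma) ** 2
            - math.log(sigma) - 0.5 * math.log(2.0 * math.pi))

flow_value = affine_flow_logpdf(1.3, mu=0.5, log_sigma=math.log(2.0))
reference = gaussian_logpdf(1.3, mu=0.5, sigma=2.0)
```

In this toy case the two values agree, since an affine transformation of a Gaussian is again a Gaussian. Real flows stack many such invertible steps so that the resulting density is far more expressive.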

What is TarFlow?

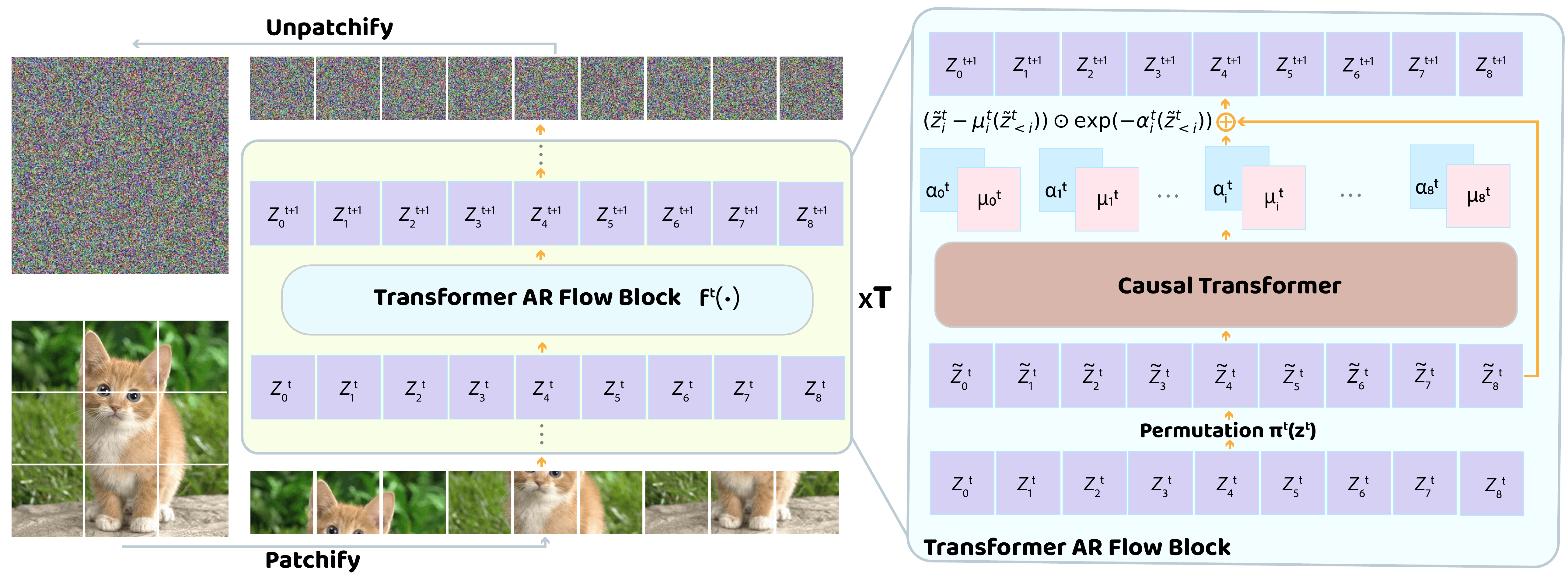

TarFlow is a simple and scalable Normalizing Flow architecture. Inspired by Transformers and Masked Autoregressive Flows (MAFs), it consists of a stack of autoregressive Transformer blocks applied to image patches, with the autoregression direction alternating between layers. Because the direction alternates, the patches that come first in one layer's ordering come last in the next, so no patch is restricted to a one-sided context across the whole stack. This helps the model capture the data distribution more effectively than earlier flow designs.
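The structure described above can be sketched in a few lines. This is a toy illustration under loud assumptions: each "patch" is a single number, and the hypothetical `conditioner` function is a hand-written stand-in for a causal Transformer block, so none of these names or formulas come from the TarFlow implementation. What the sketch does show is the general recipe: an affine autoregressive update per block, a flip of the ordering between blocks, and an exact inverse.

```python
import math

def conditioner(prefix, layer):
    # Hypothetical causal stand-in for a Transformer block: the shift and
    # log-scale for position t depend only on the tokens before t.
    if not prefix:
        return 0.0, 0.0  # the first token passes through unchanged
    mean = sum(prefix) / len(prefix)
    return 0.5 * mean / (layer + 1), 0.1 * math.tanh(mean)

def block_forward(x, layer):
    # Data -> latent direction for one block; returns (z, log_det).
    z, log_det = [], 0.0
    for t in range(len(x)):
        shift, log_scale = conditioner(x[:t], layer)
        z.append((x[t] - shift) * math.exp(-log_scale))
        log_det -= log_scale
    return z, log_det

def block_inverse(z, layer):
    # Latent -> data direction; must be computed one token at a time.
    x = []
    for t in range(len(z)):
        shift, log_scale = conditioner(x, layer)
        x.append(z[t] * math.exp(log_scale) + shift)
    return x

def flow_forward(x, num_blocks=4):
    total_log_det = 0.0
    for layer in range(num_blocks):
        x, log_det = block_forward(x, layer)
        total_log_det += log_det
        x = x[::-1]  # alternate the autoregression direction
    return x, total_log_det

def flow_inverse(z, num_blocks=4):
    for layer in reversed(range(num_blocks)):
        z = block_inverse(z[::-1], layer)  # undo the flip, then the block
    return z

patches = [0.3, -1.2, 0.8, 2.0]
latent, log_det = flow_forward(patches)
recovered = flow_inverse(latent)
```

Note the asymmetry typical of autoregressive flows: the forward pass can process all positions independently given the input, while the inverse pass has to generate tokens sequentially.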

Training Made Easy

One of the standout features of TarFlow is how straightforward it is to train. The architecture is designed for end-to-end training that can scale up seamlessly, making it not only user-friendly but also a reliable choice for practitioners looking to deploy powerful NF models. Simply put, TarFlow takes the complexity out of implementing high-performing Normalizing Flows while delivering impressive results.

Cutting-Edge Techniques for Improved Sample Quality

TarFlow doesn’t stop at being an innovative architecture; it also incorporates three key techniques aimed at enhancing the quality of generated samples:

- Gaussian Noise Augmentation: During training, integrating Gaussian noise into inputs significantly helps the model generalize, enabling it to learn more robust representations. This technique mitigates the risk of overfitting to the training data.

- Post-Training Denoising Procedure: After the training process, a dedicated denoising step aids in refining the generated samples, ultimately leading to a more polished output that better resembles real data distributions. This step enhances clarity and cohesion within the generated images, making them more visually appealing.

- Effective Guidance Method: TarFlow includes an effective guidance mechanism that operates in both class-conditional and unconditional settings. This duality allows for greater versatility in sample generation, empowering users to produce diverse outputs tailored to specific needs.
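The first two techniques can be illustrated on a toy problem. The article does not spell out the form of the denoising step, so the sketch below assumes a score-based correction (Tweedie's formula), which is one common way to remove Gaussian noise given a density model of the noisy data. The data here is a one-dimensional Gaussian, so the score is available in closed form; in a real flow it would be the gradient of the model's log-density. All names are hypothetical, and guidance is not covered.

```python
import random

def add_noise(x, sigma, rng):
    # Noise augmentation: perturb each input with N(0, sigma^2) noise.
    return [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]

def tweedie_denoise(y, score, sigma):
    # Assumed denoising rule: x_hat = y + sigma^2 * grad_y log p(y).
    return [yi + sigma ** 2 * si for yi, si in zip(y, score(y))]

data_std, sigma = 1.0, 0.5

def noisy_score(y):
    # Analytic score of the noisy toy data, N(0, data_std^2 + sigma^2).
    # This stands in for the gradient of a trained model's log-density.
    var = data_std ** 2 + sigma ** 2
    return [-yi / var for yi in y]

def mse(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)

rng = random.Random(0)
clean = [rng.gauss(0.0, data_std) for _ in range(5000)]
noisy = add_noise(clean, sigma, rng)
denoised = tweedie_denoise(noisy, noisy_score, sigma)

error_before = mse(noisy, clean)
error_after = mse(denoised, clean)
```

On this toy data the denoised points are closer to the clean ones on average than the noisy points are. This is why a model trained on noise-augmented inputs can still produce clean samples after a single correction step.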

Stellar Performance: A New Benchmark for NFs

Thanks to these innovations, TarFlow has achieved state-of-the-art results in likelihood estimation for images, setting a new benchmark in the domain. It outperforms previous methods by a significant margin, marking a turning point for NFs. This leap is especially notable because TarFlow generates image samples with quality and diversity comparable to those of diffusion models while remaining a standalone NF architecture.

Visual Illustrations of TarFlow

To truly appreciate the capabilities of TarFlow, let’s highlight a couple of visual representations:

- Generated Samples: The first figure showcases a remarkable assortment of images generated by TarFlow at various resolutions. Each sample illustrates the model’s adeptness at capturing complex patterns and details inherent in real-world images.

Figure 1: Samples at various resolutions generated by TarFlow.

- Model Architecture: The second figure illustrates the intricate model architecture of TarFlow, offering a glimpse into the layers and operations that contribute to its high performance.

Figure 2: Model architecture of TarFlow.

The Future of Normalizing Flows

With the introduction of TarFlow, we are witnessing a revitalization of interest in Normalizing Flows as a viable option for generative modeling. Its elegant architecture, ease of training, and state-of-the-art results will likely inspire further research and applications in a variety of fields ranging from image generation to more complex multi-modal models.

As the landscape of machine learning evolves, the resurgence of NFs through innovations like TarFlow may well signal an important shift, and it gives researchers and practitioners a good reason to revisit what flow-based models can do. Whether you’re engaged in academia, industry, or merely fascinated by the capabilities of AI, TarFlow represents a noteworthy advancement worth keeping an eye on.