Unlocking the Potential of Generative AI for Your Security Operations Center

Core Concept of Generative AI in Security Operations

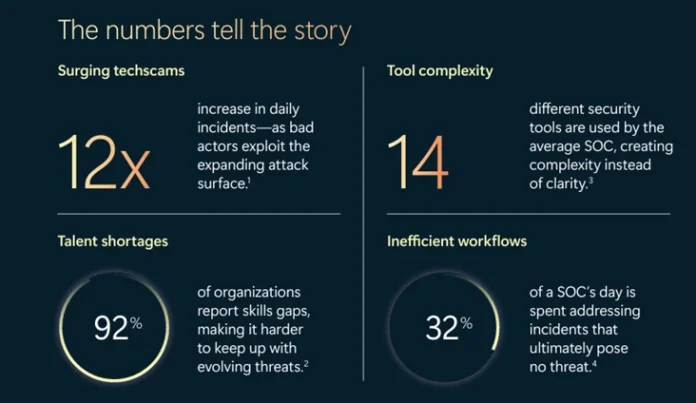

Generative AI refers to algorithms that create new content based on patterns learned from existing data. In security operations, this technology can change how security analysts monitor, detect, and respond to threats. As threat volumes rise and alert fatigue grows, generative AI can help security operations centers (SOCs) become both more efficient and more effective.

For example, imagine a busy office full of analysts overwhelmed by an avalanche of alerts about potential threats. Generative AI can help triage this data, filtering out noise and surfacing genuine risks so that human analysts can focus on high-impact issues. This shift can raise productivity and lead to faster, better-informed decisions when responding to threats.

Key Components of Generative AI for SOCs

Implementing generative AI in a SOC involves several key components. First, it requires robust data inputs, such as past incident reports and threat intelligence. Next, machine learning models analyze this data and learn what normal activity looks like, so that they can flag patterns and anomalies that may indicate emerging threats.
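
As a minimal sketch of how a model can learn normal behavior from historical data and flag departures from it, the Python below swaps in a simple statistical baseline (a z-score against the historical mean and standard deviation) in place of a generative model. The function names, the threshold of 3.0, and the sample counts are hypothetical.

```python
from statistics import mean, stdev

def learn_baseline(history: list[float]) -> tuple[float, float]:
    """Learn the mean and standard deviation of a metric from historical observations."""
    if len(history) < 2:
        raise ValueError("need at least two historical observations")
    return mean(history), stdev(history)

def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against the learned baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly constant history: any deviation at all is unusual
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical daily counts of outbound connections from one host
history = [120, 115, 130, 125, 118, 122, 127]
baseline = learn_baseline(history)
print(is_anomalous(124, baseline))  # False: within the learned range
print(is_anomalous(400, baseline))  # True: far outside it
```

Real deployments learn far richer patterns than a single mean and deviation, but the principle is the same: the historical data defines "normal", and new observations are judged against it.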

A useful analogy is how teaching methods evolve. Just as educators use past student performance to adjust their teaching strategies, SOCs can use historical data to refine their security measures. Because these models can be retrained or updated as new data arrives, they can adapt to new threats, which makes them a valuable tool in a constantly changing threat landscape.

Step-by-Step Workflow Integration

Integrating generative AI into SOC workflows generally follows a systematic process. First comes data collection: gathering relevant information from sources such as network logs and threat feeds. Next is analysis, in which generative AI tools sift through the data to identify potential threats. The final step is automation, in which the AI performs specific remediation tasks, such as isolating compromised machines.
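
The three stages can be sketched as a small Python pipeline. This is a hedged illustration, not a product integration: the `Event` type, the `collect`, `analyze`, and `automate` functions, the score thresholds, and `toy_scorer` (a placeholder for whatever model performs the analysis) are all hypothetical, and "isolation" here only records the host name rather than calling any real containment API.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Event:
    source: str       # where the record came from, e.g. "network_log"
    host: str
    description: str
    score: float      # risk score in [0, 1] produced by the analysis step

@dataclass
class PipelineResult:
    flagged: list[Event] = field(default_factory=list)
    isolated_hosts: set[str] = field(default_factory=set)

def collect(sources: dict[str, list[dict]]) -> list[dict]:
    """Step 1: gather raw records from every configured source, tagging each with its origin."""
    return [{**item, "source": name} for name, items in sources.items() for item in items]

def analyze(records: list[dict], scorer: Callable[[dict], float]) -> list[Event]:
    """Step 2: score each record; `scorer` is a placeholder for the AI model."""
    return [Event(r["source"], r["host"], r["description"], scorer(r)) for r in records]

def automate(events: list[Event], flag_at: float = 0.5, isolate_at: float = 0.9) -> PipelineResult:
    """Step 3: flag risky events and mark hosts behind the highest-risk ones for isolation."""
    result = PipelineResult()
    for event in events:
        if event.score >= flag_at:
            result.flagged.append(event)
        if event.score >= isolate_at:
            # A real deployment would call an EDR or network-control API here
            result.isolated_hosts.add(event.host)
    return result

sources = {
    "network_log": [{"host": "ws-12", "description": "beaconing to a rare domain"}],
    "threat_feed": [{"host": "ws-07", "description": "file hash matches known malware"}],
}

def toy_scorer(record: dict) -> float:
    # Hypothetical stand-in for a model's risk judgment
    return 0.95 if "malware" in record["description"] else 0.6

result = automate(analyze(collect(sources), toy_scorer))
print(sorted(result.isolated_hosts))  # ['ws-07']
print(len(result.flagged))            # 2
```

Keeping the scorer as a plain function argument means the analysis model can be swapped without touching the collection or automation stages.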

For example, when an unusual spike in login attempts is detected, the AI can automatically flag it for investigation and run a preliminary analysis to give analysts context. This kind of integration can significantly reduce the mean time to resolution (MTTR), improving overall operational effectiveness.
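
A sliding-window counter is one simple way to detect a login spike and attach a preliminary summary for the analyst. The sketch below assumes events arrive in time order; the class name, the five-minute window, and the limit of ten attempts are illustrative assumptions rather than recommended values, and no generative model is involved.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Optional

class LoginSpikeDetector:
    """Flag an account when its login attempts within a sliding window exceed a limit."""

    def __init__(self, window: timedelta = timedelta(minutes=5), limit: int = 10):
        self.window = window
        self.limit = limit
        self.attempts: dict[str, deque] = {}

    def record(self, account: str, ts: datetime, source_ip: str) -> Optional[dict]:
        """Record one attempt; return a preliminary summary if the account is now spiking."""
        q = self.attempts.setdefault(account, deque())
        q.append((ts, source_ip))
        # Evict attempts older than the window (assumes events arrive in time order)
        while q and ts - q[0][0] > self.window:
            q.popleft()
        if len(q) <= self.limit:
            return None
        # Preliminary analysis handed to the analyst alongside the flag
        return {
            "account": account,
            "attempts_in_window": len(q),
            "distinct_source_ips": len({ip for _, ip in q}),
            "first_seen": q[0][0].isoformat(),
        }

detector = LoginSpikeDetector()
start = datetime(2024, 1, 1, 9, 0)
alerts = []
for i in range(12):
    summary = detector.record("alice", start + timedelta(seconds=10 * i), f"203.0.113.{i % 3}")
    if summary:
        alerts.append(summary)
print(len(alerts))  # 2
print(alerts[-1])
```

The eleventh and twelfth attempts both exceed the limit, so two summaries are produced; in practice you would likely deduplicate repeated flags for the same account.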

Real-World Application Scenarios

In practice, generative AI can substantially change how security operations run. In one recent case study, a financial services institution deployed generative AI to manage its SOC's workflow. The AI identified a pattern of suspicious transactions linked to a known malware strain.

The AI consolidated alerts from multiple sources, gave analysts a comprehensive summary, and prioritized the investigation by severity. This reduced noise and accelerated the response, ultimately preventing significant financial losses. Improvements like these in incident handling show how much generative AI can change the way a SOC operates.
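
The consolidation and prioritization step described in this case study can be approximated as grouping alerts by affected entity and ranking the groups by their worst severity. In this hypothetical sketch, the severity scale, field names, and sample alerts are invented, and the summary is a fixed template standing in for text a generative model would draft.

```python
from collections import defaultdict

# Hypothetical severity scale; higher rank means more urgent
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}

def consolidate(alerts: list[dict]) -> list[dict]:
    """Group alerts by affected entity and rank the resulting incidents by worst severity."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for alert in alerts:
        groups[alert["entity"]].append(alert)

    incidents = []
    for entity, items in groups.items():
        worst = max(items, key=lambda a: SEVERITY_RANK[a["severity"]])["severity"]
        sources = sorted({a["source"] for a in items})
        incidents.append({
            "entity": entity,
            "severity": worst,
            "alert_count": len(items),
            # Template summary; a generative model would draft richer text here
            "summary": f"{len(items)} alert(s) on {entity} from {', '.join(sources)}; worst severity: {worst}",
        })
    # Most severe first; ties broken by how many alerts were merged
    incidents.sort(key=lambda inc: (SEVERITY_RANK[inc["severity"]], inc["alert_count"]), reverse=True)
    return incidents

alerts = [
    {"entity": "acct-4411", "source": "fraud_engine", "severity": "high"},
    {"entity": "acct-4411", "source": "edr", "severity": "critical"},
    {"entity": "srv-db-02", "source": "siem", "severity": "medium"},
    {"entity": "acct-4411", "source": "siem", "severity": "medium"},
]
incidents = consolidate(alerts)
for inc in incidents:
    print(inc["summary"])
```

Four raw alerts collapse into two incidents, with the account that drew a critical alert at the top of the queue.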

Common Mistakes and How to Avoid Them

Implementing generative AI isn't without its pitfalls. A common mistake is underestimating the importance of input data quality. Poor data leads to ineffective model training, resulting in false positives or false negatives. To mitigate this risk, validate and clean data before it reaches the system so that only high-quality, relevant data feeds into it.
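
One way to act on this advice is to validate records before they reach the model. The minimal sketch below assumes a hypothetical schema of `timestamp`, `host`, and `event_type` fields, with timestamps that Python's `datetime.fromisoformat` can parse; it rejects records with missing fields or unparseable timestamps and drops exact duplicates, recording a reason for each rejection.

```python
from datetime import datetime

REQUIRED_FIELDS = ("timestamp", "host", "event_type")  # hypothetical schema

def validate_records(records: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split records into clean ones and (record, reason) rejections."""
    clean, rejected = [], []
    seen = set()
    for r in records:
        missing = [f for f in REQUIRED_FIELDS if not r.get(f)]
        if missing:
            rejected.append((r, f"missing fields: {', '.join(missing)}"))
            continue
        try:
            datetime.fromisoformat(r["timestamp"])
        except (TypeError, ValueError):
            rejected.append((r, "unparseable timestamp"))
            continue
        # Exact duplicates add no information and can skew training
        key = (r["timestamp"], r["host"], r["event_type"])
        if key in seen:
            rejected.append((r, "duplicate"))
            continue
        seen.add(key)
        clean.append(r)
    return clean, rejected

records = [
    {"timestamp": "2024-05-01T10:00:00", "host": "ws-01", "event_type": "login"},
    {"timestamp": "2024-05-01T10:00:00", "host": "ws-01", "event_type": "login"},
    {"timestamp": "yesterday", "host": "ws-02", "event_type": "login"},
    {"host": "ws-03", "event_type": "dns_query"},
]
clean, rejected = validate_records(records)
print(len(clean))                              # 1
print([reason for _, reason in rejected])
```

Keeping the rejection reasons, rather than silently dropping records, makes it easier to trace data problems back to the feed that produced them.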

Another issue is resistance from staff who may be hesitant to trust AI-driven insights. Training staff and demonstrating the AI's capabilities and reliability can ease these concerns, fostering a culture in which technology complements human expertise rather than replacing it.

Tools and Metrics for Effectiveness

Various tools are available for integrating generative AI into SOCs. Microsoft Security Copilot, for instance, is designed to enhance operational efficiency through real-time insights and automated response options. Organizations using such tools can track metrics such as MTTR and incident response accuracy to evaluate performance.
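
Both metrics are straightforward to compute once incidents carry timestamps and verdicts. In this sketch, MTTR is the average time from detection to resolution over resolved incidents, and accuracy is assumed to mean the share of AI triage verdicts that matched the analyst's final determination. That definition, the field names, and the sample data are assumptions; your tooling may define these metrics differently.

```python
from datetime import datetime, timedelta

def mean_time_to_resolve(incidents: list[dict]) -> timedelta:
    """Average (resolved - detected) over incidents that have been resolved."""
    durations = [i["resolved"] - i["detected"] for i in incidents if i.get("resolved")]
    if not durations:
        raise ValueError("no resolved incidents")
    return sum(durations, timedelta()) / len(durations)

def triage_accuracy(verdicts: list[tuple[str, str]]) -> float:
    """Fraction of (ai_verdict, analyst_verdict) pairs that agree."""
    if not verdicts:
        raise ValueError("no verdicts")
    return sum(1 for ai, analyst in verdicts if ai == analyst) / len(verdicts)

t0 = datetime(2024, 3, 1, 8, 0)
incidents = [
    {"detected": t0, "resolved": t0 + timedelta(hours=2)},
    {"detected": t0, "resolved": t0 + timedelta(hours=4)},
    {"detected": t0, "resolved": None},  # still open, so excluded from MTTR
]
verdicts = [
    ("malicious", "malicious"),
    ("benign", "malicious"),
    ("benign", "benign"),
    ("malicious", "malicious"),
]
print(mean_time_to_resolve(incidents))  # 3:00:00
print(triage_accuracy(verdicts))        # 0.75
```

Tracking these values before and after a deployment, over the same kinds of incidents, gives a fairer comparison than a single snapshot.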

When selecting tools, consider the scope of coverage, integration capabilities, and the specific needs of your organization. Limiting tools to those that meet precise requirements can prevent unnecessary complexity and fragmentation in the security environment.
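
A weighted scoring matrix is one common way to apply these criteria consistently. The weights, the 1 to 5 rating scale, the cutoff of 3.5, and the tool names below are hypothetical placeholders, not an assessment of any real product.

```python
# Hypothetical criteria weights (summing to 1.0); adjust to your organization's priorities
WEIGHTS = {"coverage": 0.4, "integration": 0.35, "fit": 0.25}

def score_tool(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of 1-5 ratings for each selection criterion."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(weights[c] * ratings[c] for c in weights)

def shortlist(candidates: dict[str, dict[str, float]], minimum: float = 3.5) -> list[str]:
    """Keep only tools that clear the minimum score, best first."""
    scored = {name: score_tool(r) for name, r in candidates.items()}
    return sorted((n for n, s in scored.items() if s >= minimum), key=lambda n: scored[n], reverse=True)

candidates = {
    "tool_a": {"coverage": 5, "integration": 4, "fit": 3},
    "tool_b": {"coverage": 3, "integration": 2, "fit": 4},
    "tool_c": {"coverage": 4, "integration": 5, "fit": 4},
}
print(shortlist(candidates))  # ['tool_c', 'tool_a']
```

The cutoff is what enforces the "limit your tools" advice: anything below it is excluded rather than merely ranked lower.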

Alternatives to Generative AI in SOCs

While generative AI offers substantial benefits, there are alternative approaches, such as traditional rule-based systems. These systems rely on predefined rules, which can be effective for known threats but may lack adaptability for evolving ones.
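
To illustrate both the strength and the limitation of predefined rules, here is a small pattern-matching sketch. The two rules and the sample events are hypothetical: the first event matches a rule exactly, while a lightly altered command line (a different executable name and a shorter flag) slips past it.

```python
import re

# Predefined rules for known threats (illustrative patterns, not production signatures)
RULES = {
    "encoded_powershell": re.compile(r"powershell(?:\.exe)?\s+-enc(?:odedcommand)?\b", re.IGNORECASE),
    "known_bad_domain": re.compile(r"\bupdate-check\.example\b"),
}

def match_rules(event: str) -> list[str]:
    """Return the names of every rule whose pattern appears in the event text."""
    return [name for name, pattern in RULES.items() if pattern.search(event)]

print(match_rules("powershell.exe -enc SQBFAFgA"))           # ['encoded_powershell']
print(match_rules("GET http://update-check.example/payload"))  # ['known_bad_domain']
print(match_rules("pwsh -ec SQBFAFgA"))                       # []: the variant evades the rule
```

Catching the variant requires someone to anticipate it and write another rule, which is the adaptability gap the comparison above describes.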

The primary advantage of generative AI over traditional systems is its adaptability: models can be retrained or given fresh context as threats change, whereas rules must be rewritten by hand. However, organizations must evaluate their specific context. For example, smaller companies with less complex environments might benefit more from straightforward rule-based systems, while larger enterprises are more likely to realize the advantages of a comprehensive AI-driven approach.

FAQ

What is the primary advantage of using generative AI in SOCs?

Generative AI improves threat detection and shortens response times by analyzing large volumes of data to find meaningful patterns, allowing analysts to focus on higher-priority tasks.

Can generative AI completely replace human analysts?

No. Generative AI is designed to augment human analysts, not replace them. Human analysts bring critical thinking and contextual understanding that AI cannot fully replicate.

How does generative AI help in reducing false positives?

By analyzing historical data and learning what legitimate activity looks like, generative AI can distinguish typical behavior from genuine anomalies, reducing the number of false alerts.
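
The idea of learning from history which alerts are benign can be sketched without any generative model, using simple frequency counts over alerts that analysts previously closed. In this hypothetical example, a (rule, host) pair is suppressed only if it has appeared at least three times and at least 95% of those alerts were false positives; the thresholds, field names, and verdict labels are assumptions.

```python
from collections import Counter

def learn_benign_signatures(history: list[dict], min_count: int = 3, min_benign_ratio: float = 0.95) -> set:
    """Find (rule, host) pairs that analysts have almost always closed as false positives."""
    totals, benign = Counter(), Counter()
    for alert in history:
        key = (alert["rule"], alert["host"])
        totals[key] += 1
        if alert["verdict"] == "false_positive":
            benign[key] += 1
    return {k for k, n in totals.items() if n >= min_count and benign[k] / n >= min_benign_ratio}

def triage(alerts: list[dict], benign_signatures: set) -> tuple[list[dict], list[dict]]:
    """Split new alerts into those to surface and those suppressed as known-benign."""
    surfaced, suppressed = [], []
    for alert in alerts:
        if (alert["rule"], alert["host"]) in benign_signatures:
            suppressed.append(alert)
        else:
            surfaced.append(alert)
    return surfaced, suppressed

# An authorized vulnerability scanner trips the port-scan rule every time
history = [{"rule": "port_scan", "host": "vuln-scanner-01", "verdict": "false_positive"}] * 5 + [
    {"rule": "port_scan", "host": "ws-33", "verdict": "true_positive"},
    {"rule": "port_scan", "host": "ws-33", "verdict": "false_positive"},
    {"rule": "port_scan", "host": "ws-33", "verdict": "false_positive"},
]
signatures = learn_benign_signatures(history)
surfaced, suppressed = triage(
    [{"rule": "port_scan", "host": "vuln-scanner-01"}, {"rule": "port_scan", "host": "ws-33"}],
    signatures,
)
print([a["host"] for a in surfaced])    # ['ws-33']
print([a["host"] for a in suppressed])  # ['vuln-scanner-01']
```

The high benign-ratio threshold is deliberate: `ws-33` has one confirmed true positive in its history, so its alerts keep surfacing even though most were false positives.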

Is generative AI easy to integrate into existing systems?

The integration process can be complex and may require training and adaptation. Selecting the right tools that align with your existing infrastructure can ease this transition.