Unlocking Generative AI: A Method to Identify Personalized Objects

Understanding Personalized Object Localization

Personalized object localization refers to the ability of artificial intelligence (AI) systems to identify and track specific objects within images and videos. This task is crucial for applications ranging from monitoring pets to recognizing personal belongings. Unlike general object recognition, which identifies common items like “dog” or “car,” personalized localization focuses on specific instances, such as “Bowser the French Bulldog.” The distinction matters because many practical tasks require finding one particular object rather than any member of its category, which means the system has to use context about that specific instance.

Why Personalized Localization Matters

Effective personalized localization matters across many industries. In pet monitoring, for instance, letting owners see exactly where their pet is while they are at work offers peace of mind and keeps them engaged with the app. Personalized localization can also strengthen assistive technologies for visually impaired people, supporting more precise navigation and interaction with their surroundings. By making AI systems more aware of context, businesses can offer more personalized experiences and, in turn, increase user satisfaction and trust.

Key Components of the New Method

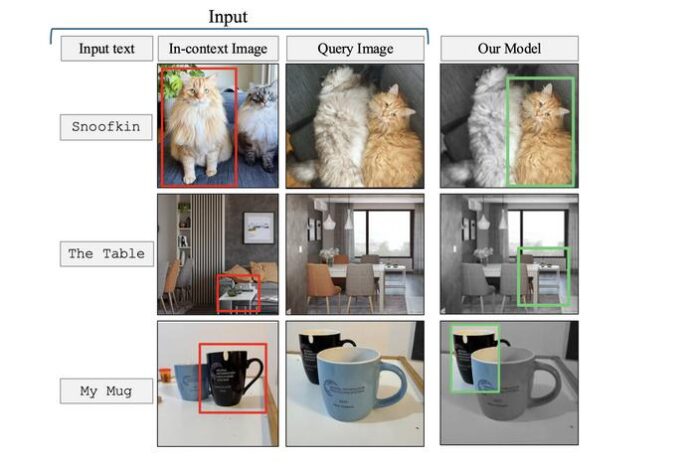

The method, developed by researchers at MIT and the MIT-IBM Watson AI Lab, rests on three components: dataset design, the training technique, and object naming conventions.

- Dataset Design: Instead of using random, unrelated images of objects, the researchers built a dataset from video-tracking data, in which the same object is followed across multiple frames. This lets models learn from context rather than relying solely on memorized identifiers.

- Training Technique: The method fine-tunes existing vision-language models (VLMs) on the newly designed dataset. This improves localization accuracy while preserving the models’ general capabilities.

- Object Naming Conventions: Giving objects pseudo-names, such as calling a tiger “Charlie,” forces the model to rely on contextual clues rather than its pre-existing knowledge, which improves its ability to identify personalized objects.
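
To make the pseudo-name idea concrete, here is a minimal Python sketch that rewrites a training question so the category word is replaced by a made-up name. The name pool `PSEUDO_NAMES`, the helpers `pick_pseudo_name` and `apply_pseudo_name`, and the choice to also drop a leading article (“the tiger” becomes “Charlie”) are illustrative assumptions, not the researchers’ actual pipeline.

```python
import random
import re

# Hypothetical pool of neutral names; the researchers' actual name list is not described.
PSEUDO_NAMES = ["Charlie", "Milo", "Juno", "Pixel", "Nova"]


def pick_pseudo_name(rng: random.Random) -> str:
    """Choose a pseudo-name for one tracked object."""
    return rng.choice(PSEUDO_NAMES)


def apply_pseudo_name(text: str, category: str, pseudo_name: str) -> str:
    """Replace whole-word mentions of `category` (case-insensitive), together with
    an optional leading 'the', 'a' or 'an', by `pseudo_name`."""
    pattern = re.compile(
        rf"\b(?:(?:the|a|an)\s+)?{re.escape(category)}\b", re.IGNORECASE
    )
    # A function replacement avoids treating backslashes in the name as escapes.
    return pattern.sub(lambda _match: pseudo_name, text)


rng = random.Random(0)
name = pick_pseudo_name(rng)
print(apply_pseudo_name("Where is the tiger in this frame?", "tiger", name))
```

Because the rewritten question never mentions “tiger,” the model cannot simply fall back on what it already knows about tigers and has to use the example frames instead.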

Step-by-Step Process of Training

- Data Collection: The researchers drew on video-tracking footage showing the same object in various contexts. For example, a tiger might be filmed walking, resting, and drinking water.

- Dataset Structuring: They cut frames from each video and paired them with example questions about the object’s location within the scene.

- Fine-Tuning the Model: They fine-tuned existing VLMs on this dataset, which significantly improved the models’ localization capabilities.
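
The collection and structuring steps can be sketched in Python as turning one object track into a single in-context training example: a few context frames with known boxes, followed by a query frame whose box is the answer. The `TrackedFrame` and `LocalizationSample` types, the prompt wording, and the `(x1, y1, x2, y2)` box format are hypothetical; the article does not specify the exact format the researchers used.

```python
import random
from dataclasses import dataclass

# Hypothetical box format: (x1, y1, x2, y2) in pixel coordinates.
Box = tuple[float, float, float, float]


@dataclass
class TrackedFrame:
    frame_id: int
    box: Box  # where the tracked object appears in this frame


@dataclass
class LocalizationSample:
    context: list[TrackedFrame]  # frames shown together with their boxes
    query_frame_id: int  # frame in which the model must localize the object
    prompt: str
    target: Box  # ground-truth answer for the query frame


def build_sample(
    track: list[TrackedFrame], name: str, num_context: int, rng: random.Random
) -> LocalizationSample:
    """Turn one object track into an in-context localization example."""
    if len(track) < num_context + 1:
        raise ValueError("track needs at least num_context + 1 frames")
    # Draw distinct frames; the last one drawn becomes the query.
    chosen = rng.sample(track, num_context + 1)
    context, query = chosen[:-1], chosen[-1]
    lines = [
        f"Image {i + 1} shows {name} at box {list(frame.box)}."
        for i, frame in enumerate(context)
    ]
    lines.append(f"Image {num_context + 1}: where is {name}? Answer with a box.")
    return LocalizationSample(context, query.frame_id, "\n".join(lines), query.box)


track = [TrackedFrame(i, (10.0 * i, 5.0, 10.0 * i + 40.0, 60.0)) for i in range(8)]
sample = build_sample(track, "Charlie", num_context=3, rng=random.Random(42))
print(sample.prompt)
print("target:", sample.target)
```

Passing a pseudo-name such as “Charlie” as `name`, rather than the category word, matches the naming convention described earlier in this article.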

With this method, fine-tuned models improved their personalized localization accuracy by an average of 12%. When the training data used pseudo-names, the improvement rose to 21%. These gains point to the effectiveness of the new training strategy.

Practical Applications and Mini Case

Consider a pet owner who wants to use a generative AI application to find their dog, Bowser, at a park. Traditional AI systems might struggle to distinguish Bowser from other dogs. In contrast, a model fine-tuned this way can draw on contextual clues, such as the color of Bowser’s collar or the way he moves, to locate him accurately in real time. The same kind of personalized tracking extends beyond pet monitoring to wildlife monitoring, inventory management, and people-tracking scenarios.

Common Pitfalls and Solutions

One common challenge with VLMs is their tendency to rely on prior knowledge instead of new contextual information, which leads to wrong conclusions about specific instances. For instance, asked to find a particular tiger, a model might simply locate any tiger based on its learned association with the word, ignoring the example images that show which tiger is meant.

To counter this, pseudo-names are used as object identifiers, which pushes the model to analyze the visual context rather than default to memorized labels. Carefully selecting video frames so that their backgrounds differ also keeps the model from tying an object’s identity to one particular setting.
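
The article does not say how frames were chosen to vary the background. One plausible approach, shown here as a swapped-in technique rather than the researchers’ method, is greedy farthest-point selection over a per-frame feature vector. The feature vectors (for example, a hypothetical background embedding for each frame) and the function name `select_diverse_frames` are assumptions.

```python
import math
from typing import Sequence


def select_diverse_frames(features: Sequence[Sequence[float]], k: int) -> list[int]:
    """Greedy farthest-point selection: return indices of k frames whose feature
    vectors are spread out, used here as a proxy for 'diverse backgrounds'."""
    if not 0 < k <= len(features):
        raise ValueError("k must be between 1 and the number of frames")
    selected = [0]  # start from the first frame (an arbitrary, deterministic choice)
    # Distance from every frame to its nearest already-selected frame.
    nearest = [math.dist(f, features[0]) for f in features]
    while len(selected) < k:
        candidates = [i for i in range(len(features)) if i not in selected]
        # Take the candidate farthest from everything chosen so far.
        best = max(candidates, key=lambda i: nearest[i])
        selected.append(best)
        nearest = [min(d, math.dist(f, features[best])) for d, f in zip(nearest, features)]
    return selected


# Hypothetical 2-D background features for five frames of one video.
frame_features = [(0.0, 0.0), (0.1, 0.0), (10.0, 0.0), (10.1, 0.0), (5.0, 8.0)]
print(select_diverse_frames(frame_features, k=3))  # → [0, 3, 4]
```

Frames 1 and 2 are skipped because near-duplicates of them (frames 0 and 3) are already selected. Farthest-point selection is cheap and deterministic; clustering the features and taking one frame per cluster would be a reasonable alternative.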

Tools and Metrics in Practice

VLMs are commonly trained and evaluated with frameworks such as PyTorch and TensorFlow. Localization is usually scored by accuracy: a predicted bounding box counts as correct when it overlaps the ground-truth box sufficiently, typically measured as intersection over union (IoU) at or above a threshold such as 0.5. Companies building AI-driven applications, such as pet monitoring or smart home products, could apply these retraining methods to improve their current models and better meet user expectations.
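
A common way to score localization accuracy is intersection over union (IoU): a predicted box counts as correct if its IoU with the ground-truth box meets a threshold. The sketch below computes IoU for two boxes and the fraction of predictions that clear the threshold. The 0.5 threshold and the `(x1, y1, x2, y2)` box format are widespread conventions assumed here; the article does not state which exact metric the study used.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def localization_accuracy(
    preds: list[Box], targets: list[Box], threshold: float = 0.5
) -> float:
    """Fraction of predicted boxes whose IoU with the target reaches `threshold`."""
    if len(preds) != len(targets):
        raise ValueError("preds and targets must have the same length")
    if not preds:
        return 0.0
    hits = sum(iou(p, t) >= threshold for p, t in zip(preds, targets))
    return hits / len(preds)


preds = [(0.0, 0.0, 10.0, 10.0), (5.0, 0.0, 15.0, 10.0)]
targets = [(0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 10.0)]
print(localization_accuracy(preds, targets))  # → 0.5
```

The second prediction overlaps its target with an IoU of only 1/3, so it misses the 0.5 threshold. Comparing this accuracy before and after fine-tuning, on the same held-out examples, is one way to measure an improvement like those reported for this method.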

Alternatives and Trade-offs in Object Localization Techniques

While the new method shows promise, alternatives exist. Traditional object detectors such as YOLO (You Only Look Once) prioritize speed and efficiency, but they are trained to recognize object categories, so on their own they cannot tell one specific dog from another. Which method to adopt depends on the application and user needs: YOLO may deliver faster results for general object detection, while the MIT-developed technique is better suited to personalized scenarios but may require more computational resources for training.

FAQs

Q: How much improvement can be expected with the new method?

A: Models fine-tuned with this method improved localization accuracy by 12% on average, and by 21% when the training data used pseudo-names for objects.

Q: Can this method be applied to objects beyond pets?

A: Yes, the technique is versatile and can extend to various applications like tracking personal items in homes or identifying specific wildlife in ecological studies.

Q: What are the limitations of current VLMs?

A: Current VLMs struggle to learn from in-context examples: given a few images of a specific object, they often fall back on memorized associations instead of using those images to find that instance. This method aims to address that weakness.

Q: Is the training process resource-intensive?

A: Fine-tuning an existing model requires considerable computational power, though generally far less than training a model from scratch. Whether the gains in contextual understanding justify that cost depends on the application.