Enhancing Named Entity Recognition in Moroccan Arabic: The NGOAI-ANER Methodology

Named Entity Recognition (NER), the task of locating and classifying names of people, places, organizations, and similar entities in text, is a core component of Natural Language Processing (NLP), and it is especially demanding in multilingual and dialectal settings. The NGOAI-ANER methodology targets NER for the Moroccan dialect, with the aim of improving precision and efficiency when processing Arabic texts. This article walks through the techniques that make up the NGOAI-ANER approach and their implications for NLP.

Overview of NGOAI-ANER

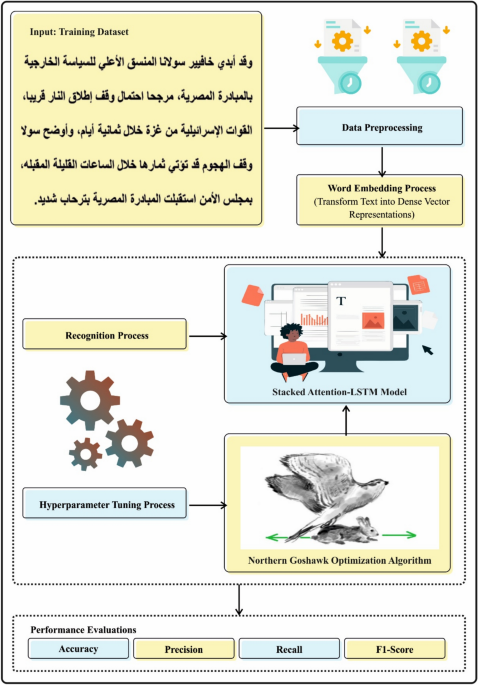

The NGOAI-ANER methodology combines three components: word embeddings that provide rich semantic representations, a SALSTM-based deep learning classifier that labels the named entities, and hyperparameter tuning with the Northern Goshawk Optimization (NGO) algorithm. Together, these components are designed to improve NER on Moroccan Arabic, a dialect with considerable morphological complexity.

Workflow of the NGOAI-ANER Method

The workflow of the NGOAI-ANER method is visualized in Figure 1, highlighting the sequential processes involved, from data preprocessing through to model evaluation.

Word Embedding Techniques

The first step in the NGOAI-ANER methodology is word embedding, which maps each word to a dense vector whose position encodes semantic similarity, the features the NER classifier relies on. Traditional embeddings such as Word2Vec (W2V) have been used successfully in many settings, but because they learn one vector per surface form, they struggle to capture the intricate morphology of languages like Arabic, where a single stem appears with many prefixes, suffixes, and clitics.
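
To make this limitation concrete, here is a minimal Python sketch of the (center, context) pairs that Word2Vec's skip-gram objective learns from, together with a word-level vocabulary. It is a simplification under stated assumptions (fixed window, no subsampling or negative sampling) and not AraVec's training code; the names `skipgram_pairs` and `build_vocab` are hypothetical.

```python
def skipgram_pairs(tokens, window=2):
    """(center, context) training pairs for a simplified skip-gram objective."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


def build_vocab(tokens):
    """Word-level vocabulary: every distinct surface form gets its own index."""
    return {w: idx for idx, w in enumerate(dict.fromkeys(tokens))}


vocab = build_vocab(["كتاب", "الكتاب", "كتاب"])
print(vocab)  # two unrelated entries for "book" and "the book"
```

Because `كتاب` ("book") and `الكتاب` ("the book") are separate keys, a word-level model learns their vectors independently and has no vector at all for a form it never saw in training.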

Advanced Embeddings: AraVec and FastText

For Arabic, the method incorporates AraVec, a collection of pre-trained Word2Vec models for Arabic, and so inherits the strengths of W2V. However, because Arabic is morphologically rich, word-level embeddings alone are not enough. FastText supplements them by building word representations from character n-grams, which lets the model handle rare and unseen words and share information across different spellings of the same word.
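
The character n-gram idea can be sketched as follows. The sketch assumes FastText's default n-gram range (3 to 6 characters) and its `<`/`>` word-boundary markers; the FNV-1a hashing into buckets, the random (untrained) bucket vectors, and the names `char_ngrams` and `SubwordEmbedder` are illustrative assumptions rather than FastText's actual implementation.

```python
import numpy as np


def char_ngrams(word, minn=3, maxn=6):
    """Character n-grams of `word` wrapped in '<' and '>' boundary markers."""
    token = f"<{word}>"
    grams = []
    for n in range(minn, maxn + 1):
        for i in range(len(token) - n + 1):
            gram = token[i:i + n]
            if gram != token:  # the whole token is appended once below
                grams.append(gram)
    grams.append(token)
    return grams


def fnv1a(s):
    """32-bit FNV-1a hash of the UTF-8 bytes of `s` (deterministic across runs)."""
    h = 2166136261
    for b in s.encode("utf-8"):
        h ^= b
        h = (h * 16777619) & 0xFFFFFFFF
    return h


class SubwordEmbedder:
    """Composes a word vector by averaging hashed n-gram vectors (untrained, for illustration)."""

    def __init__(self, dim=8, buckets=1000, seed=0):
        rng = np.random.default_rng(seed)
        self.table = rng.normal(0.0, 0.1, size=(buckets, dim))
        self.buckets = buckets

    def vector(self, word):
        ids = [fnv1a(g) % self.buckets for g in char_ngrams(word)]
        return self.table[ids].mean(axis=0)


emb = SubwordEmbedder()
print(emb.vector("والكتاب").shape)  # an unseen form still gets a vector
```

Because `كتاب` and `الكتاب` share n-grams such as `كتاب>`, their composed vectors share components, and even an unseen form like `والكتاب` ("and the book") still receives a representation.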

Deep Learning with SALSTM

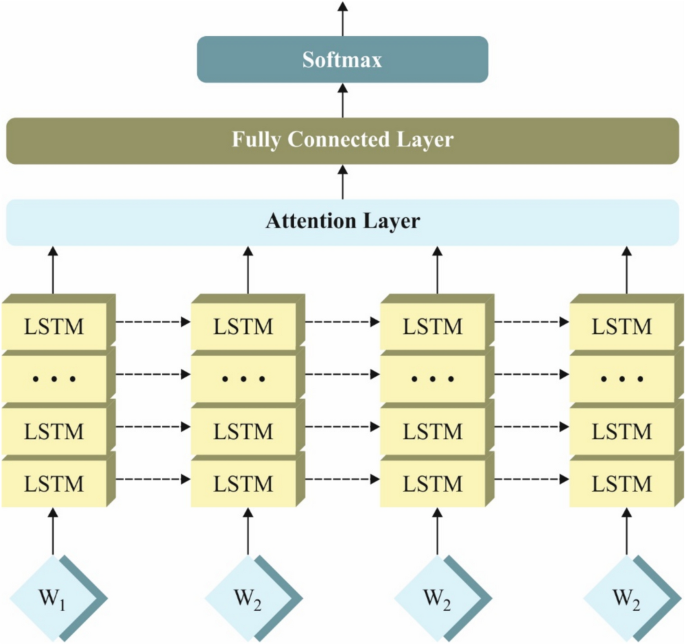

Once the word embeddings are in place, the next phase feeds them into a Stacked Attention Long Short-Term Memory (SALSTM) model. Deep learning architectures of this kind are well suited to classifying words into entity categories, which is the core of the NER task.

Benefits of Deep Stacking in LSTM

Stacking LSTMs means chaining several LSTM layers, each taking the output sequence of the layer below as its input. This depth improves the model's grasp of complex sequential patterns and lets it learn richer representations, which is crucial for interpreting named entities that can be ambiguous in Moroccan Arabic texts.
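
A minimal NumPy sketch of stacking, assuming the standard LSTM cell: each layer runs over the whole sequence, and its hidden-state sequence becomes the next layer's input. The weights are random and untrained and the class names are hypothetical, so this illustrates the data flow only, not the trained SALSTM from the paper.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMLayer:
    """One LSTM layer with forget, input, and output gates (random, untrained weights)."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.hidden_dim = hidden_dim
        # Rows hold the forget, input, output, and candidate blocks acting on [h_{t-1}; x_t].
        self.W = rng.normal(0.0, 0.1, size=(4 * hidden_dim, hidden_dim + input_dim))
        self.b = np.zeros(4 * hidden_dim)

    def forward(self, xs):
        """xs: (seq_len, input_dim) -> hidden states (seq_len, hidden_dim)."""
        H = self.hidden_dim
        h, c = np.zeros(H), np.zeros(H)
        outputs = []
        for x in xs:
            z = self.W @ np.concatenate([h, x]) + self.b
            f = sigmoid(z[0:H])            # forget gate
            i = sigmoid(z[H:2 * H])        # input gate
            o = sigmoid(z[2 * H:3 * H])    # output gate
            g = np.tanh(z[3 * H:4 * H])    # candidate cell state
            c = f * c + i * g              # keep part of the old memory, add new content
            h = o * np.tanh(c)             # hidden state exposed to the next layer
            outputs.append(h)
        return np.stack(outputs)


class StackedLSTM:
    def __init__(self, input_dim, hidden_dim, num_layers, seed=0):
        rng = np.random.default_rng(seed)
        dims = [input_dim] + [hidden_dim] * num_layers
        self.layers = [LSTMLayer(dims[k], dims[k + 1], rng) for k in range(num_layers)]

    def forward(self, xs):
        for layer in self.layers:
            xs = layer.forward(xs)  # layer k's output sequence feeds layer k + 1
        return xs
```

For example, `StackedLSTM(8, 16, 2).forward(x)` maps a sequence of five 8-dimensional embeddings `x` to five 16-dimensional hidden states taken from the top layer.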

Mathematical Foundations of SALSTM

The mathematical backbone of the SALSTM model is a series of equations that compute the gate activations, such as the forget gate and input gate, together with the cell and hidden states. These equations regulate the flow of information through each LSTM cell, so that relevant context is preserved across the sequence.
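
The specific equations are not reproduced in this article. For reference, the widely used LSTM cell formulation is given below, where σ is the logistic sigmoid, ⊙ is element-wise multiplication, and [h_{t-1}, x_t] denotes concatenation; the last line expresses stacking, with layer ℓ taking the hidden state of layer ℓ-1 as its input. The SALSTM paper's notation may differ.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t) \\
x^{(\ell)}_t &= h^{(\ell-1)}_t, \quad \ell = 2, \dots, L
\end{aligned}
```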

Attention Mechanism in Neural Networks

An Attention Mechanism (AM) complements the SALSTM architecture by directing the model to weight the most informative positions in the input sequence more heavily. In effect, it lets the model concentrate its capacity on the parts of the input that matter most for each decision instead of treating every token equally, which avoids diluting the relevant context.

The Computational Process of the Attention Mechanism

The AM works in two stages: it first computes an attention distribution over the inputs, then takes a weighted average of the inputs according to that distribution. This two-stage computation improves the model's ability to recognize named entities.
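
The two stages can be sketched in NumPy as below. As an assumption, the sketch uses scaled dot-product self-attention over the LSTM hidden states, with every position attending to every position and no learned projections; the article does not specify the scoring function, so this stands in for whatever scoring SALSTM actually uses.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(H):
    """H: (seq_len, d) hidden states -> (attention weights, context vectors)."""
    d = H.shape[1]
    scores = H @ H.T / np.sqrt(d)     # relevance of position j to position t
    alpha = softmax(scores, axis=1)   # stage 1: attention distribution, each row sums to 1
    contexts = alpha @ H              # stage 2: weighted average of the hidden states
    return alpha, contexts
```

Each row of `alpha` tells one token how much to draw from every other token, so a token-level tagger receives one context vector per position.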

Hyperparameter Tuning with NGO

Effective hyperparameter tuning is a critical part of optimizing the NGOAI-ANER methodology. For this, the method uses Northern Goshawk Optimization (NGO), a nature-inspired metaheuristic that automates the search for good hyperparameter values.

Advantages of Northern Goshawk Optimization

The NGO approach mimics the hunting behavior of the northern goshawk and uses it to navigate the search space. Alternating between exploration and exploitation gives a balanced search over candidate solutions, and the method is presented as requiring less computational effort than traditional algorithms.

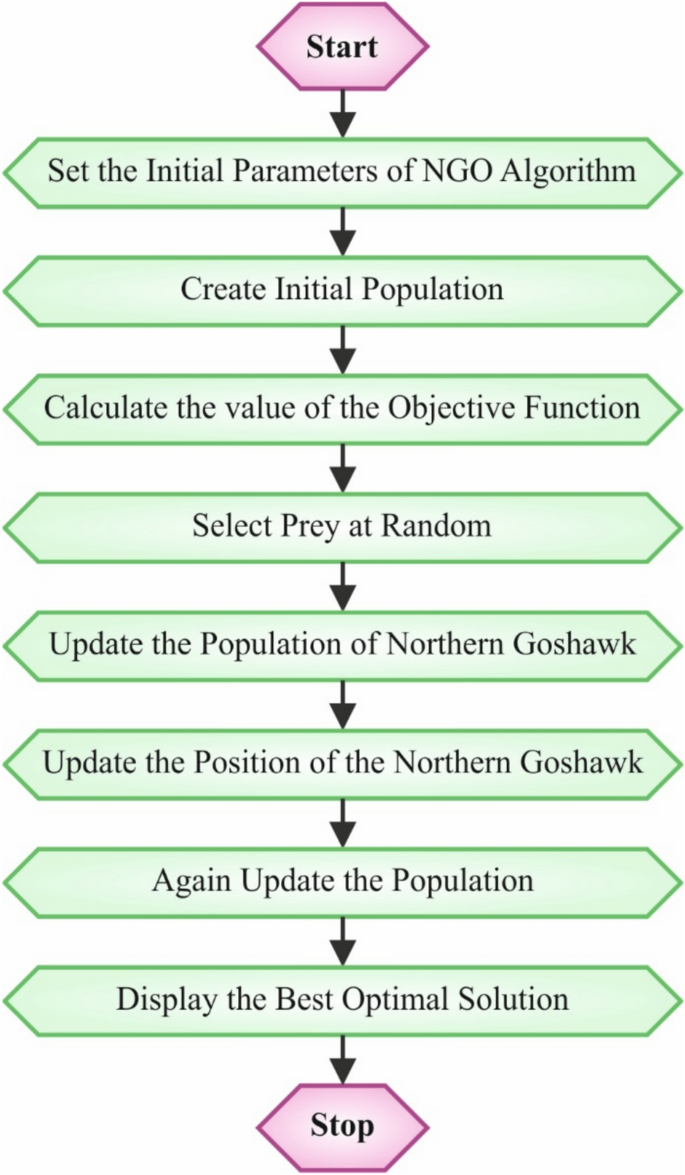

Workflow of NGO Method

The NGO workflow proceeds through three steps: population initialization, evaluation of the objective function for each candidate, and iterative improvement of the solutions.
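
In common NGO notation, the initialization and evaluation steps can be written as follows, where N is the population size, m the number of hyperparameters being tuned, lb_j and ub_j the bounds of the j-th hyperparameter, r a uniform random number in [0, 1], and F the objective function. These symbols follow the usual metaheuristic convention and are assumptions about how the paper writes them.

```latex
X = \begin{bmatrix} X_1 \\ \vdots \\ X_N \end{bmatrix}
  = \begin{bmatrix} x_{1,1} & \cdots & x_{1,m} \\ \vdots & \ddots & \vdots \\ x_{N,1} & \cdots & x_{N,m} \end{bmatrix},
\qquad x_{i,j} = lb_j + r\,(ub_j - lb_j),
\qquad F = \begin{bmatrix} F(X_1) \\ \vdots \\ F(X_N) \end{bmatrix}
```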

Stages of the NGO Approach

The NGO algorithm runs in two stages: identification of prey (exploration) and chase and escape (exploitation). Both stages are essential for refining the search for effective solutions.

Stage 1: Identification of Prey (Exploration)

This exploratory phase mimics a northern goshawk selecting a prey at random and attacking it. Because the target can be any member of the population, candidates can make large moves across the search space, which broadens the search and helps identify regions with better objective values.
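
The exploration update of the original NGO algorithm is commonly written as below. Here P_i is the prey chosen for goshawk i, with objective value F_{P_i}; r is a uniform random number in [0, 1]; and I is randomly 1 or 2. A move is kept only if it improves the objective. The NGOAI-ANER paper may state it with different notation.

```latex
P_i = X_k, \qquad k \in \{1, \dots, N\},\ k \neq i \text{ chosen at random}

x^{\text{new},P1}_{i,j} =
\begin{cases}
x_{i,j} + r\,(p_{i,j} - I\,x_{i,j}), & F_{P_i} < F_i, \\
x_{i,j} + r\,(x_{i,j} - p_{i,j}), & \text{otherwise,}
\end{cases}

X_i =
\begin{cases}
X^{\text{new},P1}_i, & F^{\text{new},P1}_i < F_i, \\
X_i, & \text{otherwise.}
\end{cases}
```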

Stage 2: Chase and Escape (Exploitation)

In the chase phase, the algorithm concentrates on the more promising solutions identified during exploration. Mirroring the goshawk's high-speed pursuit of escaping prey, each candidate makes small moves within a radius that shrinks as the iterations progress, which refines solutions locally and improves the accuracy of the results.
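
The chase update in the original NGO formulation is commonly written as follows, where t is the current iteration and T the maximum number of iterations. The radius R shrinks linearly to zero, which is what narrows the search; the move is then accepted only if it improves the objective, using the same keep-if-better rule as in exploration.

```latex
x^{\text{new},P2}_{i,j} = x_{i,j} + R\,(2r - 1)\,x_{i,j},
\qquad R = 0.02\left(1 - \frac{t}{T}\right)

X_i =
\begin{cases}
X^{\text{new},P2}_i, & F^{\text{new},P2}_i < F_i, \\
X_i, & \text{otherwise.}
\end{cases}
```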

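Throughout the iterations, a population member is updated only when its new position improves the objective value. When the iterations end, the best member found is returned as the solution, which in NGOAI-ANER is the hyperparameter configuration used to enhance the NER system.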
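
Putting the stages together, here is a compact NumPy sketch of the NGO loop minimizing a generic objective. It follows the standard NGO update rules; clipping candidates to the bounds and drawing r per dimension are implementation assumptions, and `ngo_minimize` is a hypothetical name. In NGOAI-ANER the objective would be the NER model's validation error for a given hyperparameter vector.

```python
import numpy as np


def ngo_minimize(f, lb, ub, pop_size=20, iters=100, seed=0):
    """Minimal Northern Goshawk Optimization sketch; returns (best_x, best_f)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    X = lb + rng.random((pop_size, dim)) * (ub - lb)  # population initialization
    F = np.array([f(x) for x in X])                    # objective evaluation
    for t in range(1, iters + 1):
        R = 0.02 * (1 - t / iters)                      # chase radius shrinks to zero
        for i in range(pop_size):
            # Stage 1 (exploration): attack a randomly chosen prey k != i.
            k = int(rng.integers(pop_size - 1))
            if k >= i:
                k += 1
            r = rng.random(dim)
            I = rng.integers(1, 3)                      # randomly 1 or 2
            if F[k] < F[i]:
                cand = X[i] + r * (X[k] - I * X[i])
            else:
                cand = X[i] + r * (X[i] - X[k])
            cand = np.clip(cand, lb, ub)
            fc = f(cand)
            if fc < F[i]:                               # keep only improvements
                X[i], F[i] = cand, fc
            # Stage 2 (exploitation): small move around the current position.
            r = rng.random(dim)
            cand = np.clip(X[i] + R * (2 * r - 1) * X[i], lb, ub)
            fc = f(cand)
            if fc < F[i]:
                X[i], F[i] = cand, fc
    best = int(np.argmin(F))
    return X[best].copy(), F[best]
```

For example, `ngo_minimize(lambda x: float(np.sum(x ** 2)), [-5.0] * 5, [5.0] * 5)` searches a five-dimensional box for the minimum of a simple quadratic. Because each member is replaced only when its objective improves, the best fitness never gets worse from one iteration to the next.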

Objective Function for Performance Measurement

The NGO search is driven by a carefully defined fitness function that scores each candidate solution. Here the fitness is the classification error rate, which NGO minimizes as it refines the model's hyperparameters.
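
A sketch of such a fitness function is shown below. It assumes a token-level error rate (the fraction of mis-tagged tokens) and a hypothetical mapping from an NGO position in [0, 1]^3 to three SALSTM hyperparameters; the ranges, the `decode` helper, and the `train_and_predict` callable are all illustrative assumptions, not the paper's configuration.

```python
import numpy as np


def error_rate(y_true, y_pred):
    """Fraction of tokens whose predicted tag differs from the gold tag."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))


def decode(position):
    """Map an NGO position in [0, 1]^3 to hypothetical SALSTM hyperparameters."""
    return {
        "learning_rate": 10 ** (-4 + 2 * position[0]),             # 1e-4 .. 1e-2, log scale
        "hidden_units": int(round(32 + position[1] * (256 - 32))),  # 32 .. 256
        "dropout": 0.5 * position[2],                               # 0.0 .. 0.5
    }


def fitness(position, train_and_predict, y_val):
    """NGO objective: validation error of a tagger trained with the decoded hyperparameters.

    `train_and_predict` is a hypothetical callable that trains the model with the given
    keyword hyperparameters and returns predicted tags for the validation tokens.
    """
    y_pred = train_and_predict(**decode(position))
    return error_rate(y_val, y_pred)
```

Passing `lambda x: fitness(x, train_and_predict, y_val)` as the objective of an NGO search with bounds `[0, 0, 0]` and `[1, 1, 1]` would then tune all three hyperparameters at once.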

The NGOAI-ANER methodology marks a significant step forward for Arabic NER. By integrating advanced word embeddings, a stacked LSTM with attention, and a nature-inspired optimization technique, it aims to raise both accuracy and efficiency in processing Moroccan Arabic texts.