Unpacking the Transformation of Visual Recognition: The Journey from Human Cognition to Deep Neural Networks

Introduction: The Crossroads of Intelligence

In artificial intelligence (AI) and machine learning, the intersection of human cognitive processes and computational models is one of the most intriguing research frontiers. Deep neural networks (DNNs) have driven remarkable advances in visual recognition tasks that were once the sole purview of human perception. This article traces the pivotal studies that laid the groundwork for understanding how both humans and machines recognize objects.

The Dawn of Deep Learning: A Significant Breakthrough

In 2012, a seminal paper by Krizhevsky, Sutskever, and Hinton catapulted deep learning into the mainstream. Titled "ImageNet classification with deep convolutional neural networks" and published in Advances in Neural Information Processing Systems (NIPS), it demonstrated that deep convolutional neural networks (CNNs) could far surpass previous models on image classification. Training on a large labeled dataset allowed the network to learn hierarchical representations of visual data, leading to significant improvements in recognition accuracy (Krizhevsky et al., 2012).

Deep Residual Learning: Overcoming Limitations

While the initial breakthroughs were astounding, they also exposed a limit on depth: simply stacking more layers made networks harder to optimize, and accuracy could degrade. He et al. (2016), in their paper "Deep residual learning for image recognition," introduced residual networks (ResNets), in which layers learn residual functions with reference to their inputs instead of learning unreferenced functions. If a block's input is x and its stacked layers compute F(x), the block outputs F(x) + x through a shortcut connection. This made much deeper networks trainable and yielded substantial gains in accuracy. The architecture not only propelled image recognition but also set a new standard for future developments in deep learning.
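The residual idea can be illustrated with a minimal sketch. It is not the architecture from He et al. (2016), which uses convolutional layers and batch normalization; here a block is assumed to be two small fully connected layers written in plain Python, and the names `linear`, `relu`, and `residual_block` are hypothetical helpers chosen for illustration.

```python
def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(weights, bias, v):
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def residual_block(x, w1, b1, w2, b2):
    """Compute relu(F(x) + x), where F is a two-layer transform.

    Assumption: input and output have the same size, so the
    shortcut can add x directly without a projection.
    """
    hidden = relu(linear(w1, b1, x))
    f_x = linear(w2, b2, hidden)                      # residual F(x)
    return relu([f + xi for f, xi in zip(f_x, x)])    # shortcut adds x


# With all weights at zero, F(x) = 0 and the block passes x through
# (up to the final ReLU), which is what makes extra depth easy to "skip".
x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3
y = residual_block(x, zero_w, zero_b, zero_w, zero_b)
```

The zero-weight case shows the design choice: a block only has to learn a correction to its input, so an unneeded block can fall back to something close to the identity.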

Bridging Speech and Vision: Deep Learning Across Modalities

Hinton et al. (2012) extended DNNs beyond image recognition in "Deep neural networks for acoustic modeling in speech recognition." They applied deep learning to acoustic modeling, showing that similar principles could serve a different sensory modality. The success of DNN architectures across modalities pointed to general-purpose learning principles and fueled interest in how far neural networks could mimic human cognitive capabilities.

End-to-End Processing: The Deep Speech Model

In 2016, Amodei et al. introduced "Deep Speech 2," an end-to-end speech recognition system for English and Mandarin that was designed to cope with noisy environments. The model showed how end-to-end training could replace pipelines of separately engineered processing stages with a single network (Amodei et al., 2016). The result illustrates a broader shift, relevant to speech and visual recognition alike, in how machines handle complex tasks traditionally reserved for humans.

Games as a Testing Ground: Reinforcement Learning

Games have also served as a fertile testing ground for deep learning. Silver et al. (2016) presented "Mastering the game of Go with deep neural networks and tree search," which combined policy and value networks, trained with supervised and reinforcement learning, with Monte Carlo tree search to defeat a human professional Go player. The work showed that DNNs can not only learn from data but also plan and adapt in complex environments.

Bridging Human and Machine Perception

How closely deep learning models mirror human processing remains an open question. Vinyals et al. (2019), in "Grandmaster level in StarCraft II using multi-agent reinforcement learning," trained agents that reached Grandmaster level in a real-time strategy game that demands long-term planning under incomplete information. Results like this raise the question of what it means for AI to understand or perceive its environment: are DNNs merely recognizing patterns, or are they building a form of understanding similar to human cognition?

Analyzing Neural Representations

Khaligh-Razavi and Kriegeskorte (2014) compared the representations learned by computational models with those measured in the brain and concluded that deep supervised models, but not unsupervised ones, may explain the representation in inferior temporal (IT) cortex. This comparative approach has encouraged researchers to question not just the accuracy of these models but also their interpretability and alignment with human understanding.
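Comparisons of this kind are commonly made with representational similarity analysis (RSA): each system is summarized by a representational dissimilarity matrix (RDM) over the same stimuli, and the two RDMs are then correlated. The sketch below is a minimal plain-Python version under stated assumptions: dissimilarity is 1 minus Pearson correlation, RDMs are compared with Spearman rank correlation (one common choice, not necessarily the statistic used in the paper), no response pattern is constant, and all data and function names are made up for illustration.

```python
def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def rdm_upper(patterns):
    """Upper triangle of the RDM: 1 - correlation for each stimulus pair."""
    n = len(patterns)
    return [1.0 - pearson(patterns[i], patterns[j])
            for i in range(n) for j in range(i + 1, n)]


def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while (end + 1 < len(order)
               and values[order[end + 1]] == values[order[start]]):
            end += 1
        average_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            result[order[k]] = average_rank
        start = end + 1
    return result


def rsa_score(model_patterns, brain_patterns):
    """Spearman correlation between the two systems' RDMs."""
    return pearson(ranks(rdm_upper(model_patterns)),
                   ranks(rdm_upper(brain_patterns)))


# Toy data: one response pattern per stimulus (rows), same stimulus order.
model = [[1.0, 2.0, 3.0, 4.0],
         [1.0, 3.0, 2.0, 5.0],
         [4.0, 1.0, 3.0, 2.0],
         [2.0, 5.0, 1.0, 1.0]]
# A rescaled, shifted copy keeps the same representational geometry.
brain = [[2.0 * x + 1.0 for x in row] for row in model]
score = rsa_score(model, brain)
```

Because the RDM abstracts away from individual units, the two systems do not need the same number of features: a network layer with thousands of units can be compared with a few hundred recorded voxels or neurons, as long as the stimuli match.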

Towards Understanding Generalization

Generalization, a core principle of intelligence, presents unique challenges. Geirhos et al. (2018) investigated how DNNs and humans differ in their generalization capabilities, reporting that DNNs often rely heavily on texture whereas humans tend to emphasize shape in recognition tasks. These differences in generalization have significant implications for building AI models that better mimic human-like reasoning and adaptability.
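One way to quantify the shape-versus-texture tendency is with cue-conflict images, where the shape of one category is rendered with the texture of another. The sketch below assumes a simple trial format of `(predicted, shape_label, texture_label)` tuples and a hypothetical `shape_bias` helper; the labels are invented, and the measure (shape decisions as a fraction of all decisions matching either cue) is offered as an illustration, not as the exact protocol of the cited study.

```python
def shape_bias(trials):
    """Fraction of shape decisions among decisions that match either cue.

    trials: iterable of (predicted, shape_label, texture_label) tuples,
    one per cue-conflict image. Predictions matching neither cue are
    ignored, as are images whose shape and texture labels agree.
    """
    shape_hits = 0
    texture_hits = 0
    for predicted, shape_label, texture_label in trials:
        if shape_label == texture_label:
            continue  # not a cue-conflict image
        if predicted == shape_label:
            shape_hits += 1
        elif predicted == texture_label:
            texture_hits += 1
    decided = shape_hits + texture_hits
    return shape_hits / decided if decided else float("nan")


# Hypothetical predictions on four cue-conflict images.
trials = [
    ("cat", "cat", "elephant"),       # followed shape
    ("elephant", "cat", "elephant"),  # followed texture
    ("elephant", "car", "elephant"),  # followed texture
    ("dog", "car", "clock"),          # matched neither cue, ignored
]
bias = shape_bias(trials)
```

A value near 1 indicates mostly shape-based decisions and a value near 0 mostly texture-based ones, so the same function can be applied to human responses and model predictions for a direct comparison.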

Computational Modeling of Visual Systems

Several studies compare the functioning of biological visual systems with that of DNNs. Yamins et al. (2014) showed that hierarchical models optimized for object recognition performance could predict neural responses in higher visual cortex, using recordings from macaque monkeys, which offers insight into the architecture of neural representations. Such findings inspire continued research bridging neuroscience and machine learning.

Insights From Object Representation Studies

Rajalingham and colleagues (2018) compared object recognition behavior in humans, monkeys, and state-of-the-art DNNs at large scale. The networks matched primate behavior well at the level of whole object categories but diverged from it on an image-by-image basis (Rajalingham et al., 2018). By pinpointing such similarities and differences in object perception, researchers can identify where AI still lags behind human cognition.

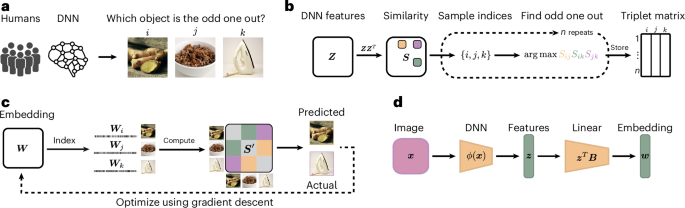

The Future: Aligning Human and Neural Representations

Recent work, such as the review by Lindsay (2021) and the study by Muttenthaler et al. (2023), has taken steps toward understanding the alignment of human and machine representations, suggesting that DNN training could benefit from human similarity judgments to refine visual representations. Blending insights from human cognition and artificial intelligence in this way could reshape how we approach machine learning.
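Human similarity judgments are often collected as triplet odd-one-out choices: shown three items, a participant picks the one that fits least. The sketch below shows how a model's representation could be scored against such choices, assuming dot-product similarity between embeddings; the embeddings, item names, and functions `model_odd_one_out` and `odd_one_out_accuracy` are all hypothetical.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def model_odd_one_out(embeddings, triplet):
    """Pick the item left out of the most similar pair in the triplet."""
    first, second, third = triplet
    # Map each candidate odd item to the similarity of the other two.
    remaining_pair_similarity = {
        third: dot(embeddings[first], embeddings[second]),
        second: dot(embeddings[first], embeddings[third]),
        first: dot(embeddings[second], embeddings[third]),
    }
    return max(remaining_pair_similarity, key=remaining_pair_similarity.get)


def odd_one_out_accuracy(embeddings, judgments):
    """Share of triplets where the model's choice matches the human one."""
    hits = sum(model_odd_one_out(embeddings, triplet) == human_choice
               for triplet, human_choice in judgments)
    return hits / len(judgments)


# Made-up two-dimensional embeddings and human choices.
embeddings = {
    "dog": [1.0, 0.0],
    "wolf": [0.9, 0.1],
    "car": [0.0, 1.0],
    "truck": [0.1, 0.9],
}
judgments = [
    (("dog", "wolf", "car"), "car"),
    (("car", "truck", "dog"), "dog"),
    (("dog", "car", "truck"), "car"),  # model picks "dog" here
]
accuracy = odd_one_out_accuracy(embeddings, judgments)
```

An agreement score like this can serve as an evaluation measure, and in principle as a training signal, for bringing a network's representation closer to human judgments.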

Conclusion: A Collaborative Path Forward

The path forward across human cognition and machine learning is unlikely to be a simple one. Each study yields insights that not only enhance our understanding of AI but also reflect the profound complexity of human perception. By drawing on what we know about the brain while improving neural network architectures, we can aspire to create machines that don’t just mimic human tasks but perhaps one day understand and learn in ways akin to us. Representation, generalization, and the alignment of cognitive systems all call for further inquiry, making this an exciting field ripe for discovery.