Understanding AI, Machine Learning, and Deep Learning: A Guide for Lab Managers

Artificial intelligence (AI), machine learning (ML), and deep learning (DL) are often used interchangeably, yet they describe very different levels of complexity, capability, and human involvement. For lab managers tasked with integrating these technologies into laboratory workflows, understanding these distinctions is crucial. This article explains how the three technologies relate to one another, how each works, and where each is already being applied in lab settings.

What is Artificial Intelligence?

AI is the umbrella term for a wide array of technologies designed to mimic aspects of human intelligence. Its scope ranges from basic algorithms that perform predefined actions to complex systems such as ChatGPT that generate novel outputs. Knowing where a product sits within this range helps lab managers evaluate vendor claims, especially when products are marketed as "AI-powered."

AI can include anything from simple rule-based systems that use IF/THEN logic to automate repetitive tasks to more sophisticated tools that adapt incrementally over time. When requesting information from vendors, ask about the underlying mechanics of their AI: does it simply follow deterministic rules, or does it learn and adapt?
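To make the "deterministic rules" case concrete, here is a minimal sketch of a purely rule-based system, assuming a hypothetical instrument-booking tool. The field names and thresholds are illustrative assumptions, not taken from any vendor product. Nothing in it learns: the same request always gets the same answer, and changing its behavior means a person editing the rules.

```python
from dataclasses import dataclass

@dataclass
class BookingRequest:
    # Hypothetical fields for an equipment-scheduling request.
    instrument: str
    user_trained: bool
    hours_requested: float
    hours_since_calibration: float

# Hypothetical thresholds, chosen only for illustration.
MAX_BOOKING_HOURS = 4.0
CALIBRATION_INTERVAL_HOURS = 500.0

def review_booking(req: BookingRequest) -> str:
    """Apply fixed IF/THEN rules in order; the first rule that matches decides."""
    if not req.user_trained:
        return "reject: user not trained on " + req.instrument
    if req.hours_since_calibration >= CALIBRATION_INTERVAL_HOURS:
        return "hold: calibration due"
    if req.hours_requested > MAX_BOOKING_HOURS:
        return "flag: exceeds maximum booking length"
    return "approve"
```

For example, `review_booking(BookingRequest("HPLC-1", True, 2.0, 120.0))` returns `"approve"`. A vendor tool built this way can be useful, but it is "AI" only in the broadest sense of the term.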

Lab Use Cases for AI

Lab managers can find tangible uses for AI, such as:

- CellProfiler: Software that distinguishes cellular from non-cellular objects using rule-driven image processing, enabling accurate cell counting.

- Chemical Storage Safety: Some chemical management software uses AI to automatically flag potential safety hazards, supplementing human inspections.
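The rule-driven idea behind cell counting can be sketched in a few lines: IF a pixel is brighter than a threshold, THEN it is foreground; IF a connected group of foreground pixels is large enough, THEN it counts as a cell. This is a toy illustration in pure Python, not CellProfiler's API or its actual algorithms, which offer far more options. The threshold and minimum size are hypothetical parameters that a person must choose.

```python
from collections import deque

def count_objects(image, threshold, min_size=1):
    """Count bright, 4-connected regions in a 2D grayscale image (list of lists)."""
    rows, cols = len(image), len(image[0])
    # Rule 1: a pixel is foreground IF its intensity exceeds the threshold.
    mask = [[image[r][c] > threshold for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill the region starting here to measure its size.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Rule 2: a region counts as a cell IF it has at least min_size pixels.
            if size >= min_size:
                count += 1
    return count

# Toy image: two blobs plus a single-pixel speck.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 0, 0, 0],
    [0, 9, 9, 0, 0, 8],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 7, 7, 7, 0],
    [0, 0, 7, 7, 0, 0],
]
```

With `threshold=5`, setting `min_size=2` discards the speck and counts two objects. Every decision traces back to a rule someone wrote down, which is what makes this kind of tool predictable and easy to audit.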

Delving into Machine Learning

ML is a narrower subset of AI that gives systems the ability to learn from data without being explicitly programmed. The MIT Sloan School of Management summarizes it this way: ML is a subfield of AI in which systems learn patterns from data, typically labeled data, and then apply those patterns to new inputs. This adaptability distinguishes ML tools from rigid, rule-based AI systems.
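The contrast with rule-based systems shows up clearly in a minimal sketch of learning from labeled data. The example below uses a nearest-centroid classifier, chosen here only because it is short; it is not a method the definition above prescribes. The features (object area and circularity), labels, and values are all hypothetical. No one writes a rule such as "area above 50 means cell"; the decision boundary comes entirely from the labeled examples.

```python
from math import dist
from statistics import fmean

def train_nearest_centroid(examples):
    """Learn one average feature vector (centroid) per label from (features, label) pairs."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }

def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical labeled training data: (area, circularity) -> label.
# Features on very different scales would normally be standardized first;
# that step is skipped here for brevity.
labeled = [
    ((120.0, 0.90), "cell"),
    ((135.0, 0.85), "cell"),
    ((110.0, 0.88), "cell"),
    ((15.0, 0.40), "debris"),
    ((22.0, 0.35), "debris"),
    ((18.0, 0.50), "debris"),
]
centroids = train_nearest_centroid(labeled)
```

Here `predict(centroids, (100.0, 0.8))` returns `"cell"`. Adding more labeled examples changes the centroids, and therefore the model's behavior, without anyone editing a rule.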

Key ingredients in building an effective ML model include:

- Feature Extraction: This process simplifies raw data into meaningful variables. For instance, an image could be reduced to metrics such as edge density and color histograms.

- Data Labeling: Essential for "supervised learning," data labeling means annotating examples with the correct answer. Well-labeled datasets teach models which patterns correspond to which outcomes, such as identifying spam emails or correctly classifying images.
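Feature extraction can be sketched as a function that turns a grid of pixels into a short, fixed-length list of numbers a traditional ML model can consume. The sketch below computes the two metrics mentioned above, using a grayscale intensity histogram as a simplified stand-in for a color histogram. The function names, edge threshold, and bin count are hypothetical choices; deciding which features matter is exactly the human expertise traditional ML depends on.

```python
def edge_density(image, edge_threshold):
    """Fraction of pixels whose jump to the right or lower neighbor exceeds edge_threshold."""
    rows, cols = len(image), len(image[0])
    edges = 0
    for r in range(rows):
        for c in range(cols):
            dx = abs(image[r][c + 1] - image[r][c]) if c + 1 < cols else 0
            dy = abs(image[r + 1][c] - image[r][c]) if r + 1 < rows else 0
            if max(dx, dy) > edge_threshold:
                edges += 1
    return edges / (rows * cols)

def intensity_histogram(image, bins, max_value):
    """Normalized histogram of pixel intensities in the range [0, max_value]."""
    counts = [0] * bins
    for row in image:
        for value in row:
            # Clamp so that value == max_value falls into the last bin.
            index = min(int(value / max_value * bins), bins - 1)
            counts[index] += 1
    total = sum(counts)
    return [n / total for n in counts]

def extract_features(image):
    """Raw pixels -> [edge density, histogram bin 1, ..., histogram bin 4]."""
    return [edge_density(image, edge_threshold=50)] + intensity_histogram(
        image, bins=4, max_value=255
    )
```

For a tiny half-black, half-white image `[[0, 255], [0, 255]]`, `extract_features` returns `[0.5, 0.5, 0.0, 0.0, 0.5]`: five numbers in place of the raw pixels, whatever the image size.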

Because people supply the labels and choose the features, ML tools often strike a good balance between performance and resource demands: they typically require less data and computing power than DL systems, making them more feasible for labs with structured datasets.

Lab Use Cases for ML

The applications of ML in the lab are numerous:

- PeakBot: An ML-based chromatographic peak-picking program that can be trained on a lab's own data to improve accuracy.

- Inventory Tracking: ML-enhanced lab management software can forecast supply needs and issue timely reorder alerts based on usage history and supplier lead times.

- Experiment Design Assistance: Some ML tools recommend testing parameters to help optimize experimental setups.
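The forecasting behind reorder alerts can be illustrated with the simplest possible learned model: a least-squares trend line fitted to daily usage, projected over the supplier lead time. This is a sketch under stated assumptions (usage follows a roughly linear trend; the safety stock is a hypothetical buffer the lab chooses), not how any particular lab management product works. Commercial tools may use richer models such as seasonal forecasts.

```python
from statistics import fmean

def fit_trend(usage):
    """Least-squares line through daily usage (needs at least 2 days); returns (slope, intercept)."""
    days = range(len(usage))
    mean_x, mean_y = fmean(days), fmean(usage)
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, usage))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def reorder_point(usage, lead_time_days, safety_stock):
    """Forecast consumption over the lead time (never below zero per day) plus a buffer."""
    slope, intercept = fit_trend(usage)
    start = len(usage)
    forecast = sum(
        max(0.0, intercept + slope * day) for day in range(start, start + lead_time_days)
    )
    return forecast + safety_stock

def should_reorder(on_hand, usage, lead_time_days, safety_stock):
    """Raise an alert once stock on hand falls to the reorder point."""
    return on_hand <= reorder_point(usage, lead_time_days, safety_stock)
```

With daily usage of `[10, 12, 14, 16, 18]` units, a 3-day lead time, and a safety stock of 10, the fitted trend predicts 20, 22, and 24 units over the next three days, so the reorder point is 76 units. The "learning" here is only the fitted slope and intercept, but it already adapts to rising demand in a way a fixed reorder threshold would not.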

The World of Deep Learning

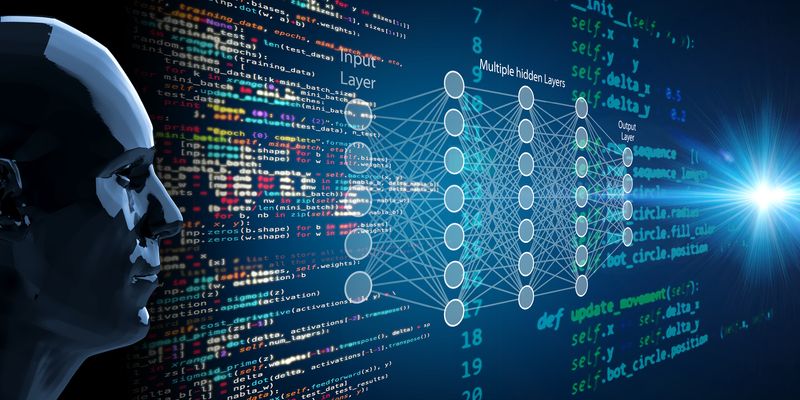

Deep learning is a subset of machine learning that uses layered neural networks to identify complex patterns in data. These networks are loosely inspired by the structure of the human brain, and their many layers allow DL models to learn abstract features from unstructured data such as images or text.

What sets deep learning apart is its ability to extract features automatically, without a human defining them in advance. This brings notable advantages, including higher accuracy and better scalability on complex datasets, but it also demands significantly more data and computational resources.
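Why layers matter can be shown with a classic toy problem, XOR (output 1 when exactly one input is 1). No single weighted sum followed by a threshold can compute XOR, but two layers can: the first layer builds intermediate features ("a OR b" and "a AND b"), and the second combines them. To keep the sketch tiny and deterministic, the weights are set by hand and a step activation is used; real DL models learn millions of weights through training (backpropagation) and use smooth activations. In a trained network, intermediate features like these are discovered automatically, which is the sense in which DL extracts features without human intervention.

```python
def step(x):
    """Threshold activation: the neuron fires (1) only if its input is positive."""
    return 1 if x > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: a weighted sum per neuron, then the activation."""
    return [
        step(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hidden layer, hand-set: neuron 1 fires for "a OR b", neuron 2 for "a AND b".
HIDDEN_WEIGHTS = [[1, 1], [1, 1]]
HIDDEN_BIASES = [-0.5, -1.5]

# Output layer, hand-set: fires for "OR but not AND", which is XOR.
OUTPUT_WEIGHTS = [[1, -2]]
OUTPUT_BIASES = [-0.5]

def xor_network(a, b):
    """Two-layer network: raw inputs -> intermediate features -> decision."""
    hidden = layer([a, b], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]
```

Evaluating `xor_network` on the inputs (0, 0), (0, 1), (1, 0), and (1, 1) gives 0, 1, 1, 0. Deep networks stack many such layers, so later layers can build on increasingly abstract features, which is also why they need far more data and compute to train.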

Lab Use Cases for DL

Deep learning tools are increasingly pivotal in scientific research:

- Large Language Models: Applications like ChatGPT and Google Gemini are gaining traction in labs for diverse tasks, from summarizing research findings to coding assistance.

- Organoid Analysis: DL methods automate the analysis of organoids, improving both processing speed and accuracy in biomedical research.

- Protein Folding Predictions: Models such as AlphaFold and the open-source alternative Boltz use DL to predict protein structures and interactions, which can substantially speed up early-stage drug discovery.

AI, Machine Learning, and Deep Learning: A Comparison Table

Understanding the distinctions among AI, ML, and DL can aid lab managers in selecting the right tools for their needs:

| Category | AI | ML | DL |
|---|---|---|---|
| Input | Rules or data | Labeled data | Raw data (images, text, etc.) |
| Learning Method | Pre-programmed or reactive | Learns patterns via training | Learns features and patterns directly from data |
| Human Involvement | High (rules must be defined) | Medium (features must be extracted manually) | Low (extracts features autonomously) |
| Complexity | Broad range | More adaptable than rule-based systems | Most adaptable; loosely inspired by the brain |
| Example | IF/THEN logic in equipment scheduling | Email spam filters | ChatGPT, AlphaFold, image classifiers |

In summary, while a label like "AI-powered" may sound enticing, what sits under the hood (a rule-based system, a traditional ML model, or a deep learning architecture) ultimately determines a tool's applicability and effectiveness in a laboratory context. Understanding these layers helps lab managers make informed choices that align with their operational needs.