Revolutionizing Accessibility with Wearable Brain-Computer Interfaces

Imagine a world where individuals with limited physical capabilities can interact with their environment through the power of their thoughts. Engineers at UCLA are making strides toward that goal with a wearable, noninvasive brain-computer interface (BCI) system. The system employs artificial intelligence (AI) as a "co-pilot" that interprets user intent and assists in completing tasks, such as moving a robotic arm or controlling a computer cursor.

The Underpinning Technology

At the heart of this advancement is electroencephalography (EEG), a method that records the brain’s electrical activity. The UCLA engineering team has meticulously developed custom algorithms that can decode these signals to extract the user’s movement intentions. This decoding process transforms neural impulses into actionable commands that a computer or robotic system can understand and execute.
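To make the idea of decoding concrete, here is a minimal sketch of one common approach: a linear readout that maps a vector of EEG features to a two-dimensional velocity. The article does not describe the team’s custom algorithms, so the linear form, the function name `decode_velocity`, and all numbers below are illustrative assumptions rather than the UCLA method.

```python
from typing import List, Sequence


def decode_velocity(features: Sequence[float],
                    weights: Sequence[Sequence[float]],
                    bias: Sequence[float]) -> List[float]:
    """Linear readout: one output per weight row, row . features + bias."""
    return [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]


# Hypothetical values: three EEG features mapped to an (x, y) velocity.
features = [0.5, -1.0, 2.0]
weights = [
    [1.0, 0.0, 0.5],  # row that produces the x velocity
    [0.0, 2.0, 0.0],  # row that produces the y velocity
]
bias = [0.0, 0.5]

velocity = decode_velocity(features, weights, bias)
print(velocity)  # [1.5, -1.5]
```

In a real system the weights would be fit to recorded data and the features would come from filtered EEG, neither of which is shown here.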

AI as a Collaborative Partner

What sets this BCI apart is its integration with a camera-based AI platform. While the EEG decoder extracts the user’s movement intentions, the AI observes the scene and infers in real time what the user is trying to do, allowing for a more fluid interaction with assistive technologies. With this combination of brain signals and AI, participants completed tasks significantly faster than they could without the assistance.
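One simple way to picture a co-pilot is as a blend of two velocities: the one decoded from the user and one that points toward the goal the AI has inferred. The sketch below assumes that formulation. The function `blend`, the mixing weight `alpha`, and the `speed` parameter are hypothetical names chosen for illustration; the article does not say how the actual system combines the two signals.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def blend(decoded: Vec, cursor: Vec, goal: Vec,
          alpha: float, speed: float = 1.0) -> Vec:
    """Mix the user's decoded velocity with an assist velocity toward goal.

    alpha = 0.0 gives the user full control; alpha = 1.0 gives the AI full control.
    """
    dx, dy = goal[0] - cursor[0], goal[1] - cursor[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        assist = (0.0, 0.0)  # already at the goal, no assist needed
    else:
        assist = (speed * dx / dist, speed * dy / dist)
    return (
        (1.0 - alpha) * decoded[0] + alpha * assist[0],
        (1.0 - alpha) * decoded[1] + alpha * assist[1],
    )


# The user pushes right while the inferred goal is straight up.
print(blend((1.0, 0.0), (0.0, 0.0), (0.0, 2.0), alpha=0.5))  # (0.5, 0.5)
```

Keeping `alpha` below 1.0 leaves the user in the loop, which is the point of shared control as opposed to full automation.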

A Key Study with Promising Results

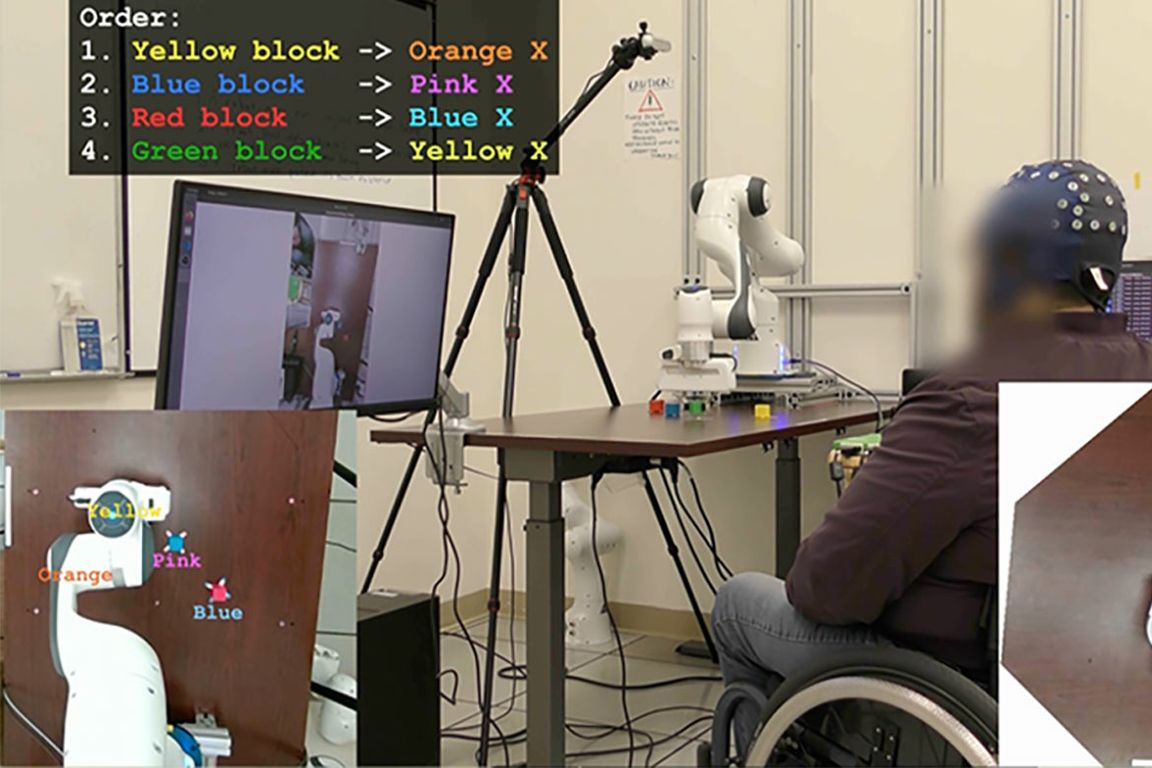

The study, published in Nature Machine Intelligence, reports a new level of efficacy for noninvasive BCIs. Four participants took part in the trials: three without motor impairments and one who is paralyzed from the waist down. Each wore a specially designed head cap that recorded EEG signals, while the AI system monitored their movements and helped them complete two distinct tasks.

In the first task, participants aimed to move a cursor on a computer screen to hit eight targets, maintaining the cursor’s position for at least half a second at each target. In the second scenario, they were tasked with using a robotic arm to relocate four blocks on a table to pre-defined positions. Remarkably, the paralyzed participant successfully completed the robotic task in approximately six and a half minutes with AI assistance, a feat he could not achieve unaided.
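The half-second hold in the cursor task amounts to a dwell-time check, which can be sketched as follows. The half-second threshold comes from the task description above; the sample format, the target radius, and the function name `first_hit_time` are assumptions made for illustration.

```python
import math
from typing import Iterable, Optional, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y)


def first_hit_time(samples: Iterable[Sample],
                   target: Tuple[float, float],
                   radius: float,
                   dwell_s: float = 0.5) -> Optional[float]:
    """Return the time at which the cursor has stayed inside the target
    for dwell_s seconds without leaving, or None if that never happens."""
    entered = None  # time of the most recent entry into the target
    for t, x, y in samples:
        inside = math.hypot(x - target[0], y - target[1]) <= radius
        if not inside:
            entered = None  # leaving the target resets the dwell timer
        elif entered is None:
            entered = t
        if entered is not None and t - entered >= dwell_s:
            return t
    return None


# Hypothetical cursor trace: the first visit is too short, the second succeeds.
trace = [
    (0.0, 5.0, 5.0),
    (0.25, 0.0, 0.0),
    (0.5, 0.1, 0.0),
    (0.625, 3.0, 3.0),  # cursor slips out of the target
    (0.75, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.25, 0.0, 0.1),
]
print(first_hit_time(trace, target=(0.0, 0.0), radius=0.5))  # 1.25
```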

How It Works: Decoding Intent Through Brain Signals

The BCI system interprets the electrical signals that convey intended actions. Rather than relying on eye movements, as some conventional systems might, the AI co-pilot uses computer vision to infer what the user is trying to do. This allows for a more seamless interaction between the user and the technology, further bridging the gap for those with limited mobility.
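As a rough illustration of how vision and decoded intent could be combined, the sketch below picks, from a set of object positions that a camera-based detector might supply, the one best aligned with the direction the user is trying to move. Cosine-similarity scoring, the function `infer_goal`, and the object names are all assumptions for the example, not details reported in the article.

```python
import math
from typing import Dict, Optional, Tuple

Vec = Tuple[float, float]


def infer_goal(cursor: Vec, decoded: Vec,
               candidates: Dict[str, Vec]) -> Optional[str]:
    """Return the candidate whose direction from the cursor best matches
    the decoded movement direction (highest cosine similarity)."""
    best_name, best_score = None, -math.inf
    for name, (x, y) in candidates.items():
        dx, dy = x - cursor[0], y - cursor[1]
        norm = math.hypot(dx, dy) * math.hypot(decoded[0], decoded[1])
        if norm == 0.0:
            continue  # no direction to compare against
        score = (dx * decoded[0] + dy * decoded[1]) / norm
        if score > best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical detections: the user is moving mostly to the right.
objects = {"block_a": (5.0, 0.0), "block_b": (0.0, 5.0), "block_c": (-5.0, 0.0)}
print(infer_goal((0.0, 0.0), (1.0, 0.1), objects))  # block_a
```

A practical system would likely accumulate evidence over time instead of trusting a single noisy sample, but the single-step version shows the basic idea.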

Future Directions in AI-BCI Development

The exciting possibilities for AI-assisted BCIs are just beginning to unfold. Researchers like Johannes Lee, a co-lead author and doctoral candidate at UCLA, envision the next generation of co-pilot systems that could further refine robotic arm movements for increased speed and precision. These enhancements might allow for nuanced interactions, adapting the AI’s responses to various objects and tasks.

Moreover, incorporating larger datasets for training the AI could enable it to tackle more complex tasks and improve the efficiency of EEG signal decoding. This points towards a future where shared autonomy between humans and machines isn’t just a dream, but a practical reality.

A Collaborative Effort

The research team consists of members of Jonathan Kao’s Neural Engineering and Computation Lab at UCLA, whose combined expertise reflects the collaborative nature of the work. The project receives financial backing from the National Institutes of Health and a partnership between UCLA and Amazon through the Science Hub for Humanity initiative.

The Road Ahead

With patents for this cutting-edge AI-BCI technology already in the works, the implications of this research extend beyond individual users. It signals a potential shift in how we understand and implement assistive technologies for those with movement disorders, such as paralysis or amyotrophic lateral sclerosis (ALS). As the journey continues, the integration of AI with BCI systems holds the promise of significantly enhancing independence for individuals facing physical challenges.

In essence, UCLA’s innovative work is carving a path toward a more inclusive future, where technology and human spirit converge for greater accessibility and empowerment.