Trends and Growth Insights in the AI Infrastructure Market

Understanding AI Infrastructure

AI infrastructure comprises the hardware and software resources necessary to develop and deploy artificial intelligence applications. This includes servers, storage systems, network components, and specialized software that support machine learning, data analytics, and AI model deployment. As organizations increasingly adopt AI solutions, the demand for robust infrastructure grows significantly.

For instance, Tesla relies on specialized AI infrastructure for its autonomous vehicle systems, which must process data and respond with feedback in real time. The example highlights the essential role infrastructure plays in operational efficiency.

Core Aspects Driving Market Growth

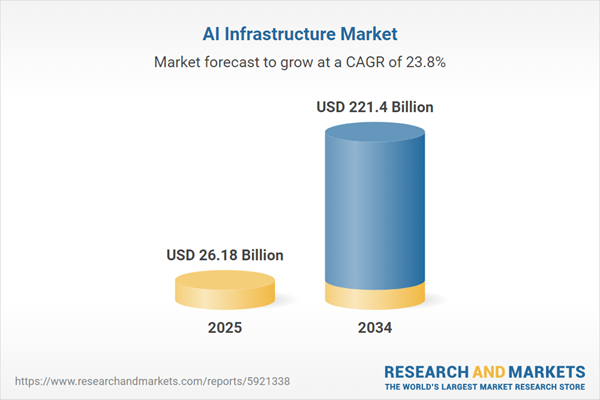

The AI infrastructure market, valued at approximately USD 26.18 billion in 2024, is expected to grow at a compound annual growth rate (CAGR) of 23.80% to reach USD 221.40 billion by 2034 (ResearchAndMarkets.com, 2025). Key growth drivers include:

- Generative AI Models: Training and serving these models requires substantial computational resources, driving demand for advanced hardware such as NVIDIA's GPUs.

- Edge AI Adoption: Organizations are deploying AI locally, close to where data is generated, to improve operational efficiency, which increases demand for low-latency infrastructure.

For example, automotive manufacturers are increasingly integrating AI to optimize production processes, illustrating how closely AI capabilities are tied to the infrastructure that supports them.
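The market figures above are linked by the standard compound-growth formula, value_end = value_start × (1 + CAGR)^years. This minimal Python check uses only the numbers quoted from ResearchAndMarkets.com and treats 2024 to 2034 as a 10-year span. It shows that those figures are internally consistent. The function names are illustrative.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Back out the constant annual growth rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in USD billions; 2024 -> 2034 is 10 years.
projected_2034 = project_value(26.18, 0.238, 10)
rate = implied_cagr(26.18, 221.40, 10)

print(f"Projected 2034 value: USD {projected_2034:.2f} billion")
print(f"Implied CAGR: {rate:.2%}")
```

Running it should print a 2034 value of about USD 221.40 billion and an implied CAGR of about 23.80%, matching the quoted forecast.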

Components of AI Infrastructure

AI infrastructure can be divided into several core components:

- Hardware: This includes GPUs, high-performance servers, and storage systems essential for processing large datasets and executing complex algorithms.

- Server Software: Solutions like Kubernetes facilitate the orchestration of AI workloads, enabling scalability and efficient resource allocation.

One illustration is NVIDIA's collaboration with various technology leaders to build specialized AI infrastructure aimed at strengthening the European Union's digital ecosystem.
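A common first step in sizing GPU hardware is estimating how much accelerator memory a model needs. The sketch below uses widely cited rules of thumb, not any vendor's sizing method. Model weights take bytes-per-parameter × parameter count. Mixed-precision training with the Adam optimizer needs roughly 16 bytes per parameter, covering fp16 weights and gradients, fp32 master weights, and two optimizer moments. Activations, KV caches, and framework overhead are ignored, and the 7-billion-parameter model is hypothetical.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def inference_weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for model weights alone (excludes activations and KV cache)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def training_state_gb(num_params: float, bytes_per_param: int = 16) -> float:
    """Rough mixed-precision Adam estimate.

    fp16 weights (2) + fp16 gradients (2) + fp32 master weights (4)
    + two fp32 Adam moments (4 + 4) = 16 bytes per parameter.
    Activation memory is not included.
    """
    return num_params * bytes_per_param / 1e9


params = 7e9  # hypothetical 7-billion-parameter model
print(f"Inference weights (fp16): {inference_weights_gb(params):.0f} GB")
print(f"Training state (Adam, mixed precision): {training_state_gb(params):.0f} GB")
```

For the hypothetical model, this gives about 14 GB for fp16 inference weights but about 112 GB of training state. That exceeds the capacity of a single 80 GB GPU, which is one reason training workloads are spread across many GPUs.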

Step-by-Step Infrastructure Lifecycle

Building effective AI infrastructure involves a systematic lifecycle:

- Needs Assessment: Organizations determine the requirements of their specific AI applications, including anticipated data volumes and required processing speed.

- Design and Planning: This phase involves selecting suitable hardware and software while accounting for compliance and security requirements.

- Implementation: Teams deploy the selected infrastructure components, set up data pipelines, and establish environments for training AI models.

- Monitoring and Optimization: Continual performance monitoring and resource optimization ensure the infrastructure evolves with organizational needs.

For example, Microsoft’s substantial investment in AI-focused data centers in Germany showcases a real-world application of this lifecycle.
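The needs-assessment step often comes down to back-of-the-envelope arithmetic on data volume and throughput. The following Python sketch shows one way to structure that arithmetic. Every input is a hypothetical placeholder: record counts, sizes, retention, replication, request rates, and the 30% headroom. The per-GPU throughput is meant to come from a small pilot rather than a guess.

```python
import math
from dataclasses import dataclass


@dataclass
class WorkloadAssumptions:
    """Hypothetical inputs gathered during a needs assessment."""
    records_per_day: int
    bytes_per_record: int
    retention_days: int
    replication_factor: int           # copies kept for durability
    peak_requests_per_sec: float
    per_gpu_requests_per_sec: float   # measured in a pilot, not guessed
    headroom: float = 1.3             # spare capacity for growth and spikes


def storage_tb(w: WorkloadAssumptions) -> float:
    """Replicated storage needed to retain all records, in terabytes."""
    raw_bytes = w.records_per_day * w.bytes_per_record * w.retention_days
    return raw_bytes * w.replication_factor / 1e12


def gpus_needed(w: WorkloadAssumptions) -> int:
    """GPUs required to serve peak load with headroom, rounded up."""
    return math.ceil(w.peak_requests_per_sec * w.headroom / w.per_gpu_requests_per_sec)


w = WorkloadAssumptions(
    records_per_day=50_000_000,
    bytes_per_record=2_000,
    retention_days=365,
    replication_factor=3,
    peak_requests_per_sec=1_200,
    per_gpu_requests_per_sec=150,
)
print(f"Storage: {storage_tb(w):.1f} TB, GPUs at peak: {gpus_needed(w)}")
```

With these placeholder inputs, the estimate is 109.5 TB of replicated storage and 11 GPUs at peak load. Measuring per-GPU throughput in a pilot before committing to full-scale hardware is the same remedy suggested below for underestimated resource needs.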

Common Pitfalls and Solutions

Organizations often face several challenges in establishing AI infrastructure:

- Underestimating Resource Needs: Failing to accurately assess the required compute power can lead to performance bottlenecks. Piloting smaller projects before full-scale implementation helps produce more reliable estimates.

- Ignoring Energy Efficiency: AI operations are power-intensive, and data centers are projected to account for 8% of global electricity usage by 2030 (International Energy Agency, 2023). To limit the environmental impact, organizations should invest in energy-efficient systems and cooling technologies.

For instance, Japan’s government is providing subsidies for liquid cooling systems in AI-related infrastructure, showcasing a proactive approach to energy management.
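Cooling efficiency is commonly tracked with Power Usage Effectiveness (PUE). PUE is total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead for cooling or power distribution. The sketch below shows how a lower PUE translates into annual energy savings for a constant IT load. The 2 MW load and the PUE values of 1.5 and 1.2 are hypothetical and are not claims about any particular cooling technology.

```python
HOURS_PER_YEAR = 24 * 365


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def annual_facility_mwh(it_load_kw: float, pue_value: float) -> float:
    """Facility energy per year (MWh) for a constant IT load at a given PUE."""
    return it_load_kw * pue_value * HOURS_PER_YEAR / 1000


it_load_kw = 2_000  # hypothetical: 2 MW of AI servers running continuously
baseline = annual_facility_mwh(it_load_kw, 1.5)  # hypothetical less efficient site
improved = annual_facility_mwh(it_load_kw, 1.2)  # hypothetical more efficient site

print(f"Baseline: {baseline:,.0f} MWh/yr, improved: {improved:,.0f} MWh/yr")
print(f"Savings: {baseline - improved:,.0f} MWh/yr")
```

Under these assumptions, the facility would use 26,280 MWh per year at a PUE of 1.5 and 21,024 MWh at a PUE of 1.2, a saving of 5,256 MWh.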

Tools and Frameworks in Practice

Various tools and frameworks support AI infrastructure demands:

- Kubernetes: This open-source platform manages containerized applications, allowing organizations to deploy and scale AI solutions effectively.

- TensorFlow: Widely used for machine learning, TensorFlow offers flexibility in building and deploying AI models, making it a staple in AI infrastructure setups.

Using these tools can shorten the development cycle, particularly as organizations scale their AI capabilities.
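To illustrate how Kubernetes expresses an AI workload, the Python sketch below builds a Deployment manifest for a GPU-backed service. It assumes the cluster runs NVIDIA's device plugin, which exposes GPUs as the extended resource `nvidia.com/gpu`. The service name, container image, and replica count are hypothetical. Scaling out is a matter of changing `replicas`.

```python
import json


def gpu_deployment(name: str, image: str, replicas: int, gpus_per_pod: int) -> dict:
    """Build a Kubernetes Deployment manifest that requests NVIDIA GPUs.

    Assumes the NVIDIA device plugin is installed, so GPUs are exposed
    as the extended resource "nvidia.com/gpu".
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # scale out by raising this number
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                # GPUs are requested via limits; the scheduler only
                                # places a pod on a node with enough free GPUs.
                                "limits": {"nvidia.com/gpu": gpus_per_pod},
                            },
                        }
                    ],
                },
            },
        },
    }


# Hypothetical service name and container image.
manifest = gpu_deployment(
    "model-server", "registry.example.com/model-server:1.0", replicas=3, gpus_per_pod=1
)
print(json.dumps(manifest, indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output could be saved to a file and applied to a cluster.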

Alternatives and Trade-offs

Organizations have several deployment models for infrastructure:

- On-Premises: Preferred for controlled environments where data security is critical, although this model carries higher upfront costs.

- Cloud-based Solutions: Offer scalability and flexibility, making them suitable for enterprises looking to minimize capital expenditure. However, dependence on internet connectivity can be a drawback.

- Hybrid Models: These combine both approaches, allowing organizations to balance data security with the agility of cloud solutions.

Choosing the right model depends on specific organizational needs and regulatory requirements.
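The trade-off between up-front capital spending and ongoing operating costs in on-premises versus cloud deployment can be framed as a break-even calculation. This sketch assumes constant monthly costs. It ignores hardware refresh cycles, differences in utilization, and discounting. All figures are hypothetical.

```python
import math
from typing import Optional


def breakeven_month(onprem_upfront: float, onprem_monthly: float,
                    cloud_monthly: float) -> Optional[int]:
    """Return the first month in which cumulative on-premises cost is no
    higher than cumulative cloud cost, or None if that never happens.

    Cumulative on-premises cost after m months: upfront + m * onprem_monthly
    Cumulative cloud cost after m months:       m * cloud_monthly
    """
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return None  # on-premises never recovers its upfront cost
    return math.ceil(onprem_upfront / monthly_saving)


# Hypothetical figures in USD.
month = breakeven_month(onprem_upfront=1_200_000, onprem_monthly=20_000,
                        cloud_monthly=70_000)
print(f"On-premises breaks even in month {month}")
```

The example assumes a hypothetical USD 1.2 million upfront outlay, USD 20,000 per month to run on premises, and USD 70,000 per month in the cloud. Under those figures, on-premises becomes cheaper from month 24. If the monthly cloud bill is lower than the on-premises running cost, there is no break-even point.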

FAQs

What is edge AI, and why is it important?

Edge AI refers to running AI models at the edge of a network, close to where data is generated. This reduces latency and improves response times, which is crucial for applications such as autonomous driving and real-time manufacturing.
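The latency argument for edge AI can be made concrete with simple arithmetic: how far a vehicle travels while it waits for a decision. The latency values below are hypothetical round numbers chosen for illustration, not measurements of any system.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (metres) a vehicle covers while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Hypothetical latencies: round trip to a remote data center vs. on-board inference.
for label, latency_ms in [("cloud round trip", 100), ("on-device", 10)]:
    d = distance_during_latency_m(100, latency_ms)
    print(f"{label:>16}: {latency_ms:>3} ms -> {d:.2f} m travelled at 100 km/h")
```

At 100 km/h, a 100 ms round trip corresponds to roughly 2.8 m of travel, compared with under 0.3 m for a 10 ms on-device decision.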

How can organizations ensure energy efficiency in AI infrastructure?

Implementing sustainable practices, such as utilizing liquid cooling technologies and adopting energy-efficient hardware, can significantly reduce the overall energy footprint of AI operations.

What are the primary hardware requirements for AI infrastructure?

Requirements typically include high-performance GPUs, substantial RAM, and fast storage solutions to manage vast datasets efficiently.

Why is compliance critical for AI infrastructure?

Regulatory compliance ensures that organizations’ AI systems adhere to data protection standards, which is particularly important in industries like finance and healthcare.