Quantitative Performance Evaluation of a GAN-based Educational System

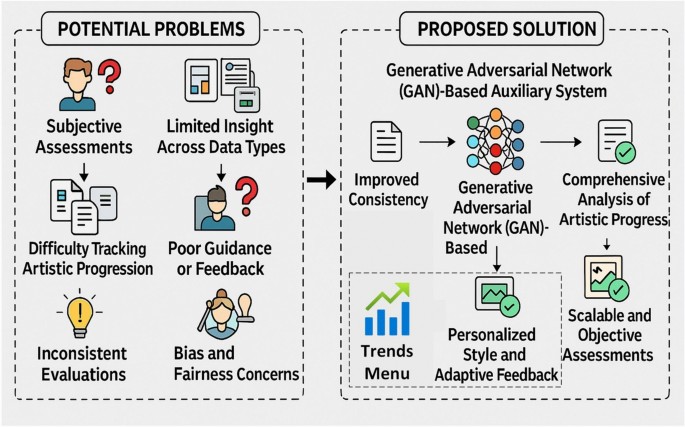

In the realm of art education, the emergence of Generative Adversarial Networks (GANs) has opened new avenues for creativity and pedagogical effectiveness. This article presents a quantitative performance evaluation of a GAN-based educational system, comparing its outputs against four baseline architectures widely used in image generation and image-to-image translation: Baseline-GAN, StyleGAN, Pix2Pix, and CycleGAN. Using Fréchet Inception Distance (FID) and Inception Score (IS), we examine how the quality and diversity of the proposed model’s outputs compare with those of the baselines.

Comparative Analysis of Baseline Architectures

In our quantitative evaluation, we measured the generative quality and diversity of outputs across these models. The proposed model achieved the lowest FID, 34.2. FID compares the distribution of Inception-network features extracted from generated images with that of real artwork, so a lower score means the generated images are statistically closer to real ones. Baselines such as CycleGAN and Pix2Pix lagged, with FID scores of 53.4 and 58.9, respectively. Our model’s lower FID therefore suggests that its outputs have higher visual fidelity and align more closely with the distribution of real artwork.
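FID fits a Gaussian to feature embeddings of the real and the generated images and reports the Fréchet distance between the two Gaussians: the squared distance between the means plus Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^(1/2)). Below is a minimal NumPy sketch. It assumes features have already been extracted (standard FID uses 2048-dimensional Inception-v3 pooling activations). The function name is hypothetical, and this is not the evaluation code behind the scores above.

```python
import numpy as np


def frechet_inception_distance(real_feats: np.ndarray, gen_feats: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two feature matrices.

    Both inputs have shape (n_samples, n_features). Standard FID uses
    Inception-v3 pooling features; any feature matrix is accepted here.
    """
    mu_r = real_feats.mean(axis=0)
    mu_g = gen_feats.mean(axis=0)
    # atleast_2d keeps the single-feature case a 1x1 matrix instead of a scalar.
    cov_r = np.atleast_2d(np.cov(real_feats, rowvar=False))
    cov_g = np.atleast_2d(np.cov(gen_feats, rowvar=False))

    # Tr((cov_r cov_g)^(1/2)) is the sum of square roots of the eigenvalues of
    # cov_r @ cov_g. These are real and non-negative in exact arithmetic, so
    # round-off (tiny imaginary or negative parts) is discarded before sqrt.
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    mean_term = np.sum((mu_r - mu_g) ** 2)
    return float(mean_term + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_sqrt)
```

The score depends on the feature extractor, the sample size, and preprocessing. FID values are therefore only comparable when every model is evaluated under the same setup.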

On Inception Score, our model achieved 3.9, the highest among all tested architectures. IS rewards both the recognizability of individual images and the diversity of the generated set, which suggests that our approach captures a broader range of artistic styles and themes. The proposed model fuses sketch, style, and textual inputs in a multi-modal GAN architecture, and it draws on these complementary sources to improve the quality of its outputs.
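IS passes each generated image through a pretrained classifier. It then takes the exponential of the average KL divergence between the per-image label distribution p(y|x) and the marginal p(y) over all images. The score is high when individual predictions are confident and the predicted labels are spread across classes, and it ranges from 1 to the number of classes. The sketch below assumes the classifier’s softmax outputs are already available as a matrix. The function name and the `eps` smoothing term are illustrative choices.

```python
import numpy as np


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(E_x[KL(p(y|x) || p(y))]) for an (n_images, n_classes) matrix.

    Each row is a classifier's softmax output for one generated image
    (Inception-v3 trained on ImageNet in the standard metric).
    """
    probs = np.asarray(probs, dtype=float)
    marginal = probs.mean(axis=0, keepdims=True)  # p(y), averaged over images
    # Per-image KL divergence between the conditional and marginal label distributions.
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))
```

For example, identical predictions for every image give a score of 1. Confident predictions spread evenly over four classes (`np.eye(4)`) give 4. Published IS values are usually averaged over several splits of the sample, a step this sketch omits.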

Qualitative Visual Outcomes and User Feedback

Beyond the quantitative metrics, we evaluated perceptual quality and user satisfaction through qualitative analysis. We conducted two user studies. The first was a survey in which 60 students assessed the visual realism of outputs from each model. The second was an expert review in which 12 professional artists and instructors evaluated stylistic alignment and educational utility.

Participants rated the proposed model highest for visual realism (mean = 4.7/5) and stylistic consistency (mean = 4.8/5), ahead of all baseline models. CycleGAN and Pix2Pix, for example, received mean scores of 4.0 and 3.8 for realism and 3.9 and 3.6 for stylistic consistency. This feedback indicates that the model produces visually striking and educationally meaningful content. Participants’ comments noted that the outputs "resembled instructor-quality illustrations" and "captured individual artistic style effectively."

Ablation Study and Component Impact

To understand how each input modality and architectural component contributes to overall performance, we performed an ablation study. We systematically removed key modules from the system and measured the resulting change in FID and the Structural Similarity Index Measure (SSIM), where lower FID and higher SSIM indicate better outputs.

Removing any of the key components (the sketch input, style reference, textual prompt, or feature fusion layer) produced a notable drop in performance. For instance, removing the sketch input raised FID to 46.8 and lowered SSIM to 0.72, which suggests that sketches provide vital structural guidance. Every removal degraded performance. This confirms that each component makes its own contribution and that the input modalities complement one another in producing high-quality, stylistically accurate outputs.
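SSIM compares two images through their means, variances, and covariance:

SSIM(x, y) = ((2μ_xμ_y + C1)(2σ_xy + C2)) / ((μ_x² + μ_y² + C1)(σ_x² + σ_y² + C2))

Here C1 = (0.01L)² and C2 = (0.03L)² for dynamic range L. The index equals 1 for identical images. The pure-Python sketch below evaluates this formula over the whole image as a single window. The standard metric instead averages it over local Gaussian-weighted windows, so its values will differ from library implementations. The function name is hypothetical.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], data_range: float = 255.0) -> float:
    """Single-window SSIM over two equally sized grayscale images (flattened pixels).

    Simplification: the standard SSIM averages this quantity over local windows.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("images must have the same size and at least two pixels")
    n = len(x)
    c1 = (0.01 * data_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilises the contrast/structure term
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

An intensity-inverted copy of an image has a negative covariance with the original, so its SSIM falls below zero even though the luminance term stays close to 1.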

Latency and Real-Time Responsiveness

To assess the system’s practicality for real-time educational environments, we measured inference latency and examined scalability under varying user loads. Latency, the time from input submission to output generation, is crucial for classroom use.

The proposed model achieved an average inference latency of 278 ms. This is about 35% and 41% lower than CycleGAN (430 ms) and Pix2Pix (470 ms), respectively. Such responsiveness matters for live feedback and makes the model viable for digital art classrooms. In a stress test with 200 to 500 concurrent users, latency remained low and rose only slightly at peak load, which supports a smooth, engaging experience in collaborative learning settings.

User Study and Engagement Metrics

To gauge the system’s impact on the learner experience, we conducted a user study with 60 students over four weeks. Participants used the GAN-based system and completed a structured survey before and after the study period. The survey assessed five indicators: confidence, creativity, engagement, motivation, and overall satisfaction.

Pre-study scores averaged between 2.1 and 2.3, while post-study ratings rose to between 4.1 and 4.5 across the five indicators. Engagement metrics showed a 42.7% increase, and expert evaluations indicated a 35.4% improvement in the overall quality of student artwork. Usage frequency also pointed to strong adoption, with 45% of educators using the system daily.
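The same students rated the indicators before and after the study, so the comparison is a paired one. A minimal sketch of such a summary follows. The function name is hypothetical, the example ratings are made up for illustration, and the paired t statistic is an addition that the study does not report.

```python
import math
from statistics import fmean, stdev
from typing import Dict, Sequence


def pre_post_summary(pre: Sequence[float], post: Sequence[float]) -> Dict[str, float]:
    """Summarise paired pre/post ratings given by the same participants."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need paired ratings for at least two participants")
    diffs = [b - a for a, b in zip(pre, post)]
    pre_mean, post_mean = fmean(pre), fmean(post)
    sd = stdev(diffs)
    # Paired t statistic: mean within-person change divided by its standard error.
    # It is undefined (nan) when every participant changed by the same amount.
    paired_t = math.nan if sd == 0 else fmean(diffs) / (sd / math.sqrt(len(diffs)))
    return {
        "pre_mean": pre_mean,
        "post_mean": post_mean,
        "relative_change_pct": 100.0 * (post_mean - pre_mean) / pre_mean,
        "paired_t": paired_t,
    }
```

For the illustrative ratings `pre_post_summary([2, 2, 3, 1], [4, 5, 4, 4])`, the means are 2.0 and 4.25, a relative change of 112.5%.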

Comparative Performance Metrics

To assess the proposed GAN-based educational system rigorously, we compared it against state-of-the-art image-to-image translation models using FID, SSIM, and IS, alongside qualitative feedback from users and experts.

Compared with the baseline scores, the proposed model reduced FID by more than 35% and improved SSIM by approximately 18%. These gains in generative fidelity and structural similarity point to the effectiveness of the architectural design. By integrating multi-modal inputs (sketches, style images, and text prompts), the model captures artistic intent flexibly and produces high-quality outputs tailored to individual learning needs.
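Assume the "more than 35%" FID reduction is measured against the strongest baseline reported earlier (CycleGAN, FID 53.4). The claim can then be checked against the reported values:

```latex
\frac{53.4 - 34.2}{53.4} = \frac{19.2}{53.4} \approx 0.360 \quad (\text{vs. CycleGAN}),
\qquad
\frac{58.9 - 34.2}{58.9} = \frac{24.7}{58.9} \approx 0.419 \quad (\text{vs. Pix2Pix})
```

Both relative reductions exceed 35%.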

Potential Limitations

Despite its promising performance and user engagement, the GAN-based educational system has limitations. Chief among them is its reliance on the availability and diversity of high-quality training data. The datasets used, such as QuickDraw and WikiArt, are extensive but skewed toward Western art forms. This creates a risk of stylistic bias that could marginalize non-Western traditions.

Moreover, as with many deep learning architectures, parts of the model’s decision process remain opaque, which can make it difficult for educators and learners to trust its outputs in formative assessments. The system’s performance is also sensitive to poor-quality inputs, so users must follow specific input formatting guidelines to obtain the best results.

To address these challenges, we propose several strategies for improving inclusivity and performance across diverse artistic traditions. These are curating additional datasets from global collections, conducting systematic bias audits, and extending the personalization modules to account for cultural preferences.

By addressing these limitations proactively, the GAN-based educational system aims not only to generate high-quality artistic outputs but also to enhance the educational experience for a diverse user base.