Enhancing Deep Neural Networks with Adapters: Techniques, Design, and Implementation

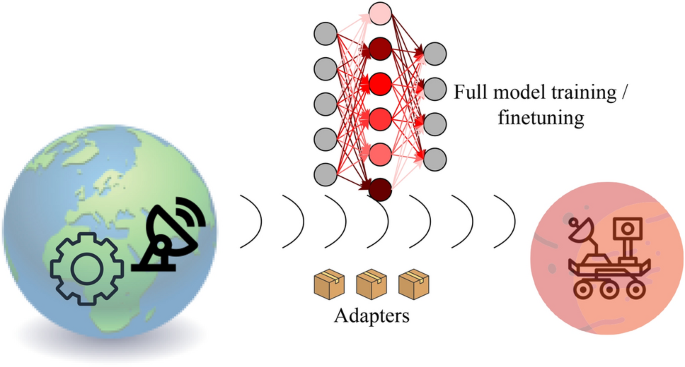

Deep Neural Networks (DNNs) remain at the forefront of artificial intelligence, powering advances in applications from image recognition to natural language processing. However, adapting these models efficiently to new environments and tasks remains a challenge. Here, we introduce adapters, components designed to add multi-domain capabilities to DNNs.

What Are Adapters?

Adapters serve as lightweight, trainable modules inserted into pre-existing DNN architectures, allowing models to adjust with minimal retraining. Our motivation stems from the need for versatile models that can adapt to various tasks without starting from scratch. Using adapters means we can fine-tune models for specific applications while retaining the core architecture.

Adapter Architecture and Design

Drawing inspiration from Rebuffi et al.’s work on residual adapters in ResNet-15, we design adapters that effectively outperform traditional transfer learning techniques. The architecture can be summarized mathematically. Let:

- \(y_n\) represent the output of the \(n\)-th layer in a DNN.

- The model can be described by the equation:

\[
y_n = \varphi_n\left\{ \text{BN}_n\left[ f_n\left( x_n, w_{f_n}\right), w_{\text{BN}_n}\right] \right\}
\]

Here, \(\varphi_n\) is the non-linearity, \(\text{BN}_n\) is the batch normalization layer, and \(f_n\) is the linear transformation, such as a fully connected or convolutional layer, applied to the layer input \(x_n\). The learnable parameters \(w_{f_n}\) and \(w_{\text{BN}_n}\) are associated with \(f_n\) and \(\text{BN}_n\), respectively.
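To make the notation concrete, here is a minimal sketch of one such layer in plain Python. It assumes that the linear transformation is a fully connected layer, that batch normalization runs in inference mode with stored statistics, and that the non-linearity is ReLU. The function names, the `eps` constant, and the toy numbers are illustrative assumptions, not part of the original formulation.

```python
import math

def linear(x, weights, bias):
    """f_n: fully connected layer, one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def batch_norm(h, gamma, beta, mean, var, eps=1e-5):
    """BN_n in inference mode: normalize with stored statistics, then scale and shift."""
    return [g * (hi - m) / math.sqrt(v + eps) + b
            for hi, g, b, m, v in zip(h, gamma, beta, mean, var)]

def relu(h):
    """phi_n: element-wise non-linearity."""
    return [max(0.0, hi) for hi in h]

def layer(x, w_f, w_bn):
    """y_n = phi_n{ BN_n[ f_n(x_n, w_f), w_bn ] }"""
    return relu(batch_norm(linear(x, *w_f), *w_bn))

# Toy parameters (hypothetical values, 3 inputs -> 2 outputs).
w_f = ([[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], [0.0, 0.0])   # weights, bias
w_bn = ([1.0, 1.0], [0.0, 0.0], [0.0, 0.0], [1.0, 1.0])   # gamma, beta, mean, var
y = layer([1.0, 2.0, 3.0], w_f, w_bn)
```

The first output is clipped to zero by the ReLU because the linear layer produces a negative value there, while the second passes through almost unchanged.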

When transitioning from an upstream task (\(T_0\)) to a downstream task (\(T_1\)), the model’s parameters can change. If we adjust the parameters of the \(n\)-th layer, the updated output can be expressed as:

\[
y_n^{T_1} = \varphi_n\left\{ \text{BN}_n\left[ f_n\left( x_n, w_{f_n}^{T_1}\right), w_{\text{BN}_n}^{T_1}\right] \right\}
\]

To reduce computational complexity, note that if \(T_1\) is closely related to \(T_0\), the parameter changes \(\Delta w_{f_n}^{T_1}\) can be small, which lets us learn the adjustment directly by inserting a series adapter:

\[
A_n^{T_1} = f_{A_n}\left[ f_n\left( x_n, w_{f_n}^{T_0}\right), w_{f_{A_n}}^{T_1}\right]
\]

This approach simplifies parameter learning, allowing for efficient integration without overhauling existing structures.
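As a rough illustration of the series adapter, the sketch below keeps the upstream weights frozen and applies a small trainable map to the layer output. It assumes a fully connected base layer and a linear adapter initialized to the identity. The helper names and values are hypothetical, and the adapter is simplified here to a single matrix rather than the batch normalization plus 1x1 convolution described later.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def identity(n):
    """n x n identity matrix, used to initialize the adapter."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def adapted_layer(x, w_f_t0, w_adapter_t1):
    """A_n^{T1} = f_A[ f_n(x_n, w_f^{T0}), w_A^{T1} ]"""
    h = matvec(w_f_t0, x)            # frozen upstream transformation f_n
    return matvec(w_adapter_t1, h)   # trainable adapter f_A applied on top

w_f_t0 = [[1.0, 2.0, 0.0], [0.0, -1.0, 1.0]]  # frozen weights learned on T0
w_adapter = identity(2)                        # the only weights trained on T1
x = [1.0, 1.0, 2.0]

base_out = matvec(w_f_t0, x)
adapted_out = adapted_layer(x, w_f_t0, w_adapter)

# Pretend one training step on T1 changed a single adapter weight.
w_adapter[0][1] = 0.5
tuned_out = adapted_layer(x, w_f_t0, w_adapter)
```

With an identity initialization the adapted layer starts out reproducing the upstream layer, so training on the downstream task only has to learn the deviation while the base weights stay untouched.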

Memory Complexity and Efficiency

Adapters yield substantial memory savings because they learn only the necessary changes instead of duplicating the full set of convolution parameters. For instance, a convolution layer with weights \(w_{f_n}\), kernel size \(K_n\), \(I_n\) input channels, and \(O_n\) output channels has a space complexity of \(\Theta(K_n \times K_n \times I_n \times O_n)\). An adapter based on batch normalization and \(1 \times 1\) convolution reduces this overhead to \(\Theta(5 \cdot O_n)\).
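A quick back-of-the-envelope comparison shows the scale of the saving. The layer dimensions below are hypothetical, and the adapter count simply takes the 5 x O figure quoted above at face value.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weight count of a K x K convolution: K * K * I * O."""
    return kernel_size * kernel_size * in_channels * out_channels

def adapter_params(out_channels):
    """Adapter parameter count, using the 5 * O figure from the text."""
    return 5 * out_channels

# Hypothetical layer: 3x3 convolution with 64 input and 64 output channels.
full = conv_params(3, 64, 64)
adapter = adapter_params(64)
print(full, adapter, full / adapter)
```

For this layer the convolution holds 36,864 weights against 320 adapter parameters, roughly 115 times fewer.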

While this design significantly reduces the number of parameters to update, it adds computation at inference time. Techniques such as layer folding can mitigate this by consolidating the adapter operations directly into the main architecture.

Adapter Fusion: Streamlining Model Complexity

One effective method to save memory and reduce computational costs is to implement adapter fusion. This process merges adapter batch normalization layers with subsequent \(1 \times 1\) convolutional layers. The steps are as follows:

- Fusing Adapter Layers: Begin by compressing the adapter’s batch norm and sum operations with the \(1 \times 1\) convolutional layer.

- Renormalizing Layers: Next, fuse the resulting layer back into the original model’s batch norm layer.

- Final Fusion: Finally, incorporate the modified \(1 \times 1\) convolution into the main architecture’s convolutional layers.

Through this fusion, the inference-time cost of the adapters disappears, leaving a leaner model while retaining flexibility for future modifications.
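The first fusion step can be sketched as follows. The sketch assumes the adapter computes x + W·BN(x) + b at a single spatial position, where the 1x1 convolution is a matrix W acting on the channel vector and the skip connection stands for the sum operation. This structure, the helper names, and the numbers are assumptions for illustration rather than the exact layout used here. Because batch normalization at inference is a per-channel affine map, the whole adapter collapses into one matrix and one bias.

```python
import math

def bn_affine(gamma, beta, mean, var, eps=1e-5):
    """Express inference-mode BN as per-channel scale s and shift t: BN(x) = s*x + t."""
    scale = [g / math.sqrt(v + eps) for g, v in zip(gamma, var)]
    shift = [b - s * m for b, s, m in zip(beta, scale, mean)]
    return scale, shift

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def adapter_unfused(x, bn, weight, bias):
    """Reference adapter: x + W @ BN(x) + b."""
    scale, shift = bn_affine(*bn)
    normed = [s * xi + t for s, xi, t in zip(scale, x, shift)]
    conv = matvec(weight, normed)
    return [xi + ci + bi for xi, ci, bi in zip(x, conv, bias)]

def fuse_adapter(bn, weight, bias):
    """Fold BN and the skip connection into a single 1x1 convolution."""
    scale, shift = bn_affine(*bn)
    n = len(weight)
    # W_fused = I + W @ diag(scale): scale column j by scale[j], add identity for the skip.
    w_fused = [[weight[i][j] * scale[j] + (1.0 if i == j else 0.0)
                for j in range(n)] for i in range(n)]
    # b_fused = W @ shift + b
    b_fused = [c + b for c, b in zip(matvec(weight, shift), bias)]
    return w_fused, b_fused

# Hypothetical 2-channel adapter.
bn = ([1.5, 0.5], [0.1, -0.2], [0.3, 0.0], [4.0, 0.25])  # gamma, beta, mean, var
weight, bias = [[0.2, -0.1], [0.0, 0.3]], [0.05, 0.0]
x = [1.0, -2.0]

w_fused, b_fused = fuse_adapter(bn, weight, bias)
fused_out = [h + b for h, b in zip(matvec(w_fused, x), b_fused)]
reference_out = adapter_unfused(x, bn, weight, bias)
```

The fused and unfused versions agree up to floating-point rounding. The later steps rely on the same observation: consecutive affine operations compose into a single affine operation.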

Adapter Ranking: Optimizing Importance and Utility

Assessing the necessity of each adapter within the model can lead to more efficient utilization of resources. By implementing a ranking system, we can determine which adapters are beneficial and which can be discarded. This evaluation builds on the idea of keeping only those components contributing significantly to output accuracy.

We introduce a metric defined as:

\[
Z = \frac{\Vert w_{f_n} \Vert^2}{|w_{f_n}|}
\]

In this formula, \(Z\) indicates the relative importance of a layer’s parameters. High values suggest that the adapter is crucial for maintaining accuracy, while low values signal potential redundancy.
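A minimal sketch of the ranking follows. It assumes that the numerator is the squared L2 norm of the weights and that the denominator counts the parameters, so that Z is the mean squared weight. That reading of the notation, the adapter names, and the weight values are assumptions made for illustration.

```python
def importance(weights):
    """Z = ||w||^2 / |w|: squared L2 norm divided by the number of parameters."""
    return sum(w * w for w in weights) / len(weights)

def rank_adapters(adapters):
    """Return (name, Z) pairs sorted from most to least important."""
    scores = {name: importance(w) for name, w in adapters.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical flattened adapter weights, keyed by layer name.
adapters = {
    "layer1": [0.01, -0.02, 0.00, 0.01],
    "layer2": [0.80, -0.50, 0.30, 0.60],
    "layer3": [0.10, 0.20, -0.10, 0.05],
}
ranking = rank_adapters(adapters)
```

Here `layer2` ranks first and `layer1` last, so `layer1` would be the first candidate for removal under this score.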

Empirically, ranking adapters by this score guides model simplification: adapters that contribute to accuracy are retained, while low-scoring ones are trimmed.

Conclusion

Adapters offer an innovative solution for enhancing DNNs, providing flexibility for multi-domain tasks while ensuring efficient resource use. With proper design and implementation strategies, including concepts like fusion and ranking, we can adaptively manage model complexity and effectiveness across diverse applications. This continual evolution in adapter architecture not only answers contemporary needs in AI but also paves the way for future advancements in deep learning technologies.