Optimizing Neural Networks: Exploring Attention Module Enhancements

Introduction to Experiments

Optimizing neural networks, especially those built on attention mechanisms, is an ongoing concern in deep learning. This article reviews a series of experiments aimed at improving the efficiency and accuracy of attention modules in several neural network architectures. Each experiment applies a targeted optimization and measures its effect on evaluation metrics and inference speed across different network components.

Methodology: A Structured Approach

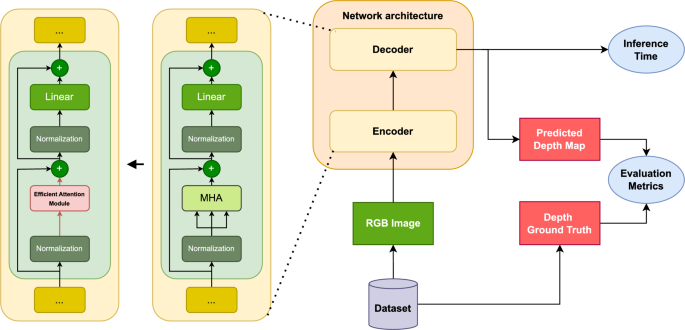

The experiments were structured to show how each optimization affects specific parts of a network. We focused on three configurations:

- Optimisation Network: Here, optimizations were applied to all attention modules across the entire architecture.

- Optimisation-Base Network: In this case, only the encoder’s attention modules were optimized, leaving the decoder unmodified.

- Base-Optimisation Network: This configuration applied optimizations only to the decoder, while the encoder remained unchanged.

This targeted approach not only illuminates which network regions benefit the most from optimizations but also helps pinpoint any performance trade-offs.
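The three configurations can be pictured as a small lookup that states which attention variant the encoder and the decoder receive. The sketch below is illustrative only: the function name `attention_plan`, the configuration keys, and the label `"base"` for unmodified attention are assumptions made for this example, not names taken from the experiments.

```python
def attention_plan(config: str, optimization: str) -> dict:
    """Map a configuration name to the attention variant used in each stage.

    Hypothetical helper: the keys and labels are illustrative only.
    """
    plans = {
        # Optimized attention in every module.
        "optimisation": {"encoder": optimization, "decoder": optimization},
        # Optimized encoder, unmodified decoder.
        "optimisation-base": {"encoder": optimization, "decoder": "base"},
        # Unmodified encoder, optimized decoder.
        "base-optimisation": {"encoder": "base", "decoder": optimization},
    }
    if config not in plans:
        raise ValueError(f"unknown configuration: {config!r}")
    return plans[config]


print(attention_plan("base-optimisation", "Pyra"))
```

Keeping the encoder and decoder choices separate is what allows a change in accuracy or speed to be attributed to one half of the network.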

Performance Metrics and Dataset Analysis

The empirical analysis used two popular datasets: NYU Depth V2 and KITTI. On each dataset, models were evaluated in tiny, base, and large sizes, giving a broad view of how architectural changes affect performance.

Tables 1 and 2 report the results for baseline and optimized models, measured by Root Mean Square Error (RMSE) and inference time. They reveal meaningful trade-offs between depth estimation quality and model speed.
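For reference, RMSE and the absolute relative error that appears in the KITTI results can be computed as in the sketch below. It uses the standard definitions over flattened depth maps. Skipping pixels whose ground-truth depth is zero is a common convention and is assumed here, since the article does not describe the evaluation protocol.

```python
import math


def rmse(pred, target):
    """Root mean square error over pixels with valid ground truth (target > 0)."""
    pairs = [(p, t) for p, t in zip(pred, target) if t > 0]
    return math.sqrt(sum((p - t) ** 2 for p, t in pairs) / len(pairs))


def abs_rel(pred, target):
    """Mean absolute error relative to the ground-truth depth."""
    pairs = [(p, t) for p, t in zip(pred, target) if t > 0]
    return sum(abs(p - t) / t for p, t in pairs) / len(pairs)


# Toy depth values in metres; the last pixel has no ground truth and is skipped.
predicted = [1.0, 2.0, 5.0]
ground_truth = [1.0, 4.0, 0.0]
print(rmse(predicted, ground_truth), abs_rel(predicted, ground_truth))
```

RMSE penalizes large errors more heavily, while the relative error weights a given miss more at short range than at long range, which is one reason the two metrics can rank models differently.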

Key Findings from NYU Depth V2 Dataset

Several observations were made while analyzing results:

- Most optimizations, with the exception of MoH, caused only minimal degradation in the quality metrics while reducing inference time.

- Across model sizes, the Meta and Pyra optimizations generally improved the networks, particularly in inference speed.

- In deeper architectures such as PixelFormer and NeWCRFs, decoder-level optimizations matched or even exceeded the baseline metrics.

The experiments showed that the optimizations can speed up inference but do not universally improve quality, so each method has to be judged on its own behavior.

Insights from the KITTI Dataset

On the outdoor scenes of the KITTI dataset, the METER models struggled to generalize. Further observations:

- The optimizations reduced RMSE and absolute relative error (AbsRel) for smaller models, but larger models did not show the same consistency.

- Efficient attention mechanisms contributed to increased speed across all models, with Meta showing the best inference times.

- NeWCRFs yielded superior results with MoH, illustrating that not all optimizations have a uniform effect across architectures.

These results show how hard it is to balance accuracy and efficiency, particularly when the type of data changes.

The Pareto Frontier: A Detailed Analysis

To identify the best trade-offs, the results were arranged on a Pareto frontier, which plots each model's RMSE against its inference time.

A model lies on the frontier when no other model is at least as good on both metrics and strictly better on one. Frontier models are therefore the best available compromises: improving one metric requires giving up some of the other. This view makes it straightforward to judge models on accuracy and computational cost together.

Several trends emerged from the analysis. Meta produced faster models, often at the expense of accuracy; Pyra offered a favorable balance between the two; and MoH brought only a minimal reduction in inference time.
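A Pareto frontier over RMSE and inference time, both to be minimized, can be extracted with a simple dominance check. The following is a minimal sketch. The `Result` type, the model names, and all numbers are placeholders invented for illustration; they are not values from Tables 1 and 2.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    rmse: float        # lower is better
    latency_ms: float  # lower is better


def pareto_frontier(results: List[Result]) -> List[Result]:
    """Return the results that no other result dominates."""
    frontier = []
    for r in results:
        dominated = any(
            o.rmse <= r.rmse
            and o.latency_ms <= r.latency_ms
            and (o.rmse < r.rmse or o.latency_ms < r.latency_ms)
            for o in results
        )
        if not dominated:
            frontier.append(r)
    # Sort by latency so the frontier reads from fastest to most accurate.
    return sorted(frontier, key=lambda r: r.latency_ms)


# Placeholder measurements, made up for this example.
candidates = [
    Result("model_a", rmse=0.40, latency_ms=10.0),
    Result("model_b", rmse=0.35, latency_ms=20.0),
    Result("model_c", rmse=0.45, latency_ms=15.0),  # slower and less accurate than model_a
    Result("model_d", rmse=0.35, latency_ms=25.0),  # same accuracy as model_b, slower
]
print([r.name for r in pareto_frontier(candidates)])
```

The quadratic scan is adequate for a few dozen model variants; for larger sets, sorting by one metric and sweeping the other is the usual refinement.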

Embedding Entropy Analysis

Focusing on embedding quality offered significant insights into model performance, especially for PixelFormer and NeWCRFs. Analysis revealed:

- Architectures with optimized decoders consistently showed superior accuracy and efficiency compared to models where optimizations were applied solely to the encoder.

- The increased dispersion in embedding distributions for optimized encoders suggested that such modifications impaired depth map reconstruction capabilities.

To quantify this, we used two measures: the differential entropy under a Gaussian assumption and the Kozachenko-Leonenko entropy estimator. For both, lower values indicate more concentrated embeddings, which in turn support more effective decoding.
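Both entropy measures can be sketched in plain Python. The Gaussian version fits a covariance matrix and evaluates 0.5 * log((2*pi*e)^d * det(cov)); the Kozachenko-Leonenko version is the standard k-nearest-neighbour estimator with Euclidean distances. The function names, the choice k=1, and the synthetic 2-D points are assumptions for illustration; the article does not state how the embeddings were sampled or reduced.

```python
import math
import random


def gaussian_entropy(samples):
    """Differential entropy (nats) of a Gaussian fitted to the samples."""
    n, d = len(samples), len(samples[0])
    mean = [sum(x[j] for x in samples) / n for j in range(d)]
    # Unbiased sample covariance matrix.
    cov = [[sum((x[a] - mean[a]) * (x[b] - mean[b]) for x in samples) / (n - 1)
            for b in range(d)] for a in range(d)]
    # log det(cov) from a Cholesky factor L: det(cov) = prod(diag(L)) ** 2.
    chol = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = cov[i][j] - sum(chol[i][k] * chol[j][k] for k in range(j))
            chol[i][j] = math.sqrt(s) if i == j else s / chol[j][j]
    log_det = 2.0 * sum(math.log(chol[i][i]) for i in range(d))
    return 0.5 * (d * math.log(2 * math.pi * math.e) + log_det)


def digamma_int(n):
    """Digamma at a positive integer: psi(n) = -gamma + sum_{j=1}^{n-1} 1/j."""
    return -0.5772156649015329 + sum(1.0 / j for j in range(1, n))


def kl_entropy(samples, k=1):
    """Kozachenko-Leonenko entropy estimate (nats); assumes no duplicate points."""
    n, d = len(samples), len(samples[0])
    # Log-volume of the d-dimensional Euclidean unit ball.
    log_unit_ball = (d / 2) * math.log(math.pi) - math.lgamma(d / 2 + 1)
    log_r_sum = 0.0
    for i, x in enumerate(samples):
        dists = sorted(math.dist(x, y) for j, y in enumerate(samples) if j != i)
        log_r_sum += math.log(dists[k - 1])  # distance to the k-th neighbour
    return digamma_int(n) - digamma_int(k) + log_unit_ball + d * log_r_sum / n


# Synthetic stand-ins for concentrated and dispersed embeddings.
random.seed(0)
tight = [[random.gauss(0, 0.1) for _ in range(2)] for _ in range(200)]
spread = [[random.gauss(0, 1.0) for _ in range(2)] for _ in range(200)]
print(gaussian_entropy(tight), gaussian_entropy(spread))
print(kl_entropy(tight), kl_entropy(spread))
```

The Gaussian measure is cheap but only reflects second-order statistics, whereas the nearest-neighbour estimator makes no distributional assumption, so agreement between the two is a useful sanity check.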

Comprehensive Comparison of Optimizations

The optimization techniques also differed clearly in resource cost. Notably:

- Meta consistently achieved the most favorable parameter count and memory footprint across all models.

- The other optimizations increased memory use because of additional layers or routing logic, whereas Meta relies on simpler pooling strategies, which keep it efficient without substantially changing the network structure.
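A back-of-the-envelope count shows why replacing attention with pooling shrinks a model. The sketch assumes that the baseline is standard self-attention with four linear projections (query, key, value, output) and that the pooling operator is plain average pooling with no learnable weights. Both are assumptions; the experiments only mention "simpler pooling strategies".

```python
def attention_params(dim: int, bias: bool = True) -> int:
    """Learnable parameters in the Q, K, V and output projections."""
    per_projection = dim * dim + (dim if bias else 0)
    return 4 * per_projection


def pooling_mixer_params() -> int:
    """Average pooling has no learnable weights (assumed operator)."""
    return 0


for dim in (64, 128, 256):
    print(dim, attention_params(dim), pooling_mixer_params())
```

Because the attention count grows quadratically with the embedding width, the saving per replaced module is largest in the widest stages of a network.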

Resource-Constrained Device Experiments

Real-world applicability was further examined through experiments using the Jetson Orin Nano board, reflecting actual deployment conditions for deep learning models. Performance analyses on this low-resource device yielded:

- Meta optimizations delivered the largest improvements in inference speed, confirming the benefit of its architectural changes.

- Conversely, MoH optimizations did not perform as well under resource constraints due to inherent routing overhead.

The contrast shows that the choice of optimization matters most when resources are tight, and that it should be matched to the deployment target.
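Inference time on an embedded board is usually measured with a few untimed warm-up runs followed by repeated timed runs. The helper below is a generic sketch of that procedure, not the benchmarking code used in the experiments; the name `measure_latency_ms` and the default run counts are assumptions.

```python
import statistics
import time


def measure_latency_ms(fn, warmup=5, runs=20):
    """Return (median, standard deviation) of the wall-clock time of fn() in ms."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings), statistics.stdev(timings)


# Stand-in workload; a real benchmark would call the model's forward pass here.
median_ms, spread_ms = measure_latency_ms(lambda: sum(i * i for i in range(10_000)))
print(f"median {median_ms:.3f} ms, std {spread_ms:.3f} ms")
```

On a GPU, kernels run asynchronously, so the device has to be synchronized before each clock reading; otherwise the timer captures only the launch overhead.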

Conclusion

In sum, these experiments show how closely model accuracy and computational efficiency are coupled in attention-based networks. Applying optimizations selectively, to the encoder, the decoder, or both, can improve speed with little loss in accuracy, but the best choice depends on the architecture, the dataset, and the target hardware. These findings support the design of efficient architectures for both high- and low-resource environments.