IDLTHAR-PDST Technique for Activity Recognition in IoT-Edge-Cloud Continuum

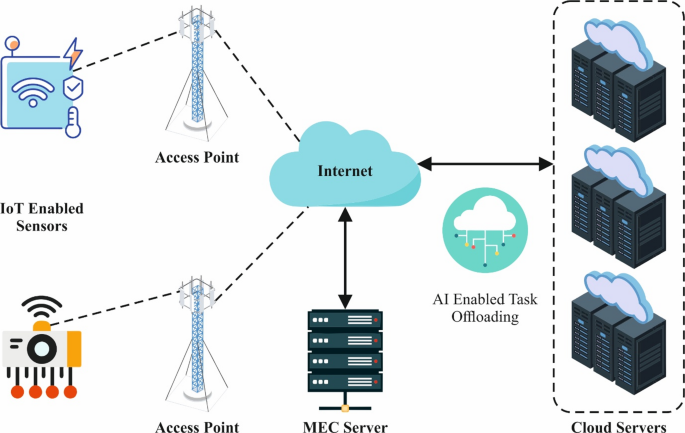

In a world increasingly dominated by smart technology, the ability to accurately recognize and interpret human activities becomes crucial. The IDLTHAR-PDST technique, designed for Human Activity Recognition (HAR), leverages sensor technology within the smart IoT-Edge-Cloud continuum, offering an efficient approach to this complex task. The significance of this technique lies in its multi-step process, which includes data preprocessing, an EHBA-based feature selection, and a DBN-based classification process.

Data Preprocessing

The initial phase of the IDLTHAR-PDST technique is data preprocessing. It uses min-max normalization, a widely used method for putting readings from different sensors on a common scale. This approach rescales each feature to a fixed range (typically between 0 and 1), so that the model does not favor features with inherently larger values. Normalized inputs also help the model learn more efficiently and converge faster, which matters for Deep Learning (DL) models.

Min-max normalization is computationally inexpensive and, being a linear rescaling, preserves the relative spacing between data points within each feature. This makes it well suited to the IoT-Edge-Cloud continuum, where real-time data from diverse sensors is processed. Irrelevant and redundant features are filtered out during preprocessing, enhancing model precision while minimizing computational complexity. Min-max normalization can be expressed as follows:

$$

{x}^{\prime}=\frac{x-\text{min}(x)}{\text{max}(x)-\text{min}(x)}

$$

Here, $x$ is the value being transformed, $x^{\prime}$ is its normalized value, and $\text{min}(x)$ and $\text{max}(x)$ are the smallest and largest values observed for that feature.
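To make the formula concrete, here is a minimal NumPy sketch of per-feature min-max normalization. The function name `min_max_normalize`, the `eps` guard for constant features, and the toy sensor readings are illustrative assumptions, not part of the IDLTHAR-PDST source.

```python
import numpy as np

def min_max_normalize(X, eps=1e-12):
    """Rescale each column (feature) of X to the range [0, 1]."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_max = X.max(axis=0)
    span = col_max - col_min
    # Assumption: a constant feature (zero span) is mapped to 0
    # rather than dividing by zero.
    safe_span = np.where(span > eps, span, 1.0)
    return (X - col_min) / safe_span

# Hypothetical readings: one small-valued and one large-valued sensor channel.
readings = np.array([[0.2, 980.0],
                     [0.5, 1010.0],
                     [0.8, 1040.0]])
scaled = min_max_normalize(readings)
print(scaled)
```

After scaling, both channels span the same range, so neither dominates purely because of its units.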

EHBA-based Feature Selection

Once data preprocessing is complete, the focus shifts to feature selection, where the Enhanced Honey Badger Algorithm (EHBA) comes into play. This technique is particularly effective in dealing with complex high-dimensional datasets. By simulating the aggressive foraging behavior of honey badgers, the EHBA can navigate through the feature space efficiently, avoiding overfitting while selecting the most relevant features.

The EHBA balances exploration and exploitation, optimizing feature subsets and thus improving classification performance. Its robustness is especially beneficial in HAR applications, where data is often noisy and may contain redundant features. The procedure emphasizes selecting top features while minimizing overfitting and complexity, thereby enhancing the model’s predictive capabilities.

Initialization and Objective Function

The EHBA’s initialization is often governed by a Lévy flight model, which sets constraints such as the maximum feature size and the number of iterations. Each candidate solution is initialized as follows:

$$

ad_{i} = lrb_{i} + random_{1} \times (urb_{i} - lrb_{i})

$$

In this expression, $ad_{i}$ is the position of the $i$-th candidate solution, $random_{1}$ is a randomly generated number, and $urb_{i}$ and $lrb_{i}$ are the upper and lower bounds of the search space.
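The initialization rule above can be sketched in a few lines of NumPy. This is only an illustration: the function name `init_population`, the population size, the eight-dimensional feature space, and the choice of drawing the random factor uniformly from [0, 1) are assumptions, since the source does not specify them.

```python
import numpy as np

def init_population(n_agents, lower, upper, seed=0):
    """Draw n_agents candidate positions between the lower and upper bounds."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # Assumption: the random factor is uniform in [0, 1), one draw per dimension.
    random_1 = rng.random((n_agents, lower.size))
    # position = lower bound + random factor * (upper bound - lower bound)
    return lower + random_1 * (upper - lower)

# Hypothetical setup: 5 candidates over 8 features, each coordinate in [0, 1].
population = init_population(n_agents=5, lower=np.zeros(8), upper=np.ones(8))
print(population.shape)
```

In a feature-selection setting, each row would later be mapped to a feature subset (for example by thresholding each coordinate), but that mapping is not described in the source and is left out here.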

Furthermore, dual fitness functions (FF) are used to select the optimum features. The first of these minimizes the classification error:

$$

\min(FF_{1}) = \left(\frac{1}{mi} \sum_{f=1}^{mi} \frac{MI_{error}}{MI_{AI}}\right) \times 100

$$

This strategy effectively enhances the model’s capability to identify critical features for accurate classification.
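The source does not define the symbols in this fitness function, so the following sketch rests on one plausible reading: the numerator is the number of misclassified instances and the denominator is the total number of instances in each of the `mi` evaluation runs, giving a mean error rate in percent. The function name `error_rate_fitness` and the example counts are hypothetical.

```python
import numpy as np

def error_rate_fitness(misclassified, total):
    """Mean percentage of misclassified instances across evaluation runs.

    Assumption: one (misclassified, total) pair per run; lower is better.
    """
    misclassified = np.asarray(misclassified, dtype=float)
    total = np.asarray(total, dtype=float)
    return float(np.mean(misclassified / total) * 100.0)

# Hypothetical counts from three evaluation runs of one candidate feature subset.
fitness = error_rate_fitness([3, 5, 2], [100, 100, 100])
print(fitness)
```

Under this reading, a candidate feature subset with a lower value is preferred, which is consistent with the stated goal of minimal classification error.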

DBN-based Classification Process

Following feature selection, the Deep Belief Network (DBN) is employed for the classification of human activities. The DBN stands out due to its capacity to model complex hierarchical features from large, high-dimensional datasets. Comprising multiple layers of Restricted Boltzmann Machines (RBMs), the DBN adeptly learns feature representations—often without extensive feature engineering.

This capability is particularly valuable in HAR tasks, where data can be noisy and unstructured. The architecture of DBN allows for the discovery of latent features, pertinent for enhancing classification accuracy. It also adapts well to the volume of real-time data generated from IoT sensors in smart environments.

DBNs owe much of their performance to fine-tuning: backpropagation adjusts the weights and helps decrease overfitting. The joint probability of the input (visible) layer $v$ and the hidden layer $h$ is defined through the energy function $E(v,h)$ as follows:

$$

P(v, h) = \frac{e^{-E(v,h)}}{\sum_{v,h} e^{-E(v,h)}}

$$

The denominator normalizes over all configurations of $v$ and $h$. This distribution underlies the training of each RBM layer in the DBN.
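As an illustration of this probability, the sketch below enumerates every state of a very small RBM and normalizes the resulting weights. The source does not give the form of the energy, so the standard binary RBM energy (negative bias terms plus the negative visible-weight-hidden interaction) is assumed here; the layer sizes, random weights, and function names are hypothetical. Brute-force enumeration is only feasible for toy models.

```python
import itertools
import numpy as np

def energy(v, h, W, a, b):
    """Assumed standard binary RBM energy: -a.v - b.h - v.W.h"""
    return -(a @ v) - (b @ h) - (v @ W @ h)

def joint_distribution(W, a, b):
    """Return all (v, h) states and their joint probabilities."""
    n_visible, n_hidden = W.shape
    states = [(np.array(v), np.array(h))
              for v in itertools.product([0, 1], repeat=n_visible)
              for h in itertools.product([0, 1], repeat=n_hidden)]
    unnormalized = np.array([np.exp(-energy(v, h, W, a, b)) for v, h in states])
    # The denominator sums over every configuration of v and h.
    partition = unnormalized.sum()
    return states, unnormalized / partition

rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(3, 2))  # 3 visible units, 2 hidden units
a = np.zeros(3)                         # visible biases
b = np.zeros(2)                         # hidden biases
states, probs = joint_distribution(W, a, b)
print(len(states), probs.sum())
```

In practice the normalizing sum is intractable for realistic layer sizes, which is why RBMs are usually trained with approximations such as contrastive divergence rather than by evaluating this probability directly.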

Performance Validation

The robust performance of the IDLTHAR-PDST approach has been validated using various datasets, underscoring its effectiveness in accurately detecting and identifying multiple classes of human activities. The confusion matrices illustrate successful classification of instances from the datasets employed.
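For readers unfamiliar with how a multi-class confusion matrix turns into summary scores, here is a minimal sketch. The three-class matrix is invented for illustration and is not data from the study; the macro-averaging scheme and the function name `macro_metrics` are likewise assumptions, as the source does not state how its averages were computed.

```python
import numpy as np

def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1.

    Rows are true classes, columns are predicted classes.
    Assumes every class is both present and predicted at least once.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class, but actually another
    fn = cm.sum(axis=1) - tp  # belongs to the class, but predicted as another
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": tp.sum() / cm.sum(),
        "precision": precision.mean(),
        "recall": recall.mean(),
        "f1": f1.mean(),
    }

# Made-up confusion matrix for three activities (e.g. walking, sitting, standing).
cm = np.array([[48, 1, 1],
               [2, 46, 2],
               [0, 3, 47]])
metrics = macro_metrics(cm)
print(metrics)
```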

Key metrics, including accuracy, precision, recall, and F1 measure, have been reported, and they indicate that the IDLTHAR-PDST approach outperforms existing methods. For instance, with an 80:20 training-to-testing split, the IDLTHAR-PDST method achieved the following average metrics:

- Accuracy: 98.75%

- Precision: 96.24%

- Recall: 96.24%

- F1 Measure: 96.24%

These findings demonstrate the technique’s potential to advance human activity recognition and to support its integration into smart systems built on the IoT-Edge-Cloud ecosystem.

Through these methodologies, the IDLTHAR-PDST technique opens up new avenues for development in human activity recognition, embodying the seamless interaction of sensor technology in our increasingly interconnected world.