Set Up and Validate a Distributed Training Cluster on Amazon EKS with AWS Deep Learning Containers

Training advanced machine learning models demands significant computational resources, particularly when working with large datasets and complex algorithms. Amazon Elastic Kubernetes Service (EKS) allows organizations to efficiently manage these resources, leveraging AWS Deep Learning Containers (DLCs) for optimized performance. This article explores the vital aspects of setting up and validating a distributed training cluster using EKS and AWS DLCs.

Core Concepts of AWS EKS and Deep Learning Containers

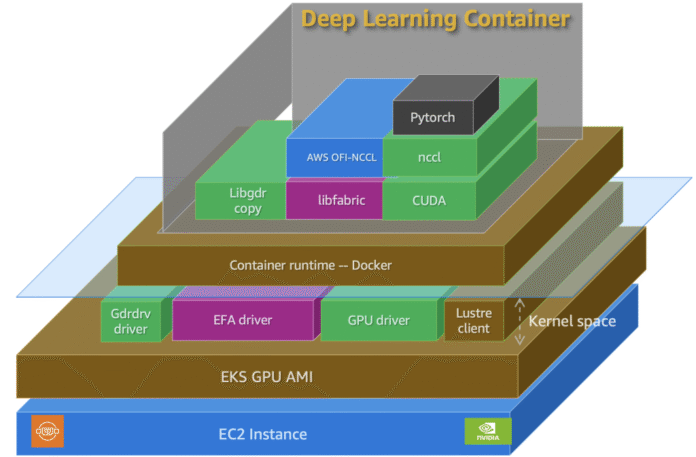

Amazon EKS is a managed service that simplifies deploying, managing, and scaling Kubernetes clusters. Kubernetes is an open-source platform for automating the deployment, scaling, and operation of application containers, making it well suited to distributed computing tasks. AWS Deep Learning Containers, in turn, are Docker images preconfigured with machine learning frameworks such as TensorFlow and PyTorch.

Using EKS with DLCs significantly reduces the time and complexity involved in launching training jobs. AWS’s architecture provides reliable scaling and optimized resource allocation, which is critical for demanding workloads such as training large language models or other deep learning applications.

Step-by-Step Process: Building Your Cluster

The foundational steps to set up a distributed training cluster using Amazon EKS and DLCs include:

- Creating a Docker Image with Dependencies: Start by building a Docker image that contains your required libraries. AWS DLCs are optimized for use with popular ML frameworks, which simplifies this step.
- Launching the EKS Cluster: Define your EKS cluster in a YAML configuration file that details the necessary infrastructure components, such as node types and storage options.
- Installing Necessary Plugins: Add the required plugins, such as the NVIDIA device plugin for GPU access or the Elastic Fabric Adapter (EFA) plugin for enhanced network capabilities.
- Running Health Checks: Verify that your nodes are ready and that the software components are configured correctly.
- Performing a Test Training Job: Run a simple training workload to validate the entire setup before deploying larger jobs.

An example configuration might involve using a combination of node groups optimized for system management and GPU workloads. For instance, deploying a c5.2xlarge node group for system pods alongside a p4d.24xlarge group for high-performance training.
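The two-node-group layout described above can be sketched as a small Python script that builds an eksctl-style `ClusterConfig` and prints it as JSON (which is also valid YAML). This is a minimal sketch, not a complete cluster definition: the cluster name, region, Availability Zone, and node counts are hypothetical placeholders, and a real configuration would likely need more fields (IAM, storage, Kubernetes version) than are shown here.

```python
import json


def build_cluster_config(name, region, gpu_zone, gpu_nodes=2):
    """Return an eksctl-style ClusterConfig as a plain dict.

    All argument values are illustrative; adjust them to your account.
    """
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        "managedNodeGroups": [
            {
                # Small CPU group for system pods.
                "name": "system",
                "instanceType": "c5.2xlarge",
                "desiredCapacity": 1,
            },
            {
                # GPU group for training, pinned to one zone with EFA on.
                "name": "gpu-training",
                "instanceType": "p4d.24xlarge",
                "desiredCapacity": gpu_nodes,
                "availabilityZones": [gpu_zone],
                "efaEnabled": True,
            },
        ],
    }


# Hypothetical name, region, and zone, used only for illustration.
config = build_cluster_config("dlc-training", "us-west-2", "us-west-2a")
print(json.dumps(config, indent=2))
```

Saving the printed output to a file and passing it to `eksctl create cluster -f <file>` is one way to use it, assuming your eksctl version accepts the fields shown.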

Key Components of Your EKS Cluster

Setting up a successful training cluster revolves around several critical components:

- Node Types: Choose the right EC2 instances. G instances are suitable for light workloads, while P instances (like p4d.24xlarge) are designed for powerful distributed training.
- Networking: Setting up high-bandwidth networking using EFA can significantly improve communication speeds between nodes.
- Persistent Storage: Utilize Amazon EBS for flexible storage options or FSx for Lustre for high-performance computing environments.
- Monitoring Tools: Tools like Amazon CloudWatch are essential for observing training jobs and identifying bottlenecks.

For example, an organization training large models on EKS might enable EFA on its P instances so that communication between nodes does not become the bottleneck during distributed training.
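To show how these components meet in a workload, the sketch below builds a Kubernetes Pod manifest that requests GPUs and EFA devices and mounts shared storage. It assumes the NVIDIA device plugin and the EFA device plugin are installed, since they are what expose the `nvidia.com/gpu` and `vpc.amazonaws.com/efa` resource names. The defaults of 8 GPUs and 4 EFA devices correspond to a p4d.24xlarge node. The image URI, pod name, and claim name `fsx-claim` are hypothetical placeholders.

```python
import json


def training_pod_spec(image, gpus=8, efa_devices=4, claim_name="fsx-claim"):
    """Build a Pod manifest that requests GPUs, EFA devices, and shared storage."""
    limits = {"nvidia.com/gpu": gpus, "vpc.amazonaws.com/efa": efa_devices}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "train-worker"},  # hypothetical pod name
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # Extended resources use equal requests and limits.
                    "resources": {"limits": limits, "requests": dict(limits)},
                    "volumeMounts": [
                        {"name": "shared-data", "mountPath": "/data"}
                    ],
                }
            ],
            "volumes": [
                {
                    "name": "shared-data",
                    # Assumes a PersistentVolumeClaim with this name exists,
                    # for example one backed by FSx for Lustre.
                    "persistentVolumeClaim": {"claimName": claim_name},
                }
            ],
        },
    }


# "<dlc-image-uri>" is a placeholder, not a real image reference.
pod = training_pod_spec("<dlc-image-uri>")
print(json.dumps(pod, indent=2))
```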

Common Pitfalls and How to Avoid Them

Common pitfalls when setting up distributed training clusters include:

- Misconfigurations: Incorrect settings in YAML files can lead to deployment failures. Carefully validate every element of your configuration.
- Resource Limitations: Insufficient compute or storage capacity can halt training jobs. Always check AWS service quotas and ensure your account has enough resources allocated.
- Plugin Incompatibility: Using outdated or incompatible plugins can lead to errors during job execution. Regularly update and verify each plugin’s compatibility with your training framework.

For instance, if the EFA drivers are not installed correctly, communication between nodes can fall back to slower networking paths and reduce training throughput.
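A quick pre-flight check can catch some misconfigurations before anything is deployed. The function below is a minimal sketch that inspects an eksctl-style configuration already loaded into a Python dict. The specific rules are assumptions chosen for illustration, not an official validation schema; in particular, the single-zone rule reflects the common practice of keeping EFA-enabled nodes in one Availability Zone.

```python
def find_config_problems(config):
    """Return a list of human-readable problems found in a cluster config dict."""
    problems = []

    # Top-level keys an eksctl-style config is expected to carry.
    for key in ("apiVersion", "kind", "metadata"):
        if key not in config:
            problems.append(f"missing top-level key: {key}")

    groups = list(config.get("managedNodeGroups", []))
    groups += list(config.get("nodeGroups", []))
    if not groups:
        problems.append("no node groups defined")

    for group in groups:
        name = group.get("name", "<unnamed>")
        if "instanceType" not in group:
            problems.append(f"{name}: instanceType is not set")
        # Illustrative rule: EFA groups should pin exactly one zone.
        zones = group.get("availabilityZones", [])
        if group.get("efaEnabled") and len(zones) != 1:
            problems.append(
                f"{name}: EFA node groups should pin exactly one availability zone"
            )
    return problems


# Deliberately broken example config to show the kind of report produced.
broken = {
    "kind": "ClusterConfig",
    "managedNodeGroups": [
        {"name": "gpu", "efaEnabled": True, "availabilityZones": ["a", "b"]}
    ],
}
for problem in find_config_problems(broken):
    print(problem)
```

A check like this does not replace validating against AWS service quotas or plugin compatibility; it only guards against structural mistakes in the file itself.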

Tools and Metrics in Practice

When deploying and verifying your cluster, a host of tools and metrics play a role:

- nvidia-smi: Monitor the performance and health of your GPUs. This tool gives immediate insight into memory usage, driver versions, and GPU load.
- kubectl: The Kubernetes command-line tool, used to deploy applications and to inspect and manage cluster resources.
- Metrics Server: Collects CPU and memory usage for nodes and pods, which helps you allocate resources efficiently.
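As an illustration of using these tools programmatically, the sketch below parses the CSV output of an `nvidia-smi --query-gpu` call into a list of dicts. The sample text is made up for demonstration and is not output from a real node; on a GPU node you would run the command returned by `nvidia_smi_command()` (for example through `kubectl exec`) and feed its output to the parser.

```python
import csv
import io

# Fields requested from nvidia-smi, in the order they will appear per row.
QUERY_FIELDS = ["index", "name", "memory.used", "memory.total", "utilization.gpu"]


def nvidia_smi_command():
    """Return the nvidia-smi invocation whose output parse_gpu_report expects."""
    return [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]


def parse_gpu_report(text):
    """Parse CSV rows (no header, no units) into one dict per GPU."""
    gpus = []
    for row in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not row:
            continue  # skip blank lines
        index, name, used, total, util = [cell.strip() for cell in row]
        gpus.append(
            {
                "index": int(index),
                "name": name,
                "memory_used_mib": int(used),
                "memory_total_mib": int(total),
                "utilization_pct": int(util),
            }
        )
    return gpus


# Illustrative sample only; real values depend on the node and workload.
SAMPLE = (
    "0, NVIDIA A100-SXM4-40GB, 1024, 40960, 12\n"
    "1, NVIDIA A100-SXM4-40GB, 0, 40960, 0\n"
)

for gpu in parse_gpu_report(SAMPLE):
    print(gpu["index"], gpu["name"], f'{gpu["utilization_pct"]}%')
```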

Companies like Netflix and Airbnb have adopted similar infrastructures to optimize the performance of their machine learning models, using EKS to handle complex workloads efficiently.

Variations and Alternatives

While AWS EKS with DLC is a robust option, there are alternatives:

- Self-Managed Kubernetes: For teams with specific requirements or existing Kubernetes expertise, running Kubernetes independently offers flexibility but increases operational overhead.
- Other Managed Services: Consider alternatives like Google Kubernetes Engine (GKE) or Azure Kubernetes Service (AKS) if your organization has a multi-cloud strategy.

Deciding which path to choose should depend on your team’s resource capabilities, expertise in managing cloud infrastructure, and specific project requirements.

FAQ

What are AWS Deep Learning Containers?

AWS Deep Learning Containers are Docker images optimized for machine learning tasks. They come pre-installed with popular ML frameworks and necessary system libraries, simplifying the setup process.

How can I check if my EKS cluster is healthy?

You can verify the health of your EKS cluster by running several commands using kubectl, such as checking the status of nodes with `kubectl get nodes` and monitoring pod statuses with `kubectl get pods -n kube-system`. Ensuring that all nodes are in Ready status and pods are Running is essential for healthy cluster operations.
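The same node check can be automated. The sketch below assumes you have captured the output of `kubectl get nodes -o json` and looks for nodes whose `Ready` condition is not `True`. The sample document is a hand-written, heavily trimmed stand-in for real output, and the node names are hypothetical.

```python
import json


def not_ready_nodes(node_list):
    """Return names of nodes that do not report a Ready condition of "True"."""
    bad = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            bad.append(node["metadata"]["name"])
    return bad


# Trimmed, hand-written sample in the shape of `kubectl get nodes -o json`.
SAMPLE_JSON = """
{
  "items": [
    {"metadata": {"name": "node-a"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
    {"metadata": {"name": "node-b"},
     "status": {"conditions": [{"type": "Ready", "status": "False"}]}}
  ]
}
"""

unhealthy = not_ready_nodes(json.loads(SAMPLE_JSON))
print("Nodes not ready:", unhealthy)
```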

What should I do if I face network-related errors?

If you experience network-related issues, ensure that the Elastic Fabric Adapter (EFA) is properly configured and running. Running NCCL tests can help you identify if communication issues exist among nodes. Checking the configuration files and confirming plugin compatibility will also be beneficial.
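When reading NCCL all-reduce test results, it can help to know how the reported bus bandwidth relates to message size and elapsed time. The sketch below follows the convention used by the nccl-tests project for all-reduce: algorithm bandwidth is size divided by time, and bus bandwidth scales it by 2(n-1)/n for n ranks. The numbers in the example are invented for illustration and are not measurements or targets.

```python
def allreduce_bus_bandwidth(size_bytes, seconds, ranks):
    """Return all-reduce bus bandwidth in GB/s (1 GB = 1e9 bytes)."""
    if ranks < 2:
        raise ValueError("all-reduce bandwidth needs at least 2 ranks")
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    # Algorithm bandwidth: bytes moved per second from the caller's view.
    algbw = size_bytes / seconds / 1e9
    # Correction factor for all-reduce across `ranks` participants.
    return algbw * 2 * (ranks - 1) / ranks


# Invented example: 1 GB reduced in 20 ms across 16 ranks.
busbw = allreduce_bus_bandwidth(1e9, 0.02, 16)
print(f"bus bandwidth: {busbw:.2f} GB/s")
```

A value far below what your instance type's networking should sustain suggests that traffic is not using EFA as intended, which is a cue to recheck the driver and plugin setup.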

Can I use EKS for inference as well as training?

Yes, Amazon EKS can be used for both distributed training and inference. However, it’s crucial to configure the instances optimally for their purpose. For inference, lighter instances may suffice, while training often requires high-performance GPU instances.

In summary, setting up a distributed training cluster on Amazon EKS with AWS Deep Learning Containers embodies a systematic approach that capitalizes on Kubernetes’ scalability and the optimized performance of AWS’s containerized workloads. By adhering to this structured setup and proactively addressing potential pitfalls, teams can streamline their machine learning operations and shift focus toward maximizing model performance and innovation.