Understanding the Importance of Datasets in Music Melody Generation

Introduction to Dataset Collection

In AI-based music generation, the datasets used largely determine how effective and how general a proposed algorithm is. This article covers the two large-scale public MIDI datasets adopted in a recent study on melody generation models, along with the study’s preprocessing workflow, experimental setup, and evaluation.

The LAKH MIDI v0.1 Dataset

The LAKH MIDI v0.1 dataset serves as the primary training data in the study and contains over 170,000 MIDI files. Its breadth of melodic, harmonic, and rhythmic material makes it a good foundation for a model that has to learn the conventions of music.

Data Preprocessing

During the data preprocessing phase, the study keeps only the piano music MIDI files from the LAKH MIDI dataset and discards the rest. Piano pieces tend to have clearer melodic structures, which makes them suitable as basic training material for melody generation models and lets the model focus on straightforward melodic attributes.

Supplementing with the MuseScore Dataset

After filtering, the LAKH MIDI data consists predominantly of piano music. To offset this limitation, the study introduces a second dataset, the MuseScore dataset. Gathered from the online sheet music community MuseScore, it covers a diverse range of instruments: classical orchestral music, modern band instruments such as guitar and drums, and various solo instruments. This makes it a useful test bed for the model’s ability to generate melodies beyond the piano.

Diverse Instrumental Coverage

The advantage of the MuseScore dataset lies in its instrumental variety, which makes it possible to evaluate how general and effective the model is when generating melodies that are not piano-centric. This is critical for testing the model’s adaptability across different instrumental configurations.

Unified Preprocessing Workflow

A single preprocessing workflow is applied to both datasets so that the input data fed into the models is consistent.

1. Instrument Track Filtering

For the LAKH MIDI dataset, metadata is used to select the MIDI files that have the piano as the primary instrument. For the MuseScore dataset, heuristic rules isolate the melodic track: among tracks played by instruments such as the violin or flute, the one with the most notes and the widest pitch range is selected.
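The MuseScore heuristic can be sketched roughly as follows. This is an illustration under stated assumptions, not the study’s code: the `Track` class, the `MELODIC_PROGRAMS` set, and the choice to rank by note count first and pitch range second are all hypothetical, since the study does not say how the two criteria are combined.

```python
from dataclasses import dataclass

# Assumed set of General MIDI program numbers treated as melodic leads
# (0-based: 40 = violin, 73 = flute). The study does not list its exact set.
MELODIC_PROGRAMS = {40, 73}

@dataclass
class Track:
    program: int   # General MIDI program number (0-based)
    pitches: list  # MIDI note numbers of every note in the track

def pick_melody_track(tracks):
    """Return the candidate with the most notes, breaking ties by pitch range."""
    candidates = [t for t in tracks if t.program in MELODIC_PROGRAMS and t.pitches]
    if not candidates:
        return None  # no melodic instrument found in this file
    return max(
        candidates,
        key=lambda t: (len(t.pitches), max(t.pitches) - min(t.pitches)),
    )
```

Files for which the function returns `None` would presumably be skipped or handled by a fallback rule.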

2. Note Information Extraction

Using Python’s mido library, each MIDI file is parsed to extract four core attributes of each note: pitch (MIDI note number), velocity, start time, and duration. These attributes form the basis of the model’s understanding of musical elements.
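A minimal sketch of the note-pairing logic is shown below. It assumes message objects that expose `.type`, `.time` (delta time in ticks), `.note`, and `.velocity`, which is how mido represents the messages inside a track. The function name `extract_notes` is hypothetical, and MIDI channels are ignored for simplicity.

```python
from types import SimpleNamespace

def extract_notes(messages):
    """Pair note_on/note_off events into (pitch, velocity, start, duration) tuples.

    Times are in ticks. `messages` is any iterable of mido-style messages.
    """
    notes, active, now = [], {}, 0
    for msg in messages:
        now += msg.time  # accumulate delta ticks into absolute ticks
        if msg.type == "note_on" and msg.velocity > 0:
            active[msg.note] = (now, msg.velocity)
        elif msg.type in ("note_on", "note_off") and msg.note in active:
            # A note_on with velocity 0 conventionally acts as a note_off.
            start, velocity = active.pop(msg.note)
            notes.append((msg.note, velocity, start, now - start))
    return sorted(notes, key=lambda n: n[2])

# Stand-in messages so the example runs without a MIDI file.
demo = [
    SimpleNamespace(type="note_on", note=60, velocity=90, time=0),
    SimpleNamespace(type="note_off", note=60, velocity=0, time=480),
]
print(extract_notes(demo))  # [(60, 90, 0, 480)]
```

With mido installed, the same function should accept a real track, for example one element of `mido.MidiFile(path).tracks`.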

3. Time Quantization and Serialization

To standardize rhythm information, the start time and duration of each note are quantized to a resolution of one 16th note. All note events are thereby aligned to a regular grid, which makes rhythmic patterns easier to analyze and easier for the model to learn.
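As an illustration, 16th-note quantization of tick values might look like the sketch below. It assumes times measured in MIDI ticks with a known ticks-per-quarter-note resolution. The function names and the rule that a quantized duration is at least one step are assumptions, not details from the study.

```python
def quantize(ticks, ticks_per_beat, steps_per_beat=4):
    """Snap a tick value to the nearest 16th-note step (4 steps per quarter note)."""
    step = ticks_per_beat / steps_per_beat
    return int(ticks / step + 0.5)  # round half up, avoiding banker's rounding

def quantize_note(start, duration, ticks_per_beat):
    """Return (start_step, duration_steps), keeping every note at least 1 step long."""
    return (
        quantize(start, ticks_per_beat),
        max(1, quantize(duration, ticks_per_beat)),
    )
```

At 480 ticks per quarter note, one 16th-note step is 120 ticks, so a note starting at tick 485 lands on step 4.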

4. Feature Engineering and Normalization

To eliminate bias towards specific musical keys, each melody is transposed to C major (for major-key pieces) or to A minor, its relative minor (for minor-key pieces). Each note is then encoded as a numerical vector containing its normalized pitch, its quantized duration, and the time interval since the previous note.
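The sketch below shows one way the transposition and encoding could work, assuming the key of each melody (tonic pitch class and mode) is already known. Several details are assumptions made for illustration: dividing pitch by 127 as the normalization, measuring the interval from onset to onset, and choosing the smaller of the two possible transposition directions.

```python
def transposition_shift(tonic_pc, mode):
    """Semitone shift that moves a key to C major or A minor (pitch class 0 or 9)."""
    target = 0 if mode == "major" else 9
    shift = (target - tonic_pc) % 12
    return shift - 12 if shift > 6 else shift  # prefer the smaller move

def encode(notes, tonic_pc, mode):
    """Encode (pitch, start_step, duration_steps) notes, sorted by start.

    Returns one [normalized_pitch, duration_steps, steps_since_previous_onset]
    vector per note.
    """
    shift = transposition_shift(tonic_pc, mode)
    vectors, prev_start = [], None
    for pitch, start, duration in notes:
        gap = 0 if prev_start is None else start - prev_start
        transposed = min(127, max(0, pitch + shift))  # stay inside the MIDI range
        vectors.append([transposed / 127.0, duration, gap])
        prev_start = start
    return vectors
```

For example, a melody in D major (tonic pitch class 2) is shifted down two semitones, and one in E minor (tonic pitch class 4) is shifted up five.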

5. Data Splitting

Finally, the preprocessed sequence data is split 80%/20% into training and test sets. Holding out the test set allows the model’s performance to be measured on data it has not seen during training.
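A seeded 80/20 split can be sketched as follows. The study does not say whether the data is shuffled first or at what granularity it is split, so the shuffling, the seed, and the function name are assumptions.

```python
import random

def split_sequences(sequences, train_fraction=0.8, seed=0):
    """Shuffle a copy of the sequences and split it into (train, test)."""
    shuffled = list(sequences)
    random.Random(seed).shuffle(shuffled)  # local RNG keeps the split reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

If several sequences come from the same piece, splitting by piece rather than by sequence would avoid near-duplicate material appearing on both sides of the split.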

Experimental Environment and Parameter Settings

An efficient experimental environment is needed to obtain reliable results in reasonable time. This study uses a high-performance computing cluster equipped with NVIDIA Tesla V100 GPUs, which accelerate model training and can handle the computational demands of large-scale datasets.

Framework and Training Methodology

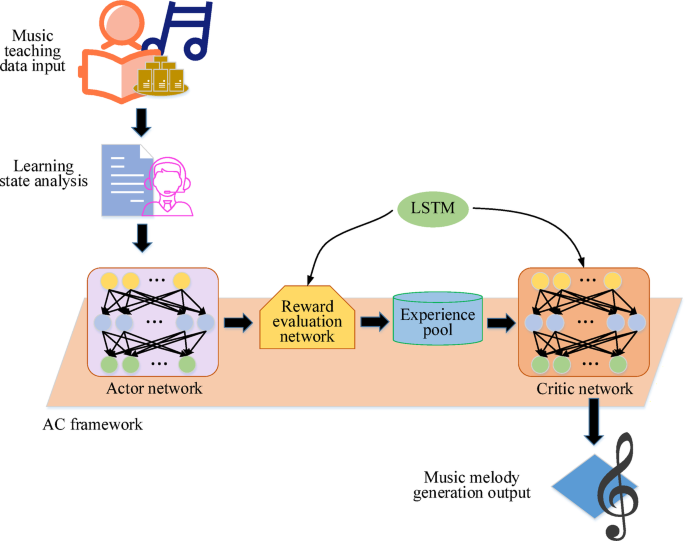

The model is trained with the TensorFlow 2.0 framework, using Keras to build and optimize the neural network. Because the LAKH dataset is large, multi-GPU parallel training is used to reduce training time significantly.

Hyperparameter Tuning

Careful tuning of hyperparameters is crucial for optimal model performance. The study employs a combination of grid search and manual fine-tuning based on validation set performance to determine the best hyperparameters such as learning rate, batch size, and the number of neurons in the hidden layer.
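The grid search part of this procedure can be sketched generically as below. The search space in `GRID` is hypothetical, since the study does not report the values it searched. `evaluate` stands for a caller-supplied function that trains a model with the given settings and returns its validation score.

```python
from itertools import product

def grid_search(grid, evaluate):
    """Try every combination in `grid` and return (best_params, best_score).

    `evaluate(params)` must return a validation score where higher is better.
    """
    best_params, best_score = None, float("-inf")
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical search space, for illustration only.
GRID = {
    "learning_rate": [1e-4, 1e-3],
    "batch_size": [32, 64],
    "hidden_units": [128, 256],
}
```

The best combination found this way would then serve as the starting point for the manual fine-tuning step.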

Performance Evaluation of the AC-MGME Model

The model’s performance is compared against other algorithms, including DQN, MuseNet, and DDPG, using metrics such as accuracy and F1 score.
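For reference, the two metrics can be computed as in the sketch below. It assumes the task is scored as classification over discrete labels (for example, predicted versus reference notes) and uses macro-averaged F1. Both are assumptions, as the study’s exact scoring protocol is not described here.

```python
def accuracy(y_true, y_pred):
    """Fraction of positions where the prediction matches the reference."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every class seen in either list."""
    pairs = list(zip(y_true, y_pred))
    scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)  # F1 = 2TP / (2TP + FP + FN)
    return sum(scores) / len(scores)
```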

Results Comparison

On the LAKH MIDI v0.1 dataset, the AC-MGME model achieved an accuracy of 95.95% and an F1 score of 91.02%. MuseNet performed strongly in the early stages of training, but the AC-MGME model, which relies on an efficient reinforcement learning framework, outperformed it in later training stages.

Statistical Significance Tests

Statistical tests, including a two-sample t-test, indicate that the differences in accuracy and F1 score between the AC-MGME model and the compared algorithms are statistically significant. This supports the claim that AC-MGME performs better at melody generation.
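As an illustration of a two-sample test, the sketch below computes Welch’s t statistic and its approximate degrees of freedom from two lists of per-run scores. Welch’s unequal-variance variant is an assumption, because the study does not say which form of the t-test it used. The function name is hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Return (t statistic, Welch-Satterthwaite degrees of freedom)."""
    va = variance(a) / len(a)  # squared standard error of each sample mean
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df
```

The p-value is then read from a t distribution with `df` degrees of freedom. A statistics package can also compute it directly.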

Generalizability Across Diverse Music Environments

The generalization ability of the AC-MGME model is put to the test on the MuseScore dataset. Despite the dataset’s complexity and diversity, the model achieved an accuracy of 90.15%, indicating robust learning and adaptability to a multi-instrument environment.

Cross-Dataset Performance Analysis

Even in this more challenging setting, the AC-MGME model maintained its lead over the other reinforcement learning models and again outperformed MuseNet in the later training phases. This indicates that its effectiveness extends beyond the piano-dominated LAKH dataset.

Real-World Deployment Potential

An evaluation of post-training inference performance shows that the AC-MGME model runs efficiently both on high-performance servers and on low-power edge devices such as the NVIDIA Jetson Nano. This suggests that it could be deployed broadly, including in real-time music education tools.
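Inference latency on a given device can be measured with a small harness like the one below. This is a generic sketch, not the study’s benchmark. `generate` stands for any zero-argument callable that runs one inference, and the warm-up and run counts are arbitrary defaults.

```python
import time
from statistics import median

def median_latency_ms(generate, n_runs=20, warmup=3):
    """Median wall-clock time of one call to `generate`, in milliseconds."""
    for _ in range(warmup):
        generate()  # warm-up calls are not timed
    timings = []
    for _ in range(n_runs):
        start = time.perf_counter()
        generate()
        timings.append((time.perf_counter() - start) * 1000.0)
    return median(timings)
```

Running the same harness on a server and on an edge device gives directly comparable numbers, provided the model and input are identical.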

User Experience and Subjective Assessment

User feedback collected in a double-blind perception study indicates that the model yields significantly better learning outcomes and melody quality than traditional methods. Participants praised its ability to generate musically coherent and enjoyable melodies, which points to its potential in educational settings.

Ethical Considerations

As such AI technologies see wider use in music education, ethical considerations grow with them. Two risks stand out. First, the model may reproduce existing melodies, which creates a risk of copyright infringement and calls for measures such as plagiarism detection. Second, tools that handle user data need strict data privacy protocols to safeguard user information.
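One simple form of melodic plagiarism check is sketched below: it compares n-grams of pitch intervals, so a transposed copy of a melody is still detected. This is an illustrative technique chosen for the example, not the method used in the study. The function names and the n-gram length are assumptions.

```python
def interval_ngrams(pitches, n=4):
    """Set of n-grams of successive pitch intervals (transposition-invariant)."""
    intervals = [b - a for a, b in zip(pitches, pitches[1:])]
    return {tuple(intervals[i:i + n]) for i in range(len(intervals) - n + 1)}

def overlap_ratio(generated, reference, n=4):
    """Fraction of the generated melody's interval n-grams found in the reference."""
    gen = interval_ngrams(generated, n)
    if not gen:
        return 0.0  # melody too short to form a single n-gram
    return len(gen & interval_ngrams(reference, n)) / len(gen)
```

A generated melody whose ratio against any training melody exceeds a chosen threshold could be flagged for review or regenerated.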

Conclusion

The detailed examination of the datasets, preprocessing techniques, experimental frameworks, and performance evaluations highlights how effective data utilization and technological advancement can enrich the field of music education through innovative AI-driven solutions.