We Are Living in a Pivotal Moment: The Merging of Computer Vision and Language Models

Technology moves fast, but one shift stands out right now: the convergence of two traditionally distinct fields, Computer Vision (CV) and Large Language Models (LLMs). For years these technologies developed largely independently. Now they are being combined into multimodal systems that are reshaping how we interact with machines. This isn’t a distant prospect. It is happening now, and it marks the start of a new era of multimodal AI.

Envisioning the Transformation

Picture this: you snap a photo of your broken washing machine, describe the problem in words, and immediately receive step-by-step repair instructions. Or imagine a doctor uploading an X-ray and getting back not just a suggested finding but also a concise, plain-language summary to review. Scenarios that once seemed far off are moving from research demos toward everyday products, driven by advances in models such as GPT-4V and architectures such as the Vision Transformer.

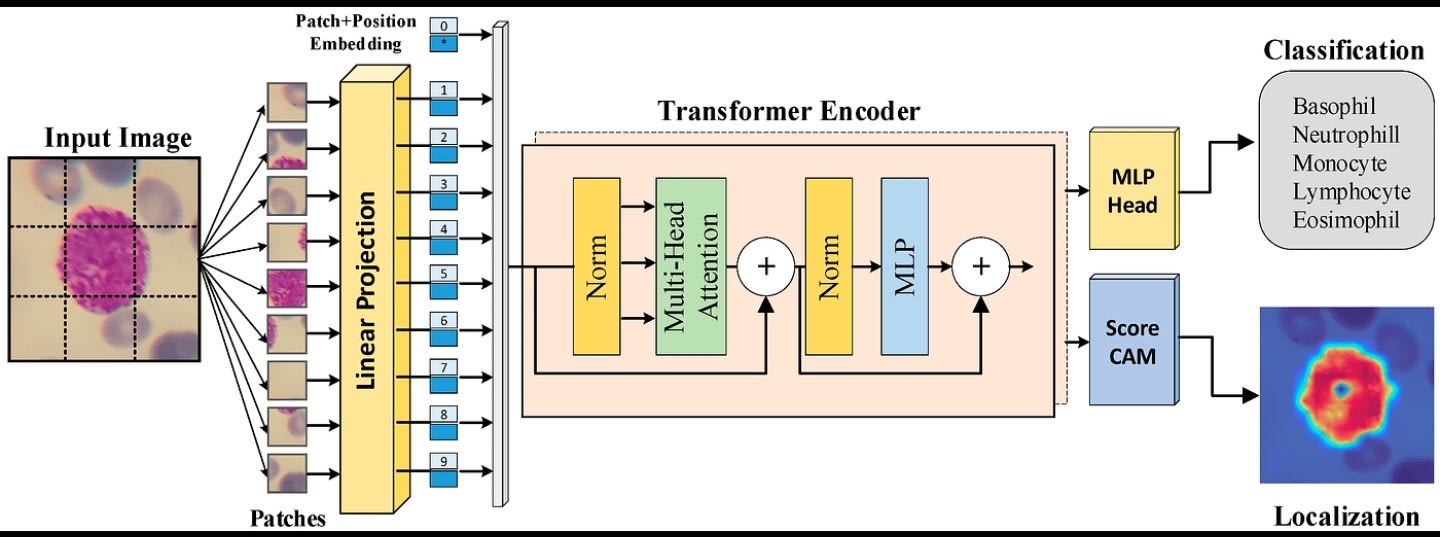

The groundwork for this transformation is twofold. First, Computer Vision has made significant leaps, particularly with the arrival of Vision Transformer (ViT) models. These models match or outperform traditional convolutional neural networks (CNNs) on many tasks, including image classification, object detection, segmentation, and anomaly detection, especially when trained on large datasets. Second, Large Language Models have moved beyond text-only processing: models like GPT-4V and Llama 3.2 Vision can take images as input and generate natural-language answers about them. Together, these advances produce multimodal AI systems that can effectively “see” and “understand,” a substantial shift in how we engage with technology.

The Power of Integration

1. Contextual Understanding

Combining CV and LLMs lets AI interpret visual content and its accompanying text together, which yields a deeper understanding than either could achieve alone. For instance, an AI can not only identify what’s in an image but also infer what the user wants to know about it.

2. Dynamic Interactions

Imagine showing an AI a photo and asking, “What type of bird is this?” The AI identifies the species, shares relevant information, explains its habits, and even suggests ways to support its conservation, all in one coherent answer. A simple query becomes a richer, more complete exchange.

3. Domain Expertise

In specialized fields such as healthcare and robotics, these combined systems show particular promise. They can guide robotic actions or perform intricate tasks like real-time segmentation of medical scans, showing how AI can operate within complex environments.

4. Business Intelligence

Consider a practical application: a security camera equipped with this technology can flag suspected shoplifting and describe the event to store managers in plain language. This kind of actionable intelligence streamlines operations and supports data-driven decision-making.

Real-World Applications

In Healthcare

AI increasingly assists with medical imaging. It can interpret CT and MRI scans and also draft diagnostic summaries for clinicians to review. This puts key information at doctors’ fingertips and can improve patient care.

In Customer Service

Imagine this: you send a blurry photo of a receipt. The AI extracts the relevant details and suggests the steps for a refund, all through a friendly chat interface. This kind of seamless experience improves customer satisfaction and operational efficiency.

In Robotics

Vision-Language-Action (VLA) models such as RT-2 and Helix let robots interpret natural-language commands and act on them. Picture telling a robot to “pick up the red mug” and watching it carry out the task.

In Manufacturing

With cameras monitoring production lines, AI can detect anomalies and proactively alert engineers through dashboards or voice alerts, reducing downtime and improving quality control.

In Education

Multimodal AI is transforming tutoring. It can analyze photos of homework and provide explanations and corrections in natural language, making learning more engaging and effective.

In Accessibility

For visually impaired users, AI can describe signs and surroundings aloud, announcing, for example, “A STOP sign ahead” or “Stairs approaching.” This supports greater independence and mobility.

Why 2025 is Set to be a Breakthrough Year

As we look toward the future, several trends indicate that 2025 could mark a pivotal point in the evolution of these technologies:

- Cheaper Sensors Everywhere: Cameras and imaging devices, from doorbell cameras to medical scanners, are proliferating. As a result, the volume of visual data available to train and run AI systems is growing rapidly.

- Transformer Architecture Impact: Vision Transformer models split an image into fixed-size patches and process them much as a language model processes the words in a sentence. This design makes them versatile and a natural fit for pairing with transformer-based LLMs, and they now rival or surpass CNNs on many vision benchmarks.

- Model Merging Innovations: New techniques, some of which require little or no additional training, make it possible to combine vision specialists and LLMs into cohesive systems with stronger overall performance.

- Surge in Research: A heightened focus on multimodal systems is expected at conferences like CVPR 2025, showcasing new papers and models that will pave the way for future advancements.
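The following sketch makes the “patches as words” idea concrete. It is a minimal Python example that assumes the image is stored as nested lists indexed as image[row][col][channel]. The function name `patchify` is a hypothetical helper and is not part of any library. It cuts the image into non-overlapping square patches and flattens each patch into a vector. In a standard ViT, each of these vectors would then be multiplied by a learned projection and combined with a position embedding before entering the transformer. This sketch leaves those steps out.

```python
from typing import List

# Hypothetical representation: image[row][col][channel] -> pixel value
Image = List[List[List[float]]]


def patchify(image: Image, patch_size: int) -> List[List[float]]:
    """Split an H x W x C image into non-overlapping patch_size x patch_size
    patches and flatten each patch into one vector, in row-major patch order.

    Each returned vector plays the role of one "word" in the sequence that a
    Vision Transformer would process (before projection and position embedding,
    which this sketch omits).
    """
    height = len(image)
    width = len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")

    patches: List[List[float]] = []
    for top in range(0, height, patch_size):           # walk patch rows
        for left in range(0, width, patch_size):       # walk patch columns
            vector: List[float] = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    vector.extend(image[r][c])         # append every channel of this pixel
            patches.append(vector)
    return patches


if __name__ == "__main__":
    # A 224 x 224 RGB image of zeros, split into 16 x 16 patches
    img = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
    tokens = patchify(img, 16)
    print(len(tokens), len(tokens[0]))  # 196 768
```

With 16×16 patches, as in the ViT-B/16 configuration, a 224×224 RGB image becomes a sequence of 196 “visual words.” Each one is a vector of 768 values (16 × 16 × 3).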

What You Can Do Now

Even if you’re not a developer or technical expert, there are several ways to engage with these advancements:

- Apply Off-the-Shelf Tools: Use accessible applications such as ChatGPT with GPT-4V, Google Lens, or Microsoft’s Seeing AI to extract text from images, generate alt-text descriptions, or summarize visual content.

- Build Small Automations: Use tools like Zapier or Make.com to automate workflows that involve image recognition and data extraction, streamlining everyday tasks.

- Explore Model Merging Libraries: If you’re technically inclined, experiment with open-source projects like VisionFuse, which aim to merge vision modules with LLMs without extensive retraining.

- Experiment with Robotics Kits: Try hands-on learning with beginner VLA platforms or Raspberry Pi setups that let you instruct robots by voice. It makes a rewarding hobby or educational project.

- Join the Conversation: Stay informed about developments through resources such as CVPR materials and AI reports from organizations like LDV Capital.
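If you want to explore model merging, the simplest version of the idea is linear interpolation of the weights of two models that share an architecture, such as two fine-tuned variants of the same base model. The sketch below illustrates that generic technique under stated assumptions. It is not how VisionFuse or any specific library works. Merging a vision module into an LLM with a different architecture requires extra machinery, such as a projection layer connecting the two, and this sketch does not show it. The weights use a hypothetical representation: a dictionary that maps parameter names to flattened lists of floats. The name `linear_merge` is also illustrative.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flattened parameter values
Weights = Dict[str, List[float]]


def linear_merge(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Return alpha * a + (1 - alpha) * b for every parameter.

    Both models must have identical parameter names and sizes, which only
    holds when they share an architecture.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if a.keys() != b.keys():
        raise ValueError("models must have the same parameter names")

    merged: Weights = {}
    for name, values_a in a.items():
        values_b = b[name]
        if len(values_a) != len(values_b):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # Element-wise weighted average of the two models' values
        merged[name] = [alpha * x + (1.0 - alpha) * y for x, y in zip(values_a, values_b)]
    return merged


if __name__ == "__main__":
    model_a = {"w": [1.0, 2.0], "b": [0.0]}
    model_b = {"w": [3.0, 4.0], "b": [1.0]}
    print(linear_merge(model_a, model_b))  # {'w': [2.0, 3.0], 'b': [0.5]}
```

Setting `alpha` closer to 1.0 keeps the result closer to the first model. Practical toolkits operate on tensors rather than Python lists, but the per-parameter arithmetic in this simple scheme is the same.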

Challenges to Consider

While the integration of CV and LLMs holds significant promise, there are challenges that must be acknowledged:

- Accuracy Concerns: AI “hallucinations” (confident but incorrect descriptions of an image), biases, and mismatches between what an image shows and what the generated text says can undermine the reliability of these systems.

- Privacy Risks: Sensitive data may be exposed, especially in healthcare and personal contexts, which raises ethical and legal concerns.

- Compute and Cost Barriers: Training and deploying multimodal AI systems is resource-intensive, which can make them unaffordable for many organizations.

- Safety and Oversight: In critical applications such as healthcare or robotics, misinterpretations can lead to significant risks, making human review processes vital.

The Path Ahead

The convergence of AI’s ability to see with its ability to reason and communicate in language signals a major shift in technology. Within the next few years, we can anticipate:

- Robotic assistants that respond to verbal commands with contextual awareness in diverse settings like stores and warehouses.

- Legal and medical assistant bots that can visually verify documents or medical slides, articulating findings for users.

- Consumer applications offering real-time visual-to-text narration, enriching experiences for travelers, shoppers, and learners.

These innovations are no longer confined to the realm of science fiction. They are at various stages of experimentation or early rollout, poised to redefine how we interact with our world.