Experimental Design in Evaluating Hybrid CNN-LLaMA2 Architecture for Chinese Text Processing Tasks

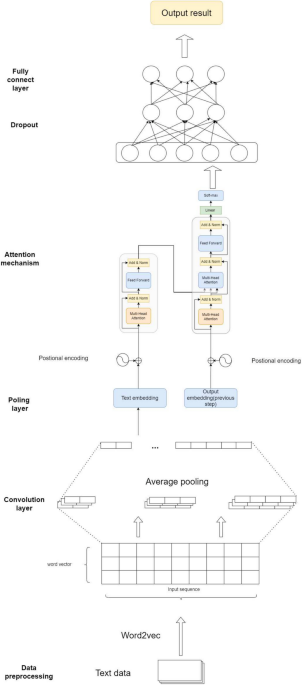

The effectiveness of machine learning models, particularly in natural language processing (NLP), often depends on rigorous experimental design. This study presents a structured evaluation of the hybrid CNN-LLaMA2 architecture, which aims to improve performance across several Chinese text processing tasks. The experiments are organized around three research questions that probe the strengths and practical merits of the hybrid approach.

Research Questions

1. Hybrid Architecture vs. Single-Architecture Approaches

The first question compares the proposed hybrid architecture with LLM-only and CNN-only models on sentiment analysis, named entity recognition (NER), and text classification. By contrasting how each model handles the nuances of Chinese text, we sought to identify which configuration yields the best results.

2. Outperformance of Existing Hybrid Models

The second question asks how far our architecture surpasses established hybrid models and state-of-the-art specialized systems. The comparison tests the robustness of the hybrid design and covers both performance metrics and practical implications for real-world applications.

3. Contributions of Architectural Components

Finally, the third question isolates the contribution of each component (LLaMA2, the CNN, and the attention mechanism) to the overall efficacy of the system. Understanding what each piece adds helps identify areas for future improvement and optimization.

Experimental Environment

All evaluations were conducted in a single high-performance computing environment, so that every model was trained and tested under the same conditions. This standardized infrastructure supports reproducibility, which is essential in empirical research, and allows clear, unbiased comparisons among the architectures.

Datasets Utilized

To assess model performance, we utilized three widely recognized Chinese NLP benchmarks:

- ChnSentiCorp: This dataset comprises reviews from various domains, such as hotels and electronics, featuring informal language and subtle sentiment expressions. The average sequence length is about 62 characters, with significant variability in structure and writing style.

- MSRA-NER: A key benchmark for NER that features approximately 74,000 annotated entities across 50,000 sentences. This dataset presents unique challenges including nested entities and context-dependent identification.

- THUCNews: This expansive news corpus covers multiple domains, featuring around 10,000 articles per category across 14 topics. The average article length is 812 characters, emphasizing the significance of domain-specific vocabulary alongside general topical comprehension.

Evaluation Metrics

Our evaluation protocol examined model performance along three dimensions:

- Performance Metrics: Accuracy, precision, recall, F1 score, and AUC, which together give a rounded view of predictive quality.

- Efficiency Metrics: Training speed, memory usage, and computational time, acknowledging the importance of operational efficiency alongside performance.

- Qualitative Analysis: Analysis of error patterns and attention visualizations, used to examine the model’s behavior on more challenging test cases.
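As a rough illustration of how the performance metrics listed above are computed, the following sketch derives accuracy, precision, recall, F1, and AUC for a binary task such as sentiment polarity. The function names and the toy labels are assumptions made for this example; they are not taken from the study's evaluation code.

```python
from collections import Counter


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 with respect to one positive class."""
    counts = Counter()
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            counts["tp"] += 1
        elif p == positive:
            counts["fp"] += 1
        elif t == positive:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    tp, fp, fn, tn = (counts[k] for k in ("tp", "fp", "fn", "tn"))
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / total if total else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(y_true, scores, positive=1):
    """AUC as the probability that a positive example outscores a negative one."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    # Ties count as half a win; this O(n^2) form is fine for small samples.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels, predictions and scores for six reviews.
gold = [1, 0, 1, 1, 0, 0]
pred = [1, 0, 0, 1, 1, 0]
conf = [0.9, 0.2, 0.4, 0.8, 0.6, 0.1]
metrics = binary_metrics(gold, pred)
auc = roc_auc(gold, conf)
```

For the 14-class THUCNews task, the same counts would typically be computed per class and then averaged; the source does not say which averaging scheme was used.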

Baseline Comparisons

For a well-rounded evaluation, we compared our hybrid model against four groups of baselines:

- LLM-only Models: Widely used LLMs, pre-trained on expansive corpora and fine-tuned for specific tasks. While these models excel at global semantic understanding, they often struggle with local feature extraction.

- CNN-only Models: CNN-based architectures, which are proficient at recognizing local patterns such as n-grams. However, they exhibited limitations in modeling long-range dependencies.

- Existing Hybrid Models: Previous hybrid architectures that aim to integrate the strengths of both LLMs and CNNs, included to benchmark the deeper feature integration in our design.

- State-of-the-Art (SOTA) Models: Models recognized as leading approaches in Chinese text processing, tuned for their respective tasks.
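To make the contrast between local and global modeling concrete, the sketch below shows what a single convolutional filter does with a character sequence: it scores every window of k adjacent embeddings and keeps the strongest response. This is a generic illustration in plain Python, with a hypothetical function name and toy two-dimensional embeddings; it is not the CNN used in the study.

```python
def conv1d_max_pool(embeddings, kernel):
    """Apply one width-k filter over a sequence, then max-pool over positions.

    embeddings: list of per-character vectors (lists of floats).
    kernel: list of k weight vectors, one per position in the window.
    Returns (pooled_value, per_window_activations).
    """
    k = len(kernel)
    if len(embeddings) < k:
        raise ValueError("sequence is shorter than the filter width")
    activations = []
    for start in range(len(embeddings) - k + 1):
        window = embeddings[start:start + k]
        # Sum of element-wise products between the filter and the window.
        score = sum(w * x
                    for kernel_vec, emb_vec in zip(kernel, window)
                    for w, x in zip(kernel_vec, emb_vec))
        activations.append(max(0.0, score))  # ReLU
    return max(activations), activations


# Toy example: the filter responds most strongly to the bigram "A then B".
char_a, char_b = [1.0, 0.0], [0.0, 1.0]
sequence = [char_a, char_b, char_b]
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]
pooled, acts = conv1d_max_pool(sequence, bigram_filter)
# pooled == 2.0, acts == [2.0, 1.0]
```

Each activation depends on only k adjacent characters, which is why such filters capture n-gram-like patterns well but need additional machinery to relate distant parts of a sentence.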

Detailed Performance Analysis

Sentiment Analysis – ChnSentiCorp Dataset

For sentiment analysis, the hybrid architecture outperformed both the single-architecture and the hybrid baselines. The proposed model reached an accuracy of 93.2%, a considerable improvement over traditional LLM frameworks, which hovered around 89%. This gain highlights the value of combining local and global information through effective architectural design.

Named Entity Recognition – MSRA-NER Dataset

On the NER task using the MSRA-NER dataset, the proposed hybrid model obtained an F1 score of 96.1%, placing it at the top of the comparison. Here, the hybrid architecture succeeded in recognizing entity boundaries, a challenge that often plagues both CNN-only and LLM-only models.
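NER F1 scores are conventionally computed at the entity level, where a prediction counts only if both its boundaries and its type match the gold annotation. The sketch below shows that computation under the assumption of a flat BIO tagging scheme; the source does not state which scheme or scorer was used, and the function names are hypothetical.

```python
def extract_spans(tags):
    """Return the set of (start, end, type) entity spans in a BIO tag sequence."""
    spans = set()
    start, etype = None, None
    # A trailing "O" sentinel closes an entity that runs to the end.
    for i, tag in enumerate(list(tags) + ["O"]):
        continues = tag.startswith("I-") and etype == tag[2:]
        if start is not None and not continues:
            spans.add((start, i, etype))
            start, etype = None, None
        if tag.startswith("B-"):
            start, etype = i, tag[2:]
    return spans


def entity_f1(gold_tags, pred_tags):
    """Entity-level precision, recall and F1 with exact boundary matching."""
    gold, pred = extract_spans(gold_tags), extract_spans(pred_tags)
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# The prediction ends the location one character early: a boundary error.
gold = ["B-PER", "I-PER", "O", "B-LOC", "I-LOC", "I-LOC", "O"]
pred = ["B-PER", "I-PER", "O", "B-LOC", "I-LOC", "O", "O"]
scores = entity_f1(gold, pred)  # (0.5, 0.5, 0.5)
```

Because matching is exact, a single misplaced boundary costs both a false positive and a false negative. Note also that flat BIO tags cannot represent nested entities, so evaluating nesting would need a span-based annotation instead.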

Text Classification – THUCNews Dataset

Within text classification, the hybrid architecture maintained a competitive edge, achieving an accuracy of 95.4%. These results confirmed that enhanced contextual understanding significantly benefits topic identification, especially where semantic nuance is paramount.

Ablation Study

To disentangle the individual contributions of the architectural components, we conducted an ablation study. The findings reaffirmed the distinct advantage each part brings to the hybrid model: the CNN component provided vital local feature extraction, while LLaMA2 enriched contextual understanding. The attention mechanism proved critical in integrating these features dynamically, adapting to the complexity of the task at hand.
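The source does not specify the form of the attention mechanism, so the following is only one plausible reading of "integrating these features dynamically": score the local (CNN) and global (LLaMA2) feature vectors against a query with scaled dot products, normalize the scores with a softmax, and take the weighted sum. All names here are hypothetical, and the vectors are toy values.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_fuse(local_feat, global_feat, query):
    """Fuse two equal-length feature vectors with scaled dot-product attention.

    Assumed design: the query (for example a learned task vector) decides
    how much weight each branch receives for the current input.
    """
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, local_feat) / scale,
                       dot(query, global_feat) / scale])
    fused = [weights[0] * lo + weights[1] * gl
             for lo, gl in zip(local_feat, global_feat)]
    return fused, weights


# A query that aligns equally with both branches splits the weight evenly.
fused, weights = attention_fuse([1.0, 0.0], [0.0, 1.0], [1.0, 1.0])
# A query aligned with the local branch shifts weight toward it.
_, local_heavy = attention_fuse([1.0, 0.0], [0.0, 1.0], [2.0, 0.0])
```

Under this reading, an ablation of the attention mechanism would amount to replacing the learned weights with a fixed combination such as simple averaging or concatenation.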

Error Analysis and Future Directions

Investigating the model’s errors revealed consistent patterns rooted in the architecture’s design and in the characteristics of the data:

- Sentiment Analysis: Misclassifications often arose with Chinese idiomatic expressions, pointing to an area demanding specialized handling.

- Named Entity Recognition: Boundary detection errors indicated challenges in distinguishing between nested entities, reinforcing the need for enhanced contextual modeling.

- Text Classification: Topic ambiguity remained a pervasive issue, particularly between closely related categories, highlighting areas where the model’s contextual understanding required refinement.
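Error patterns like the topic ambiguity described above are usually surfaced by counting which pairs of labels are confused most often. The sketch below shows one simple way to do that; the function name and the category labels are illustrative assumptions, not results from the study.

```python
from collections import Counter


def top_confusions(y_true, y_pred, n=3):
    """Return the n most frequent (gold, predicted) pairs among the errors."""
    errors = Counter((gold, pred)
                     for gold, pred in zip(y_true, y_pred)
                     if gold != pred)
    return errors.most_common(n)


# Hypothetical gold and predicted topics for five news articles.
gold_topics = ["finance", "stocks", "finance", "sports", "stocks"]
pred_topics = ["stocks", "finance", "stocks", "sports", "stocks"]
worst = top_confusions(gold_topics, pred_topics, n=1)
# worst == [(("finance", "stocks"), 2)]
```

Sorting the errors this way points directly at the category pairs where additional training data or finer-grained features are most likely to help.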

Looking ahead, we propose targeted strategies to enhance model robustness. These include implementing more advanced integration mechanisms, refining pre-processing techniques for Chinese text, and adopting a multi-task learning paradigm to enrich shared representations. These steps aim to build on the hybrid architecture’s strengths while systematically addressing its limitations.

By designing the experiments carefully and analyzing the results against established baselines, we have laid a foundation for understanding and improving hybrid approaches to Chinese text processing. The insights gathered here point the way for future work in this evolving field.