Revolutionary Deep Learning Tool for Tailored Ideological and Political Music Education Resources

Core Concept and Why It Matters

The deep learning model described here recommends ideological and political education (IPE) resources, with a focus on integrating red music into teaching. Red music refers to songs that promote ideological narratives, often tied to historical events and cultural sentiment. The model targets a gap in personalized learning: it surfaces resources that match each learner’s interests while still serving the goals of ideological education. The intended result is a stronger emotional connection between learners and the subject matter.

Key Components of the Model

A few core components drive this model’s efficiency:

- Multimodal Data Integration: The model combines various types of data, including audio files, lyrics, user interaction metrics, and emotional responses. This breadth of information allows for a nuanced understanding of user preferences.

- Hyperparameter Optimization: By carefully tuning parameters such as learning rate and dropout rates, the model enhances its learning efficiency and accuracy in recommending resources.

- Deep Learning Techniques: Techniques like graph neural networks and transformer models are used to analyze complex relationships within the data, ensuring comprehensive feature extraction and recommendation processes.

Together, these components respond to a concrete need in IPE: they make the learning process both more personalized and more engaging.
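To make the hyperparameter tuning step concrete, here is a minimal grid-search sketch over learning rate and dropout. The source does not say which search strategy is used, so grid search is an assumption, and `validation_score` is a hypothetical placeholder for "train the model and return a validation metric".

```python
from itertools import product


def validation_score(learning_rate: float, dropout: float) -> float:
    """Hypothetical stand-in for 'train the model, return a validation score'.

    This toy surface simply peaks at learning_rate=1e-3, dropout=0.3 so the
    search has something to find; a real run would train and evaluate here.
    """
    return 1.0 - abs(learning_rate - 1e-3) * 100 - abs(dropout - 0.3)


def grid_search(learning_rates, dropouts, score_fn):
    """Try every (learning_rate, dropout) pair and keep the best-scoring one."""
    best_params, best_score = None, float("-inf")
    for lr, p in product(learning_rates, dropouts):
        score = score_fn(lr, p)
        if score > best_score:
            best_params, best_score = (lr, p), score
    return best_params, best_score


best_params, best_score = grid_search(
    learning_rates=[1e-4, 1e-3, 1e-2],
    dropouts=[0.1, 0.3, 0.5],
    score_fn=validation_score,
)
```

Grid search is the simplest option to reason about; random or Bayesian search would slot into the same `score_fn` interface if the grid becomes too large.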

Step-by-Step Lifecycle

The deployment of the deep learning model follows a structured lifecycle:

-

Data Collection: This involves gathering a diverse dataset like the China Red Music Digital Resource Database, which holds over 3,000 red music works across different historical eras (CRMRD, 2023).

-

Preprocessing: Data undergoes labeling and normalization, where audio files are analyzed for musical features while lyrics are annotated for sentiment and context.

-

Model Training: Utilizing high-performance computing environments, the model is trained using advanced frameworks like PyTorch, allowing for rapid processing of extensive datasets.

-

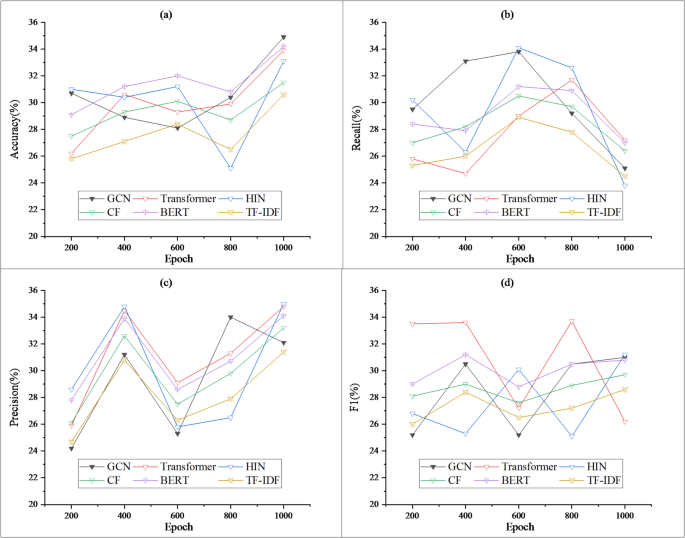

Testing and Evaluation: The model’s performance is evaluated based on various metrics, including accuracy, precision, and F1-score to ensure its effectiveness in making recommendations.

- Deployment: After successful training and evaluation, the model is integrated into educational environments, ready to provide tailored recommendations to users.

This structured approach ensures the model’s robustness and reliability throughout its deployment.
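As an illustration of the normalization part of preprocessing, the sketch below applies min-max scaling to per-track audio features. The feature names (`tempo_bpm`, `loudness_db`) and values are hypothetical, and the source does not specify which normalization scheme is used, so min-max scaling is an assumption.

```python
def min_max_normalize(rows):
    """Scale each numeric feature to [0, 1] across all tracks.

    rows: list of dicts mapping feature name -> raw value.
    A constant feature is mapped to 0.0 to avoid dividing by zero.
    """
    features = list(rows[0].keys())
    lows = {f: min(r[f] for r in rows) for f in features}
    highs = {f: max(r[f] for r in rows) for f in features}
    normalized = []
    for r in rows:
        scaled = {}
        for f in features:
            span = highs[f] - lows[f]
            scaled[f] = (r[f] - lows[f]) / span if span else 0.0
        normalized.append(scaled)
    return normalized


# Illustrative values only; not taken from any real dataset.
tracks = [
    {"tempo_bpm": 60.0, "loudness_db": -20.0},
    {"tempo_bpm": 90.0, "loudness_db": -10.0},
    {"tempo_bpm": 120.0, "loudness_db": -15.0},
]
normalized_tracks = min_max_normalize(tracks)
```

Putting features on a common scale keeps a wide-ranging feature such as tempo from dominating a narrow one when the two are fed into the same model.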

Practical Example: Case Study

A practical illustration of the model’s application can be seen in its recommendation of red music during a unit on ideological education in high schools. For instance, students studying the “Revolutionary War” might receive personalized song recommendations that resonate with the themes of sacrifice and patriotism through works like The Yellow River Cantata. The system analyzes their interaction history, such as songs they listened to multiple times, and curates a list that aligns emotional engagement with educational objectives.
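The curation step in this case study can be sketched as a ranking that blends theme overlap with replay history. This is a simplified illustration, not the model's actual scoring: the `recommend` function, the `theme_weight` value, the placeholder song titles, and the play counts are all assumptions.

```python
def recommend(unit_themes, catalog, play_counts, top_k=2, theme_weight=0.7):
    """Rank songs by a blend of theme overlap and the learner's replay history.

    unit_themes: set of themes for the current teaching unit.
    catalog: dict mapping song title -> set of theme tags.
    play_counts: dict mapping song title -> times this learner played it.
    """
    max_plays = max(play_counts.values(), default=0) or 1
    scored = []
    for title, tags in catalog.items():
        union = unit_themes | tags
        # Jaccard overlap between the unit's themes and the song's tags.
        theme_match = len(unit_themes & tags) / len(union) if union else 0.0
        # Replay count scaled to [0, 1] relative to the most-played song.
        engagement = play_counts.get(title, 0) / max_plays
        score = theme_weight * theme_match + (1 - theme_weight) * engagement
        scored.append((score, title))
    # Highest score first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored[:top_k]]


# Tags and play counts are illustrative placeholders.
catalog = {
    "The Yellow River Cantata": {"sacrifice", "patriotism"},
    "Placeholder Song B": {"patriotism", "youth"},
    "Placeholder Song C": {"harvest"},
}
play_counts = {"Placeholder Song B": 4, "Placeholder Song C": 1}
picks = recommend({"sacrifice", "patriotism"}, catalog, play_counts)
```

Weighting theme match above engagement keeps the list anchored to the unit's educational objective, so a frequently replayed but off-topic song does not crowd out a well-matched one.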

Common Pitfalls and Solutions

Despite its advantages, certain challenges can arise:

- Data Bias: If historical data heavily favors certain songs, the model may inadvertently promote a narrow set of resources. To counter this, a diverse training set needs to be maintained, ensuring balanced representation.

- Overfitting: The model might become too specialized in its recommendations, failing to generalize to new learners. Implementing regularization techniques and validating performance on diverse datasets can mitigate this risk.

- Interpretability: Users may find it hard to understand why specific recommendations were made. Explainable AI techniques can clarify the rationale behind each recommendation and so increase user confidence in the system.

By recognizing these pitfalls, developers can make necessary adjustments to optimize model performance.
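One possible way to counter the data-bias pitfall is to reweight training interactions so that heavily played songs do not dominate. The sketch below uses inverse-frequency weights; this is a common general-purpose technique offered as an assumption, not a method the source describes, and the song IDs are placeholders.

```python
from collections import Counter


def inverse_frequency_weights(interactions):
    """Weight each interaction by 1 / (times its song appears in the log).

    Every song then contributes the same total weight (1.0) to training,
    regardless of how often it was played.
    """
    counts = Counter(interactions)
    return [1.0 / counts[song] for song in interactions]


# Placeholder log: song_a is played four times, song_b once.
log = ["song_a", "song_a", "song_a", "song_a", "song_b"]
weights = inverse_frequency_weights(log)
```

These weights would typically be passed to the training loss as per-sample weights; a softer variant (for example, the inverse square root of the count) trades some balance for stability.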

Tools and Metrics in Practice

The model relies on a range of tools and metrics. PyTorch and TensorFlow serve as frameworks for building and training the deep learning system. Recall, precision, and the F1-score are used to assess recommendation accuracy on an ongoing basis. Two domain-specific measures, the Educational Match Score (EMS) and the Emotional Resonance Score (ERS), extend the evaluation to check that recommendations align with educational goals and also resonate emotionally with learners.
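For reference, precision, recall, and F1 for a single learner's recommendation list can be computed as in the minimal sketch below. The song IDs are placeholders. EMS and ERS are not defined in this section, so they are deliberately left out rather than guessed at.

```python
def precision_recall_f1(recommended, relevant):
    """Compute precision, recall, and F1 for one learner's recommendation list."""
    recommended, relevant = set(recommended), set(relevant)
    hits = len(recommended & relevant)
    # Precision: share of recommended songs that were actually relevant.
    precision = hits / len(recommended) if recommended else 0.0
    # Recall: share of relevant songs that made it into the recommendations.
    recall = hits / len(relevant) if relevant else 0.0
    # F1: harmonic mean of the two, defined as 0 when there are no hits.
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f1


p, r, f1 = precision_recall_f1(
    recommended=["s1", "s2", "s3", "s4"],
    relevant=["s2", "s4", "s7"],
)
```

Here two of the four recommended songs are relevant, and two of the three relevant songs were recommended; F1 balances those two ratios in a single number.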

Variations and Alternatives

Several models exist for educational recommendations, each with its own trade-offs. Traditional collaborative filtering, for example, draws on learner interactions but may struggle to capture the rich emotional context of red music. The proposed deep learning model, by contrast, is designed to pick up nuanced learner preferences and shifting cultural sentiment, which makes it better suited to IPE scenarios.

The choice between these approaches depends on the specific educational goals and the emotional depth required for engagement. In environments that focus solely on factual learning, simpler models may suffice. Where the goal is a multi-faceted understanding of ideology and its emotional context, the deep learning model is the stronger option.
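For comparison, a minimal item-based collaborative filtering baseline looks like the sketch below. It is an illustration of the traditional approach, with placeholder song IDs and a toy interaction matrix; note that it sees only who listened to what, with no access to lyrics, audio, or emotional labels.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def most_similar_item(target, item_vectors):
    """Return the other item whose learner-interaction vector is closest to the target's."""
    others = [name for name in item_vectors if name != target]
    return max(others, key=lambda name: cosine(item_vectors[target], item_vectors[name]))


# Rows are songs; columns are learners (1 = listened, 0 = did not).
item_vectors = {
    "song_a": [1, 1, 0, 1],
    "song_b": [1, 1, 0, 0],
    "song_c": [0, 0, 1, 0],
}
neighbor = most_similar_item("song_a", item_vectors)
```

Because similarity here comes purely from co-listening patterns, two songs with very different emotional content can still look alike, which is the limitation the comparison above points to.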

FAQ

Q: How does the model personalize recommendations?

A: The model assesses user interaction data to understand preferences, adjusting its recommendations accordingly.

Q: What kind of data is essential for training?

A: A rich dataset containing multimedia annotations, historical contexts, and user engagement metrics is crucial for effective training.

Q: Can the model be adapted for other musical genres?

A: Yes, by adjusting the data sources and emotional-label systems, the model can accommodate different genres and educational contexts.

Q: What are the computational requirements?

A: The model runs on high-performance computing platforms, and GPUs are needed for optimal processing speed and efficiency.