Exploring a Deep Learning Framework for Alzheimer’s Disease Prediction Using MRI Scans

Introduction to the Proposed Framework

Alzheimer’s Disease (AD) is difficult to detect early and to manage, and the prevalence of this neurodegenerative disorder is rising. A recently proposed framework combines deep learning with explainable AI to improve the prediction of AD from MRI scans. The framework comprises several stages: handling data imbalance, model design, training, feature extraction and fusion, and classification.

Dataset Overview

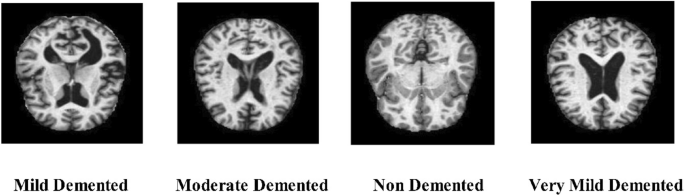

The research is built on an Alzheimer’s Disease MRI dataset sourced from Kaggle, an open platform. The dataset is divided into four categories:

- Mildly demented

- Moderately demented

- Non-demented

- Very mildly demented

The dataset contains 6,400 images in total but is imbalanced: some categories hold as few as 52 images, which can hinder the efficacy of machine learning models. To address this, data augmentation with rotations, flips, and other variations was applied, doubling the dataset to 12,800 images and giving the model more examples to train on.

Dealing with Data Imbalance

Overfitting is a common pitfall in neural network training, especially when data is scarce. Class imbalance compounds the problem: minority classes are undertrained, which reduces the robustness and interpretability of the predictions. To combat this, augmentation techniques such as rotation, zooming, and flipping were used to diversify the training set and improve the model’s ability to generalize to unseen data.
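
The augmentation step described above can be sketched roughly as follows. This is a minimal illustration rather than the framework's actual pipeline: the function names `augment` and `double_dataset` are hypothetical, rotations are restricted to multiples of 90 degrees to keep the example NumPy-only, and zooming is left out.

```python
import numpy as np

def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Return one randomly flipped and rotated copy of a square 2D image."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)            # horizontal flip
    if rng.random() < 0.5:
        out = np.flipud(out)            # vertical flip
    k = int(rng.integers(0, 4))         # number of 90-degree rotations (assumption)
    return np.rot90(out, k).copy()

def double_dataset(images, labels, seed=0):
    """Append one augmented copy per image, so the dataset size doubles."""
    rng = np.random.default_rng(seed)
    augmented = [augment(img, rng) for img in images]
    return list(images) + augmented, list(labels) + list(labels)

# Toy stand-in for MRI slices: five 4x4 "images" with class labels 0..3.
images = [np.arange(16, dtype=np.float32).reshape(4, 4) for _ in range(5)]
labels = [0, 1, 2, 3, 0]
all_images, all_labels = double_dataset(images, labels)
print(len(images), "->", len(all_images))
```

Each augmented image keeps the label of the image it was derived from, which is what allows 6,400 labeled images to become 12,800.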

Architectural Innovations: Inverted Residual Bottleneck Model

The proposed framework uses an architecture called the Inverted Residual Bottleneck Model with Self-Attention. Inverted residual blocks are known for their efficiency on mobile and embedded systems while maintaining high performance. A traditional bottleneck reduces dimensionality first; an inverted residual block instead expands the feature size before applying depth-wise convolutions, increasing representational capacity.
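
To make the expand-then-filter idea concrete, here is a rough NumPy sketch of a single inverted residual block in the general style popularized by MobileNetV2. It is not the framework's exact layer: the expansion factor, the ReLU6 activation, the final projection back to the input width, and all names are assumptions, and batch normalization and the self-attention part are omitted.

```python
import numpy as np

def relu6(x):
    return np.clip(x, 0.0, 6.0)

def depthwise_conv3x3(x, kernels):
    """x: (H, W, C); kernels: (3, 3, C). One 3x3 filter per channel,
    stride 1, zero padding, so the spatial size is preserved."""
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w, :] * kernels[dy, dx, :]
    return out

def inverted_residual(x, w_expand, dw_kernels, w_project):
    """Expand channels, filter depth-wise, project back, add the skip path."""
    expanded = relu6(x @ w_expand)                        # 1x1 conv: C -> t*C
    filtered = relu6(depthwise_conv3x3(expanded, dw_kernels))
    projected = filtered @ w_project                      # linear 1x1 conv: t*C -> C
    return x + projected                                  # residual connection

rng = np.random.default_rng(0)
C, t = 8, 6                                               # channels, expansion factor
x = rng.normal(size=(16, 16, C))
w_expand = rng.normal(size=(C, t * C)) * 0.1
dw_kernels = rng.normal(size=(3, 3, t * C)) * 0.1
w_project = rng.normal(size=(t * C, C)) * 0.1
y = inverted_residual(x, w_expand, dw_kernels, w_project)
print(x.shape, y.shape)
```

The depth-wise step applies one filter per channel instead of mixing all channels, which is why the expanded representation stays cheap to process.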

The architecture also contains a multi-branch parallel structure inspired by multi-branch networks. This design lets the model learn robust features more efficiently than earlier, more computationally constrained designs. The initial layers use 3×3 filters, and the network progresses through successive levels of depth before the feature maps are passed to the attention mechanisms.

The Vision Transformer Component

An integral part of the framework is the Vision Transformer (ViT) architecture, whose self-attention mechanism lets the model weigh different parts of the input simultaneously. A ViT reshapes a 2D image into a sequence of flattened patches and applies attention layers that capture long-range dependencies across the input. This strengthens the model’s ability to draw insights from MRI data, which is crucial for accurate AD prediction.
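
The two ideas in this paragraph, patch flattening and self-attention, can be sketched in a few lines of NumPy. This is a simplified single-head version under stated assumptions: the patch size, projection widths, and function names are illustrative, and the learned patch embedding, positional embeddings, and class token of a full ViT are omitted.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W) image into a sequence of flattened patch x patch tiles."""
    h, w = image.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch)
    tiles = tiles.transpose(0, 2, 1, 3)          # (rows, cols, patch, patch)
    return tiles.reshape(-1, patch * patch)      # one row per patch

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)      # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Scaled dot-product attention: every patch attends to every other patch."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

rng = np.random.default_rng(0)
image = np.arange(64, dtype=np.float64).reshape(8, 8) / 63.0
tokens = patchify(image, patch=4)                # 4 patches of 16 pixels each
d_model, d_head = tokens.shape[1], 8
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) * 0.1 for _ in range(3))
attended, weights = self_attention(tokens, w_q, w_k, w_v)
print(tokens.shape, attended.shape, weights.shape)
```

Each row of `weights` sums to 1 and describes how strongly one patch draws on every other patch, regardless of how far apart they sit in the image.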

Feature Extraction and Fusion Techniques

After training, features are extracted from selected layers, including the self-attention layers and a global average pooling (GAP) layer. A novel serially controlled search update approach is then applied to fuse these features, selecting the characteristics most relevant to accurate prediction.

The feature vectors are consolidated using a spiral search approach, which resembles biological search patterns and lets the model refine its feature set. The fusion process improves the model’s ability to discern patterns pertinent to AD prediction.
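
The extraction side of this stage can be illustrated with a small sketch. It shows only global average pooling and plain serial concatenation of two feature sets; the serially controlled search update and the spiral search are not specified in enough detail here to reproduce, so they are not implemented. The array shapes and function names are hypothetical.

```python
import numpy as np

def global_average_pool(feature_maps):
    """(N, H, W, C) feature maps -> (N, C) vectors, averaging over H and W."""
    return feature_maps.mean(axis=(1, 2))

def serial_fusion(*feature_sets):
    """Concatenate per-sample feature vectors along the feature axis."""
    return np.concatenate(feature_sets, axis=1)

rng = np.random.default_rng(0)
conv_maps = rng.normal(size=(10, 7, 7, 32))      # hypothetical conv-layer output
attn_features = rng.normal(size=(10, 64))        # hypothetical self-attention features
gap_features = global_average_pool(conv_maps)
fused = serial_fusion(attn_features, gap_features)
print(fused.shape)  # (10, 96)
```

A selection step such as the one described above would then operate on the columns of `fused`, keeping only the features judged most useful.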

Classifying Outcomes with Neural Networks

Once the features have been fused, the final classification is performed by shallow neural network classifiers. This step deploys a Shallow Wide Neural Network (SWNN), whose structure can be varied to match the complexity of the task. The network learns the relationships among the fused features that distinguish the dataset’s categories.
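
As a rough picture of what a shallow, wide classifier looks like, the sketch below runs a forward pass through a network with a single large hidden layer and a four-way softmax output. The hidden width of 512, the input size of 96, the ReLU activation, and the helper names are assumptions; the weights are random and untrained, so the predictions are meaningless and only the structure is shown.

```python
import numpy as np

CLASSES = ["Mildly demented", "Moderately demented",
           "Non-demented", "Very mildly demented"]

def init_swnn(n_features, n_hidden, n_classes, seed=0):
    """One wide hidden layer followed by an output layer."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.normal(scale=np.sqrt(2.0 / n_features), size=(n_features, n_hidden)),
        "b1": np.zeros(n_hidden),
        "w2": rng.normal(scale=np.sqrt(1.0 / n_hidden), size=(n_hidden, n_classes)),
        "b2": np.zeros(n_classes),
    }

def predict_proba(params, features):
    """features: (N, n_features) -> class probabilities of shape (N, n_classes)."""
    hidden = np.maximum(0.0, features @ params["w1"] + params["b1"])   # ReLU
    logits = hidden @ params["w2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)                # stable softmax
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)

params = init_swnn(n_features=96, n_hidden=512, n_classes=len(CLASSES))
fused_features = np.random.default_rng(1).normal(size=(5, 96))         # placeholder input
probs = predict_proba(params, fused_features)
predicted = [CLASSES[i] for i in probs.argmax(axis=1)]
print(probs.shape, predicted)
```

"Wide" here refers to the number of hidden units and "shallow" to the single hidden layer; a real classifier would be trained on the fused features before use.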

Explainable AI Component: LIME

To make the model’s predictions more interpretable, the framework integrates Local Interpretable Model-Agnostic Explanations (LIME). This technique shows how input features influence the output, fostering transparency in AI-driven predictions. LIME proceeds in four steps:

- Segmenting the image into pertinent features.

- Generating synthetic images by altering features randomly.

- Classifying these synthetic images using the trained model.

- Fitting a regression model to estimate feature importance.
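
The four steps above can be sketched end to end in NumPy. This is a simplified stand-in, not the LIME library itself: a square grid replaces a proper superpixel segmentation, the proximity kernel and its width of 0.25 are assumptions, and `toy_predict` is a hypothetical model that looks at only one region of the image.

```python
import numpy as np

def grid_segments(h, w, cell):
    """Step 1: label every pixel with the index of its square grid cell."""
    n_cols = -(-w // cell)                                  # ceiling division
    rows = np.arange(h) // cell
    cols = np.arange(w) // cell
    return rows[:, None] * n_cols + cols[None, :]

def lime_image(image, predict, segments, n_samples=500, seed=0):
    rng = np.random.default_rng(seed)
    n_seg = int(segments.max()) + 1
    # Step 2: random on/off pattern per segment for each synthetic image.
    masks = rng.integers(0, 2, size=(n_samples, n_seg))
    masks[0] = 1                                            # keep the original image
    # Step 3: score every synthetic image with the model.
    scores = np.empty(n_samples)
    for i, mask in enumerate(masks):
        perturbed = image * mask[segments]                  # "off" segments become 0
        scores[i] = predict(perturbed)
    # Step 4: weighted linear regression; samples closer to the original count more.
    distance = 1.0 - masks.mean(axis=1)
    weights = np.exp(-(distance ** 2) / 0.25 ** 2)
    design = np.hstack([masks, np.ones((n_samples, 1))])    # last column = intercept
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sw[:, None], scores * sw, rcond=None)
    return coef[:-1]                                        # one weight per segment

def toy_predict(img):
    """Hypothetical model: its score depends only on the top-right quadrant."""
    return float(img[0:4, 4:8].mean())

image = np.ones((8, 8))
segments = grid_segments(8, 8, cell=4)                      # four segments: 0..3
importance = lime_image(image, toy_predict, segments)
print(importance.round(3))
```

Because the toy model reads only the top-right quadrant (segment 1), the fitted weights single out that segment, which is the kind of region-level explanation LIME provides for a real classifier.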

Summary of Implementation and Predictions

The proposed framework takes a sophisticated approach to predicting Alzheimer’s Disease from MRI scans. By combining data augmentation, innovative architectural components, and explainable AI techniques, the model improves prediction accuracy and also provides insight into its decision-making process. As AD continues to challenge researchers and clinicians, such frameworks hold promise for improving early diagnosis and intervention, potentially leading to better patient management and care.