Advanced Multi-Camera Abnormal Behavior Detection with Spatiotemporal Deep Learning

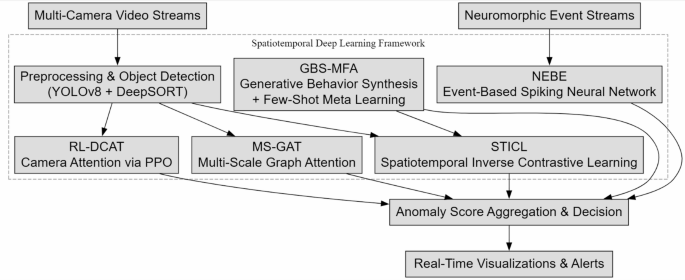

In multi-camera surveillance, detecting abnormal behavior in crowded environments is difficult. Traditional methods tend to be inefficient and computationally complex. This article describes an improved method based on spatiotemporal deep learning, designed specifically for multi-camera abnormal behavior detection.

The Need for a More Efficient Approach

Current surveillance techniques are often inefficient in crowded scenes with complex interactions. Conventional models, particularly CNN-based ones, process frames independently and therefore miss nuances of behavior that unfold across frames. The approach proposed here instead models the interdependencies among individuals explicitly, using graph-based structures to capture relationship dynamics that are inherently non-Euclidean.

Introducing the Multi-Scale Graph Attention Network (MS-GAT)

The cornerstone of this methodology is the Multi-Scale Graph Attention Network (MS-GAT), designed to capture the spatiotemporal dependencies in multi-camera environments. Individuals are represented as nodes and their interactions as edges, and the model constructs these connections from motion similarity, spatial proximity, and behavioral characteristics.

Understanding the Graph Structure

In the MS-GAT framework, the graph is defined as \( G = (V, E, A) \), where:

- V is the set of nodes (individuals).

- E is the set of edges, derived from the interaction criteria above.

- A is the weighted adjacency matrix, which encodes these relationships and is updated as the scene changes.

The adjacency matrix is continually updated as per the formula:

\[
A(i,j) = \exp\left(-\beta \lVert x_i - x_j \rVert^2\right) \cdot \exp\left(-\gamma \lVert v_i - v_j \rVert^2\right) \cdot \exp\left(-\delta \lVert b_i - b_j \rVert^2\right)
\]

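Here, \( x_i \) and \( x_j \) denote spatial locations, \( v_i \) and \( v_j \) velocity vectors, and \( b_i \) and \( b_j \) bounding box attributes. The parameters \( \beta, \gamma, \delta \) control the relative weight of each feature. For positive parameters, each factor lies in \( (0, 1] \), so \( A(i,j) \) equals 1 only when two individuals coincide in all three features and decays toward 0 as they diverge in any one of them.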
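A minimal NumPy sketch of the adjacency computation follows, assuming each individual's position, velocity, and bounding-box attributes are stored as rows of separate arrays and that the distance in the formula is Euclidean. The function name, the toy scene, and the values of `beta`, `gamma`, and `delta` are hypothetical. The three exponentials are merged into one exponential of a weighted sum, which is algebraically identical.

```python
import numpy as np


def adjacency_matrix(x, v, b, beta=1.0, gamma=1.0, delta=1.0):
    """Dense A(i, j) from positions x, velocities v and bounding-box attributes b.

    x, v, b: arrays with one row per individual (shape (N, d) each).
    beta, gamma, delta: feature weights (hypothetical default values).
    """
    def sq_dists(f):
        # Pairwise squared Euclidean distances ||f_i - f_j||^2, shape (N, N).
        diff = f[:, None, :] - f[None, :, :]
        return np.sum(diff ** 2, axis=-1)

    # exp(a) * exp(b) * exp(c) == exp(a + b + c), so one exponential suffices.
    return np.exp(-beta * sq_dists(x) - gamma * sq_dists(v) - delta * sq_dists(b))


# Hypothetical scene: persons 0 and 1 walk side by side, person 2 is far away
# and moving in the opposite direction.
x = np.array([[0.0, 0.0], [0.5, 0.0], [10.0, 10.0]])  # positions
v = np.array([[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]])   # velocities
b = np.array([[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]])    # bounding box (width, height)
A = adjacency_matrix(x, v, b, beta=0.5, gamma=0.5, delta=0.5)
print(np.round(A, 3))
```

Persons 0 and 1 differ only slightly in position, so \( A(0,1) = e^{-0.125} \approx 0.88 \), while person 2's edges to the others are effectively zero.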

Multi-Scale Graph Attention Mechanism

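Once the graph is built, MS-GAT applies a multi-scale graph attention mechanism that weights each edge according to both local and global interactions. The attention weight \( \alpha(i, j) \) between node \( i \) and its neighbor \( j \) is a softmax, over the neighborhood \( N(i) \), of LeakyReLU-activated scores, where \( h_i \) is the embedding of node \( i \), \( [h_i \,\|\, h_j] \) denotes concatenation, and \( W \) is a learned projection that maps the concatenated pair to a scalar: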

\[
\alpha(i,j) = \frac{\exp\left(\mathrm{LeakyReLU}\left(W [h_i \,\|\, h_j]\right)\right)}{\sum_{k \in N(i)} \exp\left(\mathrm{LeakyReLU}\left(W [h_i \,\|\, h_k]\right)\right)}
\]

Because these weights are learned, the system can prioritize the interactions most relevant to anomaly detection rather than treating all neighbors equally, which is intended to improve detection accuracy.
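The attention formula can be sketched in NumPy for a single head at a single scale; the multi-scale part of MS-GAT is not shown. This sketch assumes `W` is a vector of length \( 2d \) that maps the concatenated pair to a scalar, that neighborhoods are given as a boolean mask that includes self-loops (so every node has at least one neighbor), and a LeakyReLU slope of 0.2. All names and values are illustrative.

```python
import numpy as np


def leaky_relu(z, slope=0.2):
    # slope=0.2 is an assumed value; the article does not specify it.
    return np.where(z > 0, z, slope * z)


def attention_weights(h, W, neighbors):
    """alpha[i, j] = softmax over k in N(i) of LeakyReLU(W . [h_i || h_k]).

    h: (N, d) node embeddings.
    W: (2d,) vector mapping a concatenated pair to a scalar score.
    neighbors: (N, N) boolean mask; neighbors[i, j] is True if j is in N(i).
    Every row must contain at least one True entry (e.g. a self-loop).
    """
    d = h.shape[1]
    # W . [h_i || h_j] == W[:d] . h_i + W[d:] . h_j, so no explicit concatenation.
    scores = leaky_relu((h @ W[:d])[:, None] + (h @ W[d:])[None, :])
    scores = np.where(neighbors, scores, -np.inf)        # drop non-neighbors
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)                             # exp(-inf) == 0
    return weights / weights.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
h = rng.normal(size=(4, 3))   # 4 hypothetical individuals, 3-dim embeddings
W = rng.normal(size=6)
chain = np.array([[0, 1, 0, 0],
                  [1, 0, 1, 0],
                  [0, 1, 0, 1],
                  [0, 0, 1, 0]], dtype=bool)
neighbors = chain | np.eye(4, dtype=bool)  # add self-loops
alpha = attention_weights(h, W, neighbors)
print(np.round(alpha, 3))
```

Splitting `W` into two halves avoids building all \( N^2 \) concatenated pairs explicitly. Non-neighbors receive a score of \( -\infty \), so their weight is exactly zero and each row of `alpha` sums to 1.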

Computing the Anomaly Score

Finally, the anomaly score \( S_v \) for each individual \( v \) is computed as:

\[
S_v = \frac{1}{T} \sum_{t=1}^{T} \lVert h_v^t - \bar{h}^t \rVert^2
\]

where \( h_v^t \) is the embedding of node \( v \) at time \( t \), \( \bar{h}^t \) is the mean embedding of normal behavior at time \( t \), and \( T \) is the number of time steps. The score is the average squared distance between an individual's embedding and typical behavior over time, which helps separate normal from anomalous activity even in dense environments.
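A short sketch of the score, assuming node embeddings are stacked into an array of shape (individuals, time steps, embedding size) and that the mean normal-behavior embedding for each time step has already been estimated, for example from training clips labeled normal. The function name and data are hypothetical.

```python
import numpy as np


def anomaly_scores(h, h_normal_mean):
    """Mean over time of the squared distance to the normal-behavior embedding.

    h: (V, T, d) embeddings of V individuals over T time steps.
    h_normal_mean: (T, d) mean normal-behavior embedding for each time step.
    Returns a (V,) array with one score per individual.
    """
    sq_dev = np.sum((h - h_normal_mean[None, :, :]) ** 2, axis=-1)  # (V, T)
    return sq_dev.mean(axis=1)


T, d = 4, 2
h_normal_mean = np.zeros((T, d))  # hypothetical normal reference
h = np.zeros((2, T, d))           # two individuals who behave normally...
h[1, 2] = [3.0, 4.0]              # ...except person 1 deviates at one time step
S = anomaly_scores(h, h_normal_mean)  # S == [0.0, 6.25]
```

Person 1's single deviation has squared norm \( 3^2 + 4^2 = 25 \); averaged over \( T = 4 \) steps it gives a score of 6.25.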

Reinforcement Learning for Dynamic Camera Focus

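A distinctive element of this framework is its use of reinforcement learning (RL), implemented as RL-DCAT (Reinforcement Learning Dynamic Camera Attention Technology). RL-DCAT allocates camera attention according to the observed distribution of anomalies, concentrating processing where anomalies are likely. Its reward function is:

\[
R_t = -\lambda_1 \sum_{i=1}^{N} \left(1 - I(S_i > \theta)\right) C_i + \lambda_2 \sum_{i=1}^{N} I(S_i > \theta)\, P_i
\]

where \( I(\cdot) \) is the indicator function, \( \theta \) is the anomaly threshold, \( S_i \), \( C_i \), and \( P_i \) are the anomaly score, computational cost, and anomaly probability for each of the \( N \) monitored elements, and \( \lambda_1, \lambda_2 \) weight the two terms. The first term penalizes computation spent on elements whose score stays below the threshold; the second rewards attention paid to elements above it. The reward therefore balances computational cost against anomaly probability, so that resource allocation can adapt as the situation evolves.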
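The reward can be computed directly from per-element arrays. This sketch assumes the scores, costs, and probabilities are already available as NumPy arrays of length \( N \); the function name and the \( \lambda \) values are hypothetical, and how RL-DCAT uses the reward to update its policy is not shown.

```python
import numpy as np


def reward(scores, costs, probs, theta, lam1=1.0, lam2=1.0):
    """R_t = -lam1 * sum_i (1 - I(S_i > theta)) * C_i + lam2 * sum_i I(S_i > theta) * P_i."""
    flagged = (np.asarray(scores) > theta).astype(float)       # indicator I(S_i > theta)
    wasted_cost = np.sum((1.0 - flagged) * np.asarray(costs))  # cost on below-threshold elements
    covered_prob = np.sum(flagged * np.asarray(probs))         # probability on flagged elements
    return -lam1 * wasted_cost + lam2 * covered_prob


# Hypothetical values for N = 3 elements.
scores = np.array([0.2, 0.9, 0.7])
costs = np.array([1.0, 2.0, 1.5])
probs = np.array([0.1, 0.8, 0.6])
r = reward(scores, costs, probs, theta=0.5, lam1=0.5, lam2=2.0)
print(r)
```

Only element 0 falls below \( \theta \), so its cost of 1.0 is penalized, while elements 1 and 2 contribute their probabilities: \( R_t = -0.5 \cdot 1.0 + 2.0 \cdot (0.8 + 0.6) = 2.3 \).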

Adapting to Rare Anomalies

To handle infrequent events, the STICL (Spatiotemporal Contrastive Learning) framework gives the model mechanisms for coping with the variability of anomaly types. It uses an inverse contrastive loss for dissimilarity learning, intended to let the model learn robustly from both common and rare behaviors and thereby generalize better.
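The article does not give the formula for its inverse contrastive loss. For reference only, the sketch below implements the classic margin-based contrastive loss, a standard dissimilarity-learning objective that is not the article's variant: similar pairs are pulled together, and dissimilar pairs are pushed apart until they are at least `margin` apart. The pair labels, margin value, and function name are assumptions.

```python
import numpy as np


def contrastive_loss(z1, z2, same, margin=1.0):
    """Classic margin-based contrastive loss (not the article's inverse variant).

    z1, z2: (B, d) embeddings of B pairs.
    same: (B,) array, 1.0 for similar pairs and 0.0 for dissimilar pairs.
    """
    dist = np.linalg.norm(z1 - z2, axis=1)
    pull = same * dist ** 2                                    # similar: shrink distance
    push = (1.0 - same) * np.maximum(0.0, margin - dist) ** 2  # dissimilar: enforce margin
    return np.mean(pull + push)


z1 = np.zeros((3, 2))
z2 = np.array([[0.5, 0.0], [0.5, 0.0], [3.0, 0.0]])
same = np.array([1.0, 0.0, 0.0])  # hypothetical labels: pair 0 similar, pairs 1-2 dissimilar
loss = contrastive_loss(z1, z2, same, margin=1.0)
print(loss)
```

The third pair is already farther apart than the margin and contributes nothing, so only the first two pairs drive the loss.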

Neuromorphic Event-Based Encoding (NEBE)

To reduce the latency inherent in traditional frame-based methods, the framework uses Neuromorphic Event-Based Encoding (NEBE). Neuromorphic sensors detect pixel-level changes asynchronously and encode them as event streams, so the system can respond with low latency to high-frequency movements without processing full frames. Each pixel \( (x, y) \) emits an event of polarity \( S(x,y,t) \) when its intensity \( I \) changes by more than a threshold \( \theta \) over the interval \( \Delta t \):

\[
S(x,y,t) = \begin{cases}
1, & \text{if } I(x,y,t) - I(x,y,t - \Delta t) > \theta \\
-1, & \text{if } I(x,y,t) - I(x,y,t - \Delta t) < -\theta \\
0, & \text{otherwise}
\end{cases}
\]

Because only pixels whose intensity changes produce events, this event-driven encoding reduces redundant processing and improves the system's ability to detect motion anomalies quickly.
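The encoding rule can be emulated from two intensity frames sampled \( \Delta t \) apart, as below. This is only an approximation: a physical neuromorphic sensor fires asynchronously per pixel rather than comparing whole frames. The function name, frames, and threshold are hypothetical.

```python
import numpy as np


def encode_events(frame_prev, frame_curr, theta):
    """Emulated event polarity S(x, y, t) in {-1, 0, +1} from two frames."""
    diff = frame_curr.astype(float) - frame_prev.astype(float)  # I(x,y,t) - I(x,y,t - dt)
    events = np.zeros(diff.shape, dtype=np.int8)
    events[diff > theta] = 1    # brightness increased beyond threshold
    events[diff < -theta] = -1  # brightness decreased beyond threshold
    return events


prev = np.array([[10, 10, 10], [10, 10, 10]])
curr = np.array([[10, 30, 10], [2, 10, 12]])
ev = encode_events(prev, curr, theta=5)
print(np.count_nonzero(ev))  # 2
```

Of the six pixels, only the two whose change exceeds the threshold fire; the pixel that brightened by 2 stays silent, which is where the savings over full-frame processing come from.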

Generative Behavior Synthesis with Meta-Learned Few-Shot Adaptation (GBS-MFA)

Because real-world anomalous events are sparse, the GBS-MFA framework synthesizes rare behaviors. It uses a conditional generative adversarial network (cGAN) to generate novel abnormal motion embeddings from known normal behaviors: the generator \( G \) maps a normal-behavior input \( x_n \) and a conditioning input \( y \) to a synthetic anomaly embedding \( z_a \):

\[
z_a = G(x_n, y)
\]

Training on these synthetic anomalies is intended to improve the model's adaptability, so that it can recognize and respond to previously unobserved events.
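To show how conditioning enters the generator, here is an untrained forward pass of a toy conditional generator. It assumes \( y \) is an integer anomaly-type label that is one-hot encoded and concatenated with the normal-behavior embedding \( x_n \), which is one common cGAN conditioning scheme. There is no discriminator, adversarial training, or meta-learned few-shot adaptation here; the class name, layer sizes, and activations are all hypothetical.

```python
import numpy as np


class ToyConditionalGenerator:
    """Untrained two-layer generator G(x_n, y) -> z_a; forward pass only."""

    def __init__(self, in_dim, n_conditions, hidden_dim, out_dim, seed=0):
        rng = np.random.default_rng(seed)
        self.n_conditions = n_conditions
        self.W1 = rng.normal(scale=0.1, size=(in_dim + n_conditions, hidden_dim))
        self.b1 = np.zeros(hidden_dim)
        self.W2 = rng.normal(scale=0.1, size=(hidden_dim, out_dim))
        self.b2 = np.zeros(out_dim)

    def __call__(self, x_n, y):
        # Condition on y by appending its one-hot encoding to the normal embedding.
        y_onehot = np.eye(self.n_conditions)[y]
        inp = np.concatenate([x_n, y_onehot], axis=-1)
        hidden = np.maximum(0.0, inp @ self.W1 + self.b1)  # ReLU
        return np.tanh(hidden @ self.W2 + self.b2)          # synthetic embedding z_a


rng = np.random.default_rng(1)
x_n = rng.normal(size=(5, 8))   # 5 hypothetical normal-behavior embeddings
y = np.array([0, 1, 2, 1, 0])   # hypothetical anomaly-type labels
G = ToyConditionalGenerator(in_dim=8, n_conditions=3, hidden_dim=16, out_dim=8)
z_a = G(x_n, y)
print(z_a.shape)  # (5, 8)
```

Because the label is part of the input, the same normal embedding can be mapped to different synthetic anomalies by changing \( y \); in a real cGAN the weights would be learned against a discriminator that also sees \( y \).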

Harmonizing Components for Robust Detection

Combining NEBE and GBS-MFA addresses two complementary needs of multi-camera anomaly detection. NEBE reduces the amount of frame data to be processed, enabling high-speed motion analysis, while GBS-MFA synthesizes rare behavioral patterns, helping the system detect anomalies that seldom appear in real data.

Composite Anomaly Scoring

The model's output is a composite anomaly score that combines the component scores:

\[
S_{\text{final}} = w_1 S_{\text{NEBE}} + w_2 S_{\text{GBS}} + w_3 S_{\text{STICL}}
\]

where the weights \( w_1, w_2, w_3 \) are optimized through reinforcement learning to balance efficiency, adaptability, and speed.
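With the weights held fixed, the composite score is a weighted sum of per-individual component scores. This sketch assumes the three component scores are already on comparable scales (for example, normalized to [0, 1]); the function name and weight values are hypothetical placeholders for whatever the reinforcement learning stage would produce.

```python
import numpy as np


def composite_score(s_nebe, s_gbs, s_sticl, w):
    """S_final = w1 * S_NEBE + w2 * S_GBS + w3 * S_STICL, per individual."""
    components = np.stack([s_nebe, s_gbs, s_sticl])  # shape (3, N)
    return np.asarray(w) @ components                # shape (N,)


s_nebe = np.array([0.1, 0.9])   # hypothetical component scores for two individuals
s_gbs = np.array([0.2, 0.7])
s_sticl = np.array([0.0, 0.8])
w = [0.5, 0.3, 0.2]             # placeholder weights; RL would tune these
S_final = composite_score(s_nebe, s_gbs, s_sticl, w)
print(S_final)
```

The second individual scores high on all three components and ends up with \( 0.5 \cdot 0.9 + 0.3 \cdot 0.7 + 0.2 \cdot 0.8 = 0.82 \), versus 0.11 for the first.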

Conclusion

By integrating spatiotemporal deep learning, graph-based modeling, and generative frameworks, this methodology targets the inefficiency and complexity of earlier multi-camera anomaly detectors. It aims to make anomaly detection scalable to crowded scenes and adaptable to rare, previously unseen behaviors, laying groundwork for future advances in security and surveillance technologies.