Understanding Profiling Side-Channel Attacks and Deep Learning Applications in Cryptographic Security

Cryptographic security often relies on the mathematical complexity of algorithms to protect sensitive information, but physical implementations can unintentionally leak information that attackers can exploit. Profiling side-channel attacks (SCA) reveal how attackers can harness physical phenomena—like power consumption, electromagnetic radiation, and timing variations—to extract cryptographic keys, thereby circumventing the security provided by traditional algorithmic defenses.

Notation in Cryptography

In cryptographic discussions, we often use specialized notation to clarify key concepts. Calligraphic letters such as \(\mathscr{X}\) denote finite sets, and \(|\mathscr{X}|\) denotes the cardinality of such a set. Upper-case letters \(X\) and bold upper-case letters \(\textbf{X}\) denote random variables and random vectors over \(\mathscr{X}\), while the corresponding lowercase letters \(x\) and \(\textbf{x}\) denote their realizations. The \(i\)-th entry of a vector \(\textbf{x}\) is denoted by \({\textbf{x}}_i\); here, such entries are associated with specific key candidates and plaintext bytes.

The remaining variables play the following roles: \(k\) is a key candidate belonging to the key space \(\mathscr{K}\), and \(k^*\) denotes the correct key. Each entry \({\textbf{x}}_i\) has an associated key guess \(k_i\) and plaintext \(PT_i\).

Profiling Side-Channel Attacks

Mechanism and Classification

In essence, side-channel attacks exploit physical leakage emitted by devices while they process cryptographic data. This leakage takes various forms, and attacks are commonly classified by the type of physical information they exploit:

- Timing Attacks: Focus on measuring how long cryptographic operations take.

- Power Analysis Attacks (e.g., differential and correlation power analysis, DPA and CPA): Examine fluctuations in power consumption during encryption.

- Electromagnetic Attacks: Capture unintended electromagnetic radiation emitted by electronic components.

- Acoustic Attacks: Analyze sound produced during computation.

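Non-profiling attacks recover secret keys directly from traces of the target device, without a prior characterization of its leakage. Profiling attacks instead first use a clone device, which the attacker fully controls, to learn how the leakage maps to key-dependent values. This prior knowledge makes profiling attacks significantly more potent.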

Phases of Profiling Attacks

Generally, profiling attacks unfold in two distinct phases:

- Profiling Phase: Here, the attacker uses a clone device, on which the key is known, to acquire a dataset of \(N\) profiling traces. Each trace is labeled with a key-dependent leakage value computed from the known plaintext and key, and these labeled traces are used to train a model. Because the traces are acquired with many different plaintext-key pairs, they are assumed to be independent and identically distributed (i.i.d.).

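- Attack Phase: In this phase, the attacker collects \(Q\) traces from the target device. For each attack trace, the model trained in the profiling phase outputs a probability for every label; using the known plaintexts, these probabilities are mapped to key candidates and combined across all \(Q\) traces (commonly by summing log-probabilities) to rank the candidates.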
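The attack phase described above can be sketched as follows. This is a minimal illustration, not the method of any specific work: the function names are hypothetical, the labeling `label = plaintext XOR key` is an assumed toy leakage model, and the model outputs are simulated rather than produced by a trained network.

```python
import numpy as np

def key_scores(probs, plaintexts, label_fn, num_keys=256):
    """Log-likelihood score of every key candidate over Q attack traces.

    probs:      (Q, C) array of model output probabilities, one row per attack trace
    plaintexts: (Q,) array of known plaintext bytes
    label_fn:   hypothetical function mapping (plaintexts, key guess) -> label indices
    """
    rows = np.arange(len(plaintexts))
    scores = np.zeros(num_keys)
    for k in range(num_keys):
        labels = label_fn(plaintexts, k)
        # A tiny constant avoids log(0) for labels the model deems impossible.
        scores[k] = np.sum(np.log(probs[rows, labels] + 1e-36))
    return scores

def key_rank(scores, true_key):
    """Number of candidates scoring strictly higher than the correct key (0 = recovered)."""
    return int(np.sum(scores > scores[true_key]))

def toy_label(pt, k):
    # Assumed toy labeling: label = plaintext byte XOR key byte (256 classes).
    return pt ^ k

rng = np.random.default_rng(1)
true_key, Q = 0x3C, 20
pts = rng.integers(0, 256, size=Q, dtype=np.uint8)
# Simulated model output: 0.9 on the correct label, the rest spread uniformly.
probs = np.full((Q, 256), 0.1 / 255)
probs[np.arange(Q), toy_label(pts, true_key)] = 0.9
scores = key_scores(probs, pts, toy_label)
print(key_rank(scores, true_key))  # prints 0
```

With a real model, the rank of the correct key is typically tracked as \(Q\) grows; fewer traces needed to reach rank 0 means a stronger attack.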

Deep Learning in Side-Channel Analysis

The rise of deep learning has provided powerful tools for profiling attacks, which can be framed as supervised classification tasks: a model learns to map each trace to its key-dependent label. Supervised machine learning techniques such as Support Vector Machines (SVMs), Random Forests (RFs), and deep learning models have shown significant promise in this space.

Deep learning’s main advantage is its ability to extract features automatically, without manual feature engineering such as selecting points of interest in the traces. Given large training datasets, deep learning models can recognize intricate patterns in power traces that enable key recovery.

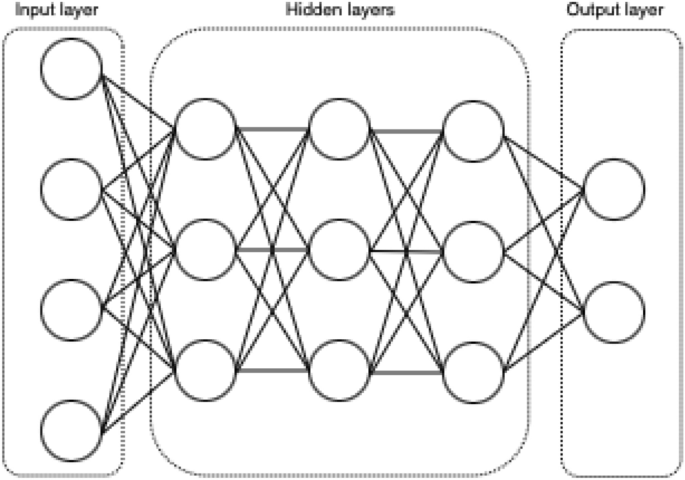

Multi-Layer Perceptron (MLP)

The MLP is a foundational type of neural network composed of multiple fully connected layers, which allows it to learn complex mappings from input data (power traces) to outputs (class probabilities over key-dependent labels). Each hidden layer applies a non-linear activation function, which lets the network learn non-linear relationships. The computation of an MLP can be written layer by layer as:

\[
a^{(l)} = \sigma \left( W^{(l)} a^{(l-1)} + b^{(l)} \right), \quad l = 1, 2, \ldots, L
\]

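where \(a^{(0)}\) is the input trace, \(W^{(l)}\) and \(b^{(l)}\) are the weights and biases of layer \(l\), and \(\sigma\) is the activation function. For classification, the last layer typically uses a softmax so that \(a^{(L)}\) is a probability distribution over the classes.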
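The layer recursion above can be sketched in NumPy as follows. This is a minimal forward pass only (no training); the choice of ReLU for hidden layers, the layer sizes, and the 700-sample trace length are illustrative assumptions, not values taken from this analysis.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - np.max(z, axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)

def mlp_forward(x, weights, biases):
    """Apply a^(l) = sigma(W^(l) a^(l-1) + b^(l)); ReLU for hidden layers, softmax for the last."""
    a = x  # a^(0) is the input trace
    num_layers = len(weights)
    for l, (W, b) in enumerate(zip(weights, biases), start=1):
        z = W @ a + b
        a = softmax(z) if l == num_layers else relu(z)
    return a

rng = np.random.default_rng(0)
trace = rng.normal(size=700)          # one toy power trace with 700 samples
sizes = [700, 200, 200, 256]          # input, two hidden layers, 256 output classes
weights = [rng.normal(scale=0.05, size=(n_out, n_in))
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]
class_probs = mlp_forward(trace, weights, biases)
print(class_probs.shape)  # (256,)
```

The output is one probability per class, which is exactly what the attack-phase key ranking consumes.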

Convolutional Neural Networks (CNN)

CNNs are another class of neural networks, designed for data with a grid-like structure, such as images (two-dimensional) or power traces (one-dimensional time series). They combine convolutional layers, pooling layers, and fully connected layers into a hierarchical feature extractor capable of discerning intricate patterns.

A convolutional layer can be expressed as:

\[
a^{(l)} = \sigma \left( \text{Conv} \left( W^{(l)}, a^{(l-1)} \right) + b^{(l)} \right), \quad l = 1, 2, \ldots, L
\]

where \(\text{Conv}(W^{(l)}, a^{(l-1)})\) denotes convolving the layer input with the learned kernels \(W^{(l)}\). Stacking such layers, typically interleaved with pooling, lets the model build progressively more abstract features.
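A one-dimensional convolutional layer of this form can be sketched in NumPy as below. It is an illustrative sketch under stated assumptions: ReLU as \(\sigma\), "valid" cross-correlation as most deep learning frameworks implement it, average pooling, and toy sizes. The final check shows that shifting the input shifts the output by the same amount, a property often cited as a reason CNNs cope with slightly misaligned traces.

```python
import numpy as np

def conv1d(W, a):
    """Valid 1-D convolution (cross-correlation).
    W: (C_out, C_in, K) kernels, a: (C_in, T) input -> (C_out, T - K + 1)."""
    c_out, _, k = W.shape
    t_out = a.shape[1] - k + 1
    out = np.zeros((c_out, t_out))
    for o in range(c_out):
        for t in range(t_out):
            out[o, t] = np.sum(W[o] * a[:, t:t + k])
    return out

def conv_layer(W, b, a):
    # a^(l) = sigma(Conv(W^(l), a^(l-1)) + b^(l)) with ReLU as sigma
    return np.maximum(conv1d(W, a) + b[:, None], 0.0)

def avg_pool(a, size):
    """Non-overlapping average pooling along time; a trailing remainder is dropped."""
    t = a.shape[1] // size
    return a[:, :t * size].reshape(a.shape[0], t, size).mean(axis=2)

rng = np.random.default_rng(0)
trace = rng.normal(size=(1, 100))            # one channel, 100 samples (toy length)
W1 = rng.normal(scale=0.3, size=(4, 1, 5))   # 4 kernels of width 5
b1 = np.zeros(4)
features = avg_pool(conv_layer(W1, b1, trace), 2)
print(features.shape)  # (4, 48)

# Translation equivariance: a 3-sample shift of the input shifts the output by 3.
shifted = np.roll(trace, 3, axis=1)
y, y_shift = conv1d(W1, trace), conv1d(W1, shifted)
print(np.allclose(y_shift[:, 3:], y[:, :-3]))  # True
```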

The SPECK Cipher: Context and Vulnerabilities

SPECK, a lightweight block cipher family introduced by the NSA in 2013, serves as a useful case study in cryptographic security because it was designed for resource-constrained environments. It supports block sizes from 32 to 128 bits and key sizes from 64 to 256 bits, making it adaptable to diverse use cases. However, like implementations of other block ciphers, SPECK implementations can be vulnerable to side-channel attacks: its round operations (modular additions, bitwise rotations, and XORs) manipulate key-dependent intermediate values, and the power consumed by these operations depends on the data being processed.

Within the context of our analysis, we focus specifically on the SPECK-32/64 variant, which uses a 32-bit block (two 16-bit words), a 64-bit key, and 22 rounds.

Round Function and Attacker’s Perspective

SPECK’s round function is built from simple add-rotate-XOR (ARX) operations. For instance, the first word is updated as:

\[
X^1_{i+1} = \left( (X^1_{i} \ggg 7) + X^0_{i} \right) \oplus k_i
\]

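Here the plaintext is split into two 16-bit words, \(X^1_0\) and \(X^0_0\); \(\ggg 7\) denotes a right rotation by 7 bits, \(+\) denotes addition modulo \(2^{16}\), and \(k_i\) is the \(i\)-th round key. The other word is then updated as \(X^0_{i+1} = (X^0_{i} \lll 2) \oplus X^1_{i+1}\). Each of these rotations, additions, and XORs can contribute to discernible power profiles. The adversary is interested in the outputs of these operations, such as \(X^1_1\), because they combine the known plaintext with the unknown round key and therefore give direct insight into it.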
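For concreteness, here is a minimal Python sketch of SPECK-32/64 encryption, including its key schedule, written from the public cipher description (16-bit words, rotation amounts 7 and 2, 22 rounds). Function names are illustrative. The key schedule reuses the round function with the round counter in place of the round key, and the sketch checks itself against the published SPECK-32/64 test vector.

```python
MASK = 0xFFFF  # SPECK-32/64 operates on 16-bit words

def ror(x, r):
    return ((x >> r) | (x << (16 - r))) & MASK

def rol(x, r):
    return ((x << r) | (x >> (16 - r))) & MASK

def speck_round(x1, x0, k):
    """One round: X^1 <- ((X^1 >>> 7) + X^0) xor k, then X^0 <- (X^0 <<< 2) xor X^1."""
    x1 = ((ror(x1, 7) + x0) & MASK) ^ k
    x0 = rol(x0, 2) ^ x1
    return x1, x0

def expand_key(key, rounds=22):
    """key = (l2, l1, l0, k0), most significant word first, as in the test vectors."""
    ks = [key[3]]                   # k_0 is the least significant key word
    l = [key[2], key[1], key[0]]    # l_0, l_1, l_2
    for i in range(rounds - 1):
        # Round function with the round index i playing the role of the key.
        l[i % 3], k_next = speck_round(l[i % 3], ks[i], i)
        ks.append(k_next)
    return ks

def encrypt(x1, x0, round_keys):
    for k in round_keys:
        x1, x0 = speck_round(x1, x0, k)
    return x1, x0

round_keys = expand_key((0x1918, 0x1110, 0x0908, 0x0100))
ct = encrypt(0x6574, 0x694C, round_keys)
print(hex(ct[0]), hex(ct[1]))  # published test vector: 0xa868 0x42f2
```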

The Attack Strategy: Unprotected vs. Protected Implementation

Unprotected Implementation

An unprotected implementation of SPECK-32/64 includes no countermeasures against side-channel leakage. Such implementations typically prioritize performance and efficiency, at the cost of making key material easier to extract. Because the power consumption of each operation depends on the data it processes (for example, on the Hamming weight of intermediate values), attackers can exploit these patterns to recover secret information.
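Against such an implementation, an attacker needs labels for the targeted intermediate value. The sketch below assumes a Hamming-weight leakage model (a common choice for software targets, but an assumption here) and targets one byte of the first-round output \(X^1_1\). Because the modular addition involves only known plaintext words and \(k_0\) enters through XOR, each byte of \(X^1_1\) depends on exactly one byte of \(k_0\), so each guess covers 256 values. The helper names and the key `0xBEEF` are hypothetical.

```python
MASK = 0xFFFF  # 16-bit words in SPECK-32/64

def ror(x, r):
    return ((x >> r) | (x << (16 - r))) & MASK

def first_round_x1(x1, x0, k0):
    """X^1_1 = ((X^1_0 >>> 7) + X^0_0) xor k_0 for plaintext words (x1, x0)."""
    return ((ror(x1, 7) + x0) & MASK) ^ k0

def hamming_weight(v):
    return bin(v).count("1")

def hw_labels(plaintexts, key_byte_guess, byte_idx=0):
    """Hypothetical HW labels (0..8) of one byte of X^1_1 under a guess for the
    matching byte of k_0; byte_idx=0 selects the least significant byte."""
    shift = 8 * byte_idx
    labels = []
    for x1, x0 in plaintexts:
        s = (ror(x1, 7) + x0) & MASK  # key-independent part of the round
        labels.append(hamming_weight(((s >> shift) & 0xFF) ^ key_byte_guess))
    return labels

pts = [(0x6574, 0x694C), (0x1234, 0xABCD), (0xFFFF, 0x0001)]
k0 = 0xBEEF  # hypothetical first round key
# Profiling labels use the known key byte; the attack evaluates every guess the same way.
print(hw_labels(pts, k0 & 0xFF) ==
      [hamming_weight(first_round_x1(a, b, k0) & 0xFF) for a, b in pts])  # True
```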

Protected Implementation

Conversely, a protected implementation adopts countermeasures to mitigate side-channel risks. These can include masking, which splits each sensitive variable into randomized shares so that no single intermediate value depends on the secret alone, or constant-time code, which removes data-dependent variations in execution time. The goal is an implementation that resists side-channel analysis while preserving as much of the cipher’s efficiency as possible.
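The idea behind masking can be illustrated with first-order Boolean masking, sketched below with hypothetical helper names. For an ARX cipher such as SPECK, this is only part of the picture: the modular addition does not commute with XOR masks, so real masked implementations also need arithmetic masking or conversions between Boolean and arithmetic masks, which this sketch does not cover.

```python
import secrets

def boolean_mask(x, bits=16):
    """Split x into two shares (x ^ m, m) using a fresh uniformly random mask m."""
    m = secrets.randbits(bits)
    return x ^ m, m

def unmask(shares):
    masked, m = shares
    return masked ^ m

def masked_share_values(x, bits=8):
    """All values the masked share x ^ m takes as m ranges over every possible mask."""
    return sorted(x ^ m for m in range(1 << bits))

shares = boolean_mask(0xBEEF)
print(unmask(shares) == 0xBEEF)  # True
# The masked share is uniformly distributed whatever the secret, so on its own it
# reveals nothing; an attacker must combine leakage from both shares.
print(masked_share_values(0x00) == masked_share_values(0xA5))  # True
```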

Ensemble Learning in Profiling Attacks

Ensemble learning enhances the robustness of side-channel analysis by aggregating the predictions of multiple models. Combining models reduces variance and mitigates overfitting, which helps predictions generalize to traces not seen during training.

Ensemble methods such as bagging, boosting, and stacking all lend themselves to this analysis. Ensembles of deep learning models have also shown promise, strengthening attacks on modern cryptographic implementations by combining the complementary strengths of diverse models.
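The simplest way to aggregate predictions is to average the class probabilities of several models (soft voting), as done when combining bagged models; boosting and stacking combine models differently and are not shown. The sketch below assumes each model outputs a \((Q, C)\) probability array; the function name and example values are hypothetical. The combined probabilities can feed the same log-likelihood key ranking used for a single model.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Weighted average of per-model class probabilities.

    prob_list: list of M arrays, each (Q, C), one per model
    weights:   optional length-M non-negative weights (uniform if omitted)
    """
    stacked = np.stack(prob_list)                  # (M, Q, C)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()              # normalize so rows still sum to 1
    return np.tensordot(weights, stacked, axes=1)  # (Q, C)

model_a = np.array([[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]])
model_b = np.array([[0.5, 0.4, 0.1], [0.3, 0.3, 0.4]])
combined = soft_vote([model_a, model_b])
print(combined)
```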

Through these advancements, attackers can mount more sophisticated attacks on well-established cryptographic algorithms, which continues to challenge the security of widely implemented systems.