Advanced Topic Modeling and Classification Techniques for Predicting Software Defects

Introduction to Software Defect Prediction

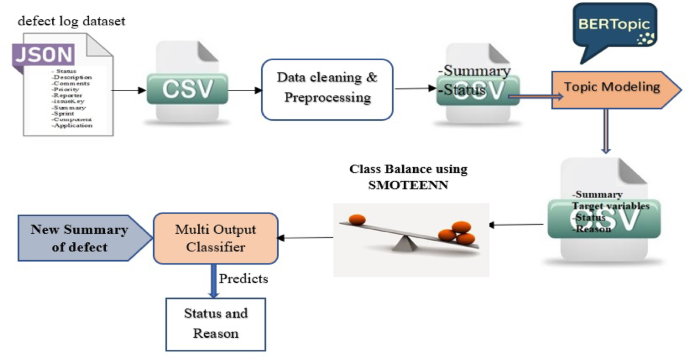

Software defect prediction is a critical task in software engineering, aimed at improving the reliability and quality of software products. Traditional methods relied primarily on manual reviews and basic statistical analyses. Advances in machine learning and natural language processing (NLP) now make it possible to apply more sophisticated methods, such as learning directly from the text of defect reports. Advanced topic modeling and classification techniques, in particular BERTopic, enable teams not only to predict software defects but also to uncover their underlying root causes.

Understanding BERTopic for Topic Modeling

At the core of this approach is BERTopic, a topic modeling technique that builds on embeddings from BERT (Bidirectional Encoder Representations from Transformers) and related transformer models. These embeddings capture the semantic context of defect reports, so reports that describe the same problem in different words can still be grouped together. The resulting topics are summarized by keywords, which makes them easy for developers to interpret.

The Multi-Output Classifier Framework

A multi-output classifier plays a central role in software defect prediction. Whereas a traditional classifier predicts a single target, a multi-output model predicts several targets for each input at once, such as defect presence and root cause. Topic labels derived from BERTopic can serve as the root-cause targets, which is how the approach combines topic modeling with classification.

The multi-output classifier can be built on various base classifiers, including Logistic Regression (LR), Decision Trees (DT), K-Nearest Neighbors (KNN), and Random Forests (RF). Using several models, and combining them in an ensemble, can improve accuracy and offers complementary perspectives on defect causation.

Data Preparation and Preprocessing

Successful application of these models begins with careful data preparation. Defect log data often arrives in JSON format; it is first converted into a flat CSV table, which is easier to load and process with standard tools. Preprocessing then cleans and normalizes the text, tokenizes it, and removes stopwords that contribute little meaning.
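A minimal sketch of the JSON-to-CSV step, using only Python's standard library. It assumes the export is a JSON array of flat objects; the field names `id`, `summary`, `status`, and `reason` and the two sample records are hypothetical and should be replaced with whatever the defect tracker actually emits.

```python
import csv
import io
import json

# Hypothetical column names; adjust to the fields your defect tracker actually exports.
FIELDS = ["id", "summary", "status", "reason"]


def defect_json_to_csv(json_text: str) -> str:
    """Flatten a JSON array of defect records into CSV text, one row per defect."""
    records = json.loads(json_text)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for record in records:
        # Keep only the known columns; a missing key becomes an empty cell.
        writer.writerow({field: record.get(field, "") for field in FIELDS})
    return buffer.getvalue()


# Hypothetical sample records for illustration.
sample = json.dumps([
    {"id": 101, "summary": "Scroll bar misaligned on desktop", "status": "Invalid", "reason": "As per Design"},
    {"id": 102, "summary": "Login fails with expired cert", "status": "Valid"},
])
print(defect_json_to_csv(sample))
```

To write a file instead of a string, pass a file opened with `newline=""` to `csv.DictWriter`, as the `csv` module documentation recommends.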

Class imbalance is addressed with SMOTEENN, which combines SMOTE (Synthetic Minority Over-sampling Technique) with ENN (Edited Nearest Neighbors). SMOTE generates synthetic samples for underrepresented defect categories, and ENN then removes samples that conflict with their neighbors. Because it works on numeric feature vectors, this resampling is applied after the text has been vectorized. Balancing the class distributions helps the models identify defects and their causes without being dominated by the most frequent categories.

Textual Data Preprocessing Steps

The textual preprocessing phase encompasses several crucial steps:

- Lowercasing and Removal of Bracket Content: Standardizing text improves uniformity and reduces the complexity of the feature space.

- Removal of Punctuation and Alphanumeric Tokens: Punctuation and tokens that mix letters with digits (such as IDs or version numbers) are removed as noise, keeping the focus on meaningful words.

- Duplicate Removal: This process ensures that repeated entries do not skew the learning process.

- Tokenization: Text is split into individual tokens (words), the units that the later steps and the model operate on.

- Stopword Removal: High-frequency function words that add little semantic value are eliminated.

- Stemming: Words are reduced to their stems (for example, “failed” and “failing” both become “fail”), so variants of the same word are counted together.

- Document Reconstruction: Finally, the stemmed tokens are joined back into one normalized string per document, ready for vectorization.
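The steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the pipeline of any particular study: the stopword list is a tiny hypothetical sample, "alphanumeric tokens" is interpreted as tokens containing digits, bracket removal covers square brackets only, and `crude_stem` is a simplistic suffix stripper standing in for a real stemmer such as NLTK's `PorterStemmer`.

```python
import re

# Tiny illustrative stopword list; a real pipeline would use a fuller one (e.g. NLTK's).
STOPWORDS = {"a", "an", "and", "be", "for", "in", "is", "of", "on", "the", "to", "when", "with"}


def crude_stem(token: str) -> str:
    """Strip one common suffix; a simplistic stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def clean(text: str) -> str:
    text = text.lower()                    # lowercasing
    text = re.sub(r"\[.*?\]", " ", text)   # drop [bracketed] content
    text = re.sub(r"[^\w\s]", " ", text)   # drop punctuation
    text = re.sub(r"\w*\d\w*", " ", text)  # drop tokens that contain digits
    return " ".join(text.split())          # collapse whitespace


def preprocess(summaries: list[str]) -> list[str]:
    # Duplicate removal on the cleaned text, keeping the first occurrence.
    unique = list(dict.fromkeys(c for c in map(clean, summaries) if c))
    docs = []
    for text in unique:
        tokens = text.split()                               # tokenization
        tokens = [t for t in tokens if t not in STOPWORDS]  # stopword removal
        tokens = [crude_stem(t) for t in tokens]            # stemming
        docs.append(" ".join(tokens))                       # document reconstruction
    return docs


print(preprocess([
    "[UI] Scroll bar is misaligned on desktop!",
    "[UI] scroll bar is misaligned on desktop",
    "Login fails for user123 with expired certs",
]))
# → ['scroll bar misalign desktop', 'login fail expir cert']
```

The second summary disappears because it is identical to the first once cleaned, and `user123` is dropped as an alphanumeric token.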

Topic Modeling with BERTopic

BERTopic provides a powerful framework for extracting meaningful topics from defect logs. Its pipeline begins with two key components:

- BERT Embeddings: A BERT-based language model encodes each defect summary as a high-dimensional vector that reflects its contextual meaning, so summaries with similar meaning lie close together and can be clustered.

- Dimensionality Reduction (UMAP): Because clustering becomes less reliable in very high-dimensional spaces, UMAP (Uniform Manifold Approximation and Projection) reduces the embeddings to a small number of dimensions while aiming to preserve their local neighborhood structure and, to a lesser extent, their global structure.
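Clustering in embedding space depends on a measure of closeness, and cosine similarity is a common choice for sentence embeddings (BERTopic's default UMAP settings use a cosine metric, as far as we know). The sketch below uses made-up four-dimensional vectors purely as stand-ins; real BERT-based embeddings have hundreds of dimensions and come from a model rather than being typed by hand.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy "embeddings"; the values are invented for illustration only.
scroll_bug = [0.9, 0.1, 0.0, 0.2]  # "Scroll bar misaligned on desktop"
layout_bug = [0.8, 0.2, 0.1, 0.3]  # "Page layout broken in desktop view"
login_bug = [0.1, 0.9, 0.3, 0.0]   # "Login fails with expired certificate"

print(round(cosine_similarity(scroll_bug, layout_bug), 2))  # → 0.98
print(round(cosine_similarity(scroll_bug, login_bug), 2))   # → 0.2
```

The two layout-related summaries score far higher than the unrelated login summary, which is the property the clustering step relies on.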

Clustering with HDBSCAN

Once the data is embedded and reduced, it is clustered, usually with HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise), BERTopic's default clustering algorithm. This density-based method does not require the number of clusters in advance: it groups points that lie in dense regions and labels points in sparse regions as outliers instead of forcing them into a cluster. Each resulting cluster becomes a topic, ideally reflecting a specific root-cause area.
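HDBSCAN builds a hierarchy of clusters across density levels and is best used through a library (BERTopic relies on the `hdbscan` package by default, as far as we know). To show only the core density idea, the sketch below implements the simpler DBSCAN, a related but swapped-in algorithm that uses a fixed radius `eps`: points with at least `min_samples` neighbors within `eps` seed clusters, clusters grow through dense neighborhoods, and isolated points get the label -1, the same outlier convention HDBSCAN and BERTopic use. The 2-D points are hypothetical stand-ins for reduced embeddings.

```python
import math


def dbscan(points, eps, min_samples):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # All points within eps of points[i], including the point itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1  # points[i] is a core point: start a new cluster
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point becomes a border point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_samples:
                queue.extend(reachable)  # j is also a core point: keep growing
    return labels


# Hypothetical 2-D points standing in for reduced embeddings.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1), (10.0, 0.0)]
print(dbscan(points, eps=0.5, min_samples=2))  # → [0, 0, 0, 1, 1, 1, -1]
```

Unlike this sketch, HDBSCAN does not need a hand-picked `eps`, which is one reason it suits defect data whose clusters vary in density.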

Interpreting Clusters into Topics

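After clustering, BERTopic describes each topic with representative keywords ranked by c-TF-IDF (class-based TF-IDF), which treats all documents in a cluster as a single document when scoring terms. The keywords give a human-readable view of what each group of defects is about. For example: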

- Topic 0: Keywords like “incorrect,” “app,” and “cert” relate to data handling issues, leading to a label of ‘Test Data Issue.’

- Topic 1: Words such as “scroll,” “desktop,” and “design” signal alignment issues with user interface design, classified under ‘As per Design.’

- Topic 2: With terms like “duplicate” and “close,” this topic captures reports that were closed as duplicates of existing defects, labeled ‘Duplicates.’

- Topic 3: Keywords related to content management and publishing activities lead to the classification of ‘Test Environment.’
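The keyword step can be sketched with a class-based TF-IDF. The weighting below follows the formula described in the BERTopic documentation as we understand it: a term's frequency within a topic (normalized by the topic's length), multiplied by log(1 + A / f), where A is the average number of words per topic and f is the term's frequency across all topics. It is a simplified illustration rather than BERTopic's implementation, and the grouped documents are hypothetical. Note how `issue`, which appears in both topics, is pushed out of the top keywords.

```python
import math
from collections import Counter


def c_tf_idf_keywords(docs_per_topic, top_n=3):
    """Return the top_n keywords per topic using a class-based TF-IDF weighting."""
    # Treat all documents of a topic as one "class document" and count its words.
    class_counts = {
        topic: Counter(" ".join(docs).split()) for topic, docs in docs_per_topic.items()
    }
    total_counts = Counter()  # f(t): frequency of each term across all topics
    for counts in class_counts.values():
        total_counts.update(counts)
    avg_words = sum(total_counts.values()) / len(class_counts)  # A

    keywords = {}
    for topic, counts in class_counts.items():
        length = sum(counts.values())
        scores = {
            term: (count / length) * math.log(1 + avg_words / total_counts[term])
            for term, count in counts.items()
        }
        # Highest score first; ties broken alphabetically for a stable result.
        keywords[topic] = sorted(scores, key=lambda t: (-scores[t], t))[:top_n]
    return keywords


# Hypothetical preprocessed summaries, already grouped by cluster.
docs = {
    0: ["incorrect app cert issue", "incorrect app data"],
    1: ["scroll desktop design issue", "scroll design"],
}
print(c_tf_idf_keywords(docs))
# → {0: ['app', 'incorrect', 'cert'], 1: ['design', 'scroll', 'desktop']}
```

A human then reads each keyword list and assigns a label such as ‘Test Data Issue’ or ‘As per Design.’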

The Use of Class Balancing Techniques: SMOTEENN

SMOTEENN addresses class imbalance in two stages. SMOTE first creates synthetic minority-class samples by interpolating between a minority sample and one of its nearest minority-class neighbors. ENN then removes samples whose label disagrees with the majority of their nearest neighbors, cleaning up noisy points near class boundaries. The result is a more balanced and less noisy training set, which makes the classifiers more reliable when certain defect types are underrepresented.
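A compact sketch of the two stages on numeric feature vectors, in plain Python. It illustrates the technique and is not imbalanced-learn's `SMOTEENN` (which is what one would normally use): it assumes a binary problem, uses k = 3 neighbors for both stages, and applies the classic majority-vote ENN rule, so library defaults may differ. The toy data and labels are hypothetical. In practice, resampling is applied to the training split only, so that synthetic points never leak into evaluation.

```python
import math
import random
from collections import Counter


def nearest(points, i, k):
    """Indices of the k points closest to points[i], excluding i itself."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return others[:k]


def smote(minority, n_new, k, rng):
    """Create n_new synthetic points between minority samples and their minority neighbors.

    Requires at least two minority samples.
    """
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        j = rng.choice(nearest(minority, i, k))
        gap = rng.random()  # random position on the segment from minority[i] to minority[j]
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(minority[i], minority[j])))
    return synthetic


def enn(X, y, k):
    """Keep only samples whose label matches the majority label of their k nearest neighbors."""
    kept = [i for i in range(len(X))
            if Counter(y[j] for j in nearest(X, i, k)).most_common(1)[0][0] == y[i]]
    return [X[i] for i in kept], [y[i] for i in kept]


def smoteenn(X, y, minority_label, k=3, seed=0):
    rng = random.Random(seed)
    minority = [x for x, label in zip(X, y) if label == minority_label]
    # Binary case: oversample the minority class up to the size of the majority class.
    n_new = max(len(y) - 2 * len(minority), 0)
    new = smote(minority, n_new, k, rng)
    return enn(list(X) + new, list(y) + [minority_label] * len(new), k)


# Hypothetical 2-D feature vectors with an imbalanced label distribution.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (0.2, 0.8), (5, 5), (5, 6), (6, 5)]
y = ["valid"] * 6 + ["invalid"] * 3
X_res, y_res = smoteenn(X, y, minority_label="invalid")
print(Counter(y_res))  # → Counter({'valid': 6, 'invalid': 6})
```

Here the two classes are well separated, so ENN keeps everything; a point sitting inside the other class's region would be removed.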

Multi-Output Classifier Implementation

The architecture uses a multi-output classifier that predicts defect status (e.g., valid or invalid) and defect reason (e.g., design flaws, duplicates) together for each report. A single-output classifier predicts only one of these targets, so the multi-output approach gives a fuller picture of each defect.
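The idea can be sketched as a small wrapper that trains one base classifier per target and zips their predictions back together, which is also how scikit-learn's `MultiOutputClassifier` is documented to work. The minimal `KNN` base classifier, the 2-D feature vectors, and the (status, reason) label pairs are all hypothetical; in the described pipeline the features would come from the preprocessed defect text.

```python
import math
from collections import Counter


class KNN:
    """Minimal k-nearest-neighbors classifier: majority vote among the k closest training points."""

    def __init__(self, k: int = 3):
        self.k = k

    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict(self, X):
        predictions = []
        for x in X:
            order = sorted(range(len(self.X)), key=lambda i: math.dist(x, self.X[i]))
            votes = Counter(self.y[i] for i in order[: self.k])
            predictions.append(votes.most_common(1)[0][0])
        return predictions


class MultiOutput:
    """Train one independent base classifier per target column."""

    def __init__(self, make_estimator):
        self.make_estimator = make_estimator  # factory returning a fresh, unfitted classifier

    def fit(self, X, Y):
        # Y holds one tuple of targets per sample, e.g. (status, reason).
        columns = list(zip(*Y))
        self.estimators = [self.make_estimator().fit(X, column) for column in columns]
        return self

    def predict(self, X):
        per_target = [estimator.predict(X) for estimator in self.estimators]
        return list(zip(*per_target))  # back to one tuple of predictions per sample


# Hypothetical 2-D features and (status, reason) labels.
X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.9, 5.2)]
Y = [("Invalid", "Duplicates")] * 3 + [("Valid", "Design Flaw")] * 3

model = MultiOutput(lambda: KNN(k=3)).fit(X, Y)
print(model.predict([(0.05, 0.1), (5.0, 5.1)]))
# → [('Invalid', 'Duplicates'), ('Valid', 'Design Flaw')]
```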

Each base classifier has distinct strengths, so choosing the classifier that best fits each target variable can lead to more accurate predictions and, in turn, better defect management. For example:

- Logistic Regression (LR): A fast, interpretable linear model that works well when the classes are roughly linearly separable in the feature space.

- Decision Trees (DT): These capture non-linear relationships effectively for categorical data.

- KNN: It labels a report by the classes of its most similar reports, producing flexible, local decision boundaries; this suits data in which similar defect types cluster together.

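- Random Forests (RF): This ensemble of decision trees, each trained on a bootstrap sample with random feature subsets, averages out the noise of individual trees and is less prone to overfitting than a single tree.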

- Voting Classifier: This combines the predictions of several classifiers, either by majority vote (hard voting) or by averaging predicted probabilities (soft voting), so that the errors of individual models can be outvoted.
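Hard voting is simple enough to sketch directly: each base model casts one vote per sample, and the most common label wins. The sketch assumes the base models (for instance LR, DT, and KNN) have already produced label lists; the predictions shown are hypothetical. In scikit-learn the same idea is available as `VotingClassifier` with `voting="hard"`, while `voting="soft"` averages predicted probabilities instead.

```python
from collections import Counter


def hard_vote(predictions_per_model: list[list[str]]) -> list[str]:
    """Majority vote across models for each sample.

    Ties go to the label encountered first, i.e. the earlier model in the list.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions_per_model)]


# Hypothetical outputs of three already-trained base classifiers for three defect reports.
lr_preds = ["Valid", "Invalid", "Invalid"]
dt_preds = ["Valid", "Valid", "Invalid"]
knn_preds = ["Invalid", "Valid", "Invalid"]

print(hard_vote([lr_preds, dt_preds, knn_preds]))  # → ['Valid', 'Valid', 'Invalid']
```

With an odd number of models and two classes, ties cannot occur; the tie rule matters only for even counts or more classes.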

By combining BERTopic topic modeling with a multi-output classification approach, software development teams can substantially improve their defect management processes. These models help predict whether a reported defect is valid, point to its likely root cause, and inform preventive measures, supporting continuous improvement in software engineering.