Monitoring Data and Pre-processing in Tunnel Construction Projects

Introduction to Monitoring Datasets

Monitoring data plays a pivotal role in managing and assessing the safety and performance of construction projects. This is especially true for complex structures like tunnels, where deformation measurements can provide critical insights into structural integrity during construction. In this context, the dataset for Tunnel G, collected from April 2022 to March 2023, consists of 300 representative deformation measurements across various construction methods and monitoring sections.

Table 1: Summary of Monitoring Data Source Sections

This table outlines the different monitoring sections utilized in the Tunnel G construction project, detailing the various methods employed throughout the process.

The Problem of Outliers and Noise Reduction

One significant challenge in working with monitoring data is the presence of outliers, which can distort model training and degrade the quality of data fitting. Models trained on data containing such anomalous points tend to generalize poorly, so effective preprocessing is essential.

To tackle this challenge, the quadratic exponential smoothing method is used. This technique, rooted in time series analysis and forecasting, assigns greater weight to recent data points and progressively less weight to older ones, which damps noise and improves the quality of the dataset.

Equations for Quadratic Exponential Smoothing

The methods for calculating the predicted value and horizontal value can be expressed using the following equations:

- Predicted Value:

$$ F_{t} = \alpha \cdot Y_{t} + L_{t-1} \cdot (1-\alpha) \quad (26) $$

- Horizontal Value:

$$ L_{t} = \alpha \cdot Y_{t} + L_{t-1} \cdot (1-\alpha) \quad (27) $$

In these definitions:

- ( F_t ) is the predicted value at time ( t ).

- ( \alpha ) represents the smoothing parameter, which ranges from 0 to 1.

- ( Y_t ) is the observed value at time ( t ).

- ( L_{t-1} ) is the horizontal value at the previous moment.
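The recursion in Equation (27) can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: the function names, the initialization L_0 = Y_0, and the sample readings are assumptions. The text does not spell out what makes the method "quadratic"; running the same recursion a second time over its own output is one common reading and is labeled as an assumption below.

```python
def exponential_smooth(observations, alpha):
    """Apply L_t = alpha * Y_t + (1 - alpha) * L_{t-1} along a series."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if not observations:
        return []
    levels = [float(observations[0])]  # assumption: start with L_0 = Y_0
    for y in observations[1:]:
        levels.append(alpha * y + (1.0 - alpha) * levels[-1])
    return levels


def second_pass_smooth(observations, alpha):
    """Assumed 'quadratic' variant: smooth the already smoothed series again."""
    return exponential_smooth(exponential_smooth(observations, alpha), alpha)


readings_mm = [1.0, 2.0, 3.0]  # made-up deformation readings
print(exponential_smooth(readings_mm, 0.5))   # [1.0, 1.5, 2.25]
print(second_pass_smooth(readings_mm, 0.5))   # [1.0, 1.25, 1.75]
```

A larger ( \alpha ) tracks the observations more closely, while a smaller one suppresses more noise at the cost of lagging behind real trends.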

Data Normalization

Once noise is reduced, the next step involves data normalization. The monitoring data are linearly scaled to fall within the range of [0, 1]. This ensures that the input features are on comparable scales, facilitating more effective model training. The normalization formula is presented as follows:

$$ X_{n} = \frac{X - X_{min}}{X_{max} - X_{min}} \quad (28) $$

Here:

- ( X ) is the original value.

- ( X_n ) is the normalized value.

- ( X_{max} ) and ( X_{min} ) are the maximum and minimum values of the original dataset, respectively.
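Equation (28) translates directly into code. The sketch below is illustrative only: the function names, the handling of a constant series, and the inverse mapping (useful for turning predictions back into millimetres) are assumptions that the text does not describe.

```python
def min_max_normalize(values):
    """Scale values linearly into [0, 1] following Equation (28)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant series has no spread, so map it to 0.0.
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


def denormalize(normalized, lo, hi):
    """Invert Equation (28) to recover values in the original units."""
    return [x * (hi - lo) + lo for x in normalized]


raw_mm = [2.0, 4.0, 6.0]  # made-up readings
scaled = min_max_normalize(raw_mm)
print(scaled)                         # [0.0, 0.5, 1.0]
print(denormalize(scaled, 2.0, 6.0))  # [2.0, 4.0, 6.0]
```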

Dataset Division for Training and Testing

To prevent overfitting and enhance the model’s generalization and stability, the normalized dataset is divided into training and testing subsets. A common ratio used is 70% of the data for training and 30% for testing. This separation allows for an accurate evaluation of the model’s performance.
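A 70/30 division can be sketched as follows. The text does not say whether the split is random or chronological; keeping the time order, so that the model is tested on the most recent readings, is a common choice for time series and is an assumption here, as is the function name.

```python
def chronological_split(series, train_ratio=0.7):
    """Split a series into leading training and trailing testing parts."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must lie strictly between 0 and 1")
    cut = round(len(series) * train_ratio)  # round() avoids float truncation
    return series[:cut], series[cut:]


samples = list(range(300))  # stand-in for 300 normalized measurements
train, test = chronological_split(samples)
print(len(train), len(test))  # 210 90
```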

Deep Learning Model Construction

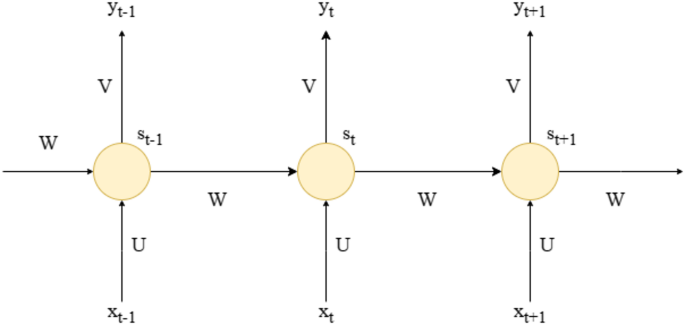

When it comes to deep learning architectures, various models exist tailored for different tasks and data types. Notable models include Feedforward Neural Networks (FNN), Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Generative Adversarial Networks (GAN), Graph Neural Networks (GNN), and Attention Mechanisms. Each architecture comes with unique characteristics suited for specific applications, as summarized in Table 2 below.

Table 2: Classification of Neural Networks by Network Structure

This table categorizes various neural networks based on their structural characteristics and intended applications.

Steps for Model Construction

For the purpose of this study, deep learning models such as RNN, Long Short-Term Memory networks (LSTM), Gated Recurrent Units (GRU), and CNN-GRU models are employed. The general steps taken for constructing these models include:

- Data Preparation: Collecting high-quality datasets, eliminating redundant or noisy entries, and extracting relevant multidimensional features.

- Normalization: This step, as outlined above, ensures that input features are on comparable scales.

- Model Architecture Design: Selecting and designing an appropriate model architecture tailored to the task requirements, which involves choosing suitable activation functions, loss functions, and optimizers.

- Dataset Division: Categorizing the dataset into training, validation, and test subsets, often employing mini-batch training for enhanced convergence speed.

- Model Training: Configuring parameters like learning rate, batch size, and number of epochs, and monitoring training using loss functions and evaluation metrics to prevent overfitting.

- Model Evaluation: Assessing model performance on the test set using various metrics, followed by plotting loss and accuracy curves.

- Model Optimization: Refining the model architecture, tuning hyperparameters, and implementing regularization techniques to improve overall performance.
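The training step mentions monitoring loss to prevent overfitting without naming a specific safeguard. Early stopping on the validation loss is one common option; the sketch below assumes that technique, and the function name, the `patience` parameter, and the loss values are all hypothetical.

```python
def early_stopping_epoch(val_losses, patience=5):
    """Return (stop_epoch, best_epoch) for a recorded validation-loss history.

    Training would stop once the loss has not improved for `patience`
    consecutive epochs; the weights from `best_epoch` would be kept.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses):
        if loss < val_losses[best_epoch]:
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


history = [1.0, 0.8, 0.6, 0.65, 0.7, 0.66, 0.9]  # made-up validation losses
print(early_stopping_epoch(history, patience=3))  # (5, 2)
```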

Integrating Bionic Algorithms for Model Enhancement

Bionic algorithms, inspired by biological processes and ecological behaviors, provide innovative optimization methods for enhancing deep learning models.
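As a concrete illustration, the Whale Optimization Algorithm (WOA), which the steps below use for hyperparameter tuning, can be sketched in plain Python. This is a simplified version of the commonly published update rules (encircling, random search, and a spiral move with spiral constant 1), not the configuration used in the study. The function name, population size, iteration count, and the toy objective standing in for a validation error are all assumptions.

```python
import math
import random


def woa_minimize(objective, bounds, n_whales=20, n_iter=100, seed=0):
    """Minimize `objective` inside box `bounds` with a basic WOA loop."""
    rng = random.Random(seed)

    def clip(pos):
        return [min(max(x, lo), hi) for x, (lo, hi) in zip(pos, bounds)]

    whales = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_whales)]
    best_pos = min(whales, key=objective)[:]
    best_val = objective(best_pos)
    history = [best_val]

    for it in range(n_iter):
        a = 2.0 * (1.0 - it / n_iter)  # shrinks linearly from 2 towards 0
        for i, pos in enumerate(whales):
            A = 2.0 * a * rng.random() - a
            C = 2.0 * rng.random()
            if rng.random() < 0.5:
                # Encircle the best whale if |A| < 1, else explore near a random one.
                ref = best_pos if abs(A) < 1.0 else rng.choice(whales)
                new = [r - A * abs(C * r - x) for r, x in zip(ref, pos)]
            else:
                # Spiral (bubble-net) move around the best whale.
                ell = rng.uniform(-1.0, 1.0)
                spiral = math.exp(ell) * math.cos(2.0 * math.pi * ell)
                new = [abs(bp - x) * spiral + bp for bp, x in zip(best_pos, pos)]
            whales[i] = clip(new)
            val = objective(whales[i])
            if val < best_val:
                best_pos, best_val = whales[i][:], val
        history.append(best_val)
    return best_pos, best_val, history


def toy_loss(params):
    # Hypothetical stand-in for "validation error vs. two hyperparameters".
    filters, kernel_size = params
    return (filters - 32.0) ** 2 / 100.0 + (kernel_size - 3.0) ** 2


search_space = [(8.0, 128.0), (1.0, 7.0)]  # assumed ranges, for illustration
best, score, trace = woa_minimize(toy_loss, search_space)
print(best, score)
```

In a real tuning run the objective would train a model with the candidate hyperparameters and return its validation error, which makes each evaluation expensive; integer-valued hyperparameters would also need rounding before use.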

Steps for Bionic Optimization

- Creating a Time-Series Dataset: Transform the data into a suitable format for CNN-GRU modeling.

- Model Configuration: Import and configure the Sequential model from Keras, adjusting parameters like the number of convolutional kernels and kernel size.

- Training the Model: Train the model while evaluating the current solutions and continuously adjusting parameters based on the performance outcomes. If a certain accuracy is not achieved, training is continued until the criteria are met.

- Hyperparameter Optimization: Employ techniques like the Whale Optimization Algorithm (WOA) to fine-tune model parameters.

- Final Model Training: Utilize the WOA-optimized parameters to train the final model, closely monitoring loss functions and accuracy.

- Model Saving: Once training concludes, the optimal model weights are stored for future use.
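The first two steps above can be sketched as follows. The text states only that a Keras Sequential model is configured with a number of convolutional kernels and a kernel size; the sliding-window framing, the specific layers, their sizes, the optimizer, and the loss in this sketch are assumptions. Keras is imported inside the builder so that the windowing helper works without TensorFlow installed.

```python
def make_windows(series, window):
    """Turn a 1-D series into samples: `window` past values -> next value."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])
        targets.append(series[start + window])
    return inputs, targets


def build_cnn_gru(window, filters=32, kernel_size=3, gru_units=64):
    """Assemble a small Conv1D + GRU regressor (layer choices are assumptions)."""
    from tensorflow import keras  # requires TensorFlow; imported lazily

    model = keras.Sequential([
        keras.Input(shape=(window, 1)),
        keras.layers.Conv1D(filters, kernel_size, padding="same", activation="relu"),
        keras.layers.MaxPooling1D(pool_size=2),
        keras.layers.GRU(gru_units),
        keras.layers.Dense(1),  # single-step deformation forecast
    ])
    model.compile(optimizer="adam", loss="mse")
    return model


x, y = make_windows([0.1, 0.2, 0.3, 0.4, 0.5], window=3)
print(x)  # [[0.1, 0.2, 0.3], [0.2, 0.3, 0.4]]
print(y)  # [0.4, 0.5]
```

Before training, the window lists would need to be converted to an array of shape (samples, window, 1). The `filters` and `kernel_size` arguments are the kind of parameters a WOA search could tune.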

Evaluating Forecasting Effectiveness

Evaluation metrics play a crucial role in understanding model performance. In this analysis, the root mean square error (RMSE) and mean absolute percentage error (MAPE) serve as indicators to quantify the errors between predicted and actual values. The formulas used for these metrics are:

- RMSE:

$$ RMSE = \left[\frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \right]^{\frac{1}{2}} \quad (29) $$

- MAPE:

$$ MAPE = \left(\frac{1}{n} \sum_{i=1}^{n} \frac{|y_i - \hat{y}_i|}{y_i}\right) \times 100\% \quad (30) $$

Here, ( n ) represents the total number of samples, and ( y_i ) and ( \hat{y}_i ) denote the actual and predicted values of the ( i )-th sample, respectively.
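Both metrics are short enough to compute by hand, as in the sketch below. The function names and sample values are made up. One deliberate deviation is labeled in the code: the denominator of MAPE uses the absolute actual value so that negative readings (for example, settlement recorded as negative) do not flip the sign of the error, which is an assumption beyond the formula as printed.

```python
import math


def rmse(actual, predicted):
    """Root mean square error, in the same units as the data."""
    n = len(actual)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)) / n)


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Assumption: divides by |y| rather than y. An actual value of zero
    raises ZeroDivisionError, since MAPE is undefined in that case.
    """
    n = len(actual)
    return 100.0 * sum(abs(y - p) / abs(y) for y, p in zip(actual, predicted)) / n


actual_mm = [100.0, 200.0]     # made-up actual values
predicted_mm = [110.0, 190.0]  # made-up predictions
print(rmse(actual_mm, predicted_mm))  # 10.0
print(mape(actual_mm, predicted_mm))  # approximately 7.5
```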

Comparison of Model Performance

To validate the predictive performance of the WOA-CNN-GRU model, it is compared against the RNN, LSTM, GRU, and CNN-GRU models. The parameter settings of each model are listed in Table 3.

Table 3: Comparison of Model Parameter Settings

This table illustrates the parameter configurations for both baseline models and the WOA-CNN-GRU model, facilitating a detailed comparison of their performance.

Training Accuracy Assessment

The WOA-CNN-GRU model is evaluated across different construction methods and monitoring sections. The surface settlement dataset DK24 + 985:Z1, collected from a step-method section, serves as the input data for this evaluation. Initial training results show that the model achieves over 95% prediction accuracy in surface settlement forecasting, as illustrated by the training results (Figure 11) and the loss function curves (Figure 12).

Figure 11: Training Results of Different Models

This visual representation compares the training outcomes across various models employed in the study.

Figure 12: Loss Function Curves of Different Models

Loss function curves effectively illustrate the training dynamics of the models under consideration.

According to the results presented in Table 4, the WOA-CNN-GRU model achieves an RMSE of 0.1257 mm, significantly lower than that of the other models, and a MAPE of 0.51%, indicating strong relative accuracy as well.

Performance Validation

The trained WOA-CNN-GRU model is applied across various monitoring data sources, revealing that RMSE and MAPE consistently remain below thresholds of 1 mm and 1%, respectively, as illustrated in Table 5. This highlights the model’s exceptional reliability and effectiveness in forecasting surrounding rock deformations during the high-speed rail tunnel construction process, ultimately contributing to enhanced construction safety and efficiency.

This study has examined monitoring data, preprocessing techniques, several deep learning models, and the application of bionic algorithms to predicting deformations in construction contexts. Through systematic evaluation and comparison, the results underscore the value of accurate forecasting for improving both safety and efficiency in engineering projects.