Understanding the Model Framework for Time Series Prediction in Coal Spontaneous Combustion

In recent years, interest has grown in using machine learning to predict coal spontaneous combustion. A well-constructed model framework is essential for accurate predictions. This article walks through a model that processes known and labeled time series data to predict indicators of coal spontaneous combustion, and then relates that model to the coal pyrolysis mechanisms behind the data.

Data Input: Slicing and Dicing Time Series

The model’s input consists of slices that contain both known and labeled time series information. Each slice is a structured sequence of data points that the later stages of the architecture consume. Constructing these slices carefully is crucial, because it determines which temporal patterns the model can learn.
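One common way to build such slices is a sliding window. The sketch below is illustrative only: it assumes that the "known" part of a slice is a fixed-length window of past readings and that the "label" is the reading that follows it. The function name `make_slices`, the `window` and `horizon` parameters, and the temperature values are all hypothetical.

```python
def make_slices(series, window, horizon=1):
    """Split a series into (known, label) pairs using a sliding window.

    known: `window` consecutive readings
    label: the reading `horizon` steps after the end of the window
    """
    slices = []
    for start in range(len(series) - window - horizon + 1):
        known = series[start:start + window]
        label = series[start + window + horizon - 1]
        slices.append((known, label))
    return slices


# Made-up temperature readings, for illustration only.
temps = [30.0, 31.5, 33.2, 35.1, 37.4, 40.0]
pairs = make_slices(temps, window=3)

for known, label in pairs:
    print(known, "->", label)
```

A longer window gives the model more context per slice but leaves fewer slices to train on, so the window length is itself a design choice.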

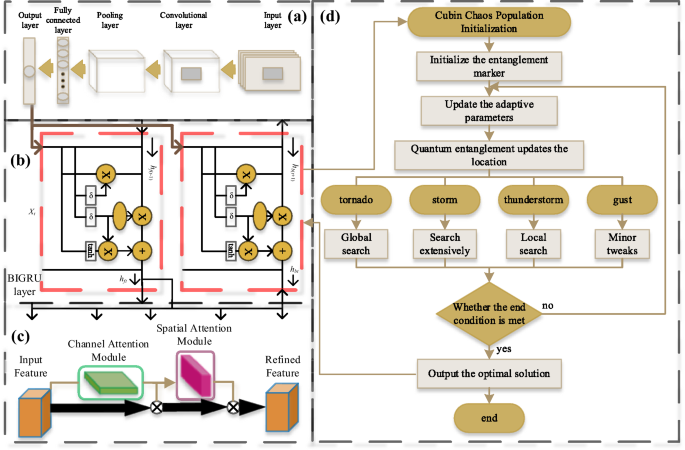

Convolutional Neural Network (CNN): Extracting Local Features

The slices first pass through a one-dimensional convolutional neural network (CNN). A Conv1D layer with 64 filters and a kernel size of 2 extracts local features, capturing short-term dependencies between adjacent time steps. A ReLU activation then introduces the nonlinearity the model needs for more nuanced learning.

Following this feature extraction, a max pooling layer (MaxPooling1D) reduces the sequence length by pooling with a window size of 2. Pooling keeps the most prominent feature in each window and widens the receptive field of the layers that follow. The reduction also helps limit overfitting while retaining the most significant information.
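To make the convolution and pooling steps concrete, here is a minimal pure-Python sketch for a single filter with a kernel size of 2, followed by ReLU and max pooling with a window of 2. The kernel weights and the input signal are hypothetical. The layer described above would apply 64 such filters with learned weights, typically through a deep learning library rather than hand-written loops.

```python
def conv1d(x, kernel, bias=0.0):
    """Valid 1-D convolution (cross-correlation, as deep learning layers compute it)."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def relu(x):
    """Set negative activations to zero."""
    return [max(0.0, v) for v in x]


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


signal = [1.0, 3.0, 2.0, 5.0, 4.0]
kernel = [-1.0, 1.0]  # hypothetical weights: responds to step-to-step increases

features = relu(conv1d(signal, kernel))
pooled = max_pool1d(features, size=2)
print(features, pooled)
```

With a kernel size of 2, each output value depends on only two adjacent time steps, which is why this stage captures short-term structure and leaves longer dependencies to the recurrent layer.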

Bidirectional Gated Recurrent Unit (BiGRU): Capturing Temporal Dependencies

Once the features are pooled and reshaped, they are fed into a Bidirectional Gated Recurrent Unit (BiGRU) layer. A BiGRU runs two GRUs over the sequence, one forwards and one backwards, and combines their outputs. Each time step is therefore represented with context from both earlier and later points in the slice, which gives the model a more comprehensive view of the data points that might influence future outcomes.
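The bidirectional idea can be sketched with a scalar GRU, where the input and hidden state are single numbers. This is a simplification for illustration: real layers use weight matrices, bias terms, and many hidden units, and libraries differ on which side of the update gate keeps the old state. All weights below are hypothetical.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gru_pass(xs, p):
    """Run a scalar GRU over xs and return the hidden state at every step."""
    h, states = 0.0, []
    for x in xs:
        z = sigmoid(p["wz"] * x + p["uz"] * h)            # update gate
        r = sigmoid(p["wr"] * x + p["ur"] * h)            # reset gate
        cand = math.tanh(p["wh"] * x + p["uh"] * r * h)   # candidate state
        h = (1.0 - z) * h + z * cand                      # blend old and new
        states.append(h)
    return states


def bigru(xs, fwd, bwd):
    """Pair the forward state with the backward state at each time step."""
    forward = gru_pass(xs, fwd)
    backward = gru_pass(xs[::-1], bwd)[::-1]  # re-align to original time order
    return list(zip(forward, backward))


# Hypothetical weights and inputs.
fwd = {"wz": 0.5, "uz": 0.1, "wr": 0.4, "ur": 0.2, "wh": 0.9, "uh": 0.3}
bwd = {"wz": 0.3, "uz": 0.2, "wr": 0.6, "ur": 0.1, "wh": 0.7, "uh": 0.4}
xs = [0.2, 0.5, 0.9, 0.4]

for step, (f, b) in enumerate(bigru(xs, fwd, bwd)):
    print(step, round(f, 4), round(b, 4))
```

At the first time step, the forward state has seen only the first input, while the backward state has already seen the whole sequence. That asymmetry is what the bidirectional layer exploits.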

Hyperparameter Optimization: Harnessing the ITOC Algorithm

To enhance model performance, the Improved Tornado Optimization Algorithm (ITOC) is used to tune the hyperparameters that are critical to model behavior. The tuned parameters are the learning rate (0.001 to 0.1), the number of neurons in the BiGRU layer (32 to 256), and the convolution kernel size (2 to 8).

The optimization starts by initializing a population of candidate solutions, each representing one combination of hyperparameters. ITOC then iterates over several generations, using its updating strategy to move the population towards the best-performing hyperparameter set. In each iteration, a model is instantiated from the current parameters, trained, and evaluated on validation data, usually with a metric such as mean squared error.
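The overall loop (initialize a population, evaluate, update, repeat) can be sketched as follows. This is not the ITOC update rule, which the article does not spell out. It is a generic population-based search in which each candidate moves part of the way towards the current best, with added noise. The objective `surrogate_validation_mse` is a made-up stand-in. A real run would build, train, and validate the model at that point. Only the search ranges come from the text above.

```python
import random

# Search ranges taken from the text above.
BOUNDS = {"learning_rate": (0.001, 0.1), "gru_units": (32, 256), "kernel_size": (2, 8)}
INTEGER_PARAMS = {"gru_units", "kernel_size"}


def clip(name, value):
    """Keep a value inside its range and round integer-valued parameters."""
    low, high = BOUNDS[name]
    value = min(max(value, low), high)
    return int(round(value)) if name in INTEGER_PARAMS else value


def surrogate_validation_mse(params):
    """Made-up objective; a real run would train the model and return validation MSE."""
    return ((params["learning_rate"] - 0.01) ** 2 * 1e4
            + ((params["gru_units"] - 128) / 128) ** 2
            + ((params["kernel_size"] - 3) / 3) ** 2)


def search(pop_size=10, generations=20, seed=0):
    rng = random.Random(seed)
    population = [{n: clip(n, rng.uniform(*BOUNDS[n])) for n in BOUNDS}
                  for _ in range(pop_size)]
    best = min(population, key=surrogate_validation_mse)
    for _ in range(generations):
        moved_population = []
        for cand in population:
            moved = {}
            for name, (low, high) in BOUNDS.items():
                step = rng.random() * (best[name] - cand[name])   # pull towards best
                noise = rng.gauss(0.0, 0.05 * (high - low))       # keep exploring
                moved[name] = clip(name, cand[name] + step + noise)
            moved_population.append(moved)
        population = moved_population
        # Elitism: the best candidate found so far is never lost.
        best = min(population + [best], key=surrogate_validation_mse)
    return best


best = search()
print(best, surrogate_validation_mse(best))
```

Because every evaluation in a real run means training a model, the population size and the number of generations set the total compute budget directly.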

Introducing Attention: Convolutional Block Attention Module (CBAM)

Once the BiGRU layer has captured the temporal patterns, its output proceeds to the Convolutional Block Attention Module (CBAM), which applies channel attention and then spatial attention. In the channel attention stage, average pooling and max pooling each produce a descriptor of every channel, and a shared multilayer perceptron (MLP) turns these descriptors into channel attention weights. The weights emphasize vital features while suppressing irrelevant ones.

Spatial attention follows. The channel-attended features are pooled across channels, the pooled maps are merged, and a convolutional layer converts them into a weight for each position. This focuses the model’s attention on the most pertinent regions of the feature map.
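The two attention stages can be sketched in pure Python for a small feature map laid out as channels by time steps. This is an illustrative adaptation, assuming that the "spatial" axis of a time series is its time axis. The weights `w1`, `w2`, and `kernel` are hypothetical and would normally be learned.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def shared_mlp(desc, w1, w2):
    """Two-layer MLP with a ReLU bottleneck, applied to one channel descriptor."""
    hidden = [max(0.0, sum(w * d for w, d in zip(row, desc))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


def channel_attention(feats, w1, w2):
    """Weight each channel using its average-pooled and max-pooled descriptors."""
    avg_desc = [sum(ch) / len(ch) for ch in feats]
    max_desc = [max(ch) for ch in feats]
    a, m = shared_mlp(avg_desc, w1, w2), shared_mlp(max_desc, w1, w2)
    weights = [sigmoid(x + y) for x, y in zip(a, m)]
    return [[w * v for v in ch] for w, ch in zip(weights, feats)]


def spatial_attention(feats, kernel):
    """Weight each time step using maps pooled across channels."""
    steps = len(feats[0])
    avg_map = [sum(ch[t] for ch in feats) / len(feats) for t in range(steps)]
    max_map = [max(ch[t] for ch in feats) for t in range(steps)]
    pad = len(kernel) // 2
    weights = []
    for t in range(steps):
        total = 0.0
        for j, (k_avg, k_max) in enumerate(kernel):
            s = t + j - pad
            if 0 <= s < steps:  # zero padding keeps the output length unchanged
                total += k_avg * avg_map[s] + k_max * max_map[s]
        weights.append(sigmoid(total))
    return [[w * v for w, v in zip(weights, ch)] for ch in feats]


# Hypothetical feature map (2 channels, 4 time steps) and weights.
feats = [[0.2, 0.8, 0.5, 0.1], [1.0, 0.3, 0.6, 0.9]]
w1 = [[0.5, -0.2]]                            # bottleneck: 2 channels -> 1 hidden unit
w2 = [[1.0], [-0.5]]                          # 1 hidden unit -> 2 channel scores
kernel = [(0.2, 0.1), (0.5, 0.3), (0.2, 0.1)]  # (avg weight, max weight) per tap

refined = spatial_attention(channel_attention(feats, w1, w2), kernel)
print(refined)
```

Both stages only rescale the features by weights between 0 and 1, so the output has the same shape as the input and can be passed on unchanged to the next layer.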

Model Output: Producing Predictions

Once the attention mechanisms have refined the feature maps, the data is flattened and passed to a fully connected Dense layer. This layer generates the final output: predictions relevant to coal spontaneous combustion, such as temperature changes. The CNN, BiGRU, and CBAM layers work together, using the hyperparameters optimized by the ITOC algorithm.
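As a small illustration of this last step, the sketch below flattens a two-dimensional feature map and applies a single linear output unit. The feature values, weights, and bias are hypothetical, and a trained model would learn the weights and bias.

```python
def flatten(feature_map):
    """Turn a 2-D feature map into one flat vector, row by row."""
    return [v for row in feature_map for v in row]


def dense(inputs, weights, bias):
    """Single linear output unit: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


feature_map = [[0.5, 1.0], [0.0, 2.0]]   # hypothetical attention-refined features
weights = [1.0, 2.0, 3.0, 4.0]           # hypothetical learned weights
prediction = dense(flatten(feature_map), weights, bias=10.0)
print(prediction)
```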

Analyzing Gas Evolution in Coal Pyrolysis

With the model framework in place, one can turn to the coal pyrolysis mechanisms that play a crucial role in predicting spontaneous combustion risk. Studies, including work by Tromp, have systematically described the pyrolysis process, which can be divided into primary cracking, secondary reactions, and condensation.

These mechanisms explain which gas products evolve, because free-radical pathways form during the initial stages of pyrolysis. The breaking of weak chemical bonds and the release of lighter hydrocarbons, such as methane and ethylene, show how temperature influences product evolution.

Experimental Data: Simulation of Spontaneous Combustion

Data from coal auto-ignition experiments show how an experimental setup can simulate real-world combustion conditions. Parameters such as temperature distribution and gas evolution characteristics are carefully monitored. Correlation analysis of these data gives a better understanding of the spontaneous combustion process: using the Pearson correlation coefficient, key gas indicators (such as CO, C2H4, and O2) are identified as correlating strongly with temperature.
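The Pearson correlation coefficient is the covariance of two variables divided by the product of their standard deviations. A minimal sketch follows. The temperature and gas readings are made up for illustration and are not experimental data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


# Made-up readings, for illustration only.
temperature = [30.0, 60.0, 90.0, 120.0, 150.0]   # degrees Celsius
co = [2.0, 9.0, 30.0, 80.0, 190.0]               # CO concentration
o2 = [20.8, 20.1, 18.9, 17.0, 14.2]              # O2 concentration, percent

print("CO vs temperature:", round(pearson(temperature, co), 3))
print("O2 vs temperature:", round(pearson(temperature, o2), 3))
```

A coefficient near +1 or -1 marks a gas as a strong linear indicator of temperature. Because Pearson measures only linear association, a gas that rises exponentially with temperature can score lower than its predictive value suggests.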

Insights into Predictive Modeling

Predicting spontaneous combustion requires an understanding of how gas indicators evolve as temperature changes. For instance, as temperature increases, CO and C2H4 concentrations rise markedly while O2 concentrations drop, indicating the growing intensity of the combustion reactions.

Statistical testing assists in validating these relationships and supports the model’s data-driven structure. The predictive model, utilizing the ITOC-CNN-BiGRU-CBAM architecture, is designed to understand and identify these intricate patterns.

Execution and Performance Evaluation

During model execution, the dataset is partitioned into training, validation, and test sets, using balanced and stratified methods so that each set remains representative. Predictive power is assessed with performance metrics such as mean squared error (MSE) and R-squared.

Through validation and hyperparameter optimization, the model iteratively refines its predictions for coal spontaneous combustion, improving prediction accuracy over its initial, untuned state.
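Both metrics are simple to compute by hand, as the sketch below shows. The true and predicted temperatures are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of the squared prediction errors."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 minus residual over total sum of squares."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up values, for illustration only.
y_true = [50.0, 60.0, 70.0, 80.0]
y_pred = [52.0, 59.0, 71.0, 78.0]

print("MSE:", mse(y_true, y_pred))
print("R-squared:", r_squared(y_true, y_pred))
```

MSE is expressed in squared units of the target, so it is useful for comparing models on the same data, while R-squared is unitless and reports the share of variance the model explains.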

In summary, constructing a model framework for time series prediction of coal spontaneous combustion is a complex yet essential task. Each component, from data handling and feature extraction to attention mechanisms and hyperparameter tuning, plays a pivotal role in producing accurate and reliable predictions.