Dataset Description for Cloud Workload Forecasting Framework

To validate the robustness of our framework, we conducted experiments on multiple widely recognized datasets drawn from both real-world and synthetic cloud environments. These datasets help us characterize the dynamics of cloud workloads and tune our resource management techniques. Below, we describe the datasets used in our study, the preprocessing steps applied to ensure high-quality data, the evaluation metrics used for performance assessment, and the baseline models used for comparison.

Datasets Overview

Google Cluster Trace

The Google Cluster Trace dataset serves as a cornerstone of our experiments. It contains resource usage information collected from more than 12,000 machines over one month. The data include granular CPU, memory, disk I/O, and network utilization metrics, all recorded at 5-minute intervals. This level of detail supports a fine-grained analysis of workload patterns and resource requirements in a large-scale production environment.

Microsoft Azure Trace

Next, we use the publicly available Microsoft Azure Trace, which captures a variety of workloads from Azure virtual machines. Like the Google dataset, this trace includes key metrics such as CPU, memory, and network usage, also sampled every 5 minutes. The diversity of its workloads shows how resource utilization varies across different types of applications.

Bitbrains Synthetic Dataset

Finally, we use the Bitbrains Synthetic Dataset, which is designed to simulate the bursty and seasonal workload patterns commonly observed in enterprise cloud settings. This dataset enables controlled evaluation of model adaptability under dynamic conditions, which is important for testing the efficacy of our forecasting and resource allocation strategies.

Data Preprocessing

Before model training and inference, we applied a rigorous data preprocessing pipeline to ensure high-quality, consistent input. This step is crucial as the quality of the data can significantly impact the performance of machine learning models.

Normalization

All workload metrics were scaled to the range [0, 1] using min-max normalization, x' = (x - x_min) / (x_max - x_min), where x_min and x_max are the minimum and maximum of each metric. This promotes stable neural network training and prevents any single feature from dominating because of differences in scale.
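A minimal NumPy sketch of this scaling is shown below. It assumes the minimum and maximum are computed on the training split only (so test statistics do not leak into training) and that out-of-range values in later splits are clipped to [0, 1]; neither detail is stated in the text, and the helper names are hypothetical.

```python
import numpy as np


def fit_min_max(train: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Compute per-feature minimum and maximum from the training split only."""
    return train.min(axis=0), train.max(axis=0)


def min_max_scale(x: np.ndarray, x_min: np.ndarray, x_max: np.ndarray) -> np.ndarray:
    """Map each feature to [0, 1]; constant features are mapped to 0."""
    # Avoid division by zero for features that never change.
    span = np.where(x_max > x_min, x_max - x_min, 1.0)
    # Assumption: clip so validation/test values outside the training range stay in [0, 1].
    return np.clip((x - x_min) / span, 0.0, 1.0)


# Usage: rows are time steps, columns are metrics (e.g. CPU %, memory GB).
train = np.array([[10.0, 2.0], [50.0, 4.0], [90.0, 6.0]])
x_min, x_max = fit_min_max(train)
print(min_max_scale(train, x_min, x_max))
```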

Missing Value Imputation

To handle missing or corrupted entries, we used linear interpolation. This method preserves temporal continuity, which is especially important for time-series data.
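The sketch below illustrates linear interpolation over the time index with NumPy's `np.interp`. It assumes missing and corrupted entries have already been marked as `NaN`, and it fills gaps at the start or end of a series with the nearest valid value, which is `np.interp`'s default edge behavior; how the original pipeline handles edge gaps is not specified, and `interpolate_missing` is a hypothetical name.

```python
import numpy as np


def interpolate_missing(series: np.ndarray) -> np.ndarray:
    """Fill NaN entries by linear interpolation over the time index.

    Leading/trailing gaps take the nearest valid value (np.interp's default).
    """
    series = np.asarray(series, dtype=float)
    t = np.arange(series.size)
    valid = ~np.isnan(series)
    if not valid.any():
        raise ValueError("series contains no valid observations")
    filled = series.copy()
    # Interpolate only at missing positions, using valid points as anchors.
    filled[~valid] = np.interp(t[~valid], t[valid], series[valid])
    return filled


cpu = np.array([0.2, np.nan, np.nan, 0.5, 0.6, np.nan])
print(interpolate_missing(cpu))
```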

Windowing

For our time-series forecasting models (LSTM, BiLSTM, and TFT), we constructed input sequences using a sliding window with a fixed historical length L and a prediction horizon H: each sample pairs L consecutive past time steps with the H steps that follow them.
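As an illustration, the hypothetical helper `make_windows` below builds input/target pairs with stride 1 from a `(T, F)` array. The stride, and the choice to forecast all features rather than a subset, are assumptions for the sketch.

```python
import numpy as np


def make_windows(data: np.ndarray, L: int, H: int) -> tuple[np.ndarray, np.ndarray]:
    """Build (input, target) pairs with a stride-1 sliding window.

    data: array of shape (T, F) with T time steps and F features.
    Returns X of shape (N, L, F) and Y of shape (N, H, F), where N = T - L - H + 1.
    """
    T = data.shape[0]
    n = T - L - H + 1
    if n <= 0:
        raise ValueError("series too short for the chosen L and H")
    X = np.stack([data[i : i + L] for i in range(n)])          # past L steps
    Y = np.stack([data[i + L : i + L + H] for i in range(n)])  # next H steps
    return X, Y


series = np.arange(10, dtype=float).reshape(10, 1)  # 10 steps, 1 feature
X, Y = make_windows(series, L=4, H=2)
print(X.shape, Y.shape)
```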

Feature Engineering

At each time step, we extracted 23 system-level features covering the key aspects of resource usage. This feature set gives the models a detailed view of each workload and its characteristics.

Train/Validation/Test Split

To ensure unbiased model evaluation and support hyperparameter tuning, we partitioned each dataset into training (70%), validation (15%), and test (15%) sets.
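Because the data are time series, one natural way to realize this 70/15/15 split is chronologically, so that the model is never validated or tested on time steps that precede its training data. The text does not say whether the split is chronological, so the hypothetical helper below is a sketch under that assumption.

```python
def chronological_split(data, train=0.70, val=0.15):
    """Split a time-ordered sequence into train/validation/test without shuffling."""
    n = len(data)
    n_train = round(n * train)
    n_val = round(n * val)
    # Earliest segment trains, the next validates, the most recent tests.
    return data[:n_train], data[n_train : n_train + n_val], data[n_train + n_val :]


tr, va, te = chronological_split(list(range(100)))
print(len(tr), len(va), len(te))
```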

Workload Aggregation

Depending on the evaluation scenario, data may be aggregated at different granularities (e.g., every 5 minutes or hourly). Varying the granularity lets us simulate different operational conditions.
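The sketch below shows one way to change granularity, assuming 5-minute input: grouping 12 consecutive samples yields hourly values. Whether the original pipeline aggregates by mean, maximum, or another statistic is not stated, so both mean and max are offered; the helper name `aggregate` is hypothetical.

```python
import numpy as np


def aggregate(series: np.ndarray, factor: int, how: str = "mean") -> np.ndarray:
    """Downsample a (T, F) series by grouping `factor` consecutive steps.

    factor=12 turns 5-minute samples into hourly values.
    An incomplete trailing group is dropped.
    """
    T = (series.shape[0] // factor) * factor
    blocks = series[:T].reshape(-1, factor, series.shape[1])
    if how == "mean":
        return blocks.mean(axis=1)
    if how == "max":  # peak demand within each interval
        return blocks.max(axis=1)
    raise ValueError(f"unsupported aggregation: {how}")


five_min = np.arange(24, dtype=float).reshape(24, 1)  # two hours of 5-minute samples
print(aggregate(five_min, factor=12))
```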

List of Input Features

Table 7 summarizes the 23 input features per time step. These features cover quantitative metrics from several operational aspects and together give a holistic view of workload behavior.

Evaluation Metrics

To assess the effectiveness of our workload forecasting and resource allocation mechanisms, we adopted a set of performance indicators that span multiple dimensions:

Mean Absolute Error (MAE)

This metric represents the average magnitude of prediction errors, irrespective of direction. A lower MAE indicates better forecasting performance.

Root Mean Squared Error (RMSE)

Because RMSE squares each error before averaging, it penalizes large errors more heavily than MAE, making it a sensitive indicator of occasional large forecasting misses.

Mean Absolute Percentage Error (MAPE)

Expressing errors as a percentage of actual values, MAPE is particularly useful for relative performance comparisons across different scales.
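For reference, the three accuracy metrics are MAE = (1/n) Σ|y_i - ŷ_i|, RMSE = sqrt((1/n) Σ(y_i - ŷ_i)²), and MAPE = (100/n) Σ|(y_i - ŷ_i) / y_i|. A minimal NumPy version follows; the small `eps` guard in MAPE is an assumption added because the metric is undefined when an actual value is zero.

```python
import numpy as np


def mae(y, y_hat):
    """Mean absolute error: average of |y - y_hat|."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.mean(np.abs(y - y_hat)))


def rmse(y, y_hat):
    """Root mean squared error: squaring weights large errors more heavily."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


def mape(y, y_hat, eps=1e-8):
    """Mean absolute percentage error, in percent (eps guards against y == 0)."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(100.0 * np.mean(np.abs((y - y_hat) / np.maximum(np.abs(y), eps))))


y = np.array([100.0, 200.0, 400.0])
y_hat = np.array([110.0, 180.0, 400.0])
print(mae(y, y_hat), rmse(y, y_hat), mape(y, y_hat))
```

With errors of 10, 20, and 0, MAE is 10 while RMSE is about 12.9, reflecting the extra weight RMSE places on the larger error.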

Scaling Efficiency (SE)

Scaling efficiency is defined as the ratio of allocated resources to actual usage. An SE close to 1 indicates near-optimal provisioning with minimal under- or over-allocation.

SLA Violation Rate

This metric captures the proportion of time steps where resource provisioning failed to meet application demands. Lower values signify a more reliable system.
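The two provisioning metrics can be sketched as follows. The text does not say whether SE is averaged per time step or computed over totals; this sketch uses the ratio of totals and treats any step where allocation is below demand as an SLA violation. Both choices are assumptions, and the function names are hypothetical.

```python
import numpy as np


def scaling_efficiency(allocated, used):
    """Ratio of total allocated to total used resources (1.0 = exact fit).

    Values above 1 indicate over-provisioning, below 1 under-provisioning.
    """
    allocated, used = np.asarray(allocated, float), np.asarray(used, float)
    return float(allocated.sum() / used.sum())


def sla_violation_rate(allocated, demand):
    """Fraction of time steps where allocation fell short of demand."""
    allocated, demand = np.asarray(allocated, float), np.asarray(demand, float)
    return float(np.mean(allocated < demand))


alloc = [4, 4, 6, 8]  # e.g. vCPUs provisioned per step
usage = [3, 5, 6, 6]  # vCPUs actually demanded per step
print(scaling_efficiency(alloc, usage), sla_violation_rate(alloc, usage))
```

Here 22 allocated vs. 20 used units give SE = 1.1, and one shortfall in four steps gives a violation rate of 0.25. A ratio of totals can mask over- and under-allocation that cancel out, which is one reason to report it alongside the SLA violation rate.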

Energy Consumption

Energy consumption is calculated from CPU-hours using cloud-specific energy models. We also incorporate carbon-aware metrics derived from energy-efficient scheduling practices.
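A minimal sketch of a CPU-hour-based energy and carbon estimate follows. It assumes a linear per-core power model, a power usage effectiveness (PUE) multiplier, and a grid carbon intensity in gCO2e/kWh; the parameter values in the usage lines are placeholders, not figures from the paper or from any provider.

```python
def energy_kwh(cpu_hours: float, watts_per_core: float, pue: float = 1.0) -> float:
    """Estimate energy (kWh) from CPU-hours with a linear per-core power model.

    watts_per_core and pue (power usage effectiveness) are illustrative assumptions.
    """
    return cpu_hours * watts_per_core * pue / 1000.0


def carbon_kg(energy: float, grid_g_per_kwh: float) -> float:
    """Convert energy (kWh) to emissions (kg CO2e) via grid carbon intensity."""
    return energy * grid_g_per_kwh / 1000.0


# 1000 CPU-hours x 10 W x 1.2 PUE / 1000 = 12 kWh (placeholder inputs).
e = energy_kwh(cpu_hours=1000, watts_per_core=10, pue=1.2)
print(e, carbon_kg(e, grid_g_per_kwh=400))
```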

Cost Savings

This metric quantifies the monetary benefits of dynamic, intelligent scaling strategies, based on Amazon Web Services Elastic Compute Cloud (AWS EC2) pricing.
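The savings calculation can be sketched as the relative reduction in instance-hour cost versus a baseline policy. The hourly price below is a placeholder rather than a quoted AWS EC2 rate, and a single instance type is assumed; with mixed instance types, each type would need its own price.

```python
def cost_usd(instance_hours: float, hourly_price: float) -> float:
    """On-demand cost of running instances for the given number of hours."""
    return instance_hours * hourly_price


def cost_savings_pct(baseline_hours: float, proposed_hours: float, hourly_price: float) -> float:
    """Percentage cost reduction of a scaling policy relative to a baseline."""
    baseline = cost_usd(baseline_hours, hourly_price)
    proposed = cost_usd(proposed_hours, hourly_price)
    return 100.0 * (baseline - proposed) / baseline


# 0.10 USD/hour is a placeholder price, not an actual EC2 rate.
print(cost_savings_pct(baseline_hours=720, proposed_hours=540, hourly_price=0.10))
```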

Together, these metrics offer a holistic view of model performance, considering predictive accuracy, operational efficiency, reliability, energy sustainability, and economic cost.

Baseline Models

To evaluate the performance of our proposed BiLSTM-MARL-Ape-X framework, we compare it against a well-established set of baseline models across three core areas: workload prediction, resource allocation, and training optimization.

Workload Prediction Models

We consider various classical and deep learning-based models for time-series forecasting, including:

- ARIMA: A classical time-series model for linear data.

- LSTM: Widely adopted for capturing long-range dependencies in sequential data.

- TFT (Temporal Fusion Transformer): A transformer-based model utilizing attention mechanisms for robust forecasting.

Resource Allocation Models

We evaluate reinforcement learning (RL) and heuristic-based methods for dynamic resource scaling:

- TAS (Threshold Auto-Scaling): A rule-based reactive mechanism scaling resources based on predefined thresholds.

- DQN (Deep Q-Network): A deep reinforcement learning algorithm that approximates Q-values with a neural network, applied here to resource management.

- TFT+RL: A hybrid approach that combines forecasting with RL for decision-making.

- MARL: A scalable method utilizing multiple decentralized agents for both cooperative and competitive environments.
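For concreteness, a TAS-style baseline can be as simple as the rule below. The thresholds, the one-VM step size, and the bounds are illustrative assumptions; the text does not give the baseline's actual parameters.

```python
def threshold_autoscale(current: int, utilization: float,
                        upper: float = 0.8, lower: float = 0.3,
                        min_vms: int = 1, max_vms: int = 100) -> int:
    """Reactive threshold rule: add a VM above `upper`, remove one below `lower`.

    All thresholds and bounds are illustrative defaults, not reported values.
    """
    if utilization > upper:
        return min(current + 1, max_vms)  # scale out, capped at max_vms
    if utilization < lower:
        return max(current - 1, min_vms)  # scale in, floored at min_vms
    return current                        # inside the band: no change


vms = 4
for util in [0.85, 0.9, 0.5, 0.2]:
    vms = threshold_autoscale(vms, util)
print(vms)  # 4 -> 5 -> 6 -> 6 -> 5
```

Because the rule reacts only to observed utilization, it scales after demand has already changed, which is the gap that forecast-driven methods aim to close.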

Training Optimization Models

For scalable and efficient policy learning, we also include baseline training-optimization methods. Together, these baselines provide a comprehensive benchmarking foundation for evaluating the contribution of each module within our proposed framework.

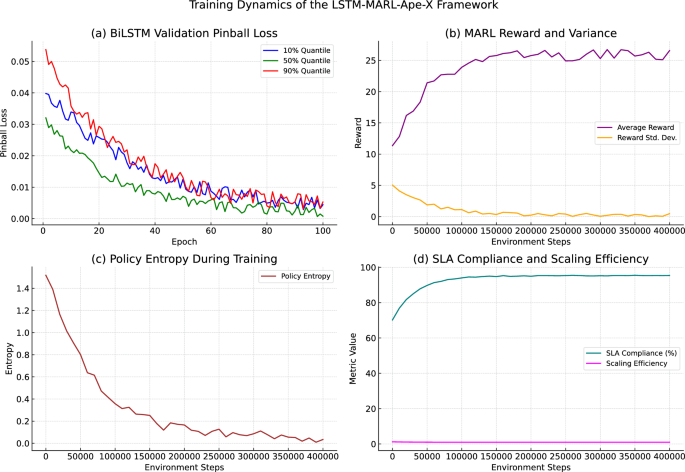

Proposed Framework

This section presents our proposed LSTM-MARL-Ape-X framework, designed for intelligent, carbon-aware auto-scaling in cloud environments. The framework integrates three core components:

- BiLSTM-based Workload Forecaster

- Multi-Agent Reinforcement Learning (MARL) Decision Engine

- Distributed Experience Replay Mechanism Inspired by Ape-X

Workload Forecasting Using BiLSTM

To capture the temporal dependencies in cloud workloads, we propose a BiLSTM network augmented with an attention mechanism and a quantile regression output. The network processes each input sequence in both forward and backward directions, so every hidden state summarizes context from both earlier and later steps in the input window.

Architectural Advantages

Compared to transformer-based models, our BiLSTM design offers several benefits:

- Higher Computational Efficiency: Suitable for edge deployment.

- Lower Inference Latency: Critical for real-time scaling needs.

- Fewer Trainable Parameters: This reduces the risk of overfitting.

Uncertainty-Aware Training

Our model takes one hour of historical metrics as input and predicts three quantiles, trained with the pinball (quantile) loss. The predicted quantiles express forecast uncertainty, which allows auto-scaling policies to provision robustly when demand is uncertain.
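The pinball loss for quantile τ is max(τ·e, (τ - 1)·e) with e = y - ŷ_τ, so under-prediction is penalized with weight τ and over-prediction with weight 1 - τ. The sketch assumes the quantiles 0.1, 0.5, and 0.9, since the text says only "three quantiles"; at 5-minute resolution, one hour of history corresponds to an input window of 12 steps.

```python
import numpy as np

# Assumed quantile levels; the text specifies only that three are predicted.
QUANTILES = np.array([0.1, 0.5, 0.9])


def pinball_loss(y: np.ndarray, q_pred: np.ndarray,
                 quantiles: np.ndarray = QUANTILES) -> float:
    """Average pinball (quantile) loss.

    y: shape (N,) observed values; q_pred: shape (N, Q) predicted quantiles.
    For quantile tau and error e = y - q, the loss is max(tau * e, (tau - 1) * e).
    """
    e = y[:, None] - q_pred  # broadcast each target against its Q predictions
    return float(np.mean(np.maximum(quantiles * e, (quantiles - 1.0) * e)))


y = np.array([0.6])
q_pred = np.array([[0.4, 0.5, 0.7]])  # lower, median, upper forecasts
print(pinball_loss(y, q_pred))
```

At τ = 0.5 the loss reduces to half the absolute error, so the median quantile is trained like an MAE objective, while the outer quantiles bracket the likely range of demand.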

Reinforcement Learning-Based Auto-Scaling

Our MARL system deploys distributed agents, each responsible for a subset of virtual machines. Agents observe a hybrid state space that combines forecasted metrics with real-time operational data.
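To make the hybrid state concrete, the sketch below assigns VMs to agents in contiguous groups and builds each agent's observation by concatenating forecast quantiles with real-time metrics for its VMs. The partitioning scheme, the array shapes, and the helper names are hypothetical.

```python
import numpy as np


def assign_vms(num_vms: int, num_agents: int) -> list[np.ndarray]:
    """Partition VM indices into contiguous, near-equal groups, one per agent."""
    return np.array_split(np.arange(num_vms), num_agents)


def build_observation(vm_ids: np.ndarray, forecast: np.ndarray, current: np.ndarray) -> np.ndarray:
    """Concatenate forecasted and real-time metrics for one agent's VMs.

    forecast: (num_vms, Q) predicted quantiles for the next step.
    current:  (num_vms, M) real-time metrics (e.g. CPU and memory utilization).
    """
    return np.concatenate([forecast[vm_ids].ravel(), current[vm_ids].ravel()])


rng = np.random.default_rng(0)
groups = assign_vms(num_vms=10, num_agents=3)
obs = build_observation(groups[0], rng.random((10, 3)), rng.random((10, 2)))
print([len(g) for g in groups], obs.shape)
```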

Multi-Objective Reward Design

We designed a multi-objective reward function that combines several components so that agents balance performance, sustainability, efficiency, and stability.
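One way to combine these objectives is a weighted sum of penalties, sketched below. The specific terms (an SLA-violation indicator, per-step emissions, relative over-provisioning, and a penalty on changing the scaling action) and all weights are assumptions chosen to match the four named goals, not the paper's reward definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    # Illustrative weights; not values reported for the framework.
    sla: float = 1.0
    carbon: float = 0.3
    efficiency: float = 0.5
    stability: float = 0.1


def reward(sla_violated: bool, carbon_kg: float, allocated: float, used: float,
           prev_action: int, action: int, w: RewardWeights = RewardWeights()) -> float:
    """Weighted multi-objective reward (higher is better).

    performance:    penalty when demand exceeds allocation
    sustainability: penalty proportional to emissions in this step
    efficiency:     penalty for relative over-provisioning
    stability:      penalty for changing the scaling action (limits oscillation)
    """
    over_provision = max(allocated - used, 0.0) / max(allocated, 1e-8)
    return -(w.sla * float(sla_violated)
             + w.carbon * carbon_kg
             + w.efficiency * over_provision
             + w.stability * abs(action - prev_action))


print(reward(sla_violated=True, carbon_kg=1.0, allocated=10.0, used=5.0,
             prev_action=0, action=1))
```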

Ape-X Distributed Training Architecture

We implement a modified version of the Ape-X framework, in which multiple actors collect experience in parallel and a shared replay buffer prioritizes transitions in an uncertainty-aware manner, improving training efficiency.
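The core Ape-X data structure is a prioritized replay buffer shared by many actors and one learner. The single-process sketch below implements proportional prioritized replay with importance-sampling weights; the `kappa` term, which adds forecast uncertainty (for example, the spread between upper and lower quantiles) to the TD-error priority, is a hypothetical stand-in for the paper's uncertainty-aware prioritization, whose formula is not given here.

```python
import numpy as np


class PrioritizedReplay:
    """Proportional prioritized replay with a fixed-capacity ring buffer."""

    def __init__(self, capacity, alpha=0.6, beta=0.4, kappa=0.5, seed=0):
        self.capacity = capacity
        self.alpha = alpha  # how strongly priorities skew sampling
        self.beta = beta    # strength of importance-sampling correction
        self.kappa = kappa  # hypothetical weight on forecast uncertainty
        self.data = [None] * capacity
        self.priorities = np.zeros(capacity)
        self.pos = 0
        self.size = 0
        self.rng = np.random.default_rng(seed)

    def priority(self, td_error, uncertainty):
        # Hypothetical: |TD error| plus a bonus for wide forecast intervals.
        # The small constant keeps every priority strictly positive.
        return abs(td_error) + self.kappa * uncertainty + 1e-6

    def add(self, transition, td_error, uncertainty):
        # As in Ape-X, the actor supplies an initial priority with the data.
        self.data[self.pos] = transition
        self.priorities[self.pos] = self.priority(td_error, uncertainty)
        self.pos = (self.pos + 1) % self.capacity  # overwrite oldest when full
        self.size = min(self.size + 1, self.capacity)

    def sample(self, batch_size):
        scaled = self.priorities[: self.size] ** self.alpha
        probs = scaled / scaled.sum()
        idx = self.rng.choice(self.size, size=batch_size, p=probs)
        # Importance-sampling weights offset the bias of non-uniform sampling.
        weights = (self.size * probs[idx]) ** (-self.beta)
        return idx, [self.data[i] for i in idx], weights / weights.max()

    def update(self, idx, td_errors, uncertainties):
        # The learner refreshes priorities after recomputing TD errors.
        for i, td, u in zip(idx, td_errors, uncertainties):
            self.priorities[i] = self.priority(td, u)
```

In a full Ape-X setup, many actor processes would call `add` concurrently while a learner repeatedly calls `sample` and `update`; that parallelism is omitted here.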

Integrated LSTM-MARL-Ape-X Algorithm

Our integrated framework unifies time-series forecasting, intelligent scaling, and distributed training into a continuous loop of forecasting, decision-making, and learning. Each component contributes to responsive, efficient resource management in cloud environments.
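The loop can be illustrated with deliberately simple stand-ins for each component, as below: a naive maximum-of-window forecast in place of the BiLSTM upper quantile, a cover-the-forecast rule in place of the MARL agents, and a plain list in place of the Ape-X replay buffer. Everything here is hypothetical and only shows how the three stages feed each other at each step.

```python
import math


def control_loop(demand, window=12, capacity_per_vm=1.0):
    """Toy forecast -> decide -> learn loop with hypothetical stand-ins."""
    replay = []  # stand-in for the Ape-X replay buffer
    decisions = []
    for t in range(window, len(demand)):
        history = demand[t - window : t]
        # 1. Forecast: pessimistic naive estimate (stand-in for an upper quantile).
        forecast = max(history)
        # 2. Decide: provision enough VMs to cover the forecast (stand-in for MARL).
        vms = max(1, math.ceil(forecast / capacity_per_vm))
        # 3. Learn: score the action against realized demand and store the
        #    transition for later training.
        reward = -1.0 if vms * capacity_per_vm < demand[t] else 0.0
        replay.append((tuple(history), vms, reward))
        decisions.append(vms)
    return decisions, replay


trace = [1.0] * 12 + [3.0, 1.0]  # a demand spike after one hour of flat load
decisions, replay = control_loop(trace)
print(decisions, [r for _, _, r in replay])
```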

Implementation Details

The framework is implemented in Python using PyTorch, TensorFlow, and Ray RLlib, which provides infrastructure for scalable, distributed reinforcement learning.

Training Strategy and Reproducibility

To maintain transparency and reproducibility, we provide detailed training configurations and adhere to stringent data-splitting strategies.

Evaluation Methodology

We adopt an extensive evaluation strategy comprising stress tests, deployment in simulated real-world environments, and an economic analysis of cost-effectiveness.

This comprehensive breakdown of our dataset, preprocessing, metrics, baselines, and proposed framework lays the foundation for understanding our approach to intelligent, carbon-aware auto-scaling in cloud computing. By focusing on accurate workload forecasting and dynamic resource allocation, we hope to contribute positively to the sustainability and efficiency of cloud infrastructure management.