Workflow Refinement and Gold-Standard Set: Enhancing Information Extraction in Clinical Reports

Introduction

Medical information extraction keeps changing as the technology advances and clinical contexts become better understood. This article describes how the workflow was refined while constructing a gold-standard set of clinical report annotations, with a focus on renal cell carcinoma (RCC). Using a diverse dataset and a “human-in-the-loop” approach, the researchers developed a pipeline designed for greater accuracy and reliability.

The Development Set

The work began with a development set of 152 pathology reports spanning a range of clinical contexts. The reports covered local/regional RCC and metastatic RCC, as well as non-RCC malignancies and benign kidney neoplasms. This breadth supported systematic refinement of the extraction pipeline through iterative cycles that combined human expertise with machine learning techniques.

Diverse Clinical Contexts

Of the 152 reports, 89 contained local/regional RCC and 41 described metastatic RCC. A further 9 reports presented non-RCC malignancies, such as urothelial carcinoma, and 13 detailed benign or uncertain neoplasms, such as renal oncocytoma. This diversity exposed the distinct extraction challenges posed by different tumor types and stages, which in turn allowed a more comprehensive error ontology.
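The composition above can be checked with a few lines of arithmetic. The sketch below uses only the counts stated in the text; the dictionary keys and the `share` helper are illustrative names, not part of the original pipeline.

```python
# Report counts by clinical context, as stated in the text.
development_set = {
    "local/regional RCC": 89,
    "metastatic RCC": 41,
    "non-RCC malignancy": 9,
    "benign or uncertain neoplasm": 13,
}

total = sum(development_set.values())


def share(count: int, total: int) -> float:
    """Return the percentage of the development set, rounded to one decimal."""
    return round(100 * count / total, 1)


shares = {context: share(n, total) for context, n in development_set.items()}

for context, pct in shares.items():
    print(f"{context}: {development_set[context]} reports ({pct}%)")
print(f"total: {total}")
```

The four categories sum to 152, and local/regional RCC accounts for 58.6% of the set, so the rarer contexts (non-RCC malignancies at 5.9%, benign or uncertain neoplasms at 8.6%) are represented by only a handful of reports each.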

Iterative Refinement Process

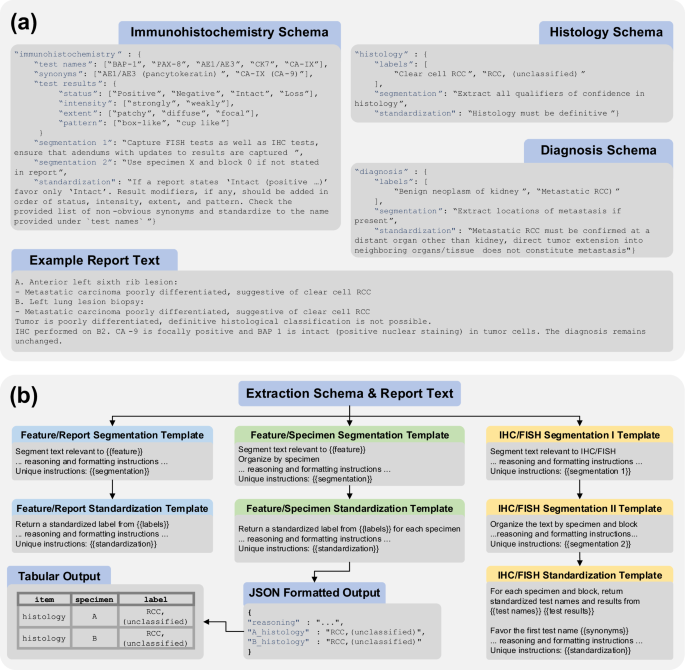

Throughout the refinement process, discrepancies in the extracted data were documented, with a structured flowchart recording the source and context of each error. This iterative approach let the researchers address broader questions about information extraction rather than isolated errors, improving the overall accuracy of the pipeline.

Error Context Documentation

Several tables of example error contexts gave insight into the specific challenges faced in each iteration. Each refinement cycle improved the extraction schema, which was versioned at every iteration (e.g., V1, V2, V3). The end result was a set of gold-standard annotations reflecting the desired pipeline output.

The Role of LLMs in Refinement

A large language model (LLM), specifically GPT-4o, underpinned the refinement phases and was used to align the annotation process with the gold-standard outputs. After six iterations, the error rate had fallen to 0.99%, with no major annotation errors identified. The result shows how much an LLM can improve extraction accuracy when guided by rigorous human oversight and systematic iteration.

Challenges Encountered

Despite this progress, the team faced several inherent challenges during annotation. They fell into three main areas: report complexities, specification issues, and normalization difficulties.

Report Complexities

Certain characteristics of pathology reports repeatedly produced discrepancies. For instance, complex outside consultations often led to minor errors in immunohistochemistry (IHC) and fluorescence in situ hybridization (FISH) results because of inconsistent naming conventions and ambiguous interpretations. Problems arose mainly when IHC/FISH tests were reported for some specimens but not others, which led to results being duplicated across specimens with similar histological classifications.

Specification Issues

Defining the scope of the desired information proved essential for accuracy. A key concern was identifying the relevant entity precisely when more than one label could apply. In addition, finding the right granularity for IHC results meant moving from exhaustive lists to structured vocabularies that capture status, intensity, and extent, giving a more standardized representation of the pathology.
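One way to picture such a structured vocabulary is as a small typed record. The sketch below is an assumption for illustration only: the source names the three dimensions (status, intensity, extent) but not their allowed values, so the enum members, the `IHCResult` class, and the example markers are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


# Hypothetical controlled vocabularies; the real schema's values are not given.
class Status(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"
    EQUIVOCAL = "equivocal"


class Intensity(Enum):
    WEAK = "weak"
    MODERATE = "moderate"
    STRONG = "strong"


class Extent(Enum):
    FOCAL = "focal"
    PATCHY = "patchy"
    DIFFUSE = "diffuse"


@dataclass(frozen=True)
class IHCResult:
    """One IHC finding expressed along the three dimensions."""
    marker: str
    status: Status
    intensity: Optional[Intensity] = None  # often unreported
    extent: Optional[Extent] = None        # often unreported

    def label(self) -> str:
        """Render the finding as a normalized, comparable string."""
        parts = [self.status.value]
        if self.intensity is not None:
            parts.append(self.intensity.value)
        if self.extent is not None:
            parts.append(self.extent.value)
        return f"{self.marker}: " + ", ".join(parts)


result = IHCResult("CA9", Status.POSITIVE, Intensity.STRONG, Extent.DIFFUSE)
print(result.label())  # CA9: positive, strong, diffuse
```

Constraining each dimension to a closed set means two annotations either agree on a field or they do not, which is easier to score than free-text descriptions of staining.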

Normalization Difficulties

Normalization remained difficult, especially for free-text entries and varied terminology. Specific terms, such as “diffusely,” were hard to reproduce verbatim as they appeared in the gold-standard annotations. Investigating these discrepancies pointed to the tokenizer’s byte pair encoding behavior as a contributing factor, a reminder that the model’s limitations need to be understood when interpreting its output.

Addressing Medical Nuances

Integrating medical history posed further challenges. Clinical domain expertise was needed to clarify the meaning of phrases like “consistent with” or “compatible with,” which often carry a more definitive meaning in pathology than in everyday usage. Similarly, distinguishing local from distant lymph node metastases required additional context in the prompts.

Leveraging LLM Interoperability

Interoperability across LLMs was assessed by measuring how closely different models matched the gold-standard annotations. Pipeline outputs from GPT-4o, Qwen2.5, and Llama 3.3 showed varying levels of accuracy. GPT-4o performed best, with 84.1% exact-match accuracy, and applying fuzzy matching criteria raised scores across all models. This suggests that the core prompt logic transfers between models even though absolute performance differs.
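The difference between exact and fuzzy matching can be sketched as follows. The source does not state which fuzzy criteria were used, so the case and whitespace normalization, the `difflib` similarity ratio, the 0.8 threshold, and the example pairs are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def exact_match(pred: str, gold: str) -> bool:
    """Strict comparison: the strings must be identical."""
    return pred == gold


def fuzzy_match(pred: str, gold: str, threshold: float = 0.8) -> bool:
    """Lenient comparison: ignore case and extra whitespace, then accept
    any pair whose similarity ratio reaches the (assumed) threshold."""
    a = " ".join(pred.lower().split())
    b = " ".join(gold.lower().split())
    return SequenceMatcher(None, a, b).ratio() >= threshold


def accuracy(pairs, matcher) -> float:
    """Fraction of (prediction, gold) pairs accepted by the matcher."""
    return sum(matcher(p, g) for p, g in pairs) / len(pairs)


# Toy (prediction, gold) pairs, not taken from the study.
pairs = [
    ("clear cell RCC", "clear cell RCC"),        # identical
    ("diffusely positive", "diffuse positive"),  # near-verbatim variant
    ("papillary RCC", "chromophobe RCC"),        # genuinely wrong
    ("Negative", "negative"),                    # case difference only
]

print(accuracy(pairs, exact_match))  # 0.25
print(accuracy(pairs, fuzzy_match))  # 0.75
```

In this toy set, fuzzy matching recovers the near-verbatim and case-only differences while still rejecting the wrong subtype, which is the kind of gap between the two scores the comparison describes.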

Validation Against Existing Data

To validate the finalized pipeline, it was tested on a larger dataset of kidney tumor reports, where it identified key tumor histologies accurately. The pipeline achieved a macro-averaged F1 score of 0.99, supporting its clinical utility for amending and enhancing existing structured data.
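Macro-averaged F1 computes an F1 score per class and then takes the unweighted mean, so rare histologies count as much as common ones. The sketch below is a minimal pure-Python version for single-label predictions; the function name and the toy labels are illustrative and are not the study's data.

```python
from collections import Counter


def macro_f1(gold, pred) -> float:
    """Unweighted mean of per-class F1 over all labels seen in gold or pred."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[g] += 1  # true class gains a false negative

    scores = []
    for label in sorted(set(gold) | set(pred)):
        # F1 = 2*TP / (2*TP + FP + FN)
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy example: one clear cell case is mislabeled as papillary.
gold = ["clear cell", "clear cell", "papillary", "chromophobe"]
pred = ["clear cell", "papillary", "papillary", "chromophobe"]

print(round(macro_f1(gold, pred), 3))  # 0.778
```

Here the single error lowers F1 for both the missed class and the over-predicted class to 2/3 each, giving a macro average of 7/9 even though three of four predictions are correct.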

Reacting to Discrepancies

A detailed review of discrepancies, particularly those concerning histological subtypes, pointed to recurring problems with integrating medical history. This underscores the need for mechanisms that flag complex cases for human review, especially where an automated system may misinterpret foundational medical information.
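A flagging mechanism of this kind could be as simple as a rule that routes reports mentioning prior disease to a reviewer. The sketch below is a hypothetical heuristic, not something described in the source: the cue phrases, the `HISTORY_CUES` pattern, and the `needs_review` function are assumptions for illustration.

```python
import re

# Hypothetical cue phrases suggesting the report refers to medical history.
HISTORY_CUES = re.compile(
    r"\b(history of|previously diagnosed|status post|"
    r"prior (?:biopsy|resection|nephrectomy))\b",
    re.IGNORECASE,
)


def needs_review(report_text: str) -> bool:
    """Return True when the report contains a medical-history cue."""
    return HISTORY_CUES.search(report_text) is not None


reports = [
    "Patient with history of clear cell RCC, now with a lung nodule.",
    "Kidney, left, partial nephrectomy: papillary RCC.",
]
flagged = [text for text in reports if needs_review(text)]
print(len(flagged))  # 1
```

A keyword rule like this will over-flag and under-flag in practice; it only illustrates where a human checkpoint could sit in the workflow.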

Beyond Regex: The LLM Edge

Compared with traditional regex-based tools, the LLM pipeline performed markedly better, especially in extracting rarer kidney tumor subtypes. Regex performed adequately on common subtypes but struggled with historical variations in terminology and with results presented in complex reports. The LLM pipeline, by contrast, maintained a high level of precision, illustrating the benefit of modern AI methods for data extraction in healthcare.
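The brittleness of pattern-based extraction is easy to reproduce. The sketch below is a deliberately simple, hypothetical baseline and not the regex tool evaluated in the source: the pattern, the three subtypes it covers, and the example sentences are assumptions.

```python
import re
from typing import Optional

# Hypothetical baseline: subtype name immediately followed by the tumor name.
SUBTYPE_PATTERN = re.compile(
    r"\b(clear cell|papillary|chromophobe)\s+(?:renal cell carcinoma|RCC)\b",
    re.IGNORECASE,
)


def regex_subtype(text: str) -> Optional[str]:
    """Return the matched subtype in lower case, or None if nothing matches."""
    match = SUBTYPE_PATTERN.search(text)
    return match.group(1).lower() if match else None


# Common phrasing: matched.
print(regex_subtype("Diagnosis: Clear cell renal cell carcinoma, grade 2."))
# Same diagnosis with a different word order: missed.
print(regex_subtype("Renal cell carcinoma, clear cell type."))
```

The first call returns `clear cell` and the second returns `None`, even though both sentences state the same histology. Each new phrasing needs another hand-written pattern, which is where variation in terminology and report structure becomes costly.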

Conclusions on Internal Consistency

Assessing internal consistency across a broader cohort revealed a high degree of concordance between the extracted histologies and the associated IHC results. This supported the reliability of the extraction pipeline and showed that the model could interpret complex clinical data accurately.

In summary, refining an LLM-driven pipeline for medical information extraction surfaced both the challenges of clinical reporting and workable solutions to them. A comprehensive dataset, iterative refinement, human expertise, and LLM-based extraction together improve the quality and reliability of medical data extraction workflows, which may in turn support better clinical decision-making and patient outcomes.