The EDLMOA-CHM Technique: Enhancing Health Monitoring in the IoMT

In the evolving landscape of healthcare technology, the Internet of Medical Things (IoMT) presents a transformative opportunity for improving health monitoring and patient safety. In this context, the EDLMOA-CHM technique emerges as a promising solution, aimed at developing effective methods for monitoring health conditions, thereby enhancing overall healthcare system security.

Overview of the EDLMOA-CHM Technique

The EDLMOA-CHM method is structured in several key stages:

- Data Pre-processing

- Feature Selection

- Ensemble Classification Process

- Parameter Tuning of the Model

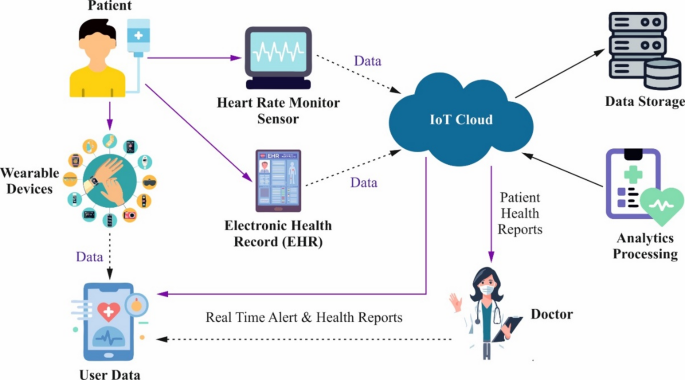

The systematic application of these stages facilitates the comprehensive evaluation of health monitoring strategies as depicted in the accompanying diagram (Figure 2).

Data Pre-processing Stage

The journey begins with data pre-processing, which utilizes the Z-score normalization method. This technique transforms raw data into a standardized format, advantageous for datasets with varying scales.

Advantages of Z-score Normalization

- It centers each feature at zero mean with unit standard deviation, so features measured on different scales contribute comparably. Because it relies on the mean and standard deviation rather than the minimum and maximum, a single extreme value distorts it less than it would distort min-max scaling.

- It improves the convergence of gradient-based learning algorithms and helps them train stably.

- It is a linear transformation, so it preserves the shape of the data distribution, which matters for models that assume normally distributed input features.

The formula for calculating the Z-score is given by:

[

Z_{\text{score}} = \frac{y - \mu}{\sigma}

]

Where:

- (y) is the data point.

- (\mu) is the mean.

- (\sigma) is the standard deviation.

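This expresses each raw value as its deviation from the mean, measured in standard deviations. The Z-score itself is unbounded, but for approximately normally distributed data about 99.7% of the normalized values fall between -3 and +3.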
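The normalization above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the authors' implementation; the function name `z_score_normalize`, the choice of the population standard deviation, the handling of constant features, and the sample readings are all assumptions made for the example.

```python
from statistics import fmean, pstdev


def z_score_normalize(values):
    """Return (y - mu) / sigma for every y in values.

    Uses the population standard deviation (an assumption; the source
    does not say whether population or sample deviation is used).
    """
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant feature has no spread; map it to zeros instead of dividing by 0.
        return [0.0 for _ in values]
    return [(y - mu) / sigma for y in values]


# Hypothetical heart-rate readings in beats per minute, for illustration only.
heart_rate = [62.0, 70.0, 75.0, 81.0, 140.0]
scaled = z_score_normalize(heart_rate)
```

After the call, `scaled` has zero mean and unit standard deviation, and the reading of 140 remains the largest value: the transformation rescales the data without changing the ordering or the shape of the distribution.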

BGWO-Based Feature Selection Process

Next, we delve into the feature selection process, employing the Binary Grey Wolf Optimizer (BGWO) technique. The BGWO is distinguished for its balanced exploration and exploitation capabilities, mimicking the social hierarchy and hunting behaviors of grey wolves.

Key Features of BGWO

- It avoids local optima and converges quickly, making it well-suited for high-dimensional datasets.

- The binary nature facilitates efficient handling of discrete feature selection problems, reducing complexity while preserving accuracy.

The feature selection process updates each wolf's position using the alpha, beta, and delta wolves, which hold the three best solutions found so far. The core update is:

[

X_{i}^{t+1} = \text{Crossover}(x_{1}, x_{2}, x_{3})

]

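Here (x_{1}), (x_{2}), and (x_{3}) are the binary vectors obtained by moving wolf (i) toward the alpha, beta, and delta wolves, so each new position mixes information from the three leaders.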

A specific fitness function is used, balancing the number of features and classification accuracy, thus optimizing the feature subset for improved interpretability and model performance.
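The crossover step and the fitness trade-off can be sketched as follows. This is a hedged illustration rather than the authors' code: the per-bit uniform crossover follows the form commonly used in binary grey wolf optimizers, and the weighted-sum fitness with `alpha = 0.99` is a frequently used convention that the source does not confirm. The function names and the way the classification error is supplied are assumptions.

```python
import random


def crossover(x1, x2, x3, rng):
    """Build a new binary position by copying each bit from x1, x2, or x3
    with equal probability (assumed form of the Crossover operator)."""
    return [rng.choice((a, b, c)) for a, b, c in zip(x1, x2, x3)]


def fitness(mask, error_rate, alpha=0.99):
    """Weighted sum of classification error and fraction of selected
    features; lower is better. alpha is an assumed weighting."""
    if not any(mask):
        # An empty feature subset cannot be used for classification.
        return float("inf")
    selected_ratio = sum(mask) / len(mask)
    return alpha * error_rate + (1 - alpha) * selected_ratio


rng = random.Random(0)
# Hypothetical candidate masks derived from the alpha, beta, and delta wolves.
new_position = crossover([1, 1, 0, 0], [1, 0, 0, 1], [1, 0, 0, 0], rng)
```

In practice `error_rate` would come from evaluating a classifier on the features selected by `mask`. With equal error, a mask that keeps fewer features scores lower, which is how the fitness function favors compact subsets.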

Ensemble Classification Process

The next phase applies an ensemble of three models for classification: the Temporal Convolutional Network (TCN), the Gated Recurrent Unit (GRU, used here in an attention-based bidirectional form), and the Hierarchical Deep Belief Network (HDBN). This choice is motivated by the ability of ensemble methods to improve robustness over any single model.

Temporal Convolutional Network (TCN)

TCNs are adept at capturing long-range temporal dependencies. By stacking dilated 1D convolutions, they cover long input sequences while keeping the model small. The resulting receptive field (P) is:

[

P = 1 + (\lambda - 1) \cdot x \cdot \sum\limits_{l} \delta^{l}

]

Where:

- (\lambda) is the kernel size,

- (x) is the number of stacks,

- (\delta) is the dilation rate.
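A short worked example makes the formula concrete. The sketch below assumes that the superscript in the formula indexes the layer, so the sum runs over the dilation rate of each layer in a stack, and that each layer contains one convolution; neither assumption is stated in the source. The function name is hypothetical.

```python
def tcn_receptive_field(kernel_size, num_stacks, dilations):
    """Evaluate P = 1 + (kernel_size - 1) * num_stacks * sum(dilations).

    dilations lists the dilation rate of each layer within one stack
    (assumed reading of the sum in the formula).
    """
    return 1 + (kernel_size - 1) * num_stacks * sum(dilations)


# Kernel size 3, one stack, dilation doubling per layer: 1, 2, 4, 8.
single_stack = tcn_receptive_field(3, 1, [1, 2, 4, 8])  # 1 + 2 * 1 * 15 = 31
# The same layers repeated in two stacks.
double_stack = tcn_receptive_field(3, 2, [1, 2, 4, 8])  # 1 + 2 * 2 * 15 = 61
```

Four layers with a kernel of only three taps already cover 31 time steps, which illustrates how dilation extends the temporal context without enlarging the kernels.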

Attention-Based BiGRU Model

The attention-based BiGRU model extends the standard GRU in two ways. Its bidirectional structure reads the sequence both forward and backward, so each time step sees past and future context. Its attention mechanism assigns a relevance weight to each time step, which lets the classifier focus on the most informative parts of the input and makes its decisions easier to interpret.
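The relevance weighting can be illustrated with a small attention-pooling sketch. The source does not specify the scoring function, so this example assumes a simple dot product between each hidden state and a learned context vector, followed by a softmax; the function name, the context vector, and the sample hidden states are all hypothetical. In a real model the hidden states would be the concatenated forward and backward GRU outputs.

```python
import math


def attention_pool(hidden_states, context):
    """Weight each time step by softmax(h . context) and return
    (weights, weighted sum of the hidden states)."""
    scores = [sum(h_d * c_d for h_d, c_d in zip(h, context)) for h in hidden_states]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return weights, pooled


# Three hypothetical time steps with two-dimensional hidden states.
states = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
weights, pooled = attention_pool(states, context=[1.0, 0.0])
```

The third time step aligns most strongly with the context vector, so it receives the largest weight and dominates the pooled representation. Inspecting `weights` is also what gives this kind of model its interpretability.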

Hierarchical Deep Belief Network (HDBN)

The HDBN extracts hierarchical representations from the input data. It stacks multiple Restricted Boltzmann Machines (RBMs), with each layer learning features of the layer below, and is then refined by supervised fine-tuning so that the resulting representation captures complex relationships within the data.

POA-Based Parameter Tuning Model

To optimize model performance further, the Pelican Optimization Algorithm (POA) is employed for hyperparameter tuning. This approach is noted for its global search capabilities and quick convergence, which help it navigate the optimization landscape effectively.

Mechanism of POA

The POA treats each pelican as a candidate solution and iteratively adjusts its position based on its fitness. The updates mimic how pelicans approach and attack prey, and the search radius shrinks over iterations so that the population converges toward an optimal solution.

Key equations driving the POA include:

- Position Update Mechanism

- Fitness Determination
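The source names these two components without giving their equations, so the sketch below is an assumption: it follows the two-phase update rules as commonly published for POA (moving toward a randomly placed prey, then a local search whose radius shrinks with the iteration count), with a constant of 0.2 and one prey per iteration. All function names, parameter defaults, and the toy objective are hypothetical; for hyperparameter tuning, the objective would be a validation error measured on the ensemble.

```python
import random


def clip(value, low, high):
    """Keep a coordinate inside its search bounds."""
    return min(max(value, low), high)


def pelican_optimize(objective, bounds, n_pelicans=20, n_iter=100, seed=0):
    """Minimize objective over the box given by bounds (list of (low, high))."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_pelicans)]
    fit = [objective(p) for p in pop]  # fitness determination
    best_idx = min(range(n_pelicans), key=lambda i: fit[i])
    best, best_fit = pop[best_idx][:], fit[best_idx]

    def try_move(i, candidate):
        """Accept the candidate only if it improves pelican i (greedy update)."""
        nonlocal best, best_fit
        candidate = [clip(v, lo, hi) for v, (lo, hi) in zip(candidate, bounds)]
        f = objective(candidate)
        if f < fit[i]:
            pop[i], fit[i] = candidate, f
            if f < best_fit:
                best, best_fit = candidate[:], f

    for t in range(1, n_iter + 1):
        prey = [rng.uniform(lo, hi) for lo, hi in bounds]
        prey_fit = objective(prey)
        radius = 0.2 * (1 - t / n_iter)  # search radius shrinks over iterations
        for i in range(n_pelicans):
            # Phase 1 (exploration): move toward better prey, away from worse prey.
            intensity = rng.choice((1, 2))
            if prey_fit < fit[i]:
                cand = [x + rng.random() * (p - intensity * x) for x, p in zip(pop[i], prey)]
            else:
                cand = [x + rng.random() * (x - p) for x, p in zip(pop[i], prey)]
            try_move(i, cand)
            # Phase 2 (exploitation): small perturbation around the current position.
            cand = [x + radius * (2 * rng.random() - 1) * x for x in pop[i]]
            try_move(i, cand)
    return best, best_fit


def sphere(point):
    """Toy objective standing in for a validation error."""
    return sum(v * v for v in point)


search_bounds = [(-5.0, 5.0), (-5.0, 5.0)]
best, best_fit = pelican_optimize(sphere, search_bounds)
```

Because every move is accepted only when it improves a pelican, the best fitness never gets worse from one iteration to the next. Each coordinate of `best` would map to one hyperparameter, such as a learning rate or a layer size, with `bounds` defining its allowed range.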

The efficiency of the hyperparameter selection contributes to the overall accuracy of the classification model, ensuring that the final model is well-tuned to the specific requirements and characteristics of the dataset.

This structured approach combines data pre-processing, feature selection, ensemble classification, and parameter tuning, with the aim of improving health monitoring in the IoMT and contributing to patient safety and healthcare system robustness.