Leveraging Machine Learning to Analyze Charge Interactions and Energies

The Core Concept and Its Significance

At the intersection of chemistry and machine learning lies a method for accurately modeling charged systems called Latent Ewald Summation (LES). LES uses machine learning to infer atomic charges, and the long-range electrostatic interactions they produce, directly from reference energies and atomic forces, without requiring the charges themselves as training labels. This matters for applications ranging from materials science to drug design, where understanding charge interactions is essential.

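Modeling electrostatic interactions has traditionally been complex and computationally demanding: the Coulomb interaction decays slowly, as 1/r, so it cannot simply be truncated at a short distance. Machine-learning interatomic potentials offer near-quantum accuracy at much lower cost, but most of them predict energies from atomic neighborhoods within a finite cutoff radius and therefore miss interactions beyond it. LES is designed to capture those long-range effects while keeping the speed of a machine-learning model.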

Key Components and Variables

The LES framework combines three elements:

- Short-Range Interactions (SR): The first step is capturing local interactions among atoms in close proximity. A short-range model predicts these from each atom's neighborhood within a cutoff radius, since they dominate the potential energy landscape.

- Long-Range Interactions (LR): Long-range effects come next; they are crucial for systems with charged entities. LES assigns each atom a latent charge and evaluates the electrostatic energy of those charges with Ewald summation, a technique that splits the slowly converging Coulomb sum over a periodic system into rapidly converging parts. The total energy is the sum of the short-range and long-range contributions.

- Hidden Variables (Charges): In LES, the hidden (latent) variables are atomic charges predicted from each atom's local environment. Because they are learned rather than fixed per element, they can adapt to different chemical environments and states, which significantly enhances the model's descriptive power.
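To make the long-range term concrete, here is a minimal sketch of the reciprocal-space part of Ewald summation applied to a set of charges, standing in for the latent charges an LES model would predict. It assumes a cubic periodic box, Gaussian-smeared charges of width `sigma`, a wavevector cutoff `k_cutoff`, and units where e²/(4πε₀) = 1; the function name and default values are hypothetical, and the real-space and self-energy terms of full Ewald summation are omitted. In these units the energy is E_lr = (2π/V) Σ over 0 < |k| < k_cutoff of exp(−σ²k²/2)/k² · |S(k)|², with structure factor S(k) = Σᵢ qᵢ exp(i k·rᵢ).

```python
import cmath
import math
from itertools import product


def ewald_reciprocal_energy(positions, charges, box_length, sigma=1.0, k_cutoff=2.0):
    """Hypothetical sketch: reciprocal-space Ewald energy of Gaussian-smeared charges.

    Assumes a cubic periodic box of side `box_length`, a (near-)neutral system,
    and units with e^2 / (4 pi eps0) = 1. Real-space and self-energy terms are omitted.
    """
    volume = box_length ** 3
    dk = 2.0 * math.pi / box_length      # spacing of the allowed wavevectors k = dk * n
    n_max = int(k_cutoff / dk)           # no larger integer index can give |k| < k_cutoff
    total = 0.0
    for n in product(range(-n_max, n_max + 1), repeat=3):
        if n == (0, 0, 0):
            continue                     # k = 0 is dropped, as is standard for neutral systems
        k = [dk * ni for ni in n]
        k2 = sum(c * c for c in k)
        if k2 >= k_cutoff ** 2:
            continue                     # outside the spherical wavevector cutoff
        # Structure factor S(k) = sum_i q_i exp(i k . r_i)
        s_k = sum(q * cmath.exp(1j * sum(ka * ra for ka, ra in zip(k, r)))
                  for q, r in zip(charges, positions))
        # Gaussian smearing damps high-k terms; 1/k^2 is the Coulomb kernel in k-space
        total += math.exp(-0.5 * sigma ** 2 * k2) / k2 * abs(s_k) ** 2
    return 2.0 * math.pi / volume * total


# Usage: an ion pair of opposite charge in a 10 x 10 x 10 box
pair = [(1.0, 1.0, 1.0), (3.0, 1.0, 1.0)]
e_lr = ewald_reciprocal_energy(pair, [1.0, -1.0], 10.0)
print(f"long-range energy: {e_lr:.6f}")
```

Because the energy depends on the charges only through |S(k)|², it is unchanged when every charge flips sign or when all atoms are translated together, and it scales with the square of the charges. Every term is differentiable in both positions and charges, which is what would let an automatic-differentiation library propagate energy and force errors back into whatever network predicts the charges.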

Lifecycle of the Process

To understand how machine learning models are developed in this context, it’s helpful to consider a step-by-step lifecycle:

- Data Collection: The initial phase involves gathering substantial datasets of total energies and atomic forces, typically from quantum-mechanical calculations. LES does not need reference atomic charges; the charges are inferred during training.

- Training the Model: The collected data is used to train the machine learning model, which learns to map atomic configurations to their energies and forces.

- Validation and Testing: After training, the model is evaluated on separate data it has not seen, to check that its predictions remain accurate in new scenarios.

- Application: Finally, the model is applied in various scenarios, such as predicting molecular interactions in drug design or modeling solid-electrolyte interfaces.
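The validation-and-testing step depends on keeping evaluation data separate from training data. Below is a minimal sketch of a seeded train/validation/test split over whole configurations; the function name `split_dataset` and its default fractions are hypothetical choices, not part of LES.

```python
import random


def split_dataset(samples, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Hypothetical helper: shuffle samples and split them into disjoint train/val/test lists."""
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1.0:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    items = list(samples)
    random.Random(seed).shuffle(items)          # seeded, so the split is reproducible
    n_test = int(round(test_fraction * len(items)))
    n_val = int(round(val_fraction * len(items)))
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]              # everything left over is used for fitting
    return train, val, test


# Usage: here each integer stands in for one configuration (positions, energy, forces)
configs = list(range(100))
train, val, test = split_dataset(configs)
print(len(train), len(val), len(test))
```

Splitting by configuration keeps all atoms of one structure in the same set. Frames taken from one molecular-dynamics trajectory are correlated, so in practice it can be safer to split by trajectory or by time block rather than by individual frame.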

Practical Examples

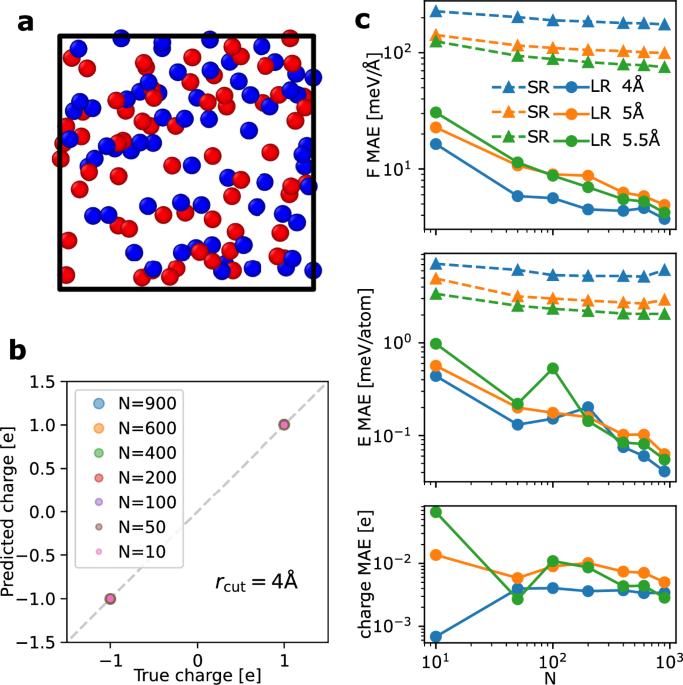

Gas of Point Charges

In a benchmark experiment, the LES model was trained on configurations of point charges: atoms carrying charges of opposite sign that interacted through both Coulomb and Lennard-Jones potentials. The model recovered the atomic charges with high fidelity from energies and forces alone, even with minimal training data, underscoring how efficiently it learns these interactions.
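This benchmark rests on inferring charges from energies rather than from charge labels. The sketch below is a deliberately simplified, hypothetical analogue: a non-periodic cluster of equal numbers of cations and anions with one shared charge magnitude, and a two-parameter linear least-squares fit in place of a neural network. The charge magnitude, Lennard-Jones parameters, configuration sizes, and all function names are assumptions for illustration only.

```python
import math
import random


def pair_features(positions, signs, lj_sigma=1.0):
    """Return (coulomb_feature, lj_feature) for one configuration.

    Assumed model: atom i carries charge signs[i] * q, units with e^2/(4 pi eps0) = 1,
    so E = q^2 * coulomb_feature + 4 * eps * lj_feature.
    """
    coulomb = lj = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.dist(positions[i], positions[j])
            coulomb += signs[i] * signs[j] / r
            sr6 = (lj_sigma / r) ** 6
            lj += sr6 * sr6 - sr6
    return coulomb, lj


def random_configuration(n_atoms, box, rng, min_dist=0.9):
    """Random non-periodic positions in a cube, rejecting atoms closer than min_dist."""
    positions = []
    while len(positions) < n_atoms:
        trial = [rng.uniform(0.0, box) for _ in range(3)]
        if all(math.dist(trial, p) >= min_dist for p in positions):
            positions.append(trial)
    return positions


def fit_charge_and_lj(features, energies):
    """Least-squares fit of E = a * coulomb + b * lj; returns (|q|, eps) with a = q^2, b = 4 eps."""
    s11 = sum(c * c for c, _ in features)
    s12 = sum(c * l for c, l in features)
    s22 = sum(l * l for _, l in features)
    t1 = sum(c * e for (c, _), e in zip(features, energies))
    t2 = sum(l * e for (_, l), e in zip(features, energies))
    det = s11 * s22 - s12 * s12          # 2x2 normal equations solved by Cramer's rule
    a = (t1 * s22 - s12 * t2) / det
    b = (s11 * t2 - s12 * t1) / det
    return math.sqrt(a), b / 4.0


# Synthetic reference data (assumed values): 4 cations (+q) and 4 anions (-q) per configuration
rng = random.Random(42)
true_q, true_eps = 1.0, 0.5
signs = [1, -1] * 4
features, energies = [], []
for _ in range(6):
    f = pair_features(random_configuration(8, 5.0, rng), signs)
    features.append(f)
    energies.append(true_q ** 2 * f[0] + 4 * true_eps * f[1])

q_fit, eps_fit = fit_charge_and_lj(features, energies)
print(f"recovered |q| = {q_fit:.6f}, eps = {eps_fit:.6f}")
```

Only the magnitude of the charge is recovered: flipping every charge leaves the energy unchanged, so energies alone cannot fix the overall sign. With noise-free synthetic data a handful of configurations suffices here, which loosely mirrors the small-data behavior described above, but real reference data would add noise and many more degrees of freedom.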

Aqueous Electrolyte Solutions

Another example is potassium fluoride (KF) in aqueous solution, a multi-species system. Using LES, researchers accurately predicted forces and energies that capture the interplay between water molecules and ions, providing insight into the electrostatic interactions that govern electrolyte behavior.

Molecular Dimer Interactions

Molecular dimers are a further test of the LES framework. Trained on small datasets, the model efficiently predicted binding energies and forces between charged molecules, interactions that are central to applications such as drug discovery.
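The binding energy of a dimer is conventionally E_bind = E(AB) − E(A) − E(B), where negative values indicate a bound pair. A minimal sketch follows; the bare point-charge Coulomb sum is an assumed stand-in for a trained model, and the helper names and toy geometry are hypothetical.

```python
import math


def coulomb_energy(atoms):
    """Pairwise Coulomb energy of point charges, in units where e^2 / (4 pi eps0) = 1.

    `atoms` is a list of (charge, (x, y, z)) tuples.
    """
    energy = 0.0
    for i in range(len(atoms)):
        for j in range(i + 1, len(atoms)):
            (qi, ri), (qj, rj) = atoms[i], atoms[j]
            energy += qi * qj / math.dist(ri, rj)
    return energy


def binding_energy(energy_fn, monomer_a, monomer_b):
    """E_bind = E(AB) - E(A) - E(B); intramolecular terms cancel, leaving the A-B interaction."""
    return energy_fn(monomer_a + monomer_b) - energy_fn(monomer_a) - energy_fn(monomer_b)


# Toy dimer: a two-atom cation (total +1) and a single-atom anion (-1)
cation = [(0.5, (0.0, 0.0, 0.0)), (0.5, (1.0, 0.0, 0.0))]
anion = [(-1.0, (3.0, 0.0, 0.0))]
e_bind = binding_energy(coulomb_energy, cation, anion)
print(f"binding energy: {e_bind:.6f}")  # -5/12 in these units
```

For charged monomers this interaction decays only as 1/r, so a purely short-range model would report exactly zero binding once the monomers sit farther apart than its cutoff, which is the gap a long-range term is meant to fill.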

Common Pitfalls and Avoidance Strategies

One of the most significant pitfalls in machine learning modeling is overfitting: a model performs well on its training data but poorly on unseen data. To detect it, hold out a validation set that is never used for fitting, monitor the validation error during training, and stop when that error no longer improves.
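Monitoring the validation error can be sketched as an early-stopping helper that signals a stop once the validation loss has not improved for a set number of epochs. The class name and the `patience` and `min_delta` parameters are illustrative assumptions, and the loss curve in the usage lines is made-up data, not results from any model.

```python
import math


class EarlyStopping:
    """Hypothetical helper: stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait after the last improvement
        self.min_delta = min_delta    # minimum decrease that counts as an improvement
        self.best_loss = math.inf
        self.best_epoch = -1
        self.epoch = -1

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        self.epoch += 1
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss, self.best_epoch = val_loss, self.epoch
            return False
        return self.epoch - self.best_epoch >= self.patience


# Usage with a made-up validation-loss curve that starts rising after epoch 2
stopper = EarlyStopping(patience=2)
for val_loss in [1.0, 0.8, 0.7, 0.72, 0.75, 0.9]:
    if stopper.step(val_loss):
        break
print(stopper.best_epoch, stopper.epoch)  # prints "2 4"
```

In a real training loop one would also save the model parameters whenever `best_epoch` changes and restore them after stopping, so the final model is the one with the lowest validation error.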

Additionally, for systems that exhibit charge transfer, the model must adapt to varying environments: the same element may carry different charges in different chemical surroundings. Making the latent charges depend on each atom's environment, rather than fixing one charge per element, is a strategic way to manage this complexity.
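As a toy illustration of environment-dependent latent charges, the sketch below makes each atom's charge a linear function of its coordination number, a crude hypothetical stand-in for the neural network that would map a learned descriptor to a charge. The uniform shift that enforces a fixed total charge is an extra assumption of this sketch rather than something the text above specifies, and the element parameters are arbitrary.

```python
import math


def coordination_numbers(positions, cutoff):
    """Count neighbours within `cutoff` of each atom (a crude stand-in for a learned descriptor)."""
    counts = [0] * len(positions)
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if math.dist(positions[i], positions[j]) < cutoff:
                counts[i] += 1
                counts[j] += 1
    return counts


def latent_charges(elements, positions, base, slope, cutoff=3.0, total_charge=0.0):
    """Hypothetical model: q_i = base[e] + slope[e] * CN_i, then a uniform shift so sum(q) = total_charge."""
    cn = coordination_numbers(positions, cutoff)
    raw = [base[e] + slope[e] * c for e, c in zip(elements, cn)]
    shift = (total_charge - sum(raw)) / len(raw)   # assumed neutrality (or fixed-charge) constraint
    return [q + shift for q in raw]


# Usage: K0 has two F neighbours, K2 is isolated, so the two K atoms get different charges
elements = ["K", "F", "K", "F"]
positions = [(0.0, 0.0, 0.0), (2.5, 0.0, 0.0), (20.0, 0.0, 0.0), (0.0, 2.5, 0.0)]
base = {"K": 0.9, "F": -0.9}      # arbitrary illustrative parameters
slope = {"K": -0.05, "F": 0.05}
charges = latent_charges(elements, positions, base, slope)
print([round(q, 3) for q in charges])
```

In an actual model the descriptor and the mapping to charges would both be learned, so the environment dependence comes out of training rather than being hand-chosen as it is here.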

Tools and Metrics

Models of this kind are commonly built with Python deep-learning libraries such as PyTorch or TensorFlow. Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) of predicted energies and forces are the standard measures of model accuracy and give a quantitative view of performance.
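Both metrics are simple to compute; a minimal sketch follows. The function name is hypothetical, and the numbers in the usage lines are made-up values, for example flattened force components, not results from any model.

```python
import math


def error_metrics(predicted, reference):
    """Return (MAE, RMSE) between two equal-length, non-empty sequences of numbers."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [p - r for p, r in zip(predicted, reference)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


# Usage with made-up values: one large error out of three
mae, rmse = error_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
print(f"MAE = {mae:.4f}, RMSE = {rmse:.4f}")
```

RMSE is never smaller than MAE, and a large gap between them indicates that a few large errors dominate, which is why the two are usually reported together.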

Variations and Alternatives

LES is one of several approaches to long-range interactions in machine-learning potentials; alternatives include:

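- 4G-HDNNP: Fourth-generation high-dimensional neural network potentials learn explicit atomic charges, fitted to reference charges from quantum-mechanical calculations, and use a charge-equilibration step to describe nonlocal charge transfer. They therefore require such reference charges, which are not uniquely defined because atomic charges are not quantum-mechanical observables.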

- ACE-based Models: Standard Atomic Cluster Expansion (ACE) models predict energies from local atomic environments within a cutoff radius, so on their own they miss the long-range electrostatic behavior that LES is designed to capture.

Each of these methods has its benefits and drawbacks, requiring careful consideration based on application needs.

FAQ

What are the advantages of using machine learning for modeling charges?

Machine learning models can greatly reduce computation time compared with direct quantum-mechanical calculations while still accurately predicting charge interactions and energies, which matter in domains from materials science to biology.

How does the LES framework differ from traditional methods?

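Like several other long-range schemes, LES separates short-range and long-range interactions. What distinguishes it is that the atomic charges behind the long-range term are latent variables learned from energies and forces, so no reference charges are needed; the electrostatic energy of those charges is then evaluated with Ewald summation, avoiding much of the computational burden associated with conventional electrostatic modeling methods.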

By removing the need for reference charges, this approach streamlines model development and opens new avenues for research and application across diverse scientific fields.