Advancements in Brain Tumour Diagnosis: A Transformative Model

Introduction

The landscape of medical imaging is ever-evolving, particularly in diagnosing critical conditions like brain tumours. Traditional methods often struggle with challenges such as noisy and sparse datasets, leading to unreliable predictions. This situation not only hampers diagnostic precision but also threatens the trust clinicians place in technological advancements. Addressing these limitations calls for innovative approaches that maximize scalability, reliability, and interpretability in clinical settings.

Challenges in Brain Tumour Diagnosis

Diagnosing brain tumours through imaging is intricate, primarily because medical data is noisy. Datasets are also often sparse, providing too little information for accurate predictions. Traditional models tend to rely heavily on intensity-based features, which can cause them to overlook critical information. Furthermore, these models often lack transparency in their predictions, making it difficult for medical professionals to interpret results confidently.

Innovative Model Proposal

To counter these challenges, an advanced model has been introduced that integrates deep learning techniques, robust data augmentation strategies, and explainable artificial intelligence (XAI). This approach aims to provide a reliable, interpretable, and adaptable solution for brain tumour diagnosis that overcomes the limitations of traditional methodologies.

System Architecture

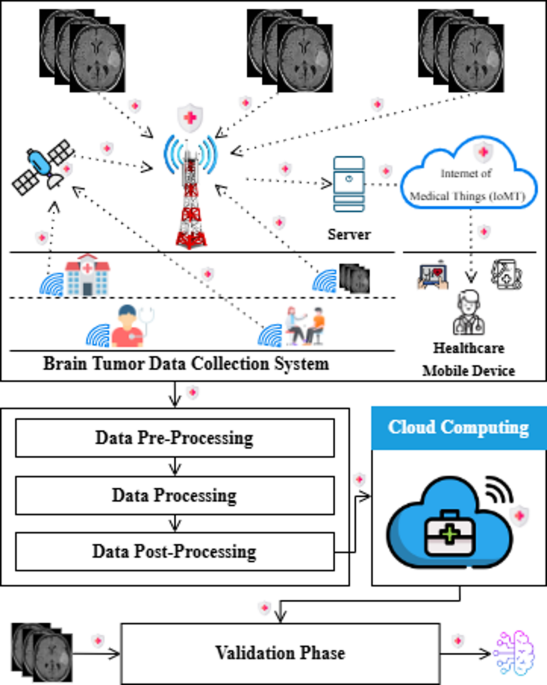

The proposed model leverages a comprehensive system, as depicted in Figure 1, which integrates data from multiple sources including hospitals, mobile medical devices, and even satellites via the Internet of Medical Things (IoMT). This interconnected ecosystem enables a rich collection of imaging data, which undergoes a three-stage process: pre-processing, processing, and post-processing.

- Pre-Processing: This stage focuses on data enhancement, including standardizing and cleaning the collected images. It ensures that the data fed into the model is both consistent and of high quality.

- Processing: Here, advanced deep learning models analyze the enhanced data to detect potential brain tumours. This stage is where the model truly shines, employing sophisticated techniques to identify patterns indicative of tumours.

- Post-Processing: This phase emphasizes the need for interpretability. The results obtained are refined, and insights are generated to inform clinical decision-making. The post-processed outcomes are then made available through cloud computing, facilitating real-time accessibility for medical professionals.

The Role of Cloud Computing

Figure 2 illustrates the crucial role cloud computing plays in this advanced framework. After data is collected and processed, it is stored on cloud-based servers, which centralizes data management. This setup provides secure, scalable, and real-time access to healthcare professionals, enabling seamless collaboration and ensuring that vast amounts of medical imaging data can be analyzed efficiently.

Visualization Tools

Once the data is in the cloud, clinicians can use advanced visualization tools to interpret the processed information easily. These tools transform complex datasets into clear, actionable insights, thereby supporting quicker decision-making. Whether or not a tumour is detected, the results are presented in an interpretable format, which supports the diagnostic workflow.

Streamlined Workflows

The direct flow from post-processing to cloud integration underscores the system’s efficiency. Streamlining these workflows is intended to support diagnostic accuracy and shorten the path from scan to result, paving the way for more effective clinical practice.

Data Collection and Preprocessing

Data Collection

The initial phase involves collecting a curated set of brain images. Image quality and accuracy are vital at this stage, since any errors propagate through the subsequent processing phases.

Preprocessing Layers

In the preprocessing pipeline, the collected MRI images undergo a series of transformations to enhance their usability for analysis. Images are resized to standardized dimensions, and pixel intensities are normalized. Real-time data augmentation techniques, such as rotations and flips, are applied to improve model performance by increasing training diversity.
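The resize, normalize, and augment steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration and not the authors' pipeline: the function names, the 4x4 target size, nearest-neighbour resizing, and the restriction to 90-degree rotations are all assumptions chosen to keep the example short. A real pipeline would typically use an imaging library and arbitrary rotation angles.

```python
import random


def resize_nearest(image, out_h, out_w):
    """Resize a 2D grayscale image (list of rows) with nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def normalize(image, max_value=255.0):
    """Scale pixel intensities from [0, max_value] to [0, 1]."""
    return [[pixel / max_value for pixel in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def augment(image, rng):
    """Randomly flip the image, then rotate it by a random multiple of 90 degrees."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    for _ in range(rng.randrange(4)):
        image = rotate_90(image)
    return image


def preprocess(image, size=(4, 4), rng=None):
    """Resize and normalize; augment only when a random generator is supplied.

    Augmentation is meant for training data, so pass rng=None at inference time.
    """
    image = normalize(resize_nearest(image, *size))
    return augment(image, rng) if rng is not None else image
```

Passing a seeded `random.Random` instance as `rng` makes the augmentation reproducible, which helps when debugging a training run.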

Deep Learning Phase

The heart of the proposed system lies in the deep learning phase, which trains the model to distinguish between brain images categorized as “Tumour” and “No Tumour.” A range of architectures, including a CNN, VGG16, and NASNet Large, was evaluated. Among these, NASNet Large proved to be the most effective, demonstrating superior accuracy. Its architecture is itself the product of an automated neural architecture search rather than manual design.

Convolutional Operations

Convolutional operations are central to feature extraction in NASNet Large. The model leverages depthwise separable convolutions to enhance computational efficiency while retaining critical data features.
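The efficiency gain from depthwise separable convolutions can be seen by counting weights. A standard convolution learns one k x k filter for every input-output channel pair, whereas a separable one learns one k x k filter per input channel followed by a 1 x 1 pointwise mix. The sketch below compares the two, ignoring biases. The 3 x 3 kernel and the 64 and 128 channel counts are illustrative assumptions, not values taken from NASNet Large.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (biases ignored)."""
    depthwise = kernel * kernel * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out            # 1x1 convolution that mixes channels
    return depthwise + pointwise


standard = standard_conv_params(3, 64, 128)
separable = separable_conv_params(3, 64, 128)
print(standard, separable, round(standard / separable, 1))  # prints: 73728 8768 8.4
```

For this hypothetical layer, the separable form needs roughly one eighth of the weights.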

Predictions

In the prediction phase, the trained model applies the patterns it learned during training to images it has not seen before. Evaluating these predictions on held-out data indicates how well the model generalizes across varying inputs.

Explanation through XAI Techniques

Explainable Artificial Intelligence (XAI) techniques, such as LIME and Grad-CAM, complement the model’s accuracy with interpretability. These techniques illuminate the decision-making process, helping clinicians understand the key features that informed the model’s predictions.

LIME

LIME focuses on individual predictions by perturbing input data to observe resultant output changes. It identifies significant features influencing classification decisions, thereby making the model more transparent.
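The perturb-and-observe idea can be illustrated with a simplified occlusion test. This is not LIME itself, which samples many random perturbations and fits a weighted linear surrogate model to them. Here each patch is masked once, and the resulting drop in the prediction is recorded. The `predict` callable, the patch size, and the `toy_predict` stand-in are hypothetical and exist only to make the sketch runnable.

```python
def occlusion_importance(image, predict, patch=2, baseline=0.0):
    """Score each patch by how much masking it lowers the model's prediction.

    image:   2D list of pixel intensities
    predict: callable mapping an image to a scalar score (assumed interface)
    """
    height, width = len(image), len(image[0])
    reference = predict(image)
    scores = {}
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Copy the image and overwrite one patch with the baseline value.
            perturbed = [row[:] for row in image]
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    perturbed[r][c] = baseline
            # A large positive drop means the patch mattered to the prediction.
            scores[(top, left)] = reference - predict(perturbed)
    return scores


def toy_predict(image):
    """Hypothetical stand-in classifier: mean intensity of the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0
```

With `toy_predict`, only the top-left patch receives a non-zero score, because it is the only region the stand-in model looks at.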

Grad-CAM

Grad-CAM visually highlights the regions within the brain images that the model concentrated on while making predictions. This method provides clarity on which data aspects are most pivotal for detecting tumours, thereby enhancing trust in AI decisions.
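The core Grad-CAM computation is short. Each feature map of the last convolutional layer is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and negative values are clipped to zero. The sketch below assumes the activations and gradients have already been extracted from the network, which in practice requires a deep learning framework with automatic differentiation. The function name and the final scaling to [0, 1] are illustrative choices.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from precomputed tensors.

    activations: feature maps of the last conv layer, indexed [channel][row][col]
    gradients:   gradient of the class score w.r.t. those maps, same shape
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of that channel's gradient.
    weights = [
        sum(sum(row) for row in grad) / (height * width) for grad in gradients
    ]
    # Weighted sum over channels, followed by ReLU.
    heatmap = [
        [
            max(0.0, sum(w * act[r][c] for w, act in zip(weights, activations)))
            for c in range(width)
        ]
        for r in range(height)
    ]
    # Scale to [0, 1] for display; skip if the map is entirely zero.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[value / peak for value in row] for row in heatmap]
    return heatmap
```

The resulting low-resolution map is usually upsampled to the input size and overlaid on the scan so that clinicians can see which regions drove the prediction.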

Cloud Deployment and Real-Time Validation

Upon successful training and validation, the model is deployed in cloud environments, facilitating real-time data analysis. This cloud-based architecture supports scalability and ensures the model can evolve as new data becomes available.

Efficient Decision-Making

The final phase relies on the model to make clear and actionable decisions based on its predictions. If a tumour is detected, information is promptly communicated to clinicians for further action, while data associated with negative findings is systematically managed to prevent unnecessary processing.
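The routing described above might look like the following sketch. The 0.5 threshold, the `Decision` type, and the action names are hypothetical placeholders. A clinical deployment would choose its threshold from validation data and its own risk tolerance.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str   # "Tumour" or "No Tumour", matching the two classes in the text
    action: str  # hypothetical downstream action name


def route_prediction(tumour_probability, threshold=0.5):
    """Map a predicted tumour probability to a label and a follow-up action."""
    if not 0.0 <= tumour_probability <= 1.0:
        raise ValueError("tumour_probability must lie in [0, 1]")
    if tumour_probability >= threshold:
        # Positive finding: flag the case for prompt clinical review.
        return Decision("Tumour", "notify_clinician")
    # Negative finding: store the result without further processing.
    return Decision("No Tumour", "archive")
```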

The blending of deep learning, XAI, and cloud computing fundamentally reshapes the future of brain tumour diagnostics. By enhancing accuracy, transparency, and efficiency, this model represents a significant stride forward in the quest for reliable medical imaging solutions.