Data Preprocessing Methods in Machine Learning for Non-Targeted Analysis

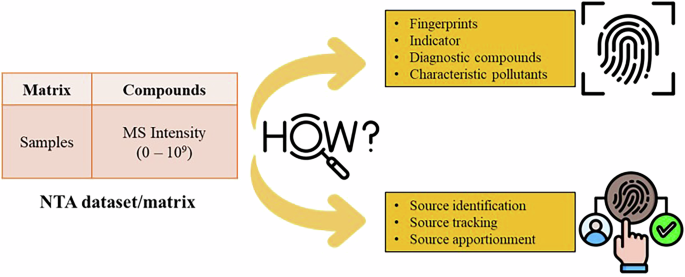

Data preprocessing is a crucial stage in machine learning (ML), particularly in non-targeted analysis (NTA), which aims to extract useful information from complex datasets such as those generated by mass spectrometry (MS). A high-quality preprocessing workflow makes subsequent analyses more reliable and robust. This article explores common data preprocessing methods, highlighting why they matter and how they are applied in practice to ensure data quality and consistency.

Data Alignment Across Different Batches

In MS data, variations can arise from differences in analytical platforms or acquisition dates. Data alignment is therefore vital to make chemical features comparable across all samples. It involves three key steps: retention time correction, mass-to-charge ratio (m/z) recalibration, and peak matching.

1. Retention Time Correction

Retention time correction compensates for retention time shifts caused by variations in chromatographic conditions. XCMS, for example, uses the obiwarp algorithm for nonlinear retention time alignment, which can handle complex, nonuniform shifts. MS-DIAL and MZmine, in contrast, employ landmark-based strategies that align samples using predefined anchor peaks.
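A minimal sketch of the landmark idea, assuming anchor peaks have already been matched between a sample and a reference run: the retention time shift observed at each anchor is linearly interpolated to every other retention time. This is not obiwarp, nor the exact method of MS-DIAL or MZmine; the function name `correct_rt` and the anchor values are hypothetical.

```python
import numpy as np


def correct_rt(rt, sample_anchors, reference_anchors):
    """Map retention times onto a reference run's time axis.

    The shift (reference - sample) measured at each matched anchor peak is
    linearly interpolated to every retention time. Outside the anchor range,
    np.interp holds the nearest anchor's shift constant.
    """
    rt = np.asarray(rt, dtype=float)
    s = np.asarray(sample_anchors, dtype=float)
    r = np.asarray(reference_anchors, dtype=float)
    order = np.argsort(s)  # np.interp needs increasing x values
    s, r = s[order], r[order]
    shift = r - s
    return rt + np.interp(rt, s, shift)


# Hypothetical anchors: the sample elutes slightly early relative to the reference
print(correct_rt([3.5], [2.0, 5.0, 10.0], [2.1, 5.3, 10.2]))  # approximately [3.7]
```

Interpolating the shift, rather than fitting one global offset, is what lets the correction vary along the chromatogram; a nonlinear warping method such as obiwarp goes further by not requiring predefined anchors.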

2. m/z Recalibration

This step standardizes mass accuracy across batches, keeping high-resolution mass spectrometry data consistent. Instrument software such as Thermo Xcalibur supports automated real-time calibration, while FT-ICR MS workflows recalibrate against ions with known m/z values.
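The sketch below illustrates recalibration against reference ions of known m/z, assuming a simple linear relationship between measured and theoretical m/z. The function names and the simulated +5 ppm offset are hypothetical; vendor software and FT-ICR workflows may use other calibration models.

```python
import numpy as np


def ppm_error(measured, theoretical):
    """Mass error in parts per million."""
    measured = np.asarray(measured, dtype=float)
    theoretical = np.asarray(theoretical, dtype=float)
    return (measured - theoretical) / theoretical * 1e6


def recalibrate_mz(mz, measured_ref, theoretical_ref):
    """Fit theoretical ~ a * measured + b on reference ions (least squares),
    then apply the same linear correction to every observed m/z value."""
    a, b = np.polyfit(measured_ref, theoretical_ref, deg=1)
    return a * np.asarray(mz, dtype=float) + b


theoretical = np.array([100.0, 300.0, 500.0])
measured = theoretical * (1 + 5e-6)  # simulated +5 ppm systematic error
print(ppm_error(measured, theoretical))                  # about 5 ppm for each ion
print(recalibrate_mz([200.001], measured, theoretical))  # close to 200.0
```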

3. Peak Matching

After correction, peak matching algorithms group signals from the same chemical feature across multiple batches. Modern algorithms use multi-parameter scoring that considers factors such as m/z and retention time proximity, peak shape similarity, and isotopic patterns to improve alignment accuracy. Despite these advances, low-intensity signals and co-eluting compounds remain difficult to match, so rigorous quality control is still required.
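A simplified sketch, assuming each feature is summarized by an (m/z, retention time) pair: candidate pairs within hypothetical tolerances of 5 ppm and 0.2 min are scored by their tolerance-scaled distance and matched one-to-one, best score first. The peak-shape and isotopic-pattern terms of real multi-parameter scoring are omitted, and `match_peaks` is an illustrative name.

```python
import numpy as np


def match_peaks(feat_a, feat_b, ppm_tol=5.0, rt_tol=0.2):
    """Greedily pair (m/z, rt) features from batch A with batch B.

    A pair is a candidate when its m/z difference is within ppm_tol and its
    RT difference is within rt_tol. Candidates are ranked by a combined score
    (each difference scaled by its tolerance) and accepted one-to-one.
    Returns a sorted list of (index_a, index_b) pairs.
    """
    a = np.asarray(feat_a, dtype=float)
    b = np.asarray(feat_b, dtype=float)
    candidates = []
    for i, (mz_a, rt_a) in enumerate(a):
        for j, (mz_b, rt_b) in enumerate(b):
            d_ppm = abs(mz_b - mz_a) / mz_a * 1e6
            d_rt = abs(rt_b - rt_a)
            if d_ppm <= ppm_tol and d_rt <= rt_tol:
                # Lower score = better match
                score = (d_ppm / ppm_tol) ** 2 + (d_rt / rt_tol) ** 2
                candidates.append((score, i, j))
    used_a, used_b, pairs = set(), set(), []
    for _, i, j in sorted(candidates):
        if i not in used_a and j not in used_b:
            pairs.append((i, j))
            used_a.add(i)
            used_b.add(j)
    return sorted(pairs)
```

The greedy assignment is a simplification; an optimal assignment (for example, the Hungarian algorithm) can give different pairings when many candidates compete for the same feature.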

Data Filtering

Data filtering improves dataset robustness by removing noise. A common strategy uses occurrence frequency to decide which compounds to retain. For instance, Ekpe et al. kept only compounds detected in at least 40% of samples.
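A minimal frequency filter, assuming a samples-by-features intensity matrix in which a zero means the compound was not detected. The 40% default mirrors the threshold reported for Ekpe et al.; the function name is hypothetical.

```python
import numpy as np


def frequency_filter(X, min_fraction=0.4):
    """Keep features (columns) detected (non-zero) in at least min_fraction
    of samples (rows). Returns the filtered matrix and the boolean keep mask."""
    X = np.asarray(X, dtype=float)
    detected_fraction = (X > 0).mean(axis=0)
    keep = detected_fraction >= min_fraction
    return X[:, keep], keep
```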

Frequency and Fold Change Thresholds

Applying fold change (FC) thresholds then identifies compounds that differ substantially between sample groups. Common thresholds are FC > 2 for upregulated compounds and FC < 0.5 for downregulated ones. Because many compounds are tested at once, the p-values from the accompanying statistical tests should be corrected for multiple testing. The Benjamini-Hochberg procedure, for example, controls the false discovery rate (FDR), the expected proportion of false positives among the selected compounds.
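The sketch below computes fold changes as ratios of group means, applies the Benjamini-Hochberg adjustment to p-values that are assumed to come from a per-compound statistical test (such as a t-test, not shown), and flags compounds passing both criteria. The function names and the FDR cutoff of 0.05 are illustrative assumptions.

```python
import numpy as np


def fold_change(group_a, group_b):
    """Per-feature fold change: mean intensity in group B / mean in group A.
    Inputs are samples x features arrays of raw (untransformed) intensities."""
    return np.mean(group_b, axis=0) / np.mean(group_a, axis=0)


def benjamini_hochberg(pvalues):
    """Benjamini-Hochberg adjusted p-values (often called q-values)."""
    p = np.asarray(pvalues, dtype=float)
    m = p.size
    order = np.argsort(p)
    # p_(i) * m / i for the i-th smallest p-value
    ranked = p[order] * m / np.arange(1, m + 1)
    # Running minimum from the largest p-value down keeps adjusted values monotone
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(ranked, 1.0)
    return adjusted


def select_features(fc, qvalues, fc_up=2.0, fc_down=0.5, alpha=0.05):
    """Boolean masks of up- and downregulated features passing the FDR cutoff."""
    fc = np.asarray(fc, dtype=float)
    significant = np.asarray(qvalues, dtype=float) < alpha
    return (fc > fc_up) & significant, (fc < fc_down) & significant
```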

Data Transformation, Zero Value Imputation, and Normalization

Due to the wide range of MS intensity values, appropriate data transformation, zero value imputation, and normalization are crucial for stabilizing variance and improving data distributions.

Data Transformation

Transformations such as the logarithm or square root reduce skewness and make intensities more comparable. Because the logarithm of zero is undefined, zero values must be handled before a log transformation. They fall into two categories: true zeros, which indicate that a compound is absent, and non-true zeros, which are censored values below the detection limit. Non-true zeros can be imputed using median substitution, Tobit regression, or quantile-based strategies.
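A sketch of median substitution followed by a log transform, assuming the analyst has already flagged which zeros are censored (the `censored` mask is a hypothetical input; deciding this is the hard part in practice). Setting `q` below 0.5 turns it into a simple quantile-based substitution; Tobit regression is not shown. The pseudocount of 1 in the log transform is an assumption that keeps true zeros finite.

```python
import numpy as np


def impute_censored(X, censored, q=0.5):
    """Replace censored (non-true) zeros with a per-feature quantile of the
    detected (non-zero) values. q=0.5 is median substitution. Entries not
    flagged as censored, including true zeros, are left unchanged."""
    X = np.asarray(X, dtype=float).copy()
    censored = np.asarray(censored, dtype=bool)
    for j in range(X.shape[1]):
        detected = X[:, j][X[:, j] > 0]
        if detected.size:
            X[censored[:, j], j] = np.quantile(detected, q)
    return X


def log_transform(X, pseudocount=1.0):
    """log2(x + pseudocount); the pseudocount keeps true zeros finite."""
    return np.log2(np.asarray(X, dtype=float) + pseudocount)
```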

Normalization Techniques

Normalization corrects technical variability so that sample comparisons reflect true differences. Techniques include Total Ion Current (TIC) normalization, which scales each sample by its total signal, and quantile normalization, which gives all samples the same intensity distribution. Scaling requirements also vary between ML models: decision trees are largely insensitive to unscaled features, whereas Support Vector Classifiers (SVC) depend on distances between samples and generally need normalized or standardized input.
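Minimal versions of the two normalization techniques, plus feature-wise standardization of the kind SVCs typically need, assuming samples are rows and features are columns. Rescaling every sample to the median TIC is one convention among several, and the quantile normalization breaks ties by position, a simplification; all function names are illustrative.

```python
import numpy as np


def tic_normalize(X):
    """Scale each sample (row) so its total ion current equals the median TIC."""
    X = np.asarray(X, dtype=float)
    tic = X.sum(axis=1, keepdims=True)
    return X / tic * np.median(tic)


def quantile_normalize(X):
    """Give every sample (row) the same intensity distribution: the value at
    each rank becomes the mean, across samples, of the values at that rank."""
    X = np.asarray(X, dtype=float)
    ranks = np.argsort(np.argsort(X, axis=1), axis=1)
    mean_sorted = np.sort(X, axis=1).mean(axis=0)
    return mean_sorted[ranks]


def standardize(X):
    """Feature-wise z-scores (zero mean, unit variance per column).
    Constant columns would divide by zero and should be removed first."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / X.std(axis=0)
```

TIC and quantile normalization act on samples (rows) to remove technical variability, whereas standardization acts on features (columns) to put them on a common scale for the model; the two are complementary rather than interchangeable.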

Dimensionality Reduction Techniques

NTA datasets often present a high number of variables relative to samples, complicating visualization and interpretation. Dimensionality reduction is crucial for transforming data into a lower-dimensional space while preserving essential information.

Feature Extraction (FE)

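FE derives a smaller set of new, informative variables from the raw features to support downstream analysis. Common methods include Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE). PCA is a linear method that finds orthogonal directions of maximum variance, whereas t-SNE is a nonlinear method that preserves local neighborhoods and is used mainly for visualization. The choice therefore depends on dataset characteristics and analytical goals.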
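PCA can be sketched in a few lines with NumPy's singular value decomposition, assuming a samples-by-features matrix. The function below is illustrative rather than a particular library's implementation (scikit-learn provides `sklearn.decomposition.PCA`); t-SNE is considerably more involved and is usually taken from a library such as `sklearn.manifold.TSNE`.

```python
import numpy as np


def pca(X, n_components=2):
    """PCA via SVD of the mean-centered data.

    Returns sample scores, loadings (components x features), and the
    fraction of total variance explained by each retained component.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = U[:, :n_components] * S[:n_components]
    explained = S**2 / np.sum(S**2)
    return scores, Vt[:n_components], explained[:n_components]
```

Because PCA is driven by variance, high-intensity features dominate unless the data are transformed or scaled first, which is one reason the preprocessing steps above matter.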

Feature Selection (FS)

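FS identifies a subset of the original variables that models the problem effectively, which can improve model performance while keeping features interpretable. Filter methods rank features with a statistic computed independently of any model, whereas wrapper methods evaluate candidate subsets by training a model on them. Wrappers can find better subsets but are more computationally expensive and more prone to overfitting, so these trade-offs need careful consideration.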
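As an example of a filter method, the sketch ranks features by the absolute Welch t-statistic between two classes and keeps the top k. The choice of statistic and the function name are assumptions; a wrapper method would instead retrain a model on candidate subsets, which is not shown.

```python
import numpy as np


def t_statistic_filter(X, y, k):
    """Rank features by |Welch t| between classes 0 and 1 and return the
    indices of the top k. Features with zero variance in both classes
    would divide by zero and should be removed beforehand."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    a, b = X[y == 0], X[y == 1]
    se = np.sqrt(a.var(axis=0, ddof=1) / len(a) + b.var(axis=0, ddof=1) / len(b))
    t = (a.mean(axis=0) - b.mean(axis=0)) / se
    return np.argsort(-np.abs(t))[:k]
```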

Clustering Algorithms

Clustering is an unsupervised learning method to group similar data points, revealing patterns within complex datasets. Common clustering algorithms include K-means, Hierarchical Cluster Analysis (HCA), and Self-Organizing Maps (SOM).

1. Hierarchical Cluster Analysis (HCA)

HCA builds a hierarchy of nested clusters, typically by repeatedly merging the two most similar clusters, and represents it as a dendrogram. The dendrogram shows how closely related particular compounds or sample types are.
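A naive agglomerative clustering with average linkage, written out to show how the hierarchy behind a dendrogram is built: the merge history records which clusters joined and at what distance. It scales poorly with the number of samples; in practice `scipy.cluster.hierarchy.linkage` and `scipy.cluster.hierarchy.dendrogram` are the usual tools. The function name and the choice of average linkage with Euclidean distance are assumptions.

```python
import numpy as np


def average_linkage(X, n_clusters=1):
    """Naive agglomerative clustering with average linkage (Euclidean).

    Starting from one cluster per sample, the two clusters with the smallest
    mean pairwise distance are merged until n_clusters remain. Returns the
    merge history [(members_a, members_b, distance), ...] and a label per sample.
    """
    X = np.asarray(X, dtype=float)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    clusters = [[i] for i in range(len(X))]
    merges = []
    while len(clusters) > n_clusters:
        best = None
        for p in range(len(clusters)):
            for q in range(p + 1, len(clusters)):
                # Average linkage: mean distance over all cross-cluster pairs
                d = D[np.ix_(clusters[p], clusters[q])].mean()
                if best is None or d < best[0]:
                    best = (d, p, q)
        d, p, q = best
        merges.append((clusters[p], clusters[q], float(d)))
        clusters[p] = clusters[p] + clusters[q]  # new list; history stays intact
        del clusters[q]  # q > p, so index p is unaffected
    labels = np.empty(len(X), dtype=int)
    for label, members in enumerate(clusters):
        labels[members] = label
    return merges, labels
```

With the default `n_clusters=1` the loop runs to a single cluster, and the merge history then contains everything needed to draw the full dendrogram.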

2. K-Means Clustering

K-means partitions the dataset into a predefined number of clusters (k) by minimizing within-cluster variance. The algorithm alternates between assigning each point to its nearest centroid and recomputing each centroid as the mean of its assigned points, until the assignments stop changing.
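A minimal NumPy version of the standard K-means (Lloyd's) iteration described above, with centroids initialized from randomly chosen samples. The initialization and the convergence check are simple assumptions; library implementations typically add smarter seeding such as k-means++ and multiple restarts, since a single run can settle in a local minimum.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and
    centroid update (mean of assigned points) until centroids stop moving."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance from every point to every centroid, shape (n_points, k)
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=-1)
        labels = dists.argmin(axis=1)
        # An empty cluster keeps its previous centroid
        new_centroids = np.array([
            X[labels == c].mean(axis=0) if np.any(labels == c) else centroids[c]
            for c in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids
```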

3. Self-Organizing Maps (SOM)

SOMs project high-dimensional data onto a two-dimensional grid of nodes while preserving topological relationships, so similar samples map to nearby nodes. This makes it easier to visualize clusters, identify similarities, and discern patterns.
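A minimal online SOM sketch on a rectangular grid with a Gaussian neighborhood. The grid size, the exponential learning-rate and radius schedules, and the function names are assumptions chosen for brevity, not a reference implementation.

```python
import numpy as np


def train_som(X, rows=4, cols=4, n_iter=2000, lr0=0.5, sigma0=None, seed=0):
    """Minimal online self-organizing map on a rows x cols rectangular grid.

    Each node holds a weight vector in feature space. At every step a random
    sample is drawn, its best-matching unit (BMU) is found, and the BMU and its
    grid neighbors are pulled toward the sample. The learning rate and the
    Gaussian neighborhood radius both decay exponentially (assumed schedule).
    """
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2
    # (row, col) position of every node on the grid
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    # Initialize node weights with randomly chosen samples
    weights = X[rng.integers(0, len(X), size=rows * cols)]
    for t in range(n_iter):
        x = X[rng.integers(len(X))]
        decay = np.exp(-3.0 * t / n_iter)
        lr, sigma = lr0 * decay, sigma0 * decay
        # BMU: node whose weight vector is closest to the sample
        bmu = np.argmin(np.linalg.norm(weights - x, axis=1))
        # Neighborhood is measured on the grid, not in feature space
        grid_dist2 = np.sum((grid - grid[bmu]) ** 2, axis=1)
        h = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
        weights += lr * h[:, None] * (x - weights)
    return weights.reshape(rows, cols, -1)


def map_to_grid(X, weights):
    """Return the (row, col) grid position of each sample's BMU."""
    rows, cols, dim = weights.shape
    flat = weights.reshape(-1, dim)
    X = np.asarray(X, dtype=float)
    d = np.linalg.norm(X[:, None, :] - flat[None, :, :], axis=-1)
    return [divmod(int(i), cols) for i in d.argmin(axis=1)]
```

Early on, the wide neighborhood orders the whole map; as it shrinks, individual nodes specialize, which is what produces the topology-preserving layout.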

Classification Models in ML-Assisted NTA

The size and complexity of environmental datasets make ML-based classification models pivotal for discovering diagnostic information. These models provide a scalable way to identify and classify contaminants.

Key Classification Models

Models such as Logistic Regression, Support Vector Classifiers, and Random Forests are frequently applied in NTA. Simpler models can perform well on smaller datasets, whereas more complex models, such as neural networks, need larger datasets to generalize effectively.
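To make the first of these models concrete, here is a minimal binary logistic regression fitted by batch gradient descent, assuming a numeric feature matrix and 0/1 labels. The learning rate, iteration count, optional L2 penalty, and function names are illustrative defaults rather than tuned values; in practice a library implementation would normally be used.

```python
import numpy as np


def fit_logistic(X, y, lr=0.1, n_iter=2000, l2=0.0):
    """Binary logistic regression by gradient descent on the mean log-loss,
    with an optional L2 penalty on the weights (not on the intercept)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted probabilities
        grad_w = X.T @ (p - y) / len(y) + l2 * w
        grad_b = np.mean(p - y)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict_logistic(X, w, b, threshold=0.5):
    """Class labels (0/1) from the fitted weights and intercept."""
    p = 1.0 / (1.0 + np.exp(-(np.asarray(X, dtype=float) @ w + b)))
    return (p >= threshold).astype(int)
```

The fitted weights are directly interpretable as changes in log-odds per unit of each feature, which is the inherent interpretability of linear models.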

Considerations for Successful Implementation

1. Size and Quality of Dataset

Dataset size and quality strongly affect model performance; high-quality data are essential for stable predictions.

2. Evaluation Metrics

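Precision, recall, F1-score, and Matthews Correlation Coefficient (MCC) are key metrics for evaluating model performance. The right choice depends on the analysis: with imbalanced classes, for example, accuracy can look high even when the minority class is rarely detected, whereas MCC uses all four cells of the confusion matrix and remains informative.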
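All four metrics follow directly from the confusion-matrix counts. A minimal pure-Python sketch for binary labels, assuming 1 marks the positive class (for example, a contaminant of interest); returning 0.0 when a denominator is zero is a convention chosen here, and the function name is illustrative.

```python
import math


def classification_metrics(y_true, y_pred):
    """Precision, recall, F1 and MCC for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}


# Precision, recall and F1 are all 2/3 here; MCC is 7/15 (about 0.467)
print(classification_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0]))
```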

3. Model Tuning and Optimization

Hyperparameter tuning is crucial for optimizing model performance. Choices such as the maximum depth of a decision tree or the number of hidden layers in a neural network strongly influence how well a model generalizes.

4. Model Interpretability

Understanding model predictions is vital for informing regulatory decisions. Linear models offer inherent interpretability, while methods such as SHAP and LIME enhance the interpretability of complex models.

In sum, the preprocessing methods outlined here, from alignment and filtering to normalization and dimensionality reduction, play a vital role in ensuring robust machine learning outcomes in non-targeted analysis. Implemented carefully, they help researchers identify and interpret environmental contaminants more effectively.