Deep Learning-Aided Heading Estimation: A Fusion of Inertial and Visual Data

In recent years, rapid advances in smartphone technology have opened new avenues for pedestrian navigation, particularly through the integration of inertial sensors and visual data. This study presents a framework that improves heading estimation by fusing inertial sensor data (gyroscope, accelerometer, and magnetometer readings) with visual structural cues extracted from images captured by the smartphone camera. Built on deep learning, the approach aims to improve the accuracy and reliability of navigation in structured environments.

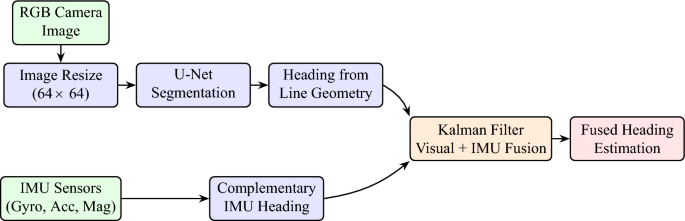

The Framework Overview

The core of the framework is its use of visual constraints from ground reference lines, which are common in urban settings, to correct gyroscope-based heading estimates. A lightweight U-Net-based convolutional neural network (CNN) detects and segments the dominant straight-line features in low-resolution RGB images in real time. The segmentation outputs a binary mask that highlights the ground-aligned features, and these serve as the visual heading cues.
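The source does not say how the binary mask is turned into a heading cue. One simple possibility, sketched below as an assumption, is principal component analysis (PCA) of the foreground pixel coordinates: the eigenvector with the largest eigenvalue gives the line's orientation in the image, and the eigenvalue ratio indicates how line-like the mask is. The function name `mask_line_orientation` and the `min_pixels` threshold are hypothetical; a Hough transform or a RANSAC line fit would be equally reasonable choices.

```python
import numpy as np


def mask_line_orientation(mask, min_pixels=20):
    """Estimate the dominant line orientation in a binary segmentation mask.

    Hypothetical post-processing step using PCA of the foreground pixels.
    Returns (angle_deg, elongation), or None if the mask is nearly empty.
    angle_deg lies in [0, 180), measured from the image x axis toward +y (down).
    elongation = sqrt(minor / major eigenvalue); values near 0 are line-like.
    """
    rows, cols = np.nonzero(mask)
    if rows.size < min_pixels:
        return None  # too few foreground pixels to trust a fit
    pts = np.column_stack([cols, rows]).astype(float)  # (x, y) image coordinates
    pts -= pts.mean(axis=0)                            # center the point cloud
    cov = pts.T @ pts / len(pts)                       # 2x2 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)             # eigenvalues ascending
    dx, dy = eigvecs[:, -1]                            # direction of largest spread
    # A line has no direction, so fold the angle into [0, 180).
    angle = float(np.degrees(np.arctan2(dy, dx)) % 180.0)
    elongation = float(np.sqrt(max(eigvals[0], 0.0) / eigvals[-1]))
    return angle, elongation
```

This angle is still in image coordinates; perspective distorts angles, so it must be projected into the ground frame before it can be compared with a map azimuth.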

Training the U-Net required a curated dataset of labeled images of ground reference lines, and the design balanced recognition accuracy against latency. Given the constraints of mobile devices, all images were downscaled to 64×64 pixels before training, which keeps processing efficient without significant loss of the line structure the network relies on.
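A minimal sketch of the downscaling step, assuming simple area averaging, in which each output pixel is the mean of the input pixels that fall in its cell. The study does not state which interpolation it used, and `downscale_area` is a hypothetical helper.

```python
import numpy as np


def downscale_area(img, out_h=64, out_w=64):
    """Area-average an H x W (x C) image down to out_h x out_w.

    Each output pixel is the mean of the input pixels in its cell.
    Assumes the input is at least out_h x out_w.
    """
    h, w = img.shape[:2]
    if h < out_h or w < out_w:
        raise ValueError("input is smaller than the target size")
    # Integer cell boundaries along each axis.
    ys = np.linspace(0, h, out_h + 1).astype(int)
    xs = np.linspace(0, w, out_w + 1).astype(int)
    out = np.empty((out_h, out_w) + img.shape[2:], dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            cell = img[ys[i]:ys[i + 1], xs[j]:xs[j + 1]]
            out[i, j] = cell.mean(axis=(0, 1))  # per-channel mean of the cell
    return out
```

In practice, OpenCV's `cv2.resize(img, (64, 64), interpolation=cv2.INTER_AREA)` performs the same kind of area-based downsampling much faster than this explicit loop.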

Combining Inertial Measurements

Smartphones carry Micro-Electro-Mechanical Systems (MEMS) sensors, which report measurements in the device’s own frame, the body coordinate system. Navigation requires these measurements in the navigation coordinate system, so they are transformed with a rotation matrix. The smartphone’s heading can then be computed from accelerometer and magnetometer measurements: roll and pitch follow from the accelerometer axes, which sense gravity when the device is static, and the heading relative to magnetic north follows from the magnetometer readings once they are rotated into the horizontal plane using that roll and pitch.
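The sketch below shows one standard form of tilt compensation under stated assumptions: Android-style device axes (x right, y toward the top edge, z out of the screen), an accelerometer that reads +g along "up" when the phone is static, heading defined as the azimuth of the device's y axis clockwise from magnetic north, and a rotation order of roll about y followed by pitch about x. Magnetic declination and magnetometer calibration are ignored, and the function names are hypothetical.

```python
import math


def roll_pitch_from_accel(ax, ay, az):
    """Roll and pitch (radians) from a static accelerometer reading.

    Assumed axes: x right, y toward the top edge, z out of the screen;
    at rest the sensor reads +g along the 'up' direction.
    """
    pitch = math.atan2(ay, math.hypot(ax, az))  # rotation about the x axis
    roll = math.atan2(-ax, az)                  # rotation about the y axis
    return roll, pitch


def tilt_compensated_heading(accel, mag):
    """Magnetic heading (deg, clockwise from magnetic north) of the y axis."""
    roll, pitch = roll_pitch_from_accel(*accel)
    mx, my, mz = mag
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    # Rotate the magnetic vector into the level (horizontal) frame:
    # apply the roll rotation about y, then the pitch rotation about x.
    hx = mx * cr + mz * sr
    hy = my * cp + (mx * sr - mz * cr) * sp
    # In the level frame, north lies along +hy and east along -hx.
    return math.degrees(math.atan2(-hx, hy)) % 360.0
```

Because roll and pitch come from the accelerometer, this estimate only holds while the device is not accelerating strongly, which is one reason gyroscope data is fused in as well.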

These sensors are, however, subject to errors and environmental interference (for example, magnetic disturbances from steel structures or nearby electronics), which can significantly bias heading estimates. Complementary filtering is therefore commonly used: it combines accelerometer, magnetometer, and gyroscope measurements so that the gyroscope’s short-term accuracy offsets magnetometer noise, while the magnetometer bounds the gyroscope’s cumulative drift.
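A first-order complementary filter for heading can be sketched in a few lines. This illustrates the general technique rather than the study's filter: `heading_rate` is assumed to be the gyroscope rate already projected onto the vertical axis and expressed as the rate of change of heading, and `alpha` is a hypothetical tuning constant. The correction uses the wrapped difference so that headings near 0°/360° are blended along the short arc.

```python
def wrap180(angle_deg):
    """Wrap an angle difference into [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def complementary_heading(prev_heading, heading_rate, mag_heading, dt, alpha=0.98):
    """One step of a first-order complementary filter for heading (degrees).

    heading_rate: d(heading)/dt in deg/s from the gyroscope (assumed already
    projected onto the vertical axis). alpha close to 1 trusts the gyroscope
    in the short term; (1 - alpha) sets how quickly the magnetometer heading
    removes accumulated drift.
    """
    predicted = prev_heading + heading_rate * dt       # gyroscope propagation
    correction = wrap180(mag_heading - predicted)      # shortest-arc error
    return (predicted + (1.0 - alpha) * correction) % 360.0
```

For example, with `dt = 0.02` s, `alpha = 0.98`, a constant 0.5 deg/s gyroscope bias, and a steady 45° magnetometer heading, pure integration would drift 10° in 20 s, whereas the filtered heading settles about 0.49° from 45°, because the steady-state offset is `bias * dt * alpha / (1 - alpha)`.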

Visual Heading Computation

A key contribution of this study is computing pedestrian heading in real time from smartphone camera images. Transforming the smartphone’s coordinate system into a local ground coordinate system allows the reference lines captured by the camera to be projected onto the ground plane. The azimuth of these reference lines can then be calculated, yielding the angle deviation between the reference line and the device’s heading that is used to improve navigation accuracy.
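The projection and deviation steps can be illustrated with a pinhole-camera sketch. It assumes known intrinsics (focal length `f` and principal point `cx`, `cy`, in pixels), a camera pitch obtained elsewhere (for example from the accelerometer), zero roll, and a reference line whose azimuth is known from a map; all function and parameter names are hypothetical. Two pixels on the detected line are back-projected onto the ground plane, the line's angle relative to the device's forward axis is measured, and subtracting that angle from the line azimuth gives the device heading. Because a line has no direction, the result is ambiguous by 180°; here the ambiguity is resolved by choosing the candidate closest to a prior such as the gyroscope heading.

```python
import math


def wrap180(angle_deg):
    """Wrap an angle into [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def pixel_to_ground(u, v, f, cx, cy, pitch_rad, height=1.0):
    """Back-project pixel (u, v) onto the ground plane.

    Assumptions: pinhole camera, camera frame x right / y down / z forward;
    ground frame X right / Y forward / Z up, centred below the camera;
    camera `height` above the ground, pitched down by pitch_rad, zero roll.
    """
    xc, yc = (u - cx) / f, (v - cy) / f
    # Ray in a level frame: camera x -> X, camera z -> Y, camera y (down) -> -Z.
    X, Y, Z = xc, 1.0, -yc
    # Tilt the ray downward by the camera pitch (rotation about X).
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    Y, Z = Y * c + Z * s, -Y * s + Z * c
    if Z >= 0.0:
        raise ValueError("pixel is on or above the horizon")
    t = height / -Z  # ray parameter where it reaches the ground plane
    return X * t, Y * t


def visual_heading(p1, p2, line_azimuth_deg, prior_heading_deg, f, cx, cy, pitch_rad):
    """Device heading (deg) from two pixels on a ground line of known azimuth."""
    x1, y1 = pixel_to_ground(p1[0], p1[1], f, cx, cy, pitch_rad)
    x2, y2 = pixel_to_ground(p2[0], p2[1], f, cx, cy, pitch_rad)
    # Line angle relative to the forward axis, clockwise positive like an
    # azimuth: 0 means straight ahead, 90 means pointing to the right.
    theta = math.degrees(math.atan2(x2 - x1, y2 - y1))
    # azimuth(line) = heading + theta, hence heading = azimuth(line) - theta.
    base = (line_azimuth_deg - theta) % 360.0
    candidates = (base, (base + 180.0) % 360.0)
    # Resolve the 180-degree ambiguity with the prior heading.
    return min(candidates, key=lambda h: abs(wrap180(h - prior_heading_deg)))
```

The camera height cancels out of the line direction, so the default `height=1.0` is sufficient when only the heading is needed.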

Because the smartphone captures imagery in real time, this angle deviation can be computed continuously. To do so, the study trained a deep learning model to recognize ground reference lines in smartphone images, using a dataset of hundreds of labeled reference-line images.

Real-time Ground Reference Line Recognition

To recognize ground reference lines in real time, the study combined the smartphone camera with computer vision and deep learning methods. Textured tape was laid on the ground at the experimental site to serve as reference lines, and these were identified with a precision exceeding 92%.
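The source reports precision above 92% without stating whether it is measured at pixel, line, or frame level. For pixel-wise segmentation, precision is usually TP / (TP + FP) over mask pixels; the sketch below computes it together with recall and intersection over union (IoU) for a predicted and a ground-truth mask. The function name is hypothetical.

```python
import numpy as np


def mask_metrics(pred, truth):
    """Pixel-wise precision, recall, and IoU for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # line pixels correctly detected
    fp = int(np.sum(pred & ~truth))   # background predicted as line
    fn = int(np.sum(~pred & truth))   # line pixels that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    union = tp + fp + fn
    iou = tp / union if union else 0.0
    return {"precision": precision, "recall": recall, "iou": iou}
```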

Resizing the training images to 64×64 pixels balanced speed against accuracy within the limited processing capabilities of smartphone hardware. This enabled real-time processing and kept the model light enough for mobile deployment.

Visual-Inertial Heading Fusion

To integrate the visual and inertial measurements, the framework uses a Kalman filter that refines the initial heading estimate. The filter continuously corrects the heading using high-frequency gyroscope data and lower-frequency but more stable visual measurements.

The Kalman filter’s state vector comprises the estimated heading and the mean angular rate, both of which evolve over time with each sensor update. When the system detects reliable ground reference lines, it incorporates the visual heading to correct errors accumulated by the inertial sensors. This combination lets the framework provide rapid updates while maintaining long-term heading stability in real-world environments.
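The following sketch shows a two-state Kalman filter with the state described above, x = [heading, angular rate], under an assumed constant-rate motion model. The gyroscope is treated as a high-rate measurement of the angular rate and the visual heading as a low-rate measurement of the heading, with heading residuals wrapped to [-180°, 180°). The class name and all noise values are hypothetical placeholders, not parameters from the study.

```python
import numpy as np


def wrap180(angle_deg):
    """Wrap an angle into [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


class HeadingKalmanFilter:
    """Two-state filter with x = [heading (deg), angular rate (deg/s)].

    Noise levels are illustrative placeholders, not tuned values.
    """

    def __init__(self, heading0, rate_noise=0.5, gyro_std=0.2, visual_std=2.0):
        self.x = np.array([heading0 % 360.0, 0.0])
        self.P = np.diag([25.0, 1.0])      # initial uncertainty
        self.q = rate_noise ** 2           # angular-acceleration noise density
        self.r_gyro = gyro_std ** 2
        self.r_visual = visual_std ** 2

    def predict(self, dt):
        """Constant-rate model: heading += rate * dt, rate follows a random walk."""
        F = np.array([[1.0, dt], [0.0, 1.0]])
        Q = self.q * np.array([[dt**3 / 3.0, dt**2 / 2.0], [dt**2 / 2.0, dt]])
        self.x = F @ self.x
        self.x[0] %= 360.0
        self.P = F @ self.P @ F.T + Q

    def _update(self, H, z, r, angular):
        innovation = z - H @ self.x
        if angular:                        # heading residuals wrap at 360
            innovation = wrap180(innovation)
        S = H @ self.P @ H + r             # innovation variance (scalar)
        K = self.P @ H / S                 # Kalman gain (2-vector)
        self.x = self.x + K * innovation
        self.x[0] %= 360.0
        self.P = (np.eye(2) - np.outer(K, H)) @ self.P

    def update_gyro(self, rate_dps):
        """High-rate update: the gyroscope observes the angular rate."""
        self._update(np.array([0.0, 1.0]), rate_dps, self.r_gyro, angular=False)

    def update_visual(self, heading_deg):
        """Low-rate update: a reliable reference line observes the heading."""
        self._update(np.array([1.0, 0.0]), heading_deg, self.r_visual, angular=True)
```

In use, `predict` and `update_gyro` would run at the IMU rate, while `update_visual` is called only when the segmentation yields a reliable reference line, matching the gating described above.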

System State and Noise Considerations

The Kalman filter maintains a recursive estimate of the pedestrian’s heading state, alternating prediction and correction steps as new measurements arrive. Uncertainty is modeled with Gaussian noise terms: process noise captures the variability of pedestrian motion, and measurement noise captures sensor errors.
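Written out, one standard linear-Gaussian form consistent with this description is the constant-rate model below. The symbols and the constant-rate assumption are illustrative; the study's exact equations and noise parameters are not given here.

$$
\mathbf{x}_k = \begin{bmatrix} \psi_k \\ \omega_k \end{bmatrix}, \qquad
\mathbf{x}_k = \mathbf{F}\,\mathbf{x}_{k-1} + \mathbf{w}_k, \qquad
\mathbf{F} = \begin{bmatrix} 1 & \Delta t \\ 0 & 1 \end{bmatrix}, \qquad
\mathbf{w}_k \sim \mathcal{N}(\mathbf{0}, \mathbf{Q})
$$

$$
z^{\text{gyro}}_k = \begin{bmatrix} 0 & 1 \end{bmatrix} \mathbf{x}_k + v^{\text{gyro}}_k, \qquad
z^{\text{vis}}_k = \begin{bmatrix} 1 & 0 \end{bmatrix} \mathbf{x}_k + v^{\text{vis}}_k, \qquad
v^{\text{gyro}}_k \sim \mathcal{N}(0, \sigma_{\text{gyro}}^2), \quad
v^{\text{vis}}_k \sim \mathcal{N}(0, \sigma_{\text{vis}}^2)
$$

$$
\begin{aligned}
\hat{\mathbf{x}}_{k|k-1} &= \mathbf{F}\,\hat{\mathbf{x}}_{k-1|k-1}, \qquad
\mathbf{P}_{k|k-1} = \mathbf{F}\,\mathbf{P}_{k-1|k-1}\mathbf{F}^{\top} + \mathbf{Q} \\
\mathbf{K}_k &= \mathbf{P}_{k|k-1}\mathbf{H}^{\top}\left(\mathbf{H}\,\mathbf{P}_{k|k-1}\mathbf{H}^{\top} + R\right)^{-1} \\
\hat{\mathbf{x}}_{k|k} &= \hat{\mathbf{x}}_{k|k-1} + \mathbf{K}_k\left(z_k - \mathbf{H}\,\hat{\mathbf{x}}_{k|k-1}\right), \qquad
\mathbf{P}_{k|k} = \left(\mathbf{I} - \mathbf{K}_k\mathbf{H}\right)\mathbf{P}_{k|k-1}
\end{aligned}
$$

Here ψ is the heading, ω the angular rate, Δt the sample interval, and H and R the measurement row and noise variance of whichever sensor is updating; for the visual update, the heading residual is wrapped to [-180°, 180°).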

Through this estimation framework, the proposed method improves both the responsiveness and the robustness of pedestrian navigation, combining deep learning-based visual cues with traditional inertial navigation.

A Step Beyond

This study lays groundwork for future pedestrian navigation technologies by demonstrating the advantages of fusing visual and inertial data. The integration goes beyond a theoretical framework to a practical application that can give everyday users better location awareness in urban environments.

By building on smartphones, devices found in nearly every pocket, this approach aims to change how people interact with navigation, making it smarter and more intuitive.