“Imagining ChatGPT in 1987: Impact on AI Development and Market Opportunities”

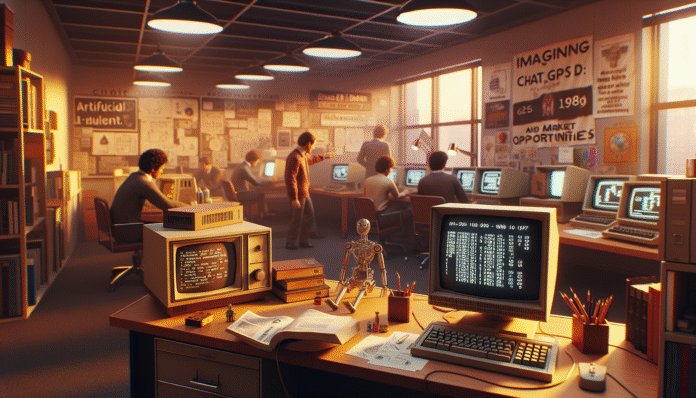

The State of AI in 1987

In 1987, artificial intelligence (AI) was entering the second AI winter, a period of reduced funding and waning optimism after the hype surrounding expert systems. These systems, such as MYCIN for medical diagnosis, operated on rigid, hand-written rules and lacked the flexibility and generative capabilities of today’s AI models like ChatGPT. For instance, while MYCIN could identify bacterial infections using predetermined logic, it could not learn from new data, which limited its application.

A hypothetical ChatGPT emerging during this period would have been transformative. It could have introduced natural language understanding and generation at a time when computers were mainly used for computation and predefined responses, fundamentally reshaping how users interacted with technology.

Core Concept and Its Significance

The core concept behind deploying a model like ChatGPT in 1987 is generative AI: algorithms that create new content from patterns learned in existing data. This contrasts sharply with earlier AI applications, which relied primarily on static data and hand-written rules.
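The contrast between fixed rules and learned patterns can be sketched with a toy example. The code below is only an illustration under loud assumptions: the rule table, the tiny corpus, and the function names (`rule_based_reply`, `train_bigrams`, `generate`) are all hypothetical, and the generative half is a simple bigram Markov chain, which is far cruder than the transformer models behind ChatGPT.

```python
import random
from collections import defaultdict

# Hypothetical rule table, invented for illustration only.
RULES = {
    "reset password": "Use the reset option on the login screen.",
    "opening hours": "We are open 9:00 to 17:00 on weekdays.",
}

# Hypothetical training sentences, invented for illustration only.
CORPUS = [
    "the customer asked about the order",
    "the order shipped on monday",
    "the customer asked about shipping on monday",
]


def rule_based_reply(query):
    """Rule-based style: exact lookup, no answer outside the table."""
    return RULES.get(query.lower().strip(), "No matching rule.")


def train_bigrams(corpus):
    """Record which words follow each word in the training sentences."""
    followers = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            followers[current].append(following)
    return dict(followers)


def generate(model, start, max_words=8, seed=0):
    """Generative style: sample a new word sequence from learned patterns."""
    rng = random.Random(seed)
    words = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(words) < max_words and words[-1] in model:
        words.append(rng.choice(model[words[-1]]))
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(rule_based_reply("Reset password"))     # matches a rule
    print(rule_based_reply("where is my order"))  # falls outside the rules
    print(generate(model, "the"))                 # sampled word sequence
```

The rule-based function can only return what was written into its table, while the bigram model can emit word sequences that never appeared verbatim in its training sentences, which is the essential difference the section describes.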

Envisioning ChatGPT in the 1980s highlights missed opportunities for innovation in user interfaces and business processes. For example, had companies been able to integrate conversational AI into their customer service systems in 1987, they could have considerably improved customer interaction by reducing wait times and raising service quality. That would have been a significant leap from rigid interactions in which users had to follow strict protocols.

Key Components of AI Advancement

The emergence of foundational technologies is crucial to understanding AI’s journey. For ChatGPT, the transformer architecture, introduced in 2017, represents a leap in the ability to process language at scale that would have been alien in the 1980s. Massive training datasets are another cornerstone; in 1987, far less data was available than is accessible today.

Hypothetically, an AI like ChatGPT would have arrived just as personal computers such as the IBM PC AT were becoming widespread. These machines had limited processing power and memory, which severely restricted the potential for running advanced AI algorithms. Demand for such applications could nonetheless have prompted an earlier push for better hardware and infrastructure, laying groundwork for future developments.

Implementation Challenges in Historical Context

Implementing a generative AI model in 1987 would have faced challenges, many of them insurmountable, stemming primarily from hardware limitations. At that time, the backpropagation algorithm, pivotal for training neural networks, was only just gaining traction after being popularized in 1986 by David Rumelhart, Geoffrey Hinton, and Ronald Williams.

In practical terms, trying to run a model as sophisticated as ChatGPT on such hardware would have been prohibitively slow and expensive. With processor speeds of around 6-8 MHz and very limited RAM, even simple text generation would have been nearly impossible.
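A back-of-envelope calculation gives a feel for the size of that gap. The sketch below rests on stated assumptions rather than measurements: a model on the scale of GPT-3’s published 175 billion parameters (ChatGPT’s own size is not assumed to be known), 16-bit weights, the common rule of thumb of about two operations per parameter per generated token, and a very optimistic one operation per clock cycle. The 16 MiB memory ceiling comes from the 24-bit address space of the 80286 processor used in the IBM PC AT; typical machines shipped with far less. All names in the code are hypothetical.

```python
# Assumption: GPT-3-scale parameter count; ChatGPT's actual size is not assumed.
PARAMS = 175e9
# Assumption: each weight is stored in 16 bits.
BYTES_PER_PARAM = 2
# The 80286 has a 24-bit address space, so at most 2**24 bytes (16 MiB) of RAM.
MAX_RAM_BYTES = 2 ** 24
# Upper end of the 6-8 MHz range quoted in the text.
CLOCK_HZ = 8e6
# Assumption: rule of thumb of ~2 operations per parameter per generated token.
OPS_PER_PARAM_PER_TOKEN = 2
# Assumption: one operation per clock cycle, far better than real 1987 hardware.
OPS_PER_CYCLE = 1


def memory_shortfall(params=PARAMS, bytes_per_param=BYTES_PER_PARAM,
                     ram_bytes=MAX_RAM_BYTES):
    """How many times larger the model weights are than the available RAM."""
    return params * bytes_per_param / ram_bytes


def seconds_per_token(params=PARAMS, clock_hz=CLOCK_HZ,
                      ops_per_cycle=OPS_PER_CYCLE):
    """Optimistic lower bound on the time to generate a single token."""
    total_ops = OPS_PER_PARAM_PER_TOKEN * params
    return total_ops / (clock_hz * ops_per_cycle)


if __name__ == "__main__":
    print(f"Weights exceed RAM by a factor of about {memory_shortfall():,.0f}")
    print(f"Time per token: about {seconds_per_token() / 3600:.1f} hours")
```

Under these assumptions the weights alone would exceed the machine’s maximum memory by a factor of roughly 20,000, and producing a single token would take about twelve hours even before accounting for disk access or the lack of fast floating-point hardware.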

Business Opportunities Unlocked by AI

From a business perspective, a ChatGPT-like model would have unlocked large market opportunities, particularly in industries like customer service and retail. The hypothetical ability to automate responses in real time could have dramatically reduced operational costs.

Today, customer service chatbots are credited with large cost savings, but in 1987 the concept was rudimentary. Had companies adopted generative AI earlier, they could have gained advantages in efficiency, competitive positioning, and customer loyalty much sooner, a missed potential that is now evident in sectors that use AI solutions extensively.

Technological Milestones That Enabled AI Evolution

The evolution of AI hinges on several technological milestones, such as the introduction of deep learning paradigms and the exponential growth of computational power. The potential of an early ChatGPT would have highlighted these needs, pushing industries toward adopting improved data processing technologies sooner.

Technologies like GPUs, which became prevalent much later, are integral to training large AI models. Without them in the 1980s, scalable generative AI would have faced significant roadblocks, a reminder of how interdependent technological progress is.

Common Mistakes and Overcoming Them

In hindsight, several mistakes made in the development of AI during its early years were due to overestimating capabilities without understanding hardware constraints. For example, investing in sophisticated AI without the required computational capabilities led to disillusionment and stagnation.

Had there been an early ChatGPT, organizations would have learned the danger of deploying such systems without first ensuring compatible infrastructure. Today, recognizing that scalable technology must develop alongside AI advancements can help avoid repeating these errors.

Alternative AI Models: Pros and Cons

Alternative approaches to AI in the late 20th century included rule-based systems and traditional machine learning algorithms. While these had strengths in specific applications like diagnostics, they were rigid and less adaptable than generative models like ChatGPT.

Such alternatives often failed to handle dynamic queries or maintain conversations fluidly. Hence, industries are encouraged to adopt adaptable frameworks that combine traditional methods with generative capabilities, an approach that an imagined early ChatGPT helps bring into focus.

Evolving Regulatory Landscape Impacting AI

The regulatory environment surrounding AI has changed dramatically since the 1980s. In 1987, minimal oversight allowed AI to develop without much external influence, which contrasts with today’s comprehensive frameworks emphasizing ethical considerations in technology deployment.

Hypothetically, had ChatGPT appeared in 1987, regulatory constraints might have emerged sooner. Awareness around ethics and AI’s societal impact could have shaped the development of guidelines that promote responsible usage, an essential factor as businesses today grapple with data privacy and misuse concerns.

Future Outlooks and Quantum Computing

Looking ahead, quantum computing presents exciting possibilities for AI evolution. Although it existed only as a theoretical proposal in 1987, its potential to speed up certain kinds of computation could eventually boost AI capabilities.

If an early version of ChatGPT had emerged then, we might have seen a push toward foundational quantum research earlier in the tech industry, paving the way for innovations we anticipate in the near future. Thus, the trajectory from rudimentary AI to sophisticated systems mirrors technological advancement and societal readiness to adopt more complex solutions.