Enhanced Breast Cancer Detection Using Thermogram Images: The BCDGAN Framework

Breast cancer remains a critical public health issue, and early detection is essential for effective treatment. Recent advances in artificial intelligence (AI) have enabled new diagnostic tools, among them the proposed BCDGAN (Breast Cancer Detection using Generative Adversarial Networks) framework. Using the DMR-IR81 benchmark dataset of thermogram images, the researchers explore AI-driven methods to improve breast cancer identification.

Challenges in Thermographic Imaging

Thermographic imaging is a valuable tool in medical diagnostics, but it has its challenges. Like images from many other medical modalities, thermograms often suffer from noise, artifacts, and variations in image quality caused by device limitations, patient movement, and environmental conditions. These factors can severely degrade the performance of AI models unless the models are trained to accommodate them. To address this, the BCDGAN framework introduces several techniques along the imaging pipeline that improve its generalization performance, particularly on noisy data.

Data Preprocessing Techniques

To tackle the inherent noise and low contrast in thermographic images, BCDGAN employs a systematic data preprocessing approach. Gaussian and median filtering suppress random noise, so the model can concentrate on significant thermal patterns rather than on pixel-wise noise that obscures important features. Histogram equalization then expands the dynamic range of the thermal images and compensates for variations in illumination and contrast.
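The three preprocessing steps named above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the filter order (median, then Gaussian), the 3×3 window, `sigma=1.0`, and the random stand-in image are all assumptions, since the text does not give these settings.

```python
import numpy as np


def median_filter(img, size=3):
    """Replace each pixel with the median of its size x size neighbourhood."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    # Stack every shifted view of the image, then take the per-pixel median.
    windows = [
        padded[r:r + img.shape[0], c:c + img.shape[1]]
        for r in range(size)
        for c in range(size)
    ]
    return np.median(np.stack(windows), axis=0)


def gaussian_filter(img, sigma=1.0):
    """Separable Gaussian blur with the kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Filter along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def equalize_histogram(img_u8):
    """Global histogram equalization for an 8-bit grayscale image."""
    hist = np.bincount(img_u8.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    denom = max(cdf[-1] - cdf_min, 1)
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255)
    return lut.astype(np.uint8)[img_u8]


def preprocess(img_u8):
    """Assumed order: median filter, Gaussian filter, then equalization."""
    smoothed = gaussian_filter(median_filter(img_u8.astype(float)), sigma=1.0)
    smoothed_u8 = np.clip(np.round(smoothed), 0, 255).astype(np.uint8)
    return equalize_histogram(smoothed_u8)


# Hypothetical low-contrast stand-in for a thermogram (values 90..139).
rng = np.random.default_rng(0)
thermogram = rng.integers(90, 140, size=(64, 64)).astype(np.uint8)
result = preprocess(thermogram)
```

After equalization the output spans the full 0 to 255 range even though the input occupied only a narrow band, which is the "expanded dynamic range" effect described above.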

Preparing the dataset with these preprocessing methods makes BCDGAN more robust to inherent image variability. The authors test this robustness by intentionally corrupting the dataset with Gaussian noise, motion blur, and contrast distortions. The framework maintains high classification performance under these corruptions, with accuracy decreasing by only ∼1.8%.
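A robustness check of this kind could be set up as follows. This is a sketch under stated assumptions: the corruption strengths, the horizontal blur kernel, the toy images, and the threshold classifier `toy_predict` are all hypothetical stand-ins, and the code does not reproduce the reported figure.

```python
import numpy as np


def add_gaussian_noise(img, sigma, rng):
    """Additive zero-mean Gaussian noise on an image scaled to [0, 1]."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)


def motion_blur(img, length=5):
    """Horizontal motion blur: average `length` (odd) neighbouring pixels."""
    kernel = np.ones(length) / length
    pad = length // 2
    padded = np.pad(img, ((0, 0), (pad, pad)), mode="edge")
    return np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")


def distort_contrast(img, factor):
    """Scale deviations from the mean; factor < 1 flattens contrast."""
    mean = img.mean()
    return np.clip(mean + factor * (img - mean), 0.0, 1.0)


def accuracy(predict, images, labels):
    """Fraction of images whose predicted label matches the true label."""
    preds = np.array([predict(im) for im in images])
    return float((preds == labels).mean())


def toy_predict(im):
    """Stand-in classifier (not BCDGAN): thresholds the mean intensity."""
    return int(im.mean() > 0.5)


rng = np.random.default_rng(0)
labels = np.array([0, 1] * 10)
# Toy images: class 0 has mean 0.3, class 1 has mean 0.7.
images = [
    np.clip(0.3 + 0.4 * y + rng.normal(0, 0.02, (32, 32)), 0, 1)
    for y in labels
]
corrupted = [
    distort_contrast(motion_blur(add_gaussian_noise(im, 0.05, rng)), 0.7)
    for im in images
]

clean_acc = accuracy(toy_predict, images, labels)
corrupt_acc = accuracy(toy_predict, corrupted, labels)
drop = clean_acc - corrupt_acc  # accuracy lost to the corruptions
```

With a real model, `toy_predict` would be replaced by the trained classifier and `drop` compared against the clean-data baseline.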

The Importance of Synthetic ROIs

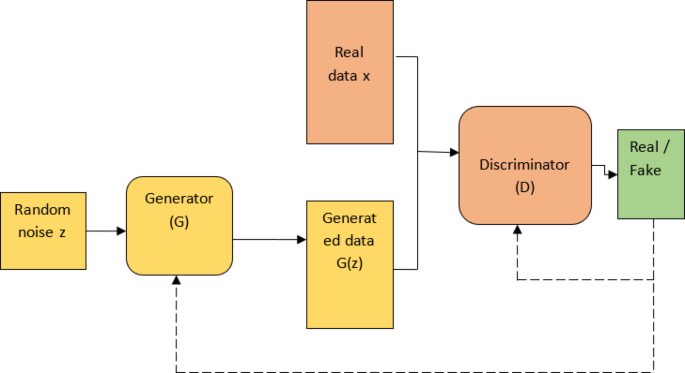

A standout feature of the BCDGAN framework is its ability to generate synthetic Regions of Interest (ROIs) with generative adversarial networks. These synthetic ROIs expose the classifier to a more diverse range of thermal variations and improve the model's generalization. BCDGAN also integrates hybrid feature extraction based on convolutional neural network (CNN) architectures to capture more representative thermal patterns. Combining synthetic data generation with this feature extraction helps the model cope with the variability of thermograms in clinical settings.

Training Methodology

The training regimen for BCDGAN is designed for stability and efficiency. Training uses the Adam optimizer with a learning rate of 0.0002 and moment decay rates β1 = 0.5 and β2 = 0.999. The model is trained with a batch size of 32 for up to 100 epochs, with early stopping based on validation loss to prevent overfitting.
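To make these settings concrete, here is a small sketch of a single Adam update with the stated hyperparameters, plus an early-stopping rule on validation loss. The epsilon value and the `patience` setting are assumptions (the text does not state them), and the loss values in the usage lines are invented for illustration.

```python
import numpy as np

# Values stated in the text.
LEARNING_RATE, BETA1, BETA2 = 2e-4, 0.5, 0.999
BATCH_SIZE, MAX_EPOCHS = 32, 100
# Assumption: a commonly used default, not given in the text.
EPS = 1e-8


def adam_step(param, grad, m, v, t):
    """One Adam update; t is the 1-based step count."""
    m = BETA1 * m + (1 - BETA1) * grad        # first-moment estimate
    v = BETA2 * v + (1 - BETA2) * grad ** 2   # second-moment estimate
    m_hat = m / (1 - BETA1 ** t)              # bias correction
    v_hat = v / (1 - BETA2 ** t)
    new_param = param - LEARNING_RATE * m_hat / (np.sqrt(v_hat) + EPS)
    return new_param, m, v


def early_stopping_epoch(val_losses, patience=10):
    """Return the 1-based epoch at which training would stop."""
    best, waited = float("inf"), 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses)


# Usage with made-up numbers.
param, m, v = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1)
stop_at = early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.71, 0.9], patience=3)
```

On the first step the bias-corrected update has magnitude close to the learning rate regardless of the gradient scale, which is one reason a small rate such as 0.0002 is a common choice in GAN training.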

The framework also uses a Wasserstein GAN with Gradient Penalty (WGAN-GP) configuration, with five discriminator updates per generator update and a gradient penalty coefficient of 10. This setup stabilizes convergence and reduces the risk of mode collapse often associated with adversarial training.
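The WGAN-GP objective and update schedule can be illustrated with a toy critic whose input gradient is available in closed form. This is only a sketch: the critic `f(x) = sum(tanh(W x))`, the array shapes, and the random data are hypothetical, and a real implementation would use a deep-learning framework's automatic differentiation on a CNN critic.

```python
import numpy as np

N_CRITIC = 5       # discriminator (critic) updates per generator update
GP_LAMBDA = 10.0   # gradient penalty coefficient


def critic(x, W):
    """Toy critic f(x) = sum_j tanh(W_j . x); x has shape (batch, dim)."""
    return np.tanh(x @ W.T).sum(axis=1)


def critic_input_grad(x, W):
    """Analytic gradient of the toy critic with respect to its input."""
    return (1.0 - np.tanh(x @ W.T) ** 2) @ W


def critic_loss(real, fake, W, rng, lam=GP_LAMBDA):
    """WGAN-GP critic loss: E[f(fake)] - E[f(real)] + lam * penalty."""
    # Random points on the lines between real and generated samples.
    eps = rng.uniform(size=(real.shape[0], 1))
    x_hat = eps * real + (1.0 - eps) * fake
    grad_norm = np.linalg.norm(critic_input_grad(x_hat, W), axis=1)
    penalty = lam * np.mean((grad_norm - 1.0) ** 2)
    wasserstein = critic(fake, W).mean() - critic(real, W).mean()
    return wasserstein + penalty, penalty


def update_schedule(generator_steps):
    """Order of updates: N_CRITIC critic steps before each generator step."""
    steps = []
    for _ in range(generator_steps):
        steps += ["critic"] * N_CRITIC + ["generator"]
    return steps


rng = np.random.default_rng(0)
W = rng.normal(size=(4, 8))
real = rng.normal(size=(32, 8))
fake = rng.normal(size=(32, 8))
loss, penalty = critic_loss(real, fake, W, rng)
schedule = update_schedule(2)
```

The penalty term pushes the critic's gradient norm toward 1 on the interpolated points, which is the softened Lipschitz constraint that distinguishes WGAN-GP from weight clipping.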

Performance Metrics and Results

The performance evaluation compares BCDGAN with existing deep learning models for breast cancer detection. According to the experimental results, the model achieves high precision, recall, F1-score, and accuracy, indicating that it reliably differentiates malignant from benign cases. BCDGAN achieved an accuracy of 99.01% with a precision of 99.54%, outperforming VGG19, ResNet50, and DenseNet121 on all evaluation metrics.
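For reference, the four metrics are all derived from the confusion-matrix counts. The sketch below uses invented counts purely to show the arithmetic; they are not the study's results.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, F1 and accuracy from confusion-matrix counts.

    The positive class is taken to be 'malignant'.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
    }


# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=90, fp=10, fn=5, tn=95)
```

In a screening setting, recall (the share of malignant cases that are caught) is usually read alongside precision, because a missed malignancy and a false alarm carry very different costs.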

Figures illustrating the training dynamics, such as loss curves and confusion matrices, support these results. The training and validation loss curves converge smoothly, which suggests that the model generalizes beyond the training data.

Visual Evidence of Model Effectiveness

Visual representations also play an important role in assessing BCDGAN's performance. Grad-CAM (Gradient-weighted Class Activation Mapping) visualizations show the zones of thermal activity that the model prioritizes during prediction. By highlighting these areas of interest in thermogram images, the heat maps improve interpretability and can foster clinician confidence in the AI's diagnostic capabilities.
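The core Grad-CAM computation is short once the activations of the last convolutional layer and the gradients of the class score with respect to them are available. The sketch below assumes both are already extracted as arrays of shape `(channels, height, width)`; the tiny example tensors are made up, and in practice a deep-learning framework would supply these values.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map from conv activations and class-score gradients.

    Both inputs have shape (channels, height, width).
    """
    # Channel importance: global average of the gradients per channel.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the activation maps over channels -> (height, width).
    cam = np.tensordot(weights, activations, axes=1)
    # Keep only regions that push the class score up.
    cam = np.maximum(cam, 0.0)
    # Normalize to [0, 1] for display as an overlay.
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam


# Hypothetical 2-channel, 2x2 example.
activations = np.array([[[1.0, 2.0], [3.0, 4.0]],
                        [[0.0, 3.0], [1.0, 0.0]]])
gradients = np.array([np.full((2, 2), 1.0),
                      np.full((2, 2), -1.0)])
heatmap = grad_cam(activations, gradients)
```

The resulting map is typically upsampled to the input resolution and blended with the thermogram so that a clinician can see which regions drove the prediction.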

Comparison with State-of-the-Art Techniques

Compared with existing state-of-the-art methods, BCDGAN consistently achieved better classification metrics, which makes it a promising candidate for clinical integration. Its GAN-driven data augmentation yields a more diverse feature representation and addresses class imbalance, one of the fundamental challenges in medical imaging.
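One simple way to use synthetic samples against class imbalance is to top up each minority class to the size of the largest class. The text does not say how BCDGAN allocates its synthetic ROIs, so the balancing rule, the function name `synthetic_quota`, and the label counts below are all assumptions for illustration.

```python
from collections import Counter


def synthetic_quota(labels):
    """Number of synthetic samples needed per class to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


# Hypothetical label distribution.
labels = ["benign"] * 7 + ["malignant"] * 3
quota = synthetic_quota(labels)
```

A generator trained per class (or conditioned on the class label) would then be asked for `quota[cls]` samples of each class before classifier training.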

Tabulated comparisons with other models show that BCDGAN attains a significantly higher AUC-ROC score, confirming its stronger ability to distinguish malignant from benign cases. The ablation studies further highlight the synergistic effect of combining adversarial learning with hybrid deep learning techniques.
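AUC-ROC can be read as the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign one. The sketch below computes it directly from that pairwise definition; the labels and scores are made up, and the quadratic loop is meant for clarity rather than speed.

```python
def auc_roc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical scores: 1 = malignant, 0 = benign.
auc = auc_roc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Because it depends only on the ranking of scores, AUC-ROC is insensitive to the decision threshold, which is why it is often reported next to accuracy when comparing models.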

Conclusion on Clinical Applications

As AI technologies continue to evolve, integrating frameworks like BCDGAN into clinical practice for breast cancer detection appears feasible. Its reported accuracy and reliability mark a step forward for medical diagnostics and could change how clinicians approach breast cancer screening and early detection.

These techniques promise not only to improve diagnostic accuracy but also to improve patient outcomes through timely and precise interventions.