Sentiment Analysis Framework for COVID-19 Tweets: A Comprehensive Methodology

The global landscape experienced upheaval during the COVID-19 pandemic, leading to varying public sentiments captured in social media discussions. This article explores a proposed methodology for sentiment analysis of COVID-19-related tweets, emphasizing a structured pipeline designed for effectiveness and accuracy.

Methodology Overview

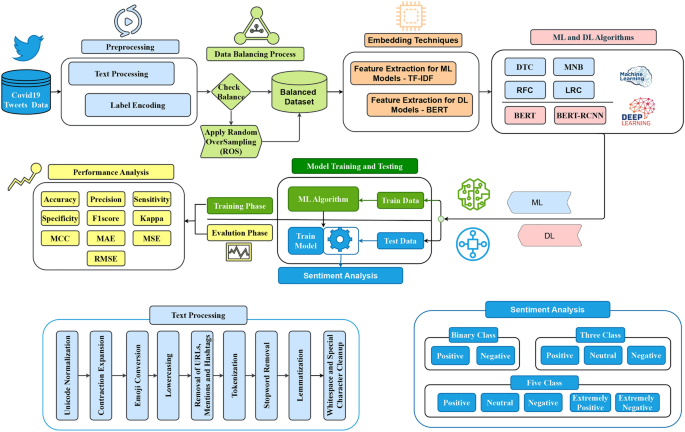

The sentiment analysis framework follows a meticulous pipeline consisting of several critical phases:

- Preprocessing

- Data Balancing

- Feature Extraction

- Model Training

- Performance Evaluation

Each of these components plays a vital role in ensuring robust sentiment classification, as illustrated in the accompanying figure (Fig. 1).

Text Preprocessing

Before analysis, raw tweets undergo extensive preprocessing to standardize the text for machine learning models. This stage includes:

- Unicode Normalization: Harmonizing text by removing accents and special characters.

- Contraction Expansion: Converting contractions (e.g., “can’t” to “cannot”).

- Emoji Conversion: Replacing emojis with descriptive text to retain sentiment value.

- Lowercasing: Ensuring uniformity by converting all text to lowercase.

- Removal of URLs, Mentions, and Hashtags: Filtering out social media artifacts irrelevant to sentiment analysis.

- Tokenization: Splitting text into individual words or tokens.

- Stopword Removal: Excluding common words while preserving critical negations.

- Lemmatization: Reducing words to their base forms for better generalization.

- Whitespace Normalization: Eliminating redundant spaces and non-essential symbols.

This comprehensive preprocessing enhances the quality of textual data, setting a solid foundation for subsequent analysis.
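The steps above can be sketched with the Python standard library alone. This is a minimal illustration, not the authors' implementation: the `CONTRACTIONS`, `EMOJI_TEXT`, `STOPWORDS`, and `NEGATIONS` tables are tiny hypothetical stand-ins for full resources, tokenization is a plain whitespace split, lowercasing is applied before contraction lookup so the table can stay lowercase, and lemmatization is omitted because it needs an external lexicon.

```python
import re
import unicodedata

# Tiny illustrative lookup tables (assumptions, not the lists used in the study).
CONTRACTIONS = {"can't": "cannot", "won't": "will not", "don't": "do not", "isn't": "is not"}
EMOJI_TEXT = {"\U0001F600": " grinning_face ", "\U0001F622": " crying_face "}
STOPWORDS = {"the", "a", "an", "is", "are", "to", "of", "and", "in", "this", "i", "not", "no"}
NEGATIONS = {"not", "no", "never", "cannot"}

_CONTRACTION_RE = re.compile(r"\b(" + "|".join(map(re.escape, CONTRACTIONS)) + r")\b")


def preprocess(tweet):
    """Return a list of cleaned tokens for one raw tweet."""
    # Emoji conversion: replace known emojis with descriptive text.
    for emoji, name in EMOJI_TEXT.items():
        tweet = tweet.replace(emoji, name)
    # Unicode normalization: decompose characters and drop combining accents.
    text = unicodedata.normalize("NFKD", tweet)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.replace("\u2019", "'")  # curly apostrophe to straight
    # Lowercasing.
    text = text.lower()
    # Removal of URLs, mentions, and hashtags.
    text = re.sub(r"https?://\S+|www\.\S+", " ", text)
    text = re.sub(r"[@#]\w+", " ", text)
    # Contraction expansion.
    text = _CONTRACTION_RE.sub(lambda m: CONTRACTIONS[m.group(1)], text)
    # Drop remaining symbols; split() also collapses redundant whitespace.
    text = re.sub(r"[^a-z0-9_\s]", " ", text)
    tokens = text.split()
    # Stopword removal that keeps negations.
    return [t for t in tokens if t not in STOPWORDS or t in NEGATIONS]
```

Keeping negations matters because "not good" and "good" should not collapse to the same token list; here `preprocess("This is not good")` keeps the token `not`.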

Label Encoding

Label encoding converts sentiment labels into numerical values that machine learning models can process. Depending on the task’s complexity, one of three encoding schemes is used:

- Binary Classification: Positive (1) and Negative (0).

- Three-Class Classification: Positive (1), Neutral (0), and Negative (-1).

- Five-Class Classification: Dividing sentiments into categories: Extremely Positive (3), Positive (2), Neutral (1), Negative (0), and Extremely Negative (-1).

This transformation represents each sentiment category as a numeric value suitable for machine learning models.
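As a small sketch, the three schemes can be written as plain dictionaries using the integer codes listed above. The names `BINARY`, `THREE_CLASS`, `FIVE_CLASS`, and `encode` are illustrative. How the original labels are merged for the binary and three-class tasks is not specified here, so this sketch does not attempt it.

```python
# Integer codes taken from the three schemes described in the text.
BINARY = {"Negative": 0, "Positive": 1}
THREE_CLASS = {"Negative": -1, "Neutral": 0, "Positive": 1}
FIVE_CLASS = {
    "Extremely Negative": -1,
    "Negative": 0,
    "Neutral": 1,
    "Positive": 2,
    "Extremely Positive": 3,
}


def encode(labels, scheme):
    """Map string labels to integers; raises KeyError on an unknown label."""
    return [scheme[label] for label in labels]
```

Note that some library loss functions expect class indices starting at 0, in which case the negative codes would need shifting before training.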

Data Balancing

Class imbalance poses a significant challenge in sentiment analysis. To address this, Random OverSampling (ROS) is implemented, a technique that duplicates minority class samples to match the majority class count. By creating a balanced dataset, the model learns effectively from all sentiments, reducing bias towards the prevailing class.
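The idea behind ROS can be sketched in a few lines of standard-library Python. The function name `random_oversample` and the `seed` parameter are assumptions made for illustration; a dedicated library implementation may differ in detail.

```python
import random
from collections import defaultdict


def random_oversample(texts, labels, seed=0):
    """Duplicate randomly chosen minority samples until every class
    has as many samples as the largest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for text, label in zip(texts, labels):
        by_class[label].append(text)
    target = max(len(items) for items in by_class.values())

    out_texts, out_labels = [], []
    for label, items in by_class.items():
        # Sample with replacement to fill the gap to the majority count.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for text in items + extra:
            out_texts.append(text)
            out_labels.append(label)
    return out_texts, out_labels
```

Because the added samples are exact duplicates, oversampling is usually applied to the training split only, so that copies of the same tweet do not appear in both training and test data.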

Dataset Description

The dataset utilized comprises 44,955 tweets collected from various nations during the COVID-19 lockdown. Sourced from Kaggle, the dataset includes vital attributes:

- Location: Indicates where the tweet was posted.

- TweetAt: Captures the timestamp of the tweet.

- OriginalTweet: Contains the text analyzed.

- Sentiment: Categorizes the tweet as Positive, Neutral, or Negative, with Extremely Positive and Extremely Negative also used in the five-class task.

This diverse dataset provides rich insights into public emotions and opinions during the pandemic, facilitating extensive sentiment analysis.

Sentiment Classification Tasks

In the sentiment analysis experiment, tweets are classified into varying sentiment categories:

- Binary Sentiment: Distinguishing between Positive and Negative sentiments.

- Three-Class Sentiment: Differentiating Positive, Neutral, and Negative sentiments.

- Five-Class Sentiment: Analyzing sentiments in a more granular context, including Extremely Positive and Extremely Negative categories.

Challenges in the Dataset

Several challenges hinder the efficacy of sentiment analysis, including class imbalance and mislabeling. Noisy data can degrade model performance, and variability in text formats may introduce further inconsistencies. Addressing these challenges requires careful preprocessing, balancing, and evaluation.

Feature Extraction

For feature extraction, we employ different techniques tailored to the models:

- Deep Learning Models (e.g., BERT-LSTM) utilize BERT embeddings, which offer rich contextualized representations.

- Machine Learning Models employ TF-IDF features that reflect the importance of words in the context of the dataset.

These techniques maximize the strengths of both deep learning and machine learning approaches.

BERT Embeddings for Deep Learning

The BERT (Bidirectional Encoder Representations from Transformers) model generates embeddings that capture nuanced meanings based on context. Utilizing the BERT tokenizer, input sentences are transformed into tokenized representations, allowing effective processing of complex linguistic structures.

TF-IDF for Machine Learning

TF-IDF evaluates word significance within a document relative to the entire dataset. This sparse representation can efficiently handle high-dimensional text data, enhancing classification accuracy through focused feature representation.
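To make the weighting concrete, here is a minimal sketch of the textbook formulation, where a term's weight is its relative frequency in the document multiplied by log(N / df), with N the number of documents and df the number of documents containing the term. The function name `tfidf` is illustrative, and the exact variant used in the study is not stated; scikit-learn's `TfidfVectorizer`, for example, applies a smoothed IDF and L2 normalization by default.

```python
import math
from collections import Counter


def tfidf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors
```

A term that appears in every document gets weight zero under this formulation, which is why words common to all tweets contribute nothing to the features, while rarer, more discriminative words are emphasized.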

Model Training and Evaluation

The sentiment analysis employs both machine learning and deep learning models:

- Machine Learning: Models such as Decision Tree Classifier (DTC), Random Forest Classifier (RFC), Multinomial Naive Bayes (MNB), and Logistic Regression Classifier (LRC) are trained using extracted features.

- Deep Learning: The BERT-LSTM architecture, which combines BERT embeddings with LSTM layers, enhances sequential learning capabilities.

Performance evaluation of these models incorporates various metrics:

- Accuracy (ACC)

- Precision (PREC)

- Recall (REC)

- F1 Score (F1)

- Specificity (SPEC)

- Kappa (KAPPA)

- Mean Absolute Error (MAE)

- Mean Squared Error (MSE)

- Root Mean Squared Error (RMSE)

These evaluations ensure quantitative measures of model effectiveness.
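For the binary task, all of these metrics can be derived from the four confusion-matrix counts. The sketch below assumes labels encoded as 0 (Negative) and 1 (Positive); the function name `binary_metrics` is illustrative, and the multi-class tasks would additionally need an averaging strategy that is not specified here.

```python
import math


def binary_metrics(y_true, y_pred):
    """Compute the listed metrics for 0/1 labels (1 is the positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    n = len(pairs)

    acc = (tp + tn) / n
    prec = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0

    # Cohen's kappa: agreement corrected for chance agreement p_e.
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (n * n)
    kappa = (acc - p_e) / (1 - p_e) if p_e != 1 else 0.0

    # Error metrics treat the encoded labels as numbers.
    mae = sum(abs(t - p) for t, p in pairs) / n
    mse = sum((t - p) ** 2 for t, p in pairs) / n
    return {
        "ACC": acc, "PREC": prec, "REC": rec, "F1": f1, "SPEC": spec,
        "KAPPA": kappa, "MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse),
    }
```

With 0/1 labels, MAE and MSE both equal the error rate (1 minus accuracy), so the error metrics are more informative on the ordinal five-class encoding, where a prediction can miss by more than one step.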

Hyperparameter Tuning

Hyperparameters are essential for optimizing model performance. For machine learning models, a learning rate of 0.1 is used, while for deep learning models like BERT-LSTM, a smaller learning rate of \(5 \times 10^{-5}\) is preferred. Other hyperparameters include:

- Batch Size: Set at 32 for ML models and 64 for deep learning models.

- Epochs: 100 for ML models and 20 for deep learning to prevent overfitting.

- Optimizer: Stochastic Gradient Descent (SGD) for ML and AdamW for deep learning.
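One convenient way to keep these settings together is a small immutable configuration object. The class name `TrainConfig` and its field names are illustrative assumptions; only the values come from the settings listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    learning_rate: float
    batch_size: int
    epochs: int
    optimizer: str


# Values as reported in the text for each model family.
ML_CONFIG = TrainConfig(learning_rate=0.1, batch_size=32, epochs=100, optimizer="SGD")
DL_CONFIG = TrainConfig(learning_rate=5e-5, batch_size=64, epochs=20, optimizer="AdamW")
```

Freezing the dataclass prevents a setting from being changed accidentally partway through an experiment.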

Fine-tuning of the BERT-LSTM Model

To maximize performance, the BERT-LSTM model undergoes a fine-tuning process whereby pre-trained weights are adapted to the target dataset using a lower learning rate. This procedure ensures efficient training with minimized overfitting, adjusting to domain-specific features through an iterative process of backpropagation.

Conclusion

The outlined methodology for sentiment analysis of COVID-19 tweets provides a structured and effective approach. By incorporating a range of preprocessing techniques, balanced data representation, cutting-edge feature extraction, and comprehensive model evaluations, this framework is poised to capture the complexities of public sentiment during unprecedented times. This analysis not only augments understanding of public opinion during the pandemic but also offers a foundational framework that can be adapted for further social media sentiment analysis across different contexts.