Understanding Human Object Vision through Neural Representations

Human object vision sits at the intersection of neuroscience, psychology, and machine learning. Researchers have begun to uncover how the brain processes visual information and recognizes objects. This article surveys key studies and findings that shed light on the workings of our visual system.

The Basis of Functional Specificity

One seminal work in the field is by Nancy Kanwisher, who discussed the notion of functional specificity in the brain. According to Kanwisher (2010), some brain regions are specialized for processing particular kinds of visual stimuli. For example, a region of the fusiform gyrus known as the fusiform face area responds selectively to faces, showing that certain neural mechanisms are tuned to particular types of visual information. This research has paved the way for understanding the functional architecture of the mind.

Object Representations in the Occipitotemporal Cortex

Konkle & Oliva (2012) provided further insights by examining how objects are represented in the occipitotemporal cortex. Their study revealed that the human brain organizes object responses not only by category but also along a dimension: the real-world size of the object. This suggests that the large-scale organization of object-responsive cortex reflects properties of the physical world, not only category membership.

Mapping Object Space

Bao et al. (2020) mapped what they term “object space” in the primate brain. Their findings indicate that the inferotemporal cortex is organized as a map of a low-dimensional space of object features, in which an object's position along a small number of feature axes determines which part of the cortex responds most strongly to it. Note that “space” here refers to an abstract feature space, not to the spatial layout of the visual field. This research illustrates that category-selective regions may be part of a more general organizing scheme that supports recognition across many kinds of objects.

Cross-Species Insights

Kriegeskorte et al. (2008) compared object representations in the inferior temporal cortex of humans and monkeys and found that their categorical structure matches closely. This comparison is valuable because it suggests that this aspect of visual representation has been conserved across primate species. The implications of these findings extend into artificial intelligence, where machine learning models can be designed to mimic these biological processes and then evaluated against the same neural data.
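
Comparisons of this kind are commonly made with representational similarity analysis: each system is summarized by a representational dissimilarity matrix (RDM) over the same stimuli, and the RDMs are then compared. The sketch below illustrates the idea with invented toy numbers, not data from the study. The response patterns, the choice of 1 minus Pearson correlation as the dissimilarity, and the Spearman comparison are assumptions made for illustration.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def rdm_upper(patterns):
    """Upper triangle of the RDM: 1 - Pearson r for each stimulus pair."""
    return [1.0 - pearson(p, q) for p, q in combinations(patterns, 2)]


def ranks(values):
    """Ranks starting at 1; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            out[order[k]] = avg
        i = j + 1
    return out


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Toy response patterns (invented): rows are stimuli, columns are channels.
# Stimulus order: face 1, face 2, house 1, house 2.
human = [  # four hypothetical fMRI voxels
    [2.0, 1.8, 0.2, 0.1],
    [1.9, 2.1, 0.3, 0.2],
    [0.2, 0.1, 1.7, 2.0],
    [0.1, 0.3, 2.2, 1.9],
]
monkey = [  # three hypothetical recording sites
    [5.0, 1.0, 0.5],
    [4.5, 1.2, 0.4],
    [0.6, 1.1, 4.8],
    [0.4, 0.9, 5.2],
]

human_rdm = rdm_upper(human)
monkey_rdm = rdm_upper(monkey)
similarity = spearman(human_rdm, monkey_rdm)
print(f"RDM similarity (Spearman): {similarity:.2f}")
```

Because only the RDMs are compared, the two systems do not need the same number of measurement channels or even the same measurement method, which is what makes the approach suitable for cross-species and model-to-brain comparisons.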

Neural Dynamics of Perceived Similarity

Perceived similarity offers another window into visual cognition. Cichy et al. (2019) investigated the spatiotemporal neural dynamics underlying perceived similarity for everyday objects. Their work relates where and when object representations emerge in the brain to how similar people judge those objects to be, helping to explain our ability to recognize and categorize visual inputs swiftly.

Deep Learning Models and Neural Representation

One of the most exciting developments in the field is the application of deep neural networks. Kriegeskorte (2015) argued that these models provide a robust framework for modeling biological vision and brain information processing. Notably, Ratan Murty et al. (2021) showed that computational models of category-selective brain regions enable high-throughput tests of selectivity: once a model predicts a region's responses accurately, it can be used to screen far more stimuli than could be shown to a participant in a scanner.

Understanding Scene Perception

Understanding how the brain perceives scenes is also vital for a comprehensive view of object vision. Epstein and Baker (2019) explored scene perception and emphasized the interplay between stationary objects and the dynamic contexts in which they exist. By dissecting the neural mechanisms of scene understanding, we gain insights into how context shapes visual recognition.

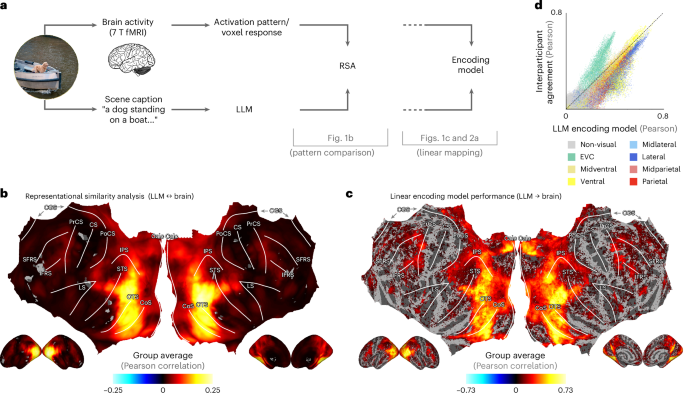

The Intersection of Vision and Language

Research by Popham et al. (2021) has revealed connections between visual and linguistic representations. Their findings indicate that visual and linguistic semantic representations are aligned at the border of the visual cortex, so that regions selective for a visual category lie next to regions that represent the same category in language. This intersection holds potential for integrating neuroscience with artificial intelligence systems that model human cognition.

Individual Differences in Neural Responses

Individual differences are not limited to biological brains. Mehrer et al. (2020) examined individual differences among deep neural network models and found that networks with the same architecture and training procedure, differing only in their random initial weights, can reach similar task performance yet develop substantially different internal representations. For comparisons between models and brains, this suggests that conclusions drawn from a single network instance may not generalize, much as a single participant cannot stand in for a population.
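
One way to quantify how much two network instances differ internally is to compare their representational dissimilarity matrices (RDMs) for the same stimuli. The sketch below is a toy illustration under loud assumptions: the "networks" are hypothetical untrained single layers with random weights, the stimuli are random vectors, and the consistency measure (squared Pearson correlation between RDMs, with Euclidean distance as the dissimilarity) is chosen for illustration and is not claimed to reproduce the paper's pipeline.

```python
import random
from itertools import combinations
from math import sqrt


def make_layer(seed, n_in, n_out):
    """Hypothetical one-layer 'network': Gaussian weights drawn from a seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def activations(weights, stimulus):
    """ReLU applied to a linear projection of the stimulus."""
    return [max(0.0, sum(w * s for w, s in zip(row, stimulus))) for row in weights]


def rdm_upper(patterns):
    """Upper triangle of the RDM using Euclidean distance between patterns."""
    return [sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))
            for p, q in combinations(patterns, 2)]


def shared_variance(x, y):
    """Squared Pearson correlation between two RDM vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov ** 2 / (var_x * var_y)


# Six random toy stimuli with five features each (assumption: no real images).
stim_rng = random.Random(0)
stimuli = [[stim_rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(6)]

# Two instances with identical shape, differing only in the weight seed.
net_a = make_layer(seed=1, n_in=5, n_out=16)
net_b = make_layer(seed=2, n_in=5, n_out=16)

rdm_a = rdm_upper([activations(net_a, s) for s in stimuli])
rdm_b = rdm_upper([activations(net_b, s) for s in stimuli])

consistency = shared_variance(rdm_a, rdm_b)
self_consistency = shared_variance(rdm_a, rdm_a)
print(f"consistency between instances: {consistency:.3f}")
print(f"consistency of an instance with itself: {self_consistency:.3f}")
```

A value near 1 means the two instances arrange the stimuli in nearly the same representational geometry, while lower values indicate that they differ even though nothing but the seed changed.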

The Role of Attention

Attention plays a critical role in how we process visual scenes. Çukur et al. (2013) demonstrated that attention alters the way semantic representations are organized across the human brain. By spotlighting certain features while ignoring others, attention guides our understanding and interaction with complex environments.

Unraveling Object Recognition

The process of recognizing objects involves various neural pathways. Ishai et al. (1999) discussed the distribution of object representation across the ventral visual pathway. Understanding this distribution aids in grasping how different types of information, such as shape and color, are integrated to form coherent object representations.

Establishing Ecological Relevance

As researchers build neural networks intended as models of human vision, the ecological validity of their training data becomes essential. Mehrer et al. (2021), for example, introduced an ecologically motivated image dataset and reported that networks trained on it yield better models of human vision. This shift towards ecologically motivated data collection has significant implications for both neuroscience and computational models in machine learning.

Current Technologies and Future Directions

Advances in techniques such as functional connectivity analysis allow a deeper understanding of how different regions of the brain work together during the visual processing of objects. As technology continues to evolve, collaborative efforts between neuroscience and artificial intelligence will likely yield further insights into the mechanisms underlying human vision.

Through these interconnected studies, we begin to unravel the complex tapestry of human object vision, laying the groundwork for both scientific advancements and potential applications in artificial intelligence. Each finding not only contributes to our theoretical understanding but also propels the integration of neuroscience into technological innovations.