The Rise of AI Deception: Impersonation of U.S. Officials

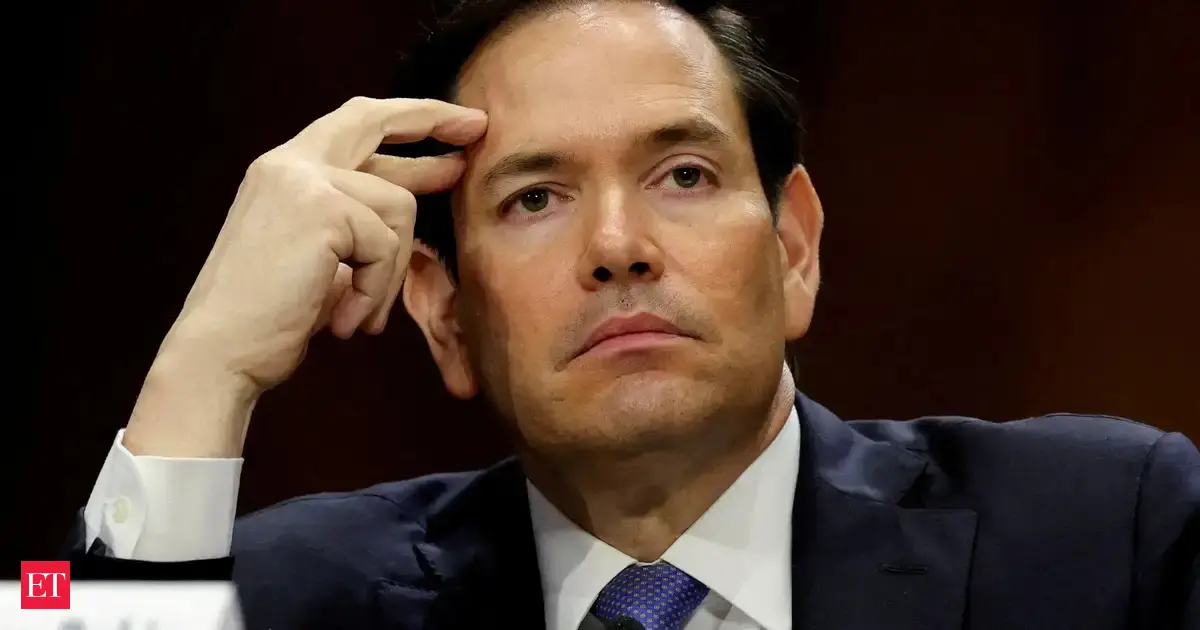

In a brazen act that highlights the vulnerabilities within modern cybersecurity, an imposter posed as U.S. Secretary of State Marco Rubio, using artificial intelligence to craft convincing voice and text messages. The campaign targeted foreign ministers, a U.S. senator, and a state governor, according to a classified cable obtained by U.S. media sources.

The Mechanism of Deception: AI at Play

The impersonator used AI-generated voice and text to sound authentic and set up a Signal account under the name "Marco.Rubio@state.gov." The attacks began in mid-June, with at least two officials receiving AI-generated voicemails. The messages invited recipients to continue the conversation on Signal, an encrypted messaging app, which suggests the impersonator intended to draw targets into deeper, potentially sensitive exchanges. The detailed content of the messages remains undisclosed.

Motivation Behind the Attack

According to U.S. officials, the perpetrator's primary objective appears to be extracting sensitive information or gaining unauthorized access to secure accounts. The cable issued a clear warning: while this specific campaign poses no direct cybersecurity threat, information shared with third parties could be exposed if a targeted person's account is compromised.

State Department’s Response

In response to this evolving threat landscape, the U.S. State Department has initiated an internal investigation and issued alerts to embassies and consulates worldwide. A spokesperson confirmed ongoing monitoring efforts to safeguard sensitive communications, although further details were withheld for security reasons. This incident has become part of a broader cybersecurity alert that underscores the necessity of vigilance in an era fraught with technological misuse.

A Broader Context: Disinformation Campaigns

This incident is not isolated. U.S. officials are linking it to a larger disinformation campaign that advanced AI tools have made more effective. For instance, a concurrent operation, suspected to involve Russian actors, began targeting the Gmail accounts of journalists and activists in April. These operations also exploit fake identities, including those of government officials, raising the stakes for digital trust and security.

The Growing Threat of "Smishing" and "Vishing"

The FBI recently raised alarms about the growing prevalence of "smishing" (SMS phishing) and "vishing" (voice phishing) attacks, which increasingly employ AI-generated content. These tactics rely on impersonating senior officials, which makes it harder for associates and allies to trust the messages they receive. The continued impersonation of Marco Rubio, particularly after earlier incidents involving deepfake videos misrepresenting his views on Ukraine, points to a troubling trend in which such attacks are becoming more sophisticated and harder to detect.

The Arms Race: Detection vs. Deception

Experts are emphasizing the escalating battle between technologies used for deception and those designed to detect it. "It’s an arms race," says Siwei Lyu, a computer science professor at the University at Buffalo. Advancements in AI deepfake technology are outpacing the development of detection tools, making it increasingly difficult to discern genuine communications from fabrications.

Legal and Regulatory Ramifications

As concerns over deepfake technology intensify, experts and lawmakers are advocating for stricter regulations and criminal penalties against the misuse of generative AI. The need for advanced detection technologies is becoming a focal point in discussions about national security and personal privacy, as is the question of how societal norms must adapt to combat these modern cyber threats.

The impersonation of Marco Rubio serves as a critical reminder of the vulnerabilities facing political figures and institutions in an age when misinformation and deception can unfold at unprecedented scale. As the technological landscape continues to evolve, the need for stronger safeguards and regulatory frameworks grows more urgent.