Data Collection in Medical Research: A Comprehensive Overview

MRI Reports and Patient Data

In medical research, data collection underpins every downstream analysis. In this investigation, we collected MRI reports from 6,174 patients with tumors who underwent scanning between January 1, 2019, and December 31, 2024, at three medical facilities. The reports were written by radiologists specializing in different anatomical systems, including the nervous, digestive, and urinary systems.

Each report described the normal anatomical structures in the scanned region, noted any abnormal lesion signals, and offered a preliminary diagnosis. Two independent reviewers examined the original MRI reports and the corresponding scans and classified each finding into one of three categories: benign, atypical, or malignant. Disagreements were resolved by a third oncologist. The original reports were not altered, and all identifiable information (patient details, examination dates, and physician names) was anonymized to protect patient confidentiality.
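The consensus rule for lesion classification (two independent reviewers, with a third reviewer breaking ties) can be expressed as a small function. This is a minimal sketch: the function name `adjudicate` and the lowercase string labels are illustrative assumptions, not part of the study's tooling.

```python
from typing import Optional

# The three lesion categories used by the reviewers (labels are illustrative).
CATEGORIES = ("benign", "atypical", "malignant")


def adjudicate(reviewer_a: str, reviewer_b: str, third_reviewer: Optional[str] = None) -> str:
    """Return the final lesion category for one report.

    If the two independent reviewers agree, their shared label is final;
    otherwise the third reviewer's label is used.
    """
    for label in (reviewer_a, reviewer_b, third_reviewer):
        if label is not None and label not in CATEGORIES:
            raise ValueError(f"unknown category: {label!r}")
    if reviewer_a == reviewer_b:
        return reviewer_a
    if third_reviewer is None:
        raise ValueError("reviewers disagree: a third reviewer's label is required")
    return third_reviewer


print(adjudicate("benign", "benign"))                   # agreement: third reviewer not needed
print(adjudicate("atypical", "malignant", "malignant"))  # disagreement resolved by third reviewer
```

The same two-plus-one pattern applies to the later medical review of the chatbot responses, with the four review levels in place of the three lesion categories.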

Study Design and Chatbot Interaction

The study evaluated two chatbots: GPT o1-preview, developed by OpenAI (Chatbot 1), and DeepSeek-R1, developed by DeepSeek (Chatbot 2). The chatbots were queried over two months, from February 1 to March 31, 2025.

During this period, the chatbots were given queries derived from the original reports. For consistency, all MRI reports and queries were in English. The chatbots were asked to explain each report in layman’s terms, classify the lesion, assess whether surgery was necessary, and recommend a treatment plan based on the report’s content.

To prevent earlier conversations from influencing later answers, a new chat session was started for each report, and every chatbot response was recorded.

Readability Assessments: A Vital Component

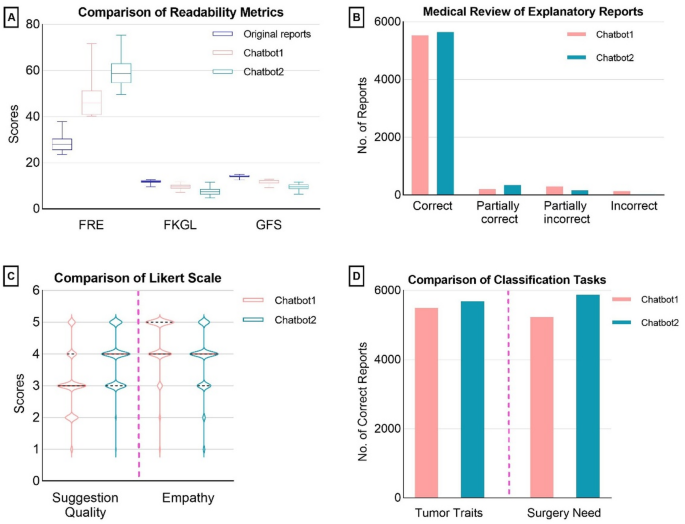

A key part of this study was evaluating the readability of both the original MRI reports and the explanations generated by the chatbots. Readability was measured with an online tool using three indices: the Flesch Reading Ease (FRE) score, the Flesch–Kincaid Grade Level (FKGL), and the Gunning Fog Score (GFS). Higher FRE scores indicate easier text, whereas FKGL and GFS estimate the years of schooling needed to understand a text, so lower values indicate easier text.
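All three indices are computed from average sentence length, average syllables per word and, for GFS, the share of "complex" words. The sketch below uses the standard published formulas; the sentence splitter, word tokenizer and vowel-group syllable counter are simplifying assumptions, and GFS here treats every word of three or more syllables as complex, omitting Gunning's exclusions for proper nouns, familiar jargon, compound words and common suffixes. Online tools use their own heuristics, so this hypothetical `readability` function will not reproduce the study's scores exactly.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (treating 'y' as a vowel),
    discounting a silent final 'e'. Every word counts as at least one syllable."""
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    if n > 1 and w.endswith("e") and not w.endswith("le"):
        n -= 1  # e.g. "make" -> 1, but "table" keeps 2
    return max(n, 1)


def readability(text: str) -> dict:
    """Return FRE, FKGL and GFS for a passage of English text."""
    # Split on ., ! or ? followed by whitespace or end of text, so "3.5 cm" stays intact.
    sentences = [s for s in re.split(r"[.!?]+(?=\s|$)", text) if s.strip()]
    # Words are runs of letters (optionally with an apostrophe); bare numbers are ignored.
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    syllables = [count_syllables(w) for w in words]
    wps = len(words) / len(sentences)  # average words per sentence
    spw = sum(syllables) / len(words)  # average syllables per word
    complex_share = sum(s >= 3 for s in syllables) / len(words)

    return {
        "FRE": 206.835 - 1.015 * wps - 84.6 * spw,  # higher = easier
        "FKGL": 0.39 * wps + 11.8 * spw - 15.59,    # approx. US school grade
        "GFS": 0.4 * (wps + 100 * complex_share),   # approx. years of schooling
    }


report = "A 3.5 cm lesion was seen in the left hepatic lobe. Malignancy cannot be excluded."
print({k: round(v, 1) for k, v in readability(report).items()})
```

Because FRE and FKGL share the same two inputs, they usually move in opposite directions: shortening sentences or replacing polysyllabic terms raises FRE and lowers FKGL.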

After data collection was complete, the chatbot responses underwent medical review. Two medical reviewers independently evaluated each response thread, and a third oncologist resolved any discrepancies.

Categories of Findings from Medical Review

The medical review of the chatbots’ explanatory reports categorized findings into four distinct levels. The term "correct" indicates that all content from the original report was accurately represented without errors. "Partially correct" refers to omissions that do not significantly impact patient management. "Partially incorrect" includes minor inaccuracies that could slightly affect patient management but are not severe enough to change diagnosis or treatment. Lastly, "incorrect" signifies critical errors that could substantially impact how the patient is managed, such as misidentifying a tumor’s location.

The answers on tumor classification and the need for surgical intervention were rated simply as correct or incorrect. In addition, a 5-point Likert scale, from 1 (very poor) to 5 (excellent), was used to rate the quality of the treatment recommendations and the empathy expressed in the chatbots’ responses.

Ethical Considerations in Research

Ethical considerations are paramount in medical research. The Academic Ethics Review Committee of Peking Union Medical College Hospital, Chinese Academy of Medical Sciences, granted this study an exemption from ethical review (Exemption No. SZ-3192). All data were de-identified to protect the privacy of the patients involved. The Institutional Review Board of the same institution waived the requirement for additional informed consent, permitting secondary analysis of the existing data. The study adhered to the principles of the Declaration of Helsinki and followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guidelines.

Statistical Analysis and Interpretation

Several statistical tests were used to compare readability and performance between the chatbot-generated responses and the original MRI reports. The Friedman test compared overall readability across the original reports and the explanations from the two chatbots, while the Wilcoxon signed-rank test compared the two chatbots’ ratings for the quality of their treatment recommendations and the empathy they expressed.
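To make the two rank-based tests concrete, here is a minimal from-scratch sketch using only the Python standard library; the function names and example data are hypothetical. `friedman_3` assumes exactly three related measurements per patient (for example, one readability score for the original report and one for each chatbot's explanation), which lets it use the closed-form upper tail of the chi-square distribution with 2 degrees of freedom, exp(-Q/2); it applies no tie correction. `wilcoxon_signed_rank` uses the large-sample normal approximation with the usual variance correction for tied absolute differences, which matters for Likert ratings, where ties are common.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def friedman_3(rows):
    """Friedman test for n subjects measured under the same 3 conditions.

    rows: one (a, b, c) tuple per subject. Returns (Q, p), where p is the
    upper tail of chi-square with 2 df, i.e. exp(-Q/2). No tie correction.
    """
    if not rows:
        raise ValueError("need at least one subject")
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    for row in rows:
        if len(row) != k:
            raise ValueError("each row must contain exactly 3 measurements")
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r  # ranks are assigned within each subject
    q = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    return q, math.exp(-q / 2)


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Zero differences are dropped and tied |differences| receive average ranks.
    Returns (W_plus, z, p).
    """
    if len(x) != len(y):
        raise ValueError("x and y must be paired samples of equal length")
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(v) for v in d])
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    mean = n * (n + 1) / 4
    # Null variance, reduced by (t^3 - t)/48 for each group of t tied |differences|.
    ties = sum(t ** 3 - t for t in Counter(abs(v) for v in d).values())
    var = n * (n + 1) * (2 * n + 1) / 24 - ties / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return w_plus, z, p


# Hypothetical FKGL scores per patient: (original report, Chatbot 1, Chatbot 2).
fkgl = [(14.2, 8.1, 9.0), (12.9, 7.5, 7.9), (15.1, 9.2, 8.8), (13.4, 8.0, 8.6)]
print(friedman_3(fkgl))

# Hypothetical Likert empathy ratings (1-5) for the same five reports.
print(wilcoxon_signed_rank([5, 4, 5, 4, 5], [3, 3, 3, 3, 3]))
```

The study itself used SPSS. In Python, `scipy.stats.friedmanchisquare` and `scipy.stats.wilcoxon` provide these tests; their results can differ slightly from this sketch because of tie and continuity corrections.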

The chi-square test was used to evaluate differences in medical review performance, that is, how accurately each chatbot’s responses reflected the original reports. All statistical analyses were performed in SPSS version 26.0 (IBM), and a two-tailed p-value below 0.05 was considered statistically significant.
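For the medical review ratings, a chi-square test of independence asks whether the distribution of rating levels differs between the chatbots. The sketch below is a hypothetical, standard-library version: it tabulates the four review levels per chatbot and computes the Pearson chi-square statistic, taking the p-value from the exact closed-form upper tail of the chi-square distribution for integer degrees of freedom. Laying the table out as one row per chatbot is an assumption made for illustration. When expected counts are small (commonly fewer than 5 per cell), the chi-square approximation becomes unreliable and Fisher's exact test is often preferred.

```python
import math
from collections import Counter

# The four medical-review levels, from best to worst.
LEVELS = ("correct", "partially correct", "partially incorrect", "incorrect")


def contingency_table(labels_by_group):
    """One row per group (e.g. chatbot), one column per review level, holding counts."""
    table = []
    for labels in labels_by_group:
        counts = Counter(labels)
        table.append([counts[level] for level in LEVELS])
    return table


def chi2_sf(x, df):
    """Upper-tail probability of chi-square(df) for integer df >= 1, using the recurrence
    Q(x; df + 2) = Q(x; df) + (x/2)^(df/2) * e^(-x/2) / Gamma(df/2 + 1)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        q, nu = math.exp(-x / 2), 2              # Q(x; 2) = e^(-x/2)
    else:
        q, nu = math.erfc(math.sqrt(x / 2)), 1   # Q(x; 1) = erfc(sqrt(x/2))
    while nu < df:
        q += (x / 2) ** (nu / 2) * math.exp(-x / 2) / math.gamma(nu / 2 + 1)
        nu += 2
    return q


def chi_square_independence(table):
    """Pearson chi-square test of independence. Returns (statistic, df, p).

    Empty columns are dropped so no expected count is zero; no continuity correction.
    """
    cols = [j for j in range(len(table[0])) if any(row[j] for row in table)]
    table = [[row[j] for j in cols] for row in table]
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    df = (len(table) - 1) * (len(col_totals) - 1)
    if df < 1 or 0 in row_totals:
        raise ValueError("need at least 2 non-empty rows and 2 non-empty columns")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat, df, chi2_sf(stat, df)


# Hypothetical review labels for the two chatbots.
table = contingency_table([
    ["correct"] * 20 + ["partially correct"] * 10,
    ["correct"] * 10 + ["partially correct"] * 20,
])
print(table)  # the two unused levels appear as zero columns and are dropped by the test
print(chi_square_independence(table))
```

`scipy.stats.chi2_contingency` performs the same test, but by default applies Yates' continuity correction when there is one degree of freedom, so its result for a 2 x 2 table will differ from this sketch.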

This structured approach to data collection and evaluation supports the reliability of the findings and informs how such technology might be integrated into medical diagnostics and patient management.