### Experimental Results of the Proposed Model

This section presents the experimental results of the proposed model and compares its performance with state-of-the-art methods. We analyze the outcomes in detail and explain why our approach outperforms existing methodologies.

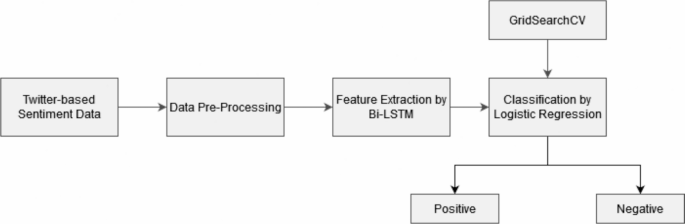

#### Overview of the Architecture

For the sentiment analysis task, we first trained a Bi-LSTM-based model on preprocessed Twitter data. The model extracts features through its multiple hidden layers and is trained with backpropagation. Training used a binary cross-entropy loss, a batch size of 256, 15 epochs, and the Adam optimizer. To reduce overfitting, we added a learning rate scheduler with an initial learning rate of 0.01. The extracted features were then passed to a Logistic Regression (LR) classifier configured with the hyperparameters listed in Table 1. The dataset was split into 80% for training and 20% for testing.
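The training setup above can be sketched in plain Python. The batch size, epoch count, initial learning rate, and 80/20 split come from the text; the text does not name the scheduler, so the step decay below (halving every 5 epochs) and the shuffling seed are hypothetical choices for illustration only, not the configuration actually used.

```python
import random

# Values stated in the text.
BATCH_SIZE = 256
EPOCHS = 15
INITIAL_LR = 0.01
TRAIN_FRACTION = 0.8

# Hypothetical: the scheduler type is not specified; a step decay is assumed here.
DECAY_FACTOR = 0.5
DECAY_EVERY = 5


def step_decay_lr(epoch: int) -> float:
    """Learning rate for a 0-indexed epoch under the assumed step decay."""
    return INITIAL_LR * (DECAY_FACTOR ** (epoch // DECAY_EVERY))


def train_test_split(samples, train_fraction=TRAIN_FRACTION, seed=42):
    """Shuffle a copy of the samples and split it into train and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def num_batches(n_samples: int, batch_size: int = BATCH_SIZE) -> int:
    """Mini-batches per epoch; the last batch may be smaller (ceiling division)."""
    return -(-n_samples // batch_size)


if __name__ == "__main__":
    print([step_decay_lr(e) for e in range(EPOCHS)])
    train, test = train_test_split(range(1000))
    print(len(train), len(test), num_batches(len(train)))
```

In a deep learning framework, a schedule function like `step_decay_lr` would typically be handed to the framework's scheduler callback (for example Keras's `LearningRateScheduler`) rather than applied by hand.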

To assess the model’s reliability and performance, we report precision, recall, F1-score, and accuracy.
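The four metrics can all be derived from a binary confusion matrix, as in the sketch below. It assumes label `1` is the positive class and that precision, recall, and F1 are reported for that class; the text does not state which class is treated as positive or whether any averaging was used.

```python
from dataclasses import dataclass


@dataclass
class BinaryConfusion:
    tp: int  # positives predicted positive
    fp: int  # negatives predicted positive
    fn: int  # positives predicted negative
    tn: int  # negatives predicted negative


def confusion_from_labels(y_true, y_pred, positive=1) -> BinaryConfusion:
    """Count TP/FP/FN/TN, treating `positive` as the positive class."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return BinaryConfusion(tp, fp, fn, tn)


def metrics(cm: BinaryConfusion) -> dict:
    """Precision, recall, F1 and accuracy; a zero denominator yields 0.0."""
    precision = cm.tp / (cm.tp + cm.fp) if cm.tp + cm.fp else 0.0
    recall = cm.tp / (cm.tp + cm.fn) if cm.tp + cm.fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    total = cm.tp + cm.fp + cm.fn + cm.tn
    accuracy = (cm.tp + cm.tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 0, 0, 1, 0]
    y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
    cm = confusion_from_labels(y_true, y_pred)
    print(cm, metrics(cm))
```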

#### Accuracy and Loss Curves

Figure 2 shows the accuracy and loss curves of the proposed Bi-LSTM model. As the number of epochs increases, the loss decreases and the accuracy improves markedly. After about five epochs, both curves stabilize, which suggests that the network extracts the relevant features efficiently with a limited risk of overfitting. The model reaches an overall accuracy of 79%, a reasonable level for sentiment prediction.

To further support these findings, Figure 3 presents the confusion matrix of the Bi-LSTM architecture, which is consistent with the reported performance metrics.

#### Enhanced Approach for Better Performance

Although the initial Bi-LSTM model performed respectably, we found that further processing of its features improved every metric by roughly 3 to 4 percentage points. We passed the Bi-LSTM features through a dense layer and then applied Logistic Regression with the parameters specified in Table 1. This combined Bi-LSTM and LR model achieved 81.84% precision, 83.38% recall, 82.60% F1-score, and 82.42% accuracy. Its confusion matrix, shown in Figure 4, indicates improved sentiment classification. Table 3 summarizes the performance of both models.
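The final stage of the hybrid model, a Logistic Regression classifier applied to fixed feature vectors, can be illustrated with a minimal from-scratch sketch. Everything here is an assumption for illustration: the toy 2-D vectors stand in for the dense-layer outputs, and the learning rate, epoch count, and L2 strength are hypothetical rather than the Table 1 values. In practice a library implementation such as scikit-learn's `LogisticRegression` would normally be used instead.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic_regression(features, labels, lr=0.1, epochs=500, l2=0.0):
    """Batch gradient descent on mean binary cross-entropy plus (l2/2)*||w||^2."""
    n, n_features = len(features), len(features[0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in zip(features, labels):
            # Gradient of the cross-entropy w.r.t. the logit is (p - y).
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * (g / n + l2 * w) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(features, weights, bias, threshold=0.5):
    """Hard 0/1 predictions from the fitted linear model."""
    return [
        int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= threshold)
        for x in features
    ]


if __name__ == "__main__":
    # Hypothetical stand-ins for dense-layer feature vectors.
    X = [[1.0, 0.8], [0.9, 1.2], [1.1, 1.0], [-1.0, -0.9], [-0.8, -1.1], [-1.2, -1.0]]
    y = [1, 1, 1, 0, 0, 0]
    w, b = fit_logistic_regression(X, y)
    print(w, b, predict(X, w, b))
```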

#### Table 3: Performance of the Suggested Models

Table 3 compares the Bi-LSTM model with the improved Bi-LSTM integrated with LR. Every evaluation metric improves when the GridSearchCV-tuned LR is added. Recall rises from 79.70% with the Bi-LSTM model to 83.38% with the enhanced version, meaning that more positive instances are detected. Precision rises from 78.70% to 81.84%, meaning that fewer of the instances predicted as positive are false positives. The F1-score rises from 79.20% to 82.60%, reflecting a better balance between precision and recall. Accuracy rises from 78.99% to 82.42%, an absolute gain of 3.43 percentage points in correct classifications.
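As a quick consistency check on the figures above, the F1-score should equal the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below recomputes it from the reported values and derives the per-metric gains. It assumes all three metrics refer to the same (positive) class; the identity would not necessarily hold for macro-averaged scores.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs in percent)."""
    return 2 * precision * recall / (precision + recall)


# Values as reported in the text for Table 3.
reported = {
    "Bi-LSTM": {"precision": 78.70, "recall": 79.70, "f1": 79.20, "accuracy": 78.99},
    "Bi-LSTM + LR": {"precision": 81.84, "recall": 83.38, "f1": 82.60, "accuracy": 82.42},
}

for name, m in reported.items():
    # Recomputed F1 (rounded to 2 decimals) next to the reported F1.
    print(name, round(f1_from(m["precision"], m["recall"]), 2), m["f1"])

# Absolute gains in percentage points from adding LR.
gains = {
    metric: round(reported["Bi-LSTM + LR"][metric] - reported["Bi-LSTM"][metric], 2)
    for metric in reported["Bi-LSTM"]
}
print(gains)
```

Both recomputed values round to the reported F1-scores (79.20 and 82.60), and the gains range from about 3.1 points (precision) to about 3.7 points (recall).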

#### Comparison with State-of-the-Art Models

Table 4 compares the accuracy of our proposed model with that of the methods discussed in the related work section. The proposed model outperforms previous Bi-LSTM implementations by more than 1% in accuracy and transformer-based models by 0.32%.

We attribute the advantage of our model (Bi-LSTM-Optimized LR) over these state-of-the-art methods to three factors:

1. **Contextual Understanding**: The Bi-LSTM processes each sequence in both the forward and backward directions, so the representation of every token reflects both the preceding and the following context, which matters for sentiment cues such as negation ("not good").

2. **Utility of Hybridization**: Combining Bi-LSTM and LR pairs the sequence representations learned by a deep model with a simple linear classifier, drawing on the strengths of both deep learning and traditional machine learning.

3. **Robust Hyperparameter Optimization**: The optimized hyperparameters and learning rate scheduler contribute to reduced overfitting, which enhances the model’s generalization abilities.

#### Limitations of the Proposed Model

Despite its advantages, the proposed model has several limitations:

1. **Transparency Issues**: The complexity of the Bi-LSTM layer makes its decisions hard to interpret, which also makes problems such as sensitivity to noise harder to diagnose. Future versions of the model could integrate attention mechanisms, SHapley Additive exPlanations (SHAP), or Layer-Wise Relevance Propagation (LRP) to improve interpretability and make the model more suitable for real-world applications.

2. **Computational Constraints**: Training the Bi-LSTM on large datasets is computationally intensive because it processes tokens sequentially and has a large number of parameters. Future work could adopt Gated Recurrent Units (GRUs), which have fewer parameters, or transformer-based architectures, which process sequences in parallel.

3. **Understanding Sarcasm**: Sarcasm and irony, which depend on implicit meaning and context, can degrade the model’s performance. Future iterations may benefit from attention mechanisms, transformers, sarcasm-aware features, and sentiment-shift detection to improve classification accuracy.
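The parameter cost behind the computational-constraints limitation can be made concrete with the standard per-layer counts: each LSTM gate has an input matrix, a recurrent matrix, and a bias, and an LSTM has four gates while a GRU has three. The sketch below assumes one bias vector per gate (some frameworks, such as PyTorch, keep two); the embedding size of 100 and hidden size of 128 are hypothetical, since the text does not give the layer dimensions.

```python
def recurrent_params(input_dim: int, hidden: int, gates: int, bias_vectors: int = 1) -> int:
    """Parameters of one unidirectional recurrent layer.

    Each gate has an input matrix (hidden x input_dim), a recurrent matrix
    (hidden x hidden) and `bias_vectors` bias vectors of length `hidden`.
    """
    return gates * (hidden * input_dim + hidden * hidden + bias_vectors * hidden)


def bilstm_params(input_dim: int, hidden: int, bias_vectors: int = 1) -> int:
    # Two directions, four gates (input, forget, cell candidate, output).
    return 2 * recurrent_params(input_dim, hidden, gates=4, bias_vectors=bias_vectors)


def bigru_params(input_dim: int, hidden: int, bias_vectors: int = 1) -> int:
    # Two directions, three gates (update, reset, candidate).
    return 2 * recurrent_params(input_dim, hidden, gates=3, bias_vectors=bias_vectors)


if __name__ == "__main__":
    # Hypothetical dimensions: 100-d embeddings, 128 hidden units per direction.
    print(bilstm_params(100, 128), bigru_params(100, 128))
```

With these dimensions the Bi-LSTM layer has 234,496 parameters and a Bi-GRU of the same width has 175,872, a 25% reduction. A GRU still processes tokens one step at a time, however, so only the transformer option removes the sequential bottleneck.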

These limitations will guide further refinement of the model, keeping it robust and relevant as sentiment analysis and machine learning methods continue to evolve.