Understanding Vision Language Models (VLMs)

Vision Language Models (VLMs) combine visual understanding with textual input. They are typically built by passing visual tokens from a pretrained vision encoder into a pretrained Large Language Model (LLM) through a projection layer. This design lets a VLM draw on the rich visual representation of the vision encoder as well as the broad knowledge and reasoning capabilities of the LLM. Applications range from accessibility assistants and user interface navigation to robotics and gaming.

The Accuracy-Latency Dichotomy

VLM accuracy generally improves with higher input image resolution, especially for tasks that depend on fine detail, such as document analysis or UI recognition. The catch is that higher resolution also increases time-to-first-token (TTFT), a crucial metric in real-time applications. Two things drive the increase: the vision encoder takes longer to process a larger image, and it emits more visual tokens, which lengthens the LLM’s prefill time.
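The effect of resolution on token count can be made concrete with a small sketch. It assumes a plain ViT-style encoder that cuts a square image into non-overlapping square patches and emits one visual token per patch. The function names, the linear per-token prefill cost, and the example timings are illustrative assumptions, not measurements from FastVLM.

```python
def visual_token_count(image_size: int, patch_size: int) -> int:
    """Tokens produced when a square image is split into square patches."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side


def estimate_ttft_ms(
    encoder_ms: float,
    num_visual_tokens: int,
    num_text_tokens: int,
    prefill_ms_per_token: float,
) -> float:
    """Rough TTFT model: encoder latency plus LLM prefill time.

    Assumption: prefill cost grows linearly with the number of tokens.
    Real prefill cost also depends on attention and hardware details.
    """
    prefill_ms = (num_visual_tokens + num_text_tokens) * prefill_ms_per_token
    return encoder_ms + prefill_ms


# Token count grows with the square of the image side length.
# For example, 336 px with 14 px patches gives 24 x 24 = 576 tokens.
for size in (224, 336, 448, 672):
    print(size, visual_token_count(size, patch_size=14))
```

Doubling the side length quadruples the number of visual tokens, which is why prefill time rises so quickly at high resolution.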

To illustrate, consider a VLM asked to identify a street sign in an image. With a low-resolution input, the model might not be able to answer correctly. With a high-resolution input, it can correctly identify the sign as a “Do Not Enter” sign. The example captures the trade-off: the resolution needed for high accuracy also slows the response.

The FastVLM Breakthrough

To tackle these challenges, a team of Apple ML researchers introduced FastVLM, an approach that improves the trade-off between accuracy and latency while keeping the design simple. FastVLM uses a hybrid architecture designed for high-resolution images, which lets it process visual queries both accurately and quickly. It targets low-latency, real-time applications and is meant to run smoothly on-device, which is essential for maintaining user privacy.

FastVLM’s innovative design includes a new backbone structure known as FastViTHD that improves upon traditional vision encoders. By producing fewer but higher-quality visual tokens, FastViTHD is optimized for high-resolution images, striking a balance between accuracy and efficiency.

Hybrid Vision Encoders: A Game Changer

A hallmark of FastVLM is its use of a hybrid vision encoder. In a systematic comparison of existing pretrained vision encoders, the researchers found that the hybrid FastViT offered a better accuracy-latency trade-off than traditional alternatives. FastViT combines convolutional and transformer components, and it is approximately 8 times smaller and 20 times faster than its transformer-only counterpart, ViT-L/14.

FastViT’s efficiency makes it a strong backbone for FastVLM. The comparison suggests that it not only performs well but also raises expectations for what on-device AI can do.

FastViTHD: Tailored for High-Resolution Input

While FastViT proved to be an effective encoder, the researchers sought further gains and developed FastViTHD, a backbone designed specifically to handle high-resolution images more efficiently. Compared with FastViT, it adds one more processing stage, which improves the quality of the visual tokens it produces without sacrificing speed.
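One way an extra stage can reduce the token count is by downsampling the feature map once more before tokens are emitted. The sketch below assumes a hierarchical encoder in which a stem shrinks the image by a fixed stride and each later stage halves the spatial size. The strides and stage counts are hypothetical and are not taken from the FastViTHD design.

```python
def tokens_after_stages(
    image_size: int, stem_stride: int, num_halving_stages: int
) -> int:
    """Tokens left after a stem and several 2x downsampling stages.

    Assumption: square input, one token per final feature-map cell.
    """
    total_stride = stem_stride * (2 ** num_halving_stages)
    side = image_size // total_stride
    return side * side


# Hypothetical comparison at a 1024 px input with a stride-4 stem.
three_stage = tokens_after_stages(1024, stem_stride=4, num_halving_stages=3)
four_stage = tokens_after_stages(1024, stem_stride=4, num_halving_stages=4)
print(three_stage, four_stage, three_stage // four_stage)
```

Under these assumptions, each additional halving stage cuts the token count by a factor of four, so the LLM has far fewer tokens to prefill.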

Evaluating FastViTHD with different LLMs showed which combinations of image resolution and LLM size work best. One finding is that switching to a larger LLM can be more beneficial than only increasing image resolution. Image resolution and LLM size therefore have to be chosen together, which adds another dimension to VLM design.

FastVLM: The Comprehensive VLM Solution

FastVLM is built on the FastViTHD backbone and uses a Multi-Layer Perceptron (MLP) to project visual tokens into the LLM’s embedding space. This simple design keeps the model efficient without giving up accuracy.
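The projection step can be sketched in plain Python. This is a minimal illustration, not FastVLM’s implementation: the two-layer shape, the GELU activation, the random initialization, the tiny dimensions, and the choice to place visual tokens before text tokens are all assumptions made for the example.

```python
import math
import random
from typing import List

Vector = List[float]


def gelu(x: float) -> float:
    """Exact GELU activation."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Compute weight @ x + bias, with weight shaped (out_dim, in_dim)."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_linear(in_dim: int, out_dim: int, rng: random.Random):
    """Random weights and zero biases (illustrative initialization)."""
    scale = 1.0 / math.sqrt(in_dim)
    weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class MLPProjector:
    """Hypothetical two-layer MLP mapping vision features to LLM embeddings."""

    def __init__(self, vision_dim: int, llm_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w1, self.b1 = init_linear(vision_dim, llm_dim, rng)
        self.w2, self.b2 = init_linear(llm_dim, llm_dim, rng)

    def __call__(self, visual_tokens: List[Vector]) -> List[Vector]:
        projected = []
        for token in visual_tokens:
            hidden = [gelu(v) for v in linear(token, self.w1, self.b1)]
            projected.append(linear(hidden, self.w2, self.b2))
        return projected


def build_llm_input(projected_visual: List[Vector], text_embeddings: List[Vector]) -> List[Vector]:
    """Concatenate visual and text embeddings into one input sequence."""
    return projected_visual + text_embeddings


projector = MLPProjector(vision_dim=4, llm_dim=6)
visual = projector([[0.1, 0.2, 0.3, 0.4]] * 3)   # 3 visual tokens
sequence = build_llm_input(visual, [[0.0] * 6] * 2)  # plus 2 text tokens
print(len(sequence), len(sequence[0]))
```

After projection, every visual token has the same width as a text embedding, so the LLM can process both kinds of token in a single sequence.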

FastVLM stands out for its ability to outperform traditional token pruning and merging methods, which are typically complex and may hinder overall performance. Instead, FastVLM focuses on generating high-quality visual tokens from its encoder, simplifying deployment without compromising accuracy.

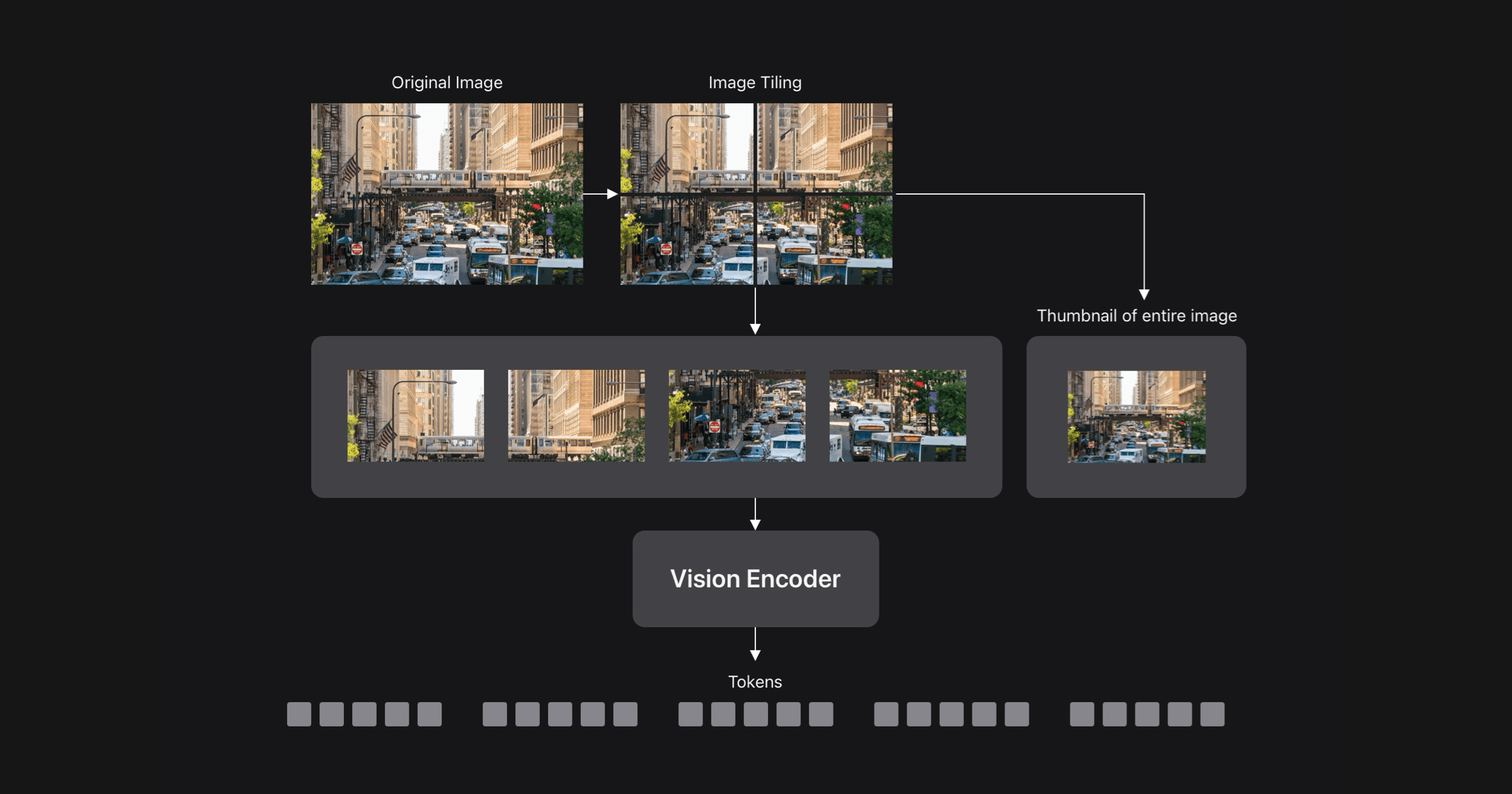

Combining Dynamic Tiling with FastVLM

The researchers also explored combining FastVLM with dynamic tiling. Dynamic tiling divides a high-resolution image into smaller tiles, processes each tile individually, and then combines the results. The method can help under specific conditions, but the findings indicate that FastVLM’s native handling of high resolutions may make dynamic tiling unnecessary for most applications.
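The tiling step itself is easy to sketch. The example below assumes a fixed square tile size and a fixed number of visual tokens per tile, with the per-tile tokens simply concatenated. The function names and numbers are hypothetical, and real tiling schemes typically also choose a tile grid per image and resize or pad edge tiles.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def tile_boxes(width: int, height: int, tile_size: int) -> List[Box]:
    """Return row-major crop boxes that cover the whole image."""
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    boxes = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            # Edge tiles are clipped to the image bounds.
            right = min(left + tile_size, width)
            bottom = min(top + tile_size, height)
            boxes.append((left, top, right, bottom))
    return boxes


def tiled_token_count(
    width: int, height: int, tile_size: int, tokens_per_tile: int
) -> int:
    """Total visual tokens if every tile is encoded separately."""
    return len(tile_boxes(width, height, tile_size)) * tokens_per_tile


boxes = tile_boxes(1024, 768, tile_size=512)
print(len(boxes), boxes)
print(tiled_token_count(1024, 768, tile_size=512, tokens_per_tile=64))
```

Because every tile goes through the encoder separately, the number of encoder passes and the total token count both scale with the number of tiles, which is the extra cost that native high-resolution encoding avoids.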

In tests of FastVLM with and without dynamic tiling, FastVLM without tiling held a notable advantage across most resolutions. It maintained superior performance without the added complexity of tiling, and only in the most demanding scenarios, at extreme image resolutions, did dynamic tiling become worthwhile.

FastVLM vs. the Competition

Compared with other popular VLMs, FastVLM combined higher speed with strong accuracy across benchmarks. For instance, it is 85 times faster than LLaVA-OneVision (0.5B LLM) and 5.2 times faster than SmolVLM. These results indicate that real-time, on-device visual-language understanding is not just theoretical but practically achievable.

By harnessing innovative technologies such as FastVLM, developers can build enhanced applications that provide users with seamless interactions, blending visual inquiry with natural language processing to create richer digital experiences.