“Exploring Vision-Language Models for Medical Report Generation and Visual Q&A: A Comprehensive Review”

Definition of Vision-Language Models (VLMs)

Vision-Language Models (VLMs) are a class of artificial intelligence systems designed to process and generate information by integrating visual and textual data. They combine the capabilities of image recognition and natural language processing (NLP) to facilitate tasks such as report generation and visual question answering (VQA).

Example

In a healthcare setting, a VLM can analyze medical imaging data, such as X-rays or MRIs, and draft natural-language reports describing the findings, which helps radiologists streamline their documentation.

Structural Deepener

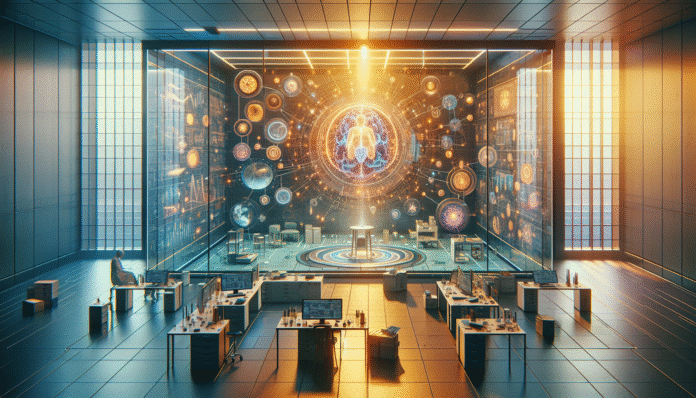

To visualize how VLMs operate in a healthcare scenario, consider the following diagram:

- Input Layer: An image (e.g., CT scan) and patient history text.

- Processing Layer: The model extracts features from the image, such as a suspected tumor, and relates them to the accompanying patient history.

- Output Layer: The system generates a coherent report summarizing the findings.
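The three layers above can be sketched as plain data structures. This is a minimal illustration under stated assumptions, not a real model: `StudyInput`, `Report`, and `generate_report` are hypothetical names, and the processing step is a stub that receives pre-computed findings instead of reading pixels.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StudyInput:
    """Input layer: an image reference plus free-text patient history."""
    image_path: str        # hypothetical path to a CT scan
    patient_history: str


@dataclass
class Report:
    """Output layer: findings rendered as natural-language text."""
    findings: List[str] = field(default_factory=list)

    def to_text(self) -> str:
        if not self.findings:
            return "No findings reported."
        return "Findings: " + "; ".join(self.findings) + "."


def generate_report(study: StudyInput, detected: List[str]) -> Report:
    """Processing layer (stub): relate detected features to the history.

    `detected` stands in for the output of a vision model, which this
    sketch does not implement.
    """
    history = study.patient_history.lower()
    findings = []
    for item in detected:
        # Mark features that the patient history already mentions.
        note = " (noted in history)" if item.lower() in history else ""
        findings.append(item + note)
    return Report(findings)
```

For example, `generate_report(StudyInput("ct_001.png", "Prior history of pulmonary nodule."), ["pulmonary nodule", "pleural effusion"]).to_text()` produces a one-line summary in which only the nodule is marked as previously noted.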

Reflection / Socratic Anchor

What underlying biases might a developer introduce when training a VLM on a limited dataset?

Application / Insight

Implementing VLMs in clinical settings can reduce the time required to draft medical reports, allowing healthcare professionals to spend more of their time on patient care and less on administrative tasks.

The Process Behind Medical Report Generation

Medical report generation using VLMs involves several steps, including image input, feature extraction, and report synthesis.

Definition

Medical report generation is the sequence of steps a VLM follows to convert visual data into a structured, informative textual report.

Example

A VLM may analyze a series of chest X-rays, identify abnormalities such as consolidation or effusion, and automatically draft a case-specific report summarizing these findings.

Structural Deepener

The lifecycle of medical report generation can be outlined as follows:

- Image Acquisition: Capturing high-quality medical images.

- Feature Extraction: Utilizing computer vision techniques to identify key features.

- Data Interpretation: Applying medical knowledge to interpret findings.

- Automated Reporting: Generating a textual report based on the processed data.
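The four stages above can be sketched as a chain of functions that pass a shared state dictionary along. Every function name here is hypothetical, and each stage is a placeholder: features are hard-coded and the "medical knowledge" is a two-entry lookup table, so the example shows only the flow of data, not a working model.

```python
from typing import Callable, Dict, List

# Each stage takes and returns a dict so stages can be chained in order.
Stage = Callable[[Dict], Dict]


def acquire_image(state: Dict) -> Dict:
    # Placeholder: a real system would load pixels from an imaging archive.
    state["image"] = {"id": state["study_id"], "quality_ok": True}
    return state


def extract_features(state: Dict) -> Dict:
    # Placeholder for a vision encoder; the features are hard-coded.
    state["features"] = ["consolidation", "effusion"]
    return state


def interpret(state: Dict) -> Dict:
    # Hypothetical lookup table standing in for medical knowledge.
    knowledge = {
        "consolidation": "Area of consolidation identified",
        "effusion": "Pleural effusion identified",
    }
    state["interpretations"] = [knowledge.get(f, f) for f in state["features"]]
    return state


def write_report(state: Dict) -> Dict:
    # Render one bullet per interpreted finding under a draft header.
    lines = [f"- {text}" for text in state["interpretations"]]
    state["report"] = "DRAFT REPORT\n" + "\n".join(lines)
    return state


def run_pipeline(study_id: str, stages: List[Stage]) -> Dict:
    state: Dict = {"study_id": study_id}
    for stage in stages:
        state = stage(state)
    return state


result = run_pipeline(
    "cxr-1", [acquire_image, extract_features, interpret, write_report]
)
print(result["report"])
```

Keeping each stage as a separate function makes it easier to inspect intermediate results, which matters when asking where an inaccuracy entered the report.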

Reflection / Socratic Anchor

Which part of this process might be vulnerable to inaccuracies, and how would that impact patient care?

Application / Insight

Recognizing potential pitfalls in the report generation process allows practitioners to integrate human oversight effectively, ensuring the accuracy and reliability of generated reports.
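One way to build in human oversight is to flag low-confidence findings for explicit review. The sketch below assumes the model attaches a confidence score between 0 and 1 to each finding, which the text above does not state; `Finding`, `route_findings`, and the default threshold of 0.9 are illustrative choices, not recommended values.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Finding:
    text: str
    confidence: float  # assumed model output in [0, 1]


def route_findings(
    findings: List[Finding], threshold: float = 0.9
) -> Tuple[List[Finding], List[Finding]]:
    """Split findings into pre-filled draft items and items flagged for review.

    Findings at or above the threshold are pre-filled into the draft;
    the rest are flagged so a clinician checks them explicitly.
    """
    pre_filled: List[Finding] = []
    flagged: List[Finding] = []
    for finding in findings:
        if finding.confidence >= threshold:
            pre_filled.append(finding)
        else:
            flagged.append(finding)
    return pre_filled, flagged
```

In this sketch the pre-filled items are still only a draft; the threshold decides where a reviewer's attention is directed first, not whether review happens.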

Visual Question Answering (VQA) in Healthcare

VQA is a critical application of VLMs that allows healthcare practitioners to query visual data using natural language.

Definition

Visual Question Answering (VQA) refers to the ability of a system to answer questions posed in natural language about visual inputs, which lets healthcare professionals query images directly.

Example

A physician might ask, “What is the size of the lesion in this MRI?” and receive an answer grounded in the model's assessment of that image.

Structural Deepener

The architecture of a VQA system can be represented as follows:

- Question Input: A natural language question.

- Image Input: An associated medical image.

- Joint Processing: The model processes both inputs to understand context.

- Output Generation: The system generates an answer specific to the question and image, which can support clinical decision-making.
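The interface described by these four components can be sketched with a toy stand-in. This is not how a real VQA model works: the image is represented by a dictionary of pre-extracted attributes (an assumption made for illustration), the "joint processing" is plain keyword matching, and the values shown are invented.

```python
from typing import Dict

# Hypothetical pre-extracted image attributes; a real VLM would derive
# these from pixels rather than receive them as a dictionary.
ImageFacts = Dict[str, str]


def answer_question(question: str, image_facts: ImageFacts) -> str:
    """Return an answer if the question mentions a known attribute."""
    q = question.lower()
    for attribute, value in image_facts.items():
        if attribute in q:
            return f"The {attribute} is {value}."
    return "Unable to answer from this image."


# Invented example values, for illustration only.
facts = {"size": "12 mm", "location": "left temporal lobe"}
print(answer_question("What is the size of the lesion in this MRI?", facts))
print(answer_question("How large is the lesion?", facts))
```

The second question asks for the same information but does not contain the word "size", so this toy matcher cannot answer it. Real models are far more robust than keyword matching, but the example shows in miniature why question phrasing can matter.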

Reflection / Socratic Anchor

How might the phrasing of a question influence the accuracy of the answer generated by a VQA model?

Application / Insight

Training VQA systems with diverse question types can improve response accuracy, offering nuanced answers for complex medical inquiries.
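To check whether a model handles different question types equally well, accuracy can be broken down by type. The sketch below assumes each evaluation record has already been labeled with a question type and a correct/incorrect flag; the function name `accuracy_by_type` and the type labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_type(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute accuracy per question type.

    `records` holds (question_type, is_correct) pairs.
    """
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for question_type, is_correct in records:
        totals[question_type] += 1
        correct[question_type] += int(is_correct)
    return {t: correct[t] / totals[t] for t in totals}


# Invented evaluation records, for illustration only.
records = [
    ("yes/no", True),
    ("yes/no", True),
    ("measurement", True),
    ("measurement", False),
]
print(accuracy_by_type(records))
```

A type with noticeably lower accuracy than the others is a candidate for more training examples of that kind.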

Challenges and Limitations of Vision-Language Models

Despite their promise, VLMs face several limitations that practitioners must navigate.

Definition

The challenges encompass technological, ethical, and practical issues that can arise during the deployment of Vision-Language Models in healthcare.

Example

Data privacy concerns can hinder the adoption of VLMs, as medical data is often sensitive and subject to stringent regulations.

Structural Deepener

The following table highlights common limitations:

| Challenge | Description | Potential Solutions |
|---|---|---|
| Data Scarcity | Insufficient labeled datasets for specific medical conditions | Collaborate with medical institutions for diverse data |
| Bias in Model Training | Models may reflect biases present in training data | Implement diverse datasets and regular audits |
| Interpretation Complexity | Complexity in analyzing nuanced medical images | Combine VLMs with human oversight |
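The data scarcity row can be made concrete with a simple count of labeled examples per condition. This is a minimal sketch: `underrepresented_labels` is a hypothetical helper, the label names are illustrative, and the minimum count is an arbitrary parameter that a team would have to choose for its own setting.

```python
from collections import Counter
from typing import Dict, Iterable


def underrepresented_labels(labels: Iterable[str], min_count: int) -> Dict[str, int]:
    """Return the conditions with fewer than `min_count` labeled examples."""
    counts = Counter(labels)
    return {label: n for label, n in counts.items() if n < min_count}


# Invented label list, for illustration only.
labels = ["pneumonia"] * 5 + ["effusion"] * 2 + ["pneumothorax"]
print(underrepresented_labels(labels, min_count=3))
```

Running a check like this before training shows which conditions would benefit most from additional data collected in collaboration with other institutions.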

Reflection / Socratic Anchor

What unintended consequences might arise from relying solely on AI for clinical decision-making?

Application / Insight

By addressing these challenges proactively, medical institutions can create a more robust framework for integrating VLMs into daily practice.

Conclusion

The intersection of Vision-Language Models with healthcare offers transformative potential, but it must be approached with diligence and an eye toward ethical considerations.