The Evolution of Linguistic Theory: Insights from Chomsky’s Contributions

Noam Chomsky, a name synonymous with modern linguistics, has shaped our understanding of language for over half a century. His theories pivot on formal structures and cognitive mechanisms, bridging the gap between language and thought. This article delves into Chomsky’s major contributions, exploring the theoretical frameworks he developed and their implications for linguistics and artificial intelligence.

1. Three Models of Language Description (1956)

In his seminal paper, Three Models for the Description of Language, Chomsky compared three ways of describing a language: finite-state (Markov) processes, phrase-structure grammars, and transformational grammars. The formal grammar classes that grew out of this line of work, now known as the Chomsky hierarchy, correspond to increasing levels of structural complexity:

- Finite-state grammars: The simplest of the three, these can be recognized by a machine with a finite number of states. They generate the regular languages and cannot keep track of arbitrarily deep nesting.

- Context-free grammars: More powerful than finite-state grammars, these allow nested structures, which lets them represent many of the syntactic phenomena found in natural languages.

- Context-sensitive grammars: The most complex of the three, these allow rules whose application depends on the surrounding symbols, covering constructions that context-free rules cannot express.

Chomsky’s exploration illuminated the hierarchy of grammatical systems, laying the groundwork for computational linguistics and automated language processing.
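To make the difference between these levels concrete, here is a minimal Python sketch. The three toy languages, (ab)*, a^n b^n, and a^n b^n c^n, are standard textbook examples chosen for illustration, not examples taken from Chomsky’s paper, and the function names are hypothetical.

```python
def accepts_ab_star(s: str) -> bool:
    """Finite-state recognizer for the regular language (ab)*.

    Only two states are needed, no matter how long the input is.
    """
    state = 0  # 0: expecting 'a' (accepting state), 1: expecting 'b'
    for ch in s:
        if state == 0 and ch == "a":
            state = 1
        elif state == 1 and ch == "b":
            state = 0
        else:
            return False
    return state == 0


def accepts_anbn(s: str) -> bool:
    """Recognizer for a^n b^n, following the context-free rule S -> a S b | (empty).

    The recursion mirrors the nesting: each 'a' is paired with a matching 'b'.
    """
    if s == "":
        return True
    if len(s) >= 2 and s[0] == "a" and s[-1] == "b":
        return accepts_anbn(s[1:-1])
    return False


def accepts_anbncn(s: str) -> bool:
    """Direct membership check for a^n b^n c^n, a classic non-context-free language.

    This is a plain string comparison, not a grammar; it only marks
    where the context-free level stops being sufficient.
    """
    n, remainder = divmod(len(s), 3)
    return remainder == 0 and s == "a" * n + "b" * n + "c" * n


print(accepts_ab_star("abab"), accepts_anbn("aabb"), accepts_anbncn("aabbcc"))
```

The first function needs only a fixed amount of memory, while the second relies on recursion depth that grows with the input, which is roughly the intuition behind the step from finite-state to context-free grammars.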

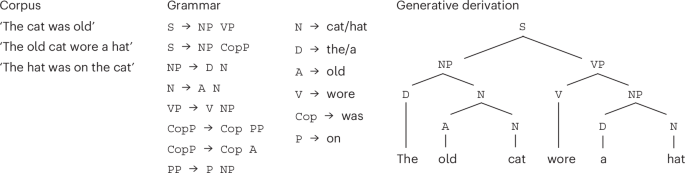

2. Syntactic Structures (1957)

Chomsky’s Syntactic Structures marked a revolutionary turn towards generative grammar, arguing that a finite set of syntactic rules could generate an unbounded array of sentences. This work was pivotal because it shifted the focus from cataloguing observed utterances to a scientific approach in which linguists formulate and test explicit hypotheses about language structure.
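The idea that a small rule set yields an open-ended set of sentences can be sketched in a few lines of Python. The toy grammar below is an assumption made for illustration (it is not a grammar from Syntactic Structures), and `expand` is a hypothetical helper that enumerates every sentence derivable within a given derivation depth.

```python
from itertools import product

# Toy phrase-structure rules (illustrative only). Symbols that appear as
# keys are nonterminals; everything else is treated as a word.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["the", "N"]],
    "VP": [["Vi"], ["Vt", "NP"], ["thinks", "that", "S"]],  # last rule is recursive
    "N": [["linguist"], ["child"]],
    "Vi": [["sleeps"]],
    "Vt": [["sees"]],
}


def expand(symbol, depth):
    """Yield every word list derivable from `symbol` using at most
    `depth` nested rule applications along any branch."""
    if symbol not in GRAMMAR:
        yield [symbol]  # a word: nothing left to rewrite
        return
    if depth == 0:
        return  # depth budget exhausted before reaching words
    for rhs in GRAMMAR[symbol]:
        # Expand each right-hand-side symbol, then combine the alternatives.
        parts = [list(expand(sym, depth - 1)) for sym in rhs]
        for combo in product(*parts):
            yield [word for part in combo for word in part]


for depth in (3, 4, 5, 6):
    count = sum(1 for _ in expand("S", depth))
    print(f"depth {depth}: {count} sentences")
```

Because the rule `VP -> thinks that S` reintroduces `S`, the number of derivable sentences keeps growing as the depth bound is raised, even though the rule set itself never changes.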

3. Finite State Languages with Miller (1958)

In collaboration with George A. Miller, Chomsky explored finite state languages and the limits of the finite-state models in use at the time. This work advanced our understanding of how language can be represented algorithmically. By delineating what finite state grammars can and cannot do, Chomsky and Miller identified fundamental limits on finite-state representations of language.
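One standard way to see such a limit is a pigeonhole argument: a machine with finitely many states must eventually reuse a state while reading a long run of the same symbol, so it cannot tell apart two different counts. The Python sketch below illustrates that textbook argument; it is not a reconstruction of the Chomsky and Miller paper, and the three-state machine and function names are hypothetical.

```python
def run(transitions, start, s):
    """Return the state a deterministic finite-state machine ends in after reading s."""
    state = start
    for ch in s:
        state = transitions[(state, ch)]
    return state


def find_collision(transitions, start):
    """Return (i, j) with i < j such that a^i and a^j lead to the same state.

    Such a pair must exist because the machine has only finitely many states.
    """
    seen = {}  # state -> number of 'a's read when it was first reached
    state = start
    count = 0
    while state not in seen:
        seen[state] = count
        state = transitions[(state, "a")]
        count += 1
    return seen[state], count


# Hypothetical 3-state machine that tries to match a's against b's by
# counting modulo 3 (states 0, 1, 2; start state 0).
transitions = {}
for q in range(3):
    transitions[(q, "a")] = (q + 1) % 3
    transitions[(q, "b")] = (q - 1) % 3

i, j = find_collision(transitions, start=0)
balanced = "a" * i + "b" * i    # belongs to a^n b^n
unbalanced = "a" * j + "b" * i  # does not, since j != i
same = run(transitions, 0, balanced) == run(transitions, 0, unbalanced)
print(i, j, same)
```

Whatever the machine decides about the balanced string, it must decide the same about the unbalanced one, so no choice of accepting states lets it recognize exactly a^n b^n. The same reasoning applies to any finite-state machine, only with a larger collision pair.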

4. The Strong Minimalist Thesis (2023)

Chomsky’s recent work, elaborated in Merge and the Strong Minimalist Thesis, further refines his theories. The minimalist approach suggests that the complexity of languages arises not from elaborate grammatical rules but from a small set of core computational principles, chief among them Merge, a basic operation that combines two syntactic objects into a new one. This theory has implications for how language processing is understood in artificial intelligence.
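A common simplified rendering treats Merge as binary set formation: Merge(X, Y) = {X, Y}. The Python sketch below follows that simplification, assuming words are plain strings and syntactic objects are unordered sets; labeling and the other details of the theory are deliberately left out, and the helper names are hypothetical.

```python
def merge(x, y):
    """Combine two syntactic objects into a new unordered object {x, y}."""
    return frozenset({x, y})


def depth(obj):
    """Number of nested Merge applications used to build obj."""
    if isinstance(obj, str):
        return 0  # a bare word has not been merged with anything
    return 1 + max(depth(member) for member in obj)


# Build "read the book" bottom-up from three words.
dp = merge("the", "book")  # {the, book}
vp = merge("read", dp)     # {read, {the, book}}

print(depth(dp), depth(vp))
print(merge("the", "book") == merge("book", "the"))
```

Because the output of `merge` can itself be an input to `merge`, repeated application of this single operation builds hierarchical structure of any depth. The result is unordered, which is why the two argument orders compare equal.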

5. Chomsky and the Evolution of AI

Chomsky’s theories resonate in the development of language models in AI. The advent of models like BERT and GPT has prompted scholars to revisit Chomsky’s ideas, examining how algorithms relate to human cognitive processes in language acquisition. For example, the question of whether language models simply mimic communication or exhibit genuine understanding remains a hotly debated topic.

Recent discussions reflect on the limitations of AI, particularly its reported difficulty with nuances such as negation. Critics take this as a sign that while AI mimics human language to a degree, it lacks the underlying cognitive frameworks that characterize human communication.

6. Language Acquisition Theories

Chomsky revolutionized our understanding of language acquisition, proposing that humans are biologically predisposed to learn language. His notion of Universal Grammar suggests that all human languages share a common structural foundation. This theory directly influenced how researchers approach language learning and cognitive development in children, emphasizing innate capabilities rather than purely environmental factors.

7. Chomsky’s Critical Voice on AI

Chomsky hasn’t hesitated to critique contemporary AI systems, emphasizing that they operate fundamentally differently from human cognition. In his commentary on models like ChatGPT, he draws a sharp line between algorithmic processing and genuine linguistic competence. He argues that explaining language requires a deeper understanding of the underlying cognitive models, not just the statistical patterns on which such systems rely.

8. Syntactic and Semantic Interplay

The interplay between syntax and semantics presents a complex area of study in linguistics. Chomsky’s frameworks illuminate how syntax can govern semantic interpretation, paving the way for later theories that tackle questions of meaning in linguistics and AI. This remains a vital area of exploration, especially considering how AI interprets language contextually versus how humans understand intention and meaning.

9. The Future of Linguistics: AI and Beyond

As AI technologies continue to evolve, integrating insights from Chomsky’s work will be essential for creating models that better replicate human linguistic capabilities. Researchers emphasize the importance of grounding AI in robust linguistic theories that reflect the nuances of human cognition.

10. Collaborations and Ongoing Research

Chomsky’s influence extends beyond linguistics into interdisciplinary research. Collaborations with cognitive scientists, mathematicians, and AI researchers underscore the multifaceted implications of his work. For instance, current studies explore how language models can provide new insights into human cognition, while also considering ethical ramifications.

A Lasting Impact

Chomsky’s theories and frameworks remain foundational both for understanding human language and for building AI models of it. His approach continues to stir debate and provoke new lines of inquiry, ensuring that his legacy will influence future generations of linguists, cognitive scientists, and AI developers alike.