Dataset Collection in Music Classification: An In-Depth Examination

Introduction to Datasets in Music Research

In recent years, music classification research has gained significant attention, largely due to the increasing availability of large, well-annotated datasets. These datasets are crucial for training modern machine learning models to recognize and classify various musical features. Two notable examples are the MagnaTagATune dataset and the Self-built Ethnic Opera Female Role Singing Excerpt Dataset (SEOFRS). This article delves into the details of these datasets, their specific characteristics, and their significance in music analysis.

MagnaTagATune Dataset

The MagnaTagATune dataset is widely used in research on music classification and tag prediction. It comprises over 25,000 music clips, each 29 seconds long. Each clip is annotated with tags describing attributes such as emotional tone, instrumentation, and genre.

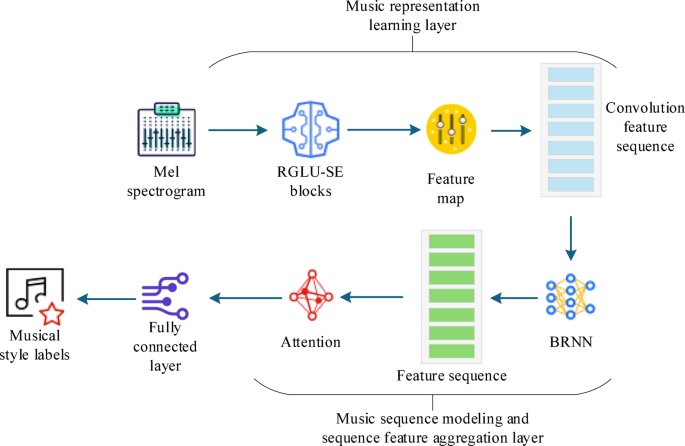

In preparation for analysis, all audio files are converted to mono and resampled to 16 kHz. This step standardizes the input format across clips. The Mel spectrograms are computed with a Fourier transform window length of 512, a hop (sliding window step) size of 256, and 128 Mel frequency bins, yielding a spectrogram of size (1813, 128) per clip, that is, 1813 time frames by 128 Mel bins.

These Mel spectrograms effectively capture the salient features of the audio, making them an ideal input for machine learning and deep learning models.
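The (1813, 128) shape quoted above can be checked with simple arithmetic. The sketch below is only an illustration: the function name `mel_frame_count` is hypothetical, and it assumes the signal is padded so that frames are centered on each hop (a common default in audio libraries), which the source does not state explicitly.

```python
def mel_frame_count(duration_s, sample_rate=16_000, hop_length=256,
                    n_fft=512, center=True):
    """Number of STFT frames for a clip of the given duration.

    center=True assumes the signal is padded by n_fft // 2 on both sides,
    so one frame is produced per hop plus one extra frame (an assumption).
    center=False counts only windows that fit entirely inside the signal.
    """
    n_samples = int(round(duration_s * sample_rate))
    if center:
        return 1 + n_samples // hop_length
    return 1 + (n_samples - n_fft) // hop_length


n_mels = 128
frames = mel_frame_count(29)   # 29 s at 16 kHz = 464,000 samples
print((frames, n_mels))        # (1813, 128)
```

Under the centered-padding assumption the count matches the reported 1813 frames; without padding the same settings would give 1811 frames.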

Self-built Ethnic Opera Female Role Singing Excerpt Dataset (SEOFRS)

In contrast, the Self-built Ethnic Opera Female Role Singing Excerpt Dataset (SEOFRS) serves as a specialized resource focusing on traditional Chinese opera. This custom dataset comprises vocal segments by various female singers, representing a diverse array of character types and emotional expressions.

The curation of SEOFRS emphasizes high-quality, uncompressed audio files so that the nuances of vocal features remain identifiable. The audio samples cover a wide range of traditional opera roles, and care is taken to avoid overrepresenting any specific character type or emotional expression, so that the dataset stays balanced and representative.

Like the MagnaTagATune dataset, the audio files in SEOFRS are preprocessed by conversion to mono and resampling to 16 kHz. The recordings are then sliced into uniform 5-second segments, a duration judged sufficient to capture the essential variations in ethnic opera singing. Finally, Mel spectrograms are generated from each segment using the short-time Fourier transform.
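The slicing step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `slice_segments` is hypothetical, and dropping a trailing remainder shorter than 5 seconds is an assumption, since the source does not say how leftover audio is handled.

```python
def slice_segments(n_samples, sample_rate=16_000, segment_s=5):
    """Return (start, end) sample indices of consecutive fixed-length segments.

    A trailing remainder shorter than one full segment is dropped
    (an assumption; the source does not specify this).
    """
    seg_len = int(segment_s * sample_rate)   # 5 s * 16,000 Hz = 80,000 samples
    return [(start, start + seg_len)
            for start in range(0, n_samples - seg_len + 1, seg_len)]


# A 12-second recording yields two full 5-second segments.
print(slice_segments(12 * 16_000))   # [(0, 80000), (80000, 160000)]
```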

Mel Spectrograms as a Feature Representation

In both datasets, Mel spectrograms serve as the principal feature representation employed in the deep learning models. Given their computational efficiency and perceptual relevance, they are particularly well-suited for understanding complex musical features such as timbre and emotional expression.

Initial experiments compared Mel spectrograms with other common audio features, such as Mel-frequency cepstral coefficients (MFCC) and Chroma features. Results indicated that Mel spectrograms excel in capturing the subtle frequency changes essential for identifying emotional nuances and vocal techniques in ethnic opera performances.

Table 1 (not shown) reports the performance metrics: Mel spectrograms yield an Area Under Curve (AUC) of 0.912 on the MagnaTagATune dataset and an accuracy of 0.872 on the SEOFRS dataset, outperforming traditional features such as MFCC and Chroma.

Data Quality Control and Preparation

Maintaining high data quality is essential for reliable model training and evaluation. To that end, rigorous quality control processes are applied after data processing. Each audio segment is meticulously reviewed to verify its integrity, ensuring it is free from background noise, recording distortion, and other deficiencies.

Finally, the datasets are divided into training, validation, and test sets in an 8:1:1 ratio to keep evaluation consistent across phases. In addition, ten-fold cross-validation is employed: averaging results over the folds gives a more reliable estimate of the model’s performance and makes overfitting to a single split easier to detect.
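A minimal sketch of the 8:1:1 split and the ten-fold procedure is shown below, using only the standard library. The function names and the fixed random seed are hypothetical, and running cross-validation on the training portion is an assumption; the source does not say how the fixed split and the folds are combined.

```python
import random


def split_811(n_items, seed=0):
    """Shuffle item indices and split them 8:1:1 into train/val/test."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_train = n_items * 8 // 10
    n_val = n_items // 10
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])


def k_fold(indices, k=10):
    """Yield (fit_indices, held_out_indices) pairs for k-fold cross-validation."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        fit = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield fit, folds[i]


train, val, test = split_811(1000)
print(len(train), len(val), len(test))   # 800 100 100

# Assumption: the ten folds are drawn from the training portion.
for fit_idx, held_out_idx in k_fold(train, k=10):
    pass  # train on fit_idx, evaluate on held_out_idx, then average the scores
```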

Experimental Environment and Parameter Settings

To obtain meaningful results from these datasets, the configuration and parameters must be fixed in advance. The experimental environment and parameter settings are detailed in Tables 2 and 3 (not shown). Hyperparameters, including hidden layer sizes, learning rates, and batch sizes, are tuned through a small-scale grid search.
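A small-scale grid search of this kind can be sketched as below. All candidate values in `grid` are hypothetical placeholders (the actual ranges are in the tables not shown here), and `toy_score` is a stand-in for training a model and returning its validation score; it does not represent any result from the study.

```python
from itertools import product


def grid_search(grid, evaluate):
    """Try every combination in `grid` and return the best (config, score)."""
    best_config, best_score = None, float("-inf")
    for values in product(*grid.values()):
        config = dict(zip(grid.keys(), values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Hypothetical candidate values, for illustration only.
grid = {
    "hidden_size": [64, 128, 256],
    "learning_rate": [1e-3, 1e-4],
    "batch_size": [16, 32],
}


def toy_score(config):
    """Placeholder objective; replace with training plus validation scoring."""
    return -abs(config["hidden_size"] - 128) - abs(config["batch_size"] - 32)


best_config, best_score = grid_search(grid, toy_score)
print(best_config)
```

The search visits 3 × 2 × 2 = 12 configurations, which is why it stays practical only when each hyperparameter has a handful of candidate values.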

For example, a 1D convolutional structure with 64 to 128 convolution kernels may be used to process the input along its time axis. A suitable configuration supports feature extraction and classification by accounting for the temporal dynamics that distinguish vocal performance styles.
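To give a sense of scale for a layer with 64 or 128 convolution kernels, the sketch below computes the output length and parameter count of a single 1D convolution using the standard formulas. Treating the 128 Mel bins as input channels, the kernel size of 3, and the helper names are all assumptions made for illustration; the source does not specify them.

```python
def conv1d_output_length(length, kernel_size, stride=1, padding=0):
    """Output length of a 1D convolution (dilation of 1)."""
    return (length + 2 * padding - kernel_size) // stride + 1


def conv1d_param_count(in_channels, out_channels, kernel_size, bias=True):
    """Number of trainable weights (plus biases) in a 1D convolution layer."""
    return in_channels * out_channels * kernel_size + (out_channels if bias else 0)


# Assumed setup: 1813 time frames, 128 Mel bins as channels, kernel size 3.
print(conv1d_output_length(1813, kernel_size=3))             # 1811
print(conv1d_output_length(1813, kernel_size=3, padding=1))  # 1813
print(conv1d_param_count(128, 64, kernel_size=3))            # 24640
print(conv1d_param_count(128, 128, kernel_size=3))           # 49280
```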

Performance Evaluation Methods

Performance metrics, including classification accuracy and Area Under Curve (AUC), provide a quantitative measure of the effectiveness of the models in classifying music performance styles.

AUC is the area under the receiver operating characteristic (ROC) curve; it summarizes how well a model ranks positive examples above negative ones across all decision thresholds. Comparing models on these metrics gives a fuller picture of their capabilities and supports model selection for subsequent experiments.
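The two metrics named in this section can be sketched in a few lines of standard-library Python. The AUC here uses the rank-based definition (the fraction of positive/negative pairs in which the positive example receives the higher score, with ties counted as one half), which is equivalent to the area under the ROC curve for binary labels. The function names are hypothetical, and how per-tag scores are aggregated for a multi-label task such as tag prediction is not specified in the source.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true, scores):
    """Binary ROC AUC via pairwise comparison of positive and negative scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))   # 0.75
print(accuracy([0, 1, 1, 0], [0, 1, 0, 0]))           # 0.75
```

The pairwise form is quadratic in the number of examples, so it suits small illustrations; for large evaluation sets a sorting-based implementation from an established library is the more practical choice.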

Conclusion

Through carefully curated datasets like MagnaTagATune and SEOFRS, this study explores the potential of deep learning in music classification research. By applying sophisticated methodologies in audio feature extraction and rigorous evaluation parameters, researchers can leverage these datasets to enhance the understanding of musical styles and improve classification accuracy in real-world applications.

As this field evolves, the findings presented here not only affirm the importance of high-quality datasets but also highlight the significance of innovative processing techniques in advancing the study of music classification.