The Future of Computing: Insights into In-Memory Computing and AI Hardware Acceleration

In recent years, advances in artificial intelligence (AI) and machine learning (ML) have accelerated, often at the cost of substantial energy consumption. As deep learning models and their computations grow, researchers are turning to hardware solutions that promise to reduce this energy footprint. One promising area is in-memory computing (IMC), which exploits the physical characteristics of memory technologies to perform computations more efficiently. This article explores in-memory computing, accelerator architectures, and the environmental implications of these technologies.

Understanding In-Memory Computing

In-memory computing performs computation inside the memory array where the data already resides, instead of moving operands back and forth between memory and a separate processor. This removes much of the data-movement bottleneck of traditional architectures and improves both speed and energy efficiency, especially for large neural networks. The motivation is partly environmental: according to a recent analysis by Wu et al. (2022), the environmental implications of AI are vast, making sustainable AI solutions crucial as we move into an increasingly data-driven future.
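To make the data-movement argument concrete, here is a rough back-of-the-envelope sketch for a single matrix-vector product. It is an illustration under simplifying assumptions, not a model of any real system: the function names and the layer size are hypothetical, every value is assumed to occupy one byte, and caching, batching, and peripheral-circuit costs are ignored.

```python
def bytes_moved_conventional(rows, cols, bytes_per_value=1):
    """Weights, inputs, and outputs all cross the memory-processor boundary."""
    weights = rows * cols
    return (weights + cols + rows) * bytes_per_value


def bytes_moved_in_memory(rows, cols, bytes_per_value=1):
    """Weights stay in the array; only inputs and outputs move."""
    return (cols + rows) * bytes_per_value


rows, cols = 1024, 1024  # hypothetical layer size, chosen for illustration
conventional = bytes_moved_conventional(rows, cols)
in_memory = bytes_moved_in_memory(rows, cols)
ratio = conventional / in_memory
print(conventional, in_memory, ratio)
```

Under these assumptions the weight matrix dominates the traffic, which is why keeping weights stationary in the array is attractive. Real systems reuse weights across batches and cache data, so the practical gap is smaller than this idealized ratio suggests.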

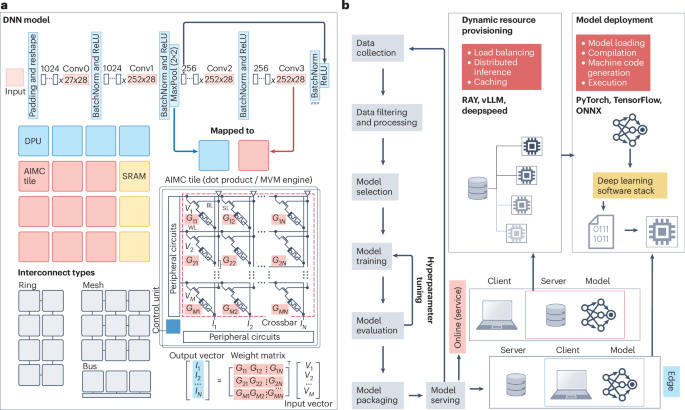

Accelerator Architectures: A Deep Dive

Accelerator architectures form the backbone of many modern deep learning systems. Chen et al. (2020) provide a comprehensive survey of existing architectures, emphasizing designs tailored for deep neural networks (DNNs). These include specialized processors such as GPUs, TPUs, and FPGAs, which offer significant performance improvements over general-purpose CPUs on the dense linear algebra that dominates ML workloads. Hardware design therefore matters directly: it determines the speed and efficiency with which complex ML workloads can run.

Tensor Processing Units (TPUs)

The introduction of Tensor Processing Units (TPUs) has contributed notably to the advance of deep learning. Jouppi et al. (2017) conducted an in-depth performance analysis of TPUs within datacenters, illustrating their ability to outperform contemporary CPUs and GPUs on specific ML tasks. The TPU analyzed in that study targets inference rather than training, so the performance edge translates into less time and energy spent serving complex models.

Memristor-Based Accelerators

Memristors, an emerging class of resistive memory devices, are also attracting attention. Huang et al. (2024) outline how memristor-based hardware accelerators offer improved performance and reduced energy consumption for AI applications. Because these devices can both store a value and take part in the computation, they enable low-power, high-speed operation, making them an attractive option for future AI hardware.
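A common way to picture memristor-based computation is an idealized crossbar: input voltages drive the rows, each device contributes a current by Ohm's law, and the currents sum along each column by Kirchhoff's current law, giving I_j = sum_i V_i * G_ij. The sketch below assumes ideal devices (no noise, wire resistance, or nonlinearity) and uses arbitrary units. The function names and the differential-pair scheme for signed weights are illustrative choices, not taken from the works cited above.

```python
def crossbar_mvm(voltages, conductances):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G_ij.

    voltages: one value per row
    conductances: row-major matrix of non-negative device conductances
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law per device; the sum per column models Kirchhoff's law.
            currents[j] += v * g
    return currents


def signed_mvm(voltages, g_plus, g_minus):
    """Signed weights as the difference of two non-negative conductance arrays."""
    pos = crossbar_mvm(voltages, g_plus)
    neg = crossbar_mvm(voltages, g_minus)
    return [p - n for p, n in zip(pos, neg)]


# Effective weights W = G+ - G- = [[1, -2], [-1, 3]] (arbitrary units).
g_plus = [[1.0, 0.0], [0.0, 3.0]]
g_minus = [[0.0, 2.0], [1.0, 0.0]]
result = signed_mvm([1.0, 2.0], g_plus, g_minus)
print(result)
```

The whole multiply-accumulate happens where the weights are stored, which is the source of the efficiency claims. Physical arrays deviate from this ideal in ways the sketch leaves out.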

Efficiency Matters: Sustainable AI Approaches

The focus on sustainable AI is more urgent than ever, as the environmental costs of large-scale data center operations continue to grow. The analysis by Wu et al. (2022) sheds light on these costs and urges the development of eco-friendly hardware solutions. Analog AI, as explored by Ambrogio et al. (2023), represents a significant step towards energy-efficient computation, demonstrating speech recognition and transcription at lower power.

Hardware-Software Co-Design

Hardware-software co-design is gaining traction as a way to optimize performance and efficiency for AI workloads. Recent work by Gallo et al. (2023) and Jain et al. (2023) investigates architectures that blend analog and digital components, yielding systems that improve efficiency while retaining high accuracy. This hybrid approach shows how systems can be tailored to specific applications and workloads, thereby reducing overall energy consumption.
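One way to picture a blended analog and digital pipeline is a layer whose weighted sums come from an analog array, pass through an analog-to-digital converter (ADC), and are then processed digitally. The following is a minimal sketch under assumptions, not the design of any cited system: the ADC model (symmetric clipping plus uniform rounding), the `full_scale` and `bits` parameters, and the function names are all hypothetical.

```python
def adc_quantize(value, full_scale, bits):
    """Clip to [-full_scale, full_scale] and round to a uniform signed grid."""
    levels = 2 ** (bits - 1) - 1
    clipped = max(-full_scale, min(full_scale, value))
    code = round(clipped / full_scale * levels)
    return code * full_scale / levels


def hybrid_layer(x, weights, full_scale=4.0, bits=8):
    # "Analog" part: weighted sums, as an in-memory array would produce them.
    sums = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    # Conversion: each sum passes through the modelled ADC.
    digitized = [adc_quantize(s, full_scale, bits) for s in sums]
    # Digital part: ReLU applied to the quantized values.
    return [max(0.0, d) for d in digitized]


weights = [[0.5, 0.25], [-1.0, 1.0], [4.0, -4.0]]
out = hybrid_layer([1.0, -1.0], weights)
print(out)
```

The third output saturates at `full_scale`, and the first carries a small rounding error. This shows why co-design matters: ADC range and resolution have to be chosen together with the network's value distribution, and both affect accuracy and energy.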

Optimized Compilation for AI Accelerators

Effective compilation techniques are critical for maximizing the performance of IMC systems and deep learning accelerators. Advances presented by Siemieniuk et al. (2022) emphasize the need for automated toolchains that allow efficient mapping and execution of ML workloads on heterogeneous platforms. Developing such frameworks supports the effective deployment of AI applications while minimizing resource utilization.
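One mapping step such a toolchain typically has to perform is splitting a layer's weight matrix across fixed-size memory arrays. The sketch below shows a simple row-major tiling together with a utilization estimate. The 256 x 256 tile size, the layer shape, and the function names are hypothetical, and real compilers also handle scheduling, data routing, and partial-sum accumulation, which are omitted here.

```python
import math


def tile_matrix(rows, cols, tile_rows, tile_cols):
    """Return (row_start, col_start, height, width) for each tile."""
    tiles = []
    for r in range(0, rows, tile_rows):
        for c in range(0, cols, tile_cols):
            height = min(tile_rows, rows - r)
            width = min(tile_cols, cols - c)
            tiles.append((r, c, height, width))
    return tiles


def utilization(rows, cols, tile_rows, tile_cols):
    """Fraction of allocated array cells that actually hold weights."""
    n_tiles = math.ceil(rows / tile_rows) * math.ceil(cols / tile_cols)
    return rows * cols / (n_tiles * tile_rows * tile_cols)


# Hypothetical 300 x 500 layer mapped onto 256 x 256 arrays.
tiles = tile_matrix(300, 500, 256, 256)
util = utilization(300, 500, 256, 256)
print(len(tiles), tiles[-1], util)
```

Low utilization means allocated cells sit idle, so an automated mapper may prefer to reshape or pack layers. That trade-off between performance and resource use is the one the compilation work addresses.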

The Road Ahead: Future Trends and Innovations

Looking forward, several trends are shaping the IMC landscape. The move towards non-volatile memory technologies, as discussed by Si et al. (2021), offers a path to more durable and energy-efficient computing. Research also continues to focus on improving the accuracy and reliability of these systems while balancing computational demands against energy efficiency, as highlighted by Lammie et al. (2024).

Customizable Neural Network Architectures

Neural architecture search methods, particularly those focusing on in-memory computing, offer avenues for optimizing DNN structures tailored to specific applications. The review by Krestinskaya et al. (2024) illustrates how innovative architectures can adapt to the unique features of IMC hardware, paving the way for more versatile implementations.
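As a toy illustration of hardware-aware search, the sketch below enumerates hidden-layer widths for a small dense network and keeps only candidates that fit a budget of memory tiles. Everything here is an assumption made for illustration: the tile size, the budget, the candidate widths, and especially the score, which uses parameter count as a stand-in for validation accuracy. Actual neural architecture search methods train or estimate each candidate and use far richer hardware models.

```python
import math
from itertools import product


def tiles_needed(rows, cols, tile=256):
    """Tiles required to hold one rows x cols weight matrix."""
    return math.ceil(rows / tile) * math.ceil(cols / tile)


def network_tiles(widths, tile=256):
    """Total tiles for a chain of dense layers with the given widths."""
    return sum(tiles_needed(a, b, tile) for a, b in zip(widths, widths[1:]))


def parameter_count(widths):
    return sum(a * b for a, b in zip(widths, widths[1:]))


def search(input_dim, output_dim, hidden_choices, depth, tile_budget):
    """Exhaustive search over hidden widths under a tile budget."""
    best = None
    for hidden in product(hidden_choices, repeat=depth):
        widths = (input_dim, *hidden, output_dim)
        if network_tiles(widths) > tile_budget:
            continue  # candidate does not fit on the hypothetical hardware
        score = parameter_count(widths)  # placeholder for validation accuracy
        if best is None or score > best[0]:
            best = (score, widths)
    return best


best = search(784, 10, [128, 256, 512], depth=2, tile_budget=8)
print(best)
```

The hardware constraint prunes the search space before any scoring happens, so the selected architecture reflects the tile granularity of the target as much as the scoring function.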

The Synergy of Technology and Environment

The interplay between technological advancement and environmental concern is pivotal. Collaboration across academia, industry, and policymakers is needed to devise standards and guidelines that optimize performance without compromising environmental integrity. This synergy between innovation and sustainability is more than an advantage: the future of the technology depends on it.

Research continues to evolve, shedding light on new methodologies, architectures, and hardware frameworks that promise significant contributions to the realms of AI, machine learning, and computing efficiency. As we harness these burgeoning technologies, the focus on environmental sustainability remains a guiding principle in their development and application.