Leveraging YOLOv5 for Accurate Pollinator Monitoring

Overview of YOLOv5 Performance

Recent studies highlight the use of optimized models such as YOLOv5-small for efficient, real-time monitoring of pollinator populations. Our results show that, when trained on citizen-science images, this model localizes and classifies Hymenoptera and Diptera individuals well. It achieved a localization accuracy of 91.21% and a classification accuracy of 80.45% for Hymenoptera, and a localization accuracy of 80.69% and a classification accuracy of 66.90% for Diptera.

Interestingly, performance dipped for other flower visitors (referred to as OtherT), which are typically smaller and blurrier insects. Nevertheless, the model displayed higher accuracy (92.51%) for this group. This suggests that it mislabels Hymenoptera or Diptera as OtherT less frequently than expected, so its OtherT predictions can be treated as fairly reliable when separating these categories under varying conditions.

The Need for Lightweight Models

The choice to use lightweight models such as YOLOv5-small stems from their promise of energy-efficient deployment, which is crucial in field settings where power is limited. YOLOv5-small outperformed its counterparts in F1 score, in line with prior studies suggesting that models with greater capacity typically perform better. This trend matters for future work, particularly as we consider higher-capacity architectures that remain compatible with in-situ hardware constraints.
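For readers unfamiliar with the metric, the F1 score used to compare models is the harmonic mean of precision and recall. The sketch below shows the calculation from true-positive, false-positive, and false-negative counts. The model names and counts are hypothetical and are not results from the study.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Return the F1 score (harmonic mean of precision and recall)."""
    if tp == 0:
        # No true positives means precision and recall are both zero.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical (tp, fp, fn) counts for two detectors on the same test images.
counts = {"model_a": (80, 10, 20), "model_b": (70, 5, 30)}
scores = {name: f1_score(*c) for name, c in counts.items()}

for name, score in scores.items():
    print(f"{name}: F1 = {score:.3f}")
```

Because F1 penalizes both missed arthropods and spurious detections, it is a convenient single number for ranking detectors, although it hides which of the two error types dominates.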

Importance of Inference Time

A critical aspect of using these models in the field is inference time, that is, how quickly the model can make predictions. Fast inference is indispensable for real-time tracking and directly affects the accuracy of visitor counts per flower. Previous findings reported a maximum inference speed of 49 frames per second for a single-class YOLOv5-nano detector on dedicated GPU hardware. Our YOLOv5-small model could likely be sped up in a similar way by running it at lower input resolutions.
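Inference time (latency per frame) and inference speed (frames per second) are reciprocals of each other: 49 frames per second corresponds to roughly 20 ms per frame. The sketch below shows one way throughput could be measured for any prediction function. The `predict` callable and the `frames` list are placeholders and do not represent the detector used in the study.

```python
import time
from typing import Callable, Sequence


def latency_ms_to_fps(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / latency_ms


def measure_fps(predict: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 2) -> float:
    """Time `predict` over all frames and return the average frames per second."""
    # Warm-up calls are excluded from timing (caches, lazy initialisation).
    for frame in frames[:warmup]:
        predict(frame)
    start = time.perf_counter()
    for frame in frames:
        predict(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")


# Placeholder "model" that just returns its input; swap in a real detector call.
fps = measure_fps(lambda frame: frame, list(range(100)))
print(f"{fps:.0f} frames per second")
```

Measurements of this kind only transfer between setups if hardware, input resolution, and batch size are held constant.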

Specific Challenges with Model Performance

One of the challenges encountered during testing was a higher rate of false positives (FP) in out-of-distribution (OOD) images than in in-distribution images. In practical terms, this suggests the need for dataset-specific tuning. For instance, images dominated by single arthropods benefitted from lower non-maximum suppression (NMS) thresholds, while images with denser groups of overlapping bounding boxes required higher thresholds for better accuracy.

This highlights the complexity of localizing and identifying smaller and less sharp arthropods, such as many OtherT. Thus, optimizing NMS parameters based on specific datasets can further enhance detection accuracy.
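The effect of the NMS threshold can be illustrated with a minimal, generic sketch of greedy NMS. It assumes that "NMS threshold" here means the intersection-over-union (IoU) threshold above which a lower-scoring box is suppressed. The boxes and scores are made up for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float) -> List[int]:
    """Greedy NMS: keep a box unless it overlaps a kept, higher-scoring box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two strongly overlapping boxes (IoU = 2/3) and one isolated box.
boxes = [(0, 0, 10, 10), (2, 0, 12, 10), (50, 50, 60, 60)]
scores = [0.9, 0.8, 0.7]

print(nms(boxes, scores, iou_threshold=0.45))  # overlapping box is suppressed
print(nms(boxes, scores, iou_threshold=0.70))  # overlapping box is kept
```

With the lower threshold the second box is treated as a duplicate of the first, which suits images containing a single arthropod. With the higher threshold both boxes survive, which suits images where individuals genuinely overlap.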

Enhancing Model Detection and Classification Strategies

Our research indicates several promising future strategies for improving localization and classification performance:

- Integration of Diverse Datasets: Future studies could see greater success by merging citizen-science contributions with field images to enhance model robustness. As numerous studies have pinpointed a shortage of annotated datasets for small arthropods, especially pollinators, such integration is vital for real-world monitoring.

- Utilizing a Two-Step Approach: Two-step detection could streamline the process by first locating arthropods and then classifying the detected image crops. This method offers greater flexibility, as users can apply classification techniques tailored to their specific scenarios.

- Pre-processing Images for Clarity: Preprocessing time-lapse images to emphasize relevant features would improve their clarity. While preprocessing in real time may be energy-intensive, applying these techniques to stored images after capture could yield better results.

-

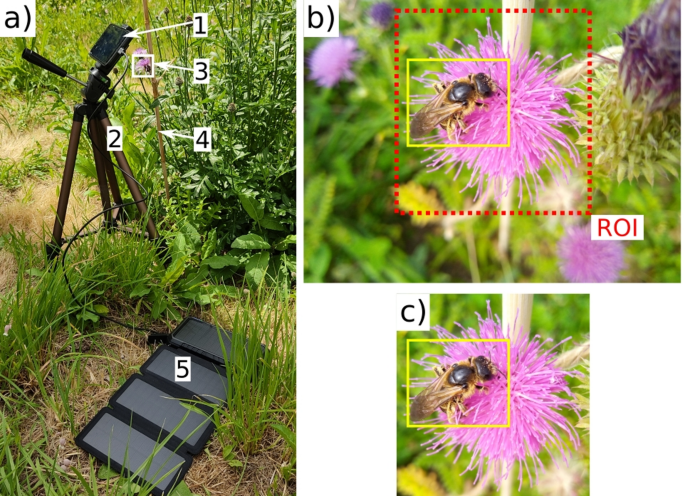

Focusing on Size and Sharpness: Previous research supports the notion that a target arthropod’s visibility is significantly influenced by its size and the image’s sharpness. Therefore, methods to maximize the size of arthropods in images—like employing a predetermined region of interest—will be essential in deepening detection accuracy, particularly in field settings.

- Tiling for Enhanced Localization: Dividing full-frame images into smaller tiles can facilitate more accurate localization of smaller objects without compromising detail. Although this method may increase computational demand, it represents a significant opportunity for improving performance.
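The tiling idea from the list above can be sketched as follows. This is a minimal illustration, not the method of any particular study: the tile size, overlap, and frame dimensions are arbitrary assumptions, and the helper names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def tile_coordinates(width: int, height: int, tile: int, overlap: int) -> List[Box]:
    """Return tile windows that cover a width x height frame."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than the tile size")
    stride = tile - overlap

    def starts(size: int) -> List[int]:
        if size <= tile:
            return [0]
        positions = list(range(0, size - tile, stride))
        positions.append(size - tile)  # final tile sits flush with the frame edge
        return positions

    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in starts(height) for x in starts(width)]


def to_full_frame(box: Box, tile_origin: Tuple[int, int]) -> Box:
    """Shift a box predicted inside a tile back into full-frame coordinates."""
    ox, oy = tile_origin
    return (box[0] + ox, box[1] + oy, box[2] + ox, box[3] + oy)


tiles = tile_coordinates(width=1920, height=1080, tile=640, overlap=0)
print(len(tiles), tiles[-1])
print(to_full_frame((10, 20, 30, 40), tile_origin=tiles[-1][:2]))
```

Each tile would be passed to the detector separately, which is where the additional computational cost comes from. Where tiles overlap, the same arthropod may be detected twice, so the shifted boxes would typically need a final de-duplication pass such as NMS.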

Addressing Misclassification Challenges

A notable concern is the confusion between Hymenoptera and Diptera, driven primarily by mimicry, particularly in hoverflies (family Syrphidae), which often resemble bees. The inherent difficulty in distinguishing these groups suggests that training datasets need to include more diverse examples of both to improve model generalization.
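Confusion of this kind is usually quantified with a confusion matrix, in which each row is a true class and each column a predicted class. The sketch below builds one for the three groups discussed here. The label lists are hypothetical examples, not data from the study.

```python
from collections import Counter
from typing import List, Sequence

CLASSES = ["Hymenoptera", "Diptera", "OtherT"]


def confusion_matrix(true_labels: Sequence[str],
                     predicted_labels: Sequence[str]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes, in CLASSES order."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in CLASSES] for t in CLASSES]


# Hypothetical labels: two Diptera (e.g. bee-mimicking hoverflies) predicted as Hymenoptera.
true = ["Hymenoptera", "Hymenoptera", "Diptera", "Diptera", "Diptera", "OtherT"]
pred = ["Hymenoptera", "Diptera", "Hymenoptera", "Hymenoptera", "Diptera", "OtherT"]

matrix = confusion_matrix(true, pred)
for name, row in zip(CLASSES, matrix):
    print(f"{name:12s} {row}")
```

Off-diagonal entries in the Hymenoptera and Diptera rows show how often the two orders are mistaken for each other, which makes it easier to judge whether added training examples reduce mimicry-driven errors.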

Future Directions in Model Development

Several proposed advances could address these challenges, including citizen-science platforms that encourage the upload of cropped insect images. Such community involvement can enrich datasets and allow finer-grained classification.

Multi-view classification, which uses sequential frames to improve identification accuracy, is another promising direction. This approach mimics the methodology used by taxonomists and allows recognition even when the insect is occluded in some images.
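One simple way to combine sequential frames is to average the per-frame class probabilities for a tracked individual and pick the class with the highest mean. This fusion rule is an assumption made for illustration, since the text does not specify how the views would be combined. The probabilities are invented.

```python
from typing import Dict, List, Tuple


def aggregate_track(frame_probs: List[Dict[str, float]]) -> Tuple[str, Dict[str, float]]:
    """Average class probabilities over frames and return (best class, mean probabilities)."""
    if not frame_probs:
        raise ValueError("at least one frame is required")
    totals: Dict[str, float] = {}
    for probs in frame_probs:
        for label, p in probs.items():
            totals[label] = totals.get(label, 0.0) + p
    n = len(frame_probs)
    mean = {label: total / n for label, total in totals.items()}
    best = max(mean, key=mean.get)
    return best, mean


# Hypothetical classifier outputs for one insect seen in three consecutive frames.
track = [
    {"Hymenoptera": 0.55, "Diptera": 0.45},  # ambiguous view
    {"Hymenoptera": 0.30, "Diptera": 0.70},
    {"Hymenoptera": 0.40, "Diptera": 0.60},
]

label, mean_probs = aggregate_track(track)
print(label, {k: round(v, 3) for k, v in mean_probs.items()})
```

In this example the first frame alone would favor Hymenoptera, while the averaged evidence across all three frames favors Diptera. Majority voting or confidence-weighted averaging are alternatives with the same structure.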

Ultimately, combining different deep learning strategies with advances in computer vision can provide fresh insights for monitoring pollinator populations and help keep biodiversity a priority. By refining these approaches, we can track these vital species more efficiently and support better-informed conservation efforts.