Flocculation Dynamics and Knowledge Embedding

Understanding Flocculation Dynamics

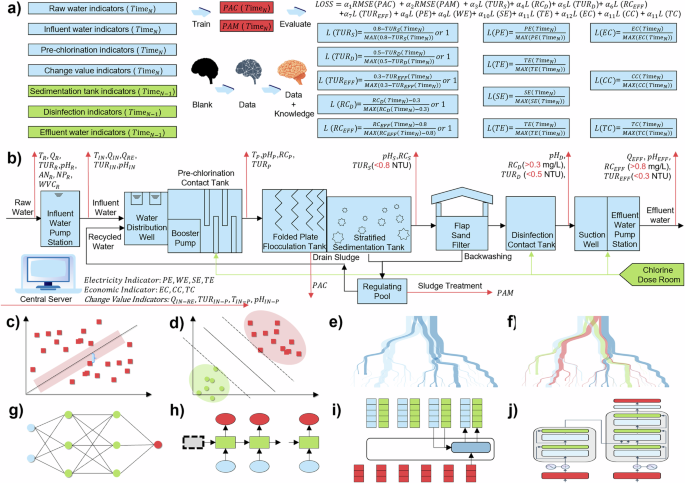

Flocculation is a crucial process in various environmental and industrial applications, particularly water treatment, where it aids in the removal of suspended solids. The dynamics of flocculation can be understood through two primary mechanisms: perikinetic and orthokinetic flocculation.

- Perikinetic Flocculation: This is driven by Brownian motion, the random thermal movement of particles in a fluid. Particle collisions arise within the system itself and are largely independent of external influences such as mixing. Particle size does not directly determine whether perikinetic flocculation occurs, but larger particles are less affected by Brownian motion because they diffuse more slowly.

- Orthokinetic Flocculation: In contrast, this involves particle aggregation due to external forces such as hydraulic or mechanical agitation. This external energy promotes collisions between larger particles, enhancing their likelihood of aggregation.

In real-world scenarios, both mechanisms operate simultaneously within water bodies, leading to complex interactions that resist straightforward mathematical modeling. The interplay between particle size, collision efficiency (denoted η), and changes in water quality makes it difficult to determine the optimal flocculant dosage accurately. Machine learning (ML) techniques, which can learn nonlinear relationships from diverse datasets, are therefore increasingly being explored to address these challenges.
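To make the contrast between the two mechanisms concrete, the sketch below uses the classical Smoluchowski collision frequency functions for Brownian and shear-driven collisions. These formulas are not taken from the study; they are a textbook idealization (spherical particles, uniform laminar shear), and the temperature, viscosity, and velocity gradient values are assumptions chosen only for illustration. In such a formulation, the collision efficiency η would scale these rates to give the rate of successful attachments.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def perikinetic_kernel(d_i, d_j, temp_k=293.15, mu=1.0e-3):
    """Brownian (perikinetic) collision frequency function, m^3/s.

    d_i, d_j are particle diameters in metres; mu is the dynamic
    viscosity in Pa*s (default is an assumed value for water near 20 C).
    """
    return (2.0 * K_B * temp_k / (3.0 * mu)) * (d_i + d_j) ** 2 / (d_i * d_j)


def orthokinetic_kernel(d_i, d_j, shear_rate):
    """Shear-driven (orthokinetic) collision frequency function, m^3/s.

    shear_rate is the velocity gradient G in 1/s.
    """
    return shear_rate * (d_i + d_j) ** 3 / 6.0


def crossover_diameter(shear_rate, temp_k=293.15, mu=1.0e-3):
    """Diameter (m) at which both kernels are equal for equal-size particles."""
    return (2.0 * K_B * temp_k / (mu * shear_rate)) ** (1.0 / 3.0)


G = 50.0  # assumed velocity gradient, 1/s
for d in (0.1e-6, 1.0e-6, 10.0e-6):
    print(d, perikinetic_kernel(d, d), orthokinetic_kernel(d, d, G))
print("crossover diameter (m):", crossover_diameter(G))
```

For equal-size particles the Brownian kernel is independent of diameter, while the shear kernel grows with the cube of diameter, which is one way to see why agitation matters mainly for larger particles.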

Embedding Environmental Knowledge into Models

To enhance the interpretability of machine learning models in flocculation processes, embedding relevant environmental knowledge is pivotal. The entire flocculation sequence typically follows a logical chain that includes:

- Addition of Flocculant: Initiates the chemical reactions necessary for flocculation.

- Change in Kinetics: Modifies the dynamic behavior of particles.

- Particle Flocculation: Leads to the aggregation of particles.

- Change in Water Quality: Affects downstream processing and effluent quality.

During the modeling phase, economically relevant indicators, including energy consumption (e.g., electricity usage) and cost metrics, serve as constraints when optimizing model parameters. In practice, the models are trained on historical water quality metrics from specific stages of the treatment process, using both independent variables and constraints to support real-time predictions and adjustments.
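One simple way to picture how a cost indicator and a water quality target can act together is a search over candidate doses: keep only the doses whose predicted turbidity meets a limit, then pick the cheapest. This is a minimal sketch of that idea, not the study's optimization procedure. The function names, the `toy_predictor` stand-in for a trained model, the turbidity limit, and the unit cost are all hypothetical.

```python
def cheapest_feasible_dose(predict_turbidity, candidate_doses, turbidity_limit, unit_cost):
    """Return (dose, cost, predicted_turbidity) for the cheapest candidate
    dose whose predicted turbidity meets the limit, or None if none does."""
    feasible = []
    for dose in candidate_doses:
        turbidity = predict_turbidity(dose)
        if turbidity <= turbidity_limit:
            feasible.append((dose * unit_cost, dose, turbidity))
    if not feasible:
        return None
    cost, dose, turbidity = min(feasible)  # lowest cost first
    return dose, cost, turbidity


def toy_predictor(dose_mg_l):
    # Hypothetical stand-in for a trained model: turbidity falls as dose rises.
    return 5.0 / (1.0 + dose_mg_l)


doses = range(0, 11)  # candidate doses in mg/L (assumed grid)
print(cheapest_feasible_dose(toy_predictor, doses, turbidity_limit=1.0, unit_cost=0.5))
```

Returning `None` when no candidate is feasible keeps the caller from silently applying a dose that violates the limit.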

Data Collection and Treatment Processes

The data for this study were collected from a Drinking Water Treatment Plant (DWTP) in Guangzhou, China. This facility, designed to supply 800,000 tons of water per day, typically operates at around 450,000 tons per day. The plant employs chlorine disinfection as a vital step in water treatment, utilizing several pre-chlorination methods.

Specific flocculants, such as Polymerized Aluminum Chloride (PAC), were assessed for their efficacy at various stages. The plant adheres to stringent national water quality standards, with controls established throughout the treatment phases, particularly for turbidity and residual chlorine levels.

To improve operational efficiency, the study incorporated comprehensive data collection encompassing 38 different parameters that can be monitored in real time, covering raw water, influent, sedimentation, disinfection, and effluent stages. This multifaceted data approach facilitates a more robust understanding of how various treatment processes interconnect.

Machine Learning Principles

To assess the potential of ML in optimizing flocculation processes, eight different algorithms were evaluated, including:

- Ridge Regression (RIDGE): Baseline model leveraging linear regression with regularization.

- Support Vector Regression (SVR): Capable of fitting both linear and nonlinear data.

- Random Forest (RF): An ensemble method tapping into bagging for improved accuracy.

- Extreme Gradient Boosting (XG): An ensemble technique based on boosting rather than bagging.

- Deep Neural Networks (DNN): Multi-layered neural networks designed to capture complex relationships.

- Recurrent Neural Networks (RNN): Effective for time-dependent data, but prone to vanishing gradients.

- Long Short-Term Memory Networks (LSTM): A variant of RNNs that manages information flow more effectively.

- Transformers (TF): Advanced models focusing on global context through self-attention mechanisms.

By testing these models against historical data, the research aims to pinpoint which technique not only forecasts flocculation performance with high accuracy but also enhances operational efficiency.
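As a rough illustration of how a baseline such as ridge regression might be tested against historical data, the sketch below fits a one-feature ridge model in closed form and scores it on the most recent part of a time-ordered record. It is an assumption-laden toy: the data are synthetic, the single feature and the 80/20 chronological split are illustrative choices, and none of the function names come from the study.

```python
import math


def fit_ridge_1d(xs, ys, alpha):
    """Closed-form ridge fit for one feature with an unpenalized intercept."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + alpha)  # alpha > 0 shrinks the slope toward zero
    intercept = y_mean - slope * x_mean
    return slope, intercept


def rmse(xs, ys, slope, intercept):
    """Root mean squared error of the linear prediction."""
    return math.sqrt(sum((intercept + slope * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def chronological_split(xs, ys, train_fraction=0.8):
    """Split time-ordered data so that the test set is the most recent part."""
    cut = int(len(xs) * train_fraction)
    return xs[:cut], ys[:cut], xs[cut:], ys[cut:]


# Synthetic, time-ordered record: raw-water turbidity vs. applied dose (made up).
turbidity = [float(t) for t in range(1, 21)]
dose = [1.0 + 2.0 * t + (0.5 if i % 2 else -0.5) for i, t in enumerate(turbidity)]

x_tr, y_tr, x_te, y_te = chronological_split(turbidity, dose)
for alpha in (0.0, 10.0, 100.0):
    slope, intercept = fit_ridge_1d(x_tr, y_tr, alpha)
    print(alpha, slope, intercept, rmse(x_te, y_te, slope, intercept))
```

Splitting by time rather than at random avoids scoring the model on records that precede its training data, which matters for the sequence models in the list as well.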

Model Interpretability

Shapley Additive Explanations (SHAP), a game-theory-based interpretation technique, provides detailed insight into how individual features contribute to model predictions. SHAP enhances transparency, revealing critical relationships and guiding model adjustments to mitigate biases and improve error detection.
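The idea behind Shapley-based attribution can be shown on a toy model by computing exact Shapley values: average each feature's marginal contribution over every order in which features could be switched from a baseline value to the actual value. This is a minimal sketch of the underlying definition only. SHAP tooling uses faster approximations and a background dataset rather than a single baseline, and the toy model below is hypothetical.

```python
from itertools import permutations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    Enumerates all feature orderings, so it is only practical for a
    handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for order in permutations(range(n)):
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = x[i]          # switch feature i to its actual value
            value = model(current)
            phi[i] += value - previous  # marginal contribution in this ordering
            previous = value
    n_orders = factorial(n)
    return [p / n_orders for p in phi]


def toy_model(features):
    # Hypothetical model with one additive term and one interaction term.
    a, b, c = features
    return 2.0 * a + b * c


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi, sum(phi), toy_model(x) - toy_model(baseline))
```

The attributions sum to the difference between the prediction and the baseline prediction, and the interaction term is shared equally between the two features that produce it.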

Moreover, analyzing subtree depths in Random Forest models provides additional interpretative benefits, enabling a balance between complexity and transparency. This can aid in diagnosing overfitting or underfitting, making the model’s decision-making process easier to understand and communicate to stakeholders.

Application Feasibility Verification

The practical applicability of the developed models extends into real-world settings, as demonstrated by a validation process at the DWTP utilizing scaled-down flocculation tanks. The design strategy and testing under controlled conditions bolster the confidence in the model’s predictions.

In the rigorous validation phase, performance indicators were monitored against established thresholds, ensuring compliance with environmental standards while also focusing on cost-effectiveness associated with chemical usage.

Finally, Monte Carlo simulations were used to explore the robustness of the model under varying degrees of missing data, supporting a comprehensive risk assessment. This methodology simulates real-world scenarios, thereby preparing operators and decision-makers for potential uncertainties in water quality monitoring and treatment processes.
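A robustness check of this kind can be sketched as follows: repeatedly pick a record, hide each feature with some probability, fill the gaps with column means, and measure how far the prediction moves. This is only an illustration under assumed choices. The study's simulation design is not described here, and the toy model, the three features, the mean imputation, and the sample rows are all hypothetical.

```python
import random
import statistics


def toy_model(features):
    # Hypothetical stand-in for a trained dosage model.
    raw_turbidity, ph, flow = features
    return 0.8 * raw_turbidity + 2.0 * (ph - 7.0) + 0.01 * flow


def simulate_missing(model, rows, fill_values, missing_rate, n_trials, seed=0):
    """Mean absolute prediction shift when features are randomly masked
    and replaced by fill_values (here, column means)."""
    rng = random.Random(seed)  # fixed seed for reproducible runs
    shifts = []
    for _ in range(n_trials):
        row = rng.choice(rows)
        masked = [
            fill if rng.random() < missing_rate else value
            for value, fill in zip(row, fill_values)
        ]
        shifts.append(abs(model(masked) - model(row)))
    return statistics.fmean(shifts)


rows = [(5.0, 7.2, 400.0), (12.0, 6.8, 450.0), (30.0, 7.5, 500.0)]  # made-up records
column_means = [statistics.fmean(col) for col in zip(*rows)]

for rate in (0.0, 0.1, 0.3, 0.5):
    print(rate, simulate_missing(toy_model, rows, column_means, rate, n_trials=2000))
```

Plotting the shift against the missing rate gives a rough picture of how quickly predictions degrade as sensors drop out.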

Through these advanced techniques, the combined efforts of ML and environmental science pave the way for more efficient, robust, and interpretable water treatment methodologies.