Enhancing Sign Language Recognition with the AHDLMFF-ASLR Model

Advances in communication technology have produced new models for Sign Language Recognition (SLR), intended to support deaf and speech-impaired individuals. One such model is AHDLMFF-ASLR, a three-stage method for real-time gesture interpretation.

Overview of the AHDLMFF-ASLR Model

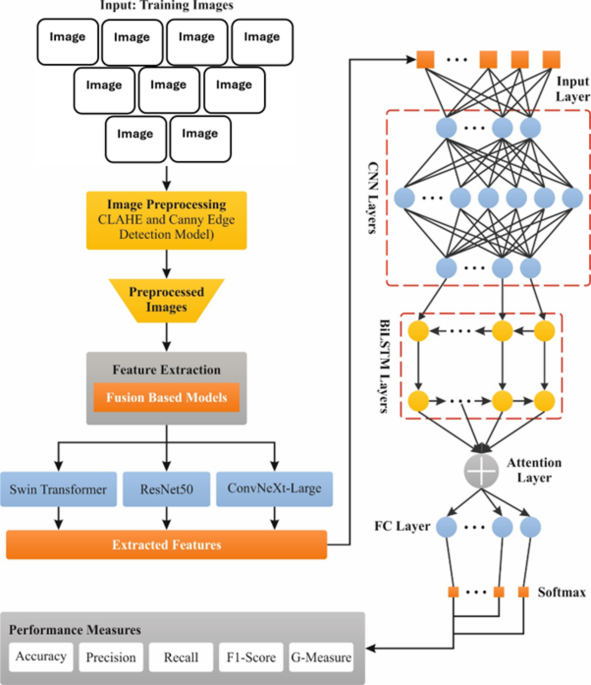

The AHDLMFF-ASLR model aims to improve SLR through three stages: image pre-processing, fusion of feature extraction methods, and hybrid classification. Figure 1 shows the overall flow of the approach.

Image Pre-Processing Technique

The pre-processing stage is essential for enhancing the input quality of the model. At the heart of this process is the Contrast Limited Adaptive Histogram Equalization (CLAHE) method combined with Canny Edge Detection (CED).

- CLAHE: This technique is chosen for its ability to improve local contrast without introducing excessive noise, which is common with global histogram equalization methods. The model effectively adapts to varying lighting conditions, enhancing the visibility of hand gestures crucial for SLR.

- Canny Edge Detection: CED defines the edges of the gestures, which are critical for recognizing movement and intention. It smooths the image with a Gaussian filter, computes intensity gradients, and then keeps only thin, well-supported edges through non-maximum suppression and hysteresis thresholding, which minimizes the impact of noise.

Both techniques work in unison to elevate the quality of input images, making the subsequent feature extraction process more precise.
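The contrast-limiting idea behind CLAHE can be sketched for a single image tile in plain Python. This is a simplified illustration, not the authors' implementation: the function name `clipped_equalize`, the `clip_limit` value, and the toy tile are assumptions, and a full CLAHE additionally splits the image into a grid of tiles and interpolates between neighbouring tile mappings, which is omitted here.

```python
def clipped_equalize(tile, clip_limit=2.0, levels=256):
    """Contrast-limited histogram equalization of ONE tile.

    `tile` is a list of rows of integer intensities in [0, levels).
    Hypothetical helper for illustration only.
    """
    pixels = [p for row in tile for p in row]
    n = len(pixels)

    # 1. Histogram of the tile.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # 2. Clip every bin at clip_limit times the mean bin height
    #    and collect the clipped-off excess.
    ceiling = max(1, int(clip_limit * n / levels))
    excess = 0
    for i in range(levels):
        if hist[i] > ceiling:
            excess += hist[i] - ceiling
            hist[i] = ceiling

    # 3. Spread the excess evenly over all bins so the total count
    #    stays n. This is what limits noise amplification.
    share, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += share + (1 if i < remainder else 0)

    # 4. Build the intensity mapping from the cumulative histogram.
    lut = []
    running = 0
    for count in hist:
        running += count
        lut.append(round((levels - 1) * running / n))

    return [[lut[p] for p in row] for row in tile]


# A low-contrast toy tile: all intensities sit between 100 and 115.
tile = [
    [100, 101, 102, 103],
    [104, 105, 106, 107],
    [108, 109, 110, 111],
    [112, 113, 114, 115],
]
enhanced = clipped_equalize(tile)
```

The mapping is monotonic, so the relative ordering of intensities is preserved while the narrow input range is stretched over most of the available levels. In practice a library routine would normally be used for both CLAHE and Canny edge detection rather than hand-written code.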

Fusion of Feature Extraction Methods

Once the images are pre-processed, the next step involves the fusion of multiple feature extraction methods, specifically the Swin Transformer (ST), ConvNeXt-Large, and ResNet50 models.

- Swin Transformer (ST): This model excels in understanding spatial context through its hierarchical framework, which captures long-range dependencies vital for recognizing complex gestures.

- ConvNeXt-Large: A modern convolutional architecture that improves accuracy while maintaining computational efficiency. It systematically discards less significant features and prioritizes those that contribute most to the task.

- ResNet50: Known for its residual learning framework, ResNet50 stabilizes the training process for deeper networks, ensuring that essential features are preserved.

The combination of these models results in a rich, multiscale feature representation that enhances the overall recognition accuracy of the system.
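The text does not state how the three feature sets are combined, so the following is only one plausible reading: each backbone's feature vector is L2-normalized and the results are concatenated. The function names and the tiny toy vectors are assumptions for illustration; real backbone outputs have hundreds or thousands of dimensions.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so no backbone dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_features(*feature_vectors):
    """Concatenate L2-normalized feature vectors (assumed fusion rule)."""
    fused = []
    for vec in feature_vectors:
        fused.extend(l2_normalize(vec))
    return fused


# Toy stand-ins for the pooled outputs of the three backbones.
swin_feat = [3.0, 4.0]
convnext_feat = [1.0, 0.0, 0.0]
resnet_feat = [0.0, 2.0]

fused = fuse_features(swin_feat, convnext_feat, resnet_feat)
print(len(fused))  # 7
```

Normalizing before concatenation is a common design choice when features come from different architectures, because their raw activation scales can differ widely. Other fusion rules, such as weighted sums or learned projections, would fit the description equally well.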

Hybrid Classification Model

The final stage uses a hybrid classifier based on the C-BiL-A technique, which combines convolutional layers, BiLSTM units, and an attention mechanism to capture both spatial and temporal dependencies in sign language sequences.

- Convolutional Layers: These layers extract local spatial features, crucial for understanding distinct gestures.

- BiLSTM Units: The bidirectional Long Short-Term Memory units provide context by considering both past and future frames in a sequence, which makes them particularly effective for dynamic gestures.

- Attention Mechanisms: By incorporating attention mechanisms, the model dynamically learns the significance of different time steps, allowing it to focus on the most relevant aspects of the gesture.

This hybrid approach ensures a robust classification process that accommodates the complexities inherent in sign language, thereby improving overall accuracy.
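The attention step can be illustrated with a minimal sketch of attention pooling over time steps. The scoring function is not specified in the text, so dot-product scoring against a query vector is an assumption here, as are the names `attention_pool`, `hidden_states`, and `query`. In a trained model the hidden states would come from the BiLSTM and the query would be learned.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Weight each time step by its relevance and sum them.

    hidden_states: one vector per time step (e.g. BiLSTM outputs).
    query: vector used to score each step (assumed dot-product scoring).
    Returns the per-step weights and the pooled context vector.
    """
    scores = [sum(h_d * q_d for h_d, q_d in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return weights, context


# Three toy time steps; the middle one aligns best with the query.
hidden_states = [[0.1, 0.0], [2.0, 1.0], [0.2, 0.1]]
query = [1.0, 1.0]
weights, context = attention_pool(hidden_states, query)
```

The weights sum to one, and the time step with the highest score contributes most to the context vector that a final classification layer would consume.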

Throughout this model’s various stages, emphasis is placed on the preservation of essential details during image processing and feature extraction while maintaining computational efficiency. This leads to a system adept at interpreting the nuanced expressions of sign language, ultimately facilitating improved communication for the deaf and speech-impaired community. The AHDLMFF-ASLR’s multifaceted approach marks a significant step towards enhancing accessibility and understanding in communication.