Advancing Liver Transplant Outcomes with Machine Learning: The TRIUMPH Model

In liver transplantation (LT), especially for patients with hepatocellular carcinoma (HCC), the accuracy of risk prediction models can meaningfully affect outcomes. A recent study validated a new machine learning-based risk prediction model, TRIUMPH, in an international multicenter external cohort. The findings indicate that TRIUMPH not only discriminates better than existing models such as HALT-HCC, MORAL, and AFP but also shows greater clinical utility in net benefit analysis.

What Makes the TRIUMPH Model Stand Out?

The efficacy of the TRIUMPH model can be attributed to two primary factors. First, it was developed using a large cohort of liver transplant recipients from the University Health Network, approximately 20% of whom underwent living donor liver transplantation (LDLT). In contrast, established models such as HALT-HCC, MORAL, and AFP were primarily based on deceased donor liver transplantation (DDLT) cohorts. Including a substantial LDLT population allows for a more balanced assessment of LT recipients and improves generalizability. This diversity is reflected in TRIUMPH’s performance in subgroups such as LDLT recipients and patients from Kaohsiung Chang Gung Memorial Hospital.

Second, the machine learning methodology underlying TRIUMPH allows for the identification of multiple associations and the incorporation of a wider variety of risk factors. This model evaluates not only morphological characteristics, such as the size and number of lesions, but also integrates dynamic clinical parameters like alpha-fetoprotein (AFP) levels and neutrophil counts. Particularly noteworthy is its ability to account for dynamic changes that occur during bridging therapies while patients are on the waitlist—a critical aspect for developing effective selection criteria for transplantation.

Comparative Performance Against Established Models

Compared with existing models, TRIUMPH shows promising results. Although HALT-HCC's performance was statistically noninferior, TRIUMPH performed numerically better, especially among LDLT recipients and non-US populations. This highlights how regional practices and patient characteristics can affect model performance. For instance, HALT-HCC, developed at the Cleveland Clinic, performed best in US validation centers. TRIUMPH’s stronger performance in international settings is likely due to its diverse development cohort and its machine learning approach, which captures a wide range of potential predictors.
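To make "discrimination" concrete: for time-to-event outcomes such as recurrence, it is commonly summarized with a concordance index, the share of comparable patient pairs in which the patient who had the event earlier was also assigned the higher predicted risk. The sketch below is a simplified Harrell-style version for illustration only. The source does not state which statistic the study used, and the function name and sample values are hypothetical.

```python
from typing import Sequence


def concordance_index(
    times: Sequence[float],
    events: Sequence[int],
    risks: Sequence[float],
) -> float:
    """Simplified Harrell-style concordance index (illustrative only).

    times  : follow-up time for each patient
    events : 1 if the event (e.g. recurrence) was observed, 0 if censored
    risks  : predicted risk score, higher meaning higher expected risk
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if events[i] != 1:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            # A pair is comparable when patient i had the event
            # strictly before patient j's last follow-up time.
            if times[i] < times[j]:
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5  # tied predictions count as half
    if comparable == 0:
        raise ValueError("no comparable pairs in the data")
    return concordant / comparable


if __name__ == "__main__":
    # Hypothetical toy data, not taken from the study.
    times = [5, 10, 15, 20]
    events = [1, 1, 0, 1]
    risks = [0.9, 0.6, 0.7, 0.2]
    print(concordance_index(times, events, risks))
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering, so comparing two models on the same cohort amounts to comparing these values.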

In terms of clinical utility, TRIUMPH excelled in net benefit decision analysis. This analysis weighs true positives (which help prevent futile transplants) against false positives (which could deny transplants to potentially curable patients). Across a range of realistic risk thresholds, which reflect each patient’s likelihood of receiving a transplant based on waitlist status and organ availability, TRIUMPH provided higher net benefit, particularly at thresholds from 0.0 to 0.6.
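As a rough illustration of how net benefit is usually computed in decision curve analysis: at a risk threshold p_t, net benefit = TP/n − (FP/n) × p_t/(1 − p_t), where TP and FP count patients flagged as high risk who did and did not have the event. The sketch below assumes this standard formula. The source does not describe the study's exact computation, and the function names and sample data are hypothetical.

```python
from typing import Sequence


def net_benefit(
    y_true: Sequence[int],
    y_prob: Sequence[float],
    threshold: float,
) -> float:
    """Net benefit of flagging patients whose predicted risk >= threshold.

    y_true : 1 if the event (e.g. recurrence) occurred, else 0
    y_prob : predicted event probability from the model
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= threshold and y == 0)
    # False positives are weighted by the odds of the threshold.
    weight = threshold / (1.0 - threshold)
    return tp / n - (fp / n) * weight


def net_benefit_flag_all(y_true: Sequence[int], threshold: float) -> float:
    """Reference strategy: flag every patient as high risk."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


if __name__ == "__main__":
    # Hypothetical toy data, not taken from the study.
    y_true = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
    y_prob = [0.9, 0.7, 0.4, 0.2, 0.6, 0.1, 0.3, 0.8, 0.35, 0.05]
    for pt in (0.1, 0.3, 0.5):
        model_nb = net_benefit(y_true, y_prob, pt)
        all_nb = net_benefit_flag_all(y_true, pt)
        print(f"threshold={pt:.1f} model={model_nb:.3f} flag_all={all_nb:.3f}")
```

Plotting these values over a range of thresholds for each model gives a decision curve. A model is more useful at a given threshold when its net benefit exceeds both that of competing models and that of the flag-all and flag-none (net benefit 0) strategies.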

A Step Towards Integrating Machine Learning in Organ Allocation

Machine learning is beginning to find its place in organ allocation. For instance, the Optimized Prediction of Mortality (OPOM), recently evaluated by the OPTN/UNOS, aims to refine risk stratification for HCC patients. However, OPOM is limited to predicting waitlist dropout and doesn’t account for the pivotal prognostic factor of post-transplant survival. The TRIUMPH model, on the other hand, stands out by predicting not only waitlist outcomes but also post-transplant recurrence risk, making it a potential complementary tool in organ allocation protocols.

TRIUMPH is not the only machine learning-based model in this domain: three others (MoRAL-AI, RELAPSE, and TRAIN-AI) have also been developed. Its holistic approach, however, supports pre-transplant decision-making based on the data available at that point. MoRAL-AI focuses on specific variables like tumor diameter and AFP but has limited generalizability due to its emphasis on LDLT recipients in South Korea. Meanwhile, RELAPSE and TRAIN-AI have their strengths but differ significantly in methodology, which affects their performance and applicability.

Limitations and Future Directions

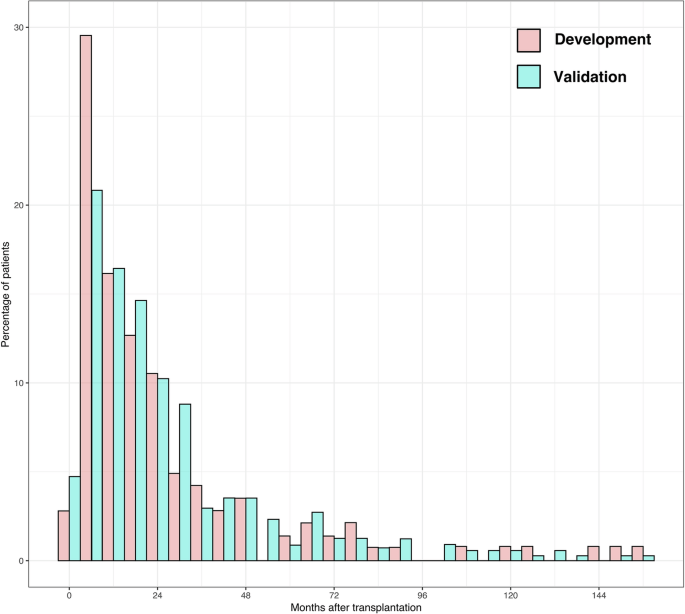

Despite the promising validation results, the study identified several limitations. Because it was a multicenter retrospective analysis, there is an inherent risk of selection bias, particularly given the varied management strategies across centers. Moreover, differences between the Toronto development cohort and the validation cohort may have further influenced the outcomes, particularly the differing prevalence of hepatitis B virus (HBV) and the differing proportions of locally advanced HCC.

Incorporating data from a diverse range of international centers in future TRIUMPH model iterations could improve its generalizability and address regional biases that may exist. The intentional merging of data from both living and deceased donor grafts serves to reflect real-world practices, providing a unified tool for guiding decisions across various transplantation scenarios.

Though models focusing on either living or deceased donor transplantation alone exist, they are less appealing for a system that demands a flexible, comprehensive solution covering both pathways. It’s worth noting that existing literature indicates no direct correlation between graft quality and oncological outcomes in as-treated analyses.

While TRIUMPH represents a significant advancement in predictive modeling for liver transplantation, there remains a need for continued development, validation, and consensus within the transplant community. Future advancements in machine learning could lead to more robust models capable of accommodating the nuanced landscapes of transplant medicine, ultimately enhancing patient outcomes on a global scale.