Exploring Music Genre Classification Using Hybrid Models

Introduction

Classifying music by genre is a multi-class classification problem: sound features are extracted from each recording and then mapped to one of several genre labels. The quality of the extracted features largely determines how well the classifier can separate genres. This article describes a hybrid method for the task: a SqueezeNet classifier optimized by Promoted Ideal Gas Molecular Motion (PIGMM).

Defining Multi-Class Classification in Music Genre

Music genre classification assigns each musical recording to one of several predefined groups based on shared characteristics. The training data consists of samples that pair a data point (a set of sound features) with a label (a genre such as rock, classical, jazz, or hip-hop). The aim is to learn a function f that maps data points to their labels and generalizes to recordings it has not seen. Here, SqueezeNet, a compact deep convolutional neural network, plays the role of f.

SqueezeNet: A Lightweight Deep Learning Model

SqueezeNet was designed for image classification, but it can be applied to audio classification when the audio is represented as spectrograms. Its defining goal is to keep accuracy high while sharply reducing the number of parameters. It does this by replacing most conventional convolutional layers with compact fire modules. Each fire module consists of a squeeze layer of 1×1 convolutions, which reduces the number of channels, followed by an expand layer that increases the channel count again.

Fire modules keep computation cheap without a large loss in accuracy. In the expand layer, a bank of 1×1 convolutions runs in parallel with a bank of 3×3 convolutions, and their outputs are concatenated along the channel dimension rather than summed, which lets the model pick up patterns at two spatial scales. This design carries over to audio because a spectrogram is a two-dimensional time-frequency representation, a format comparable to a grayscale image.

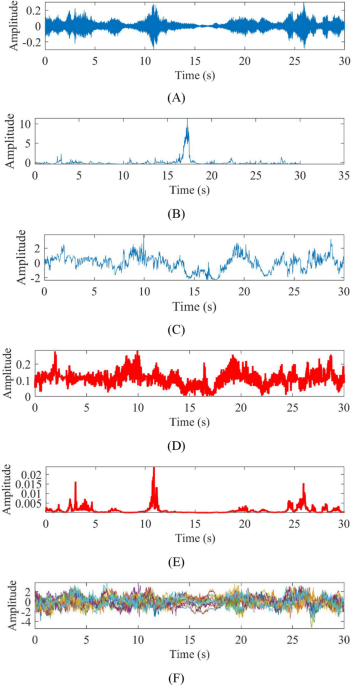
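
To make the size reduction concrete, the sketch below counts the parameters of one fire module and compares it with a single 3×3 convolution that produces the same number of output channels. The channel sizes are illustrative assumptions, not values taken from this article, and the helper names are hypothetical.

```python
def conv_params(in_ch, out_ch, kernel):
    """Weights plus biases of a 2-D convolution with a square kernel."""
    return in_ch * out_ch * kernel * kernel + out_ch


def fire_params(in_ch, squeeze, expand1x1, expand3x3):
    """Parameters of one fire module: a 1x1 squeeze layer followed by
    two parallel expand branches (1x1 and 3x3) fed by the squeeze output."""
    squeeze_p = conv_params(in_ch, squeeze, 1)
    expand1_p = conv_params(squeeze, expand1x1, 1)
    expand3_p = conv_params(squeeze, expand3x3, 3)
    return squeeze_p + expand1_p + expand3_p


# Assumed channel sizes, chosen only for illustration.
in_ch, squeeze, e1, e3 = 96, 16, 64, 64

fire = fire_params(in_ch, squeeze, e1, e3)
# A plain 3x3 convolution with the same output width (e1 + e3 channels).
plain = conv_params(in_ch, e1 + e3, 3)

print(fire, plain, round(plain / fire, 1))  # 11920 110720 9.3
```

With these sizes the fire module needs roughly nine times fewer parameters, mostly because the expensive 3×3 filters see only the 16 squeezed channels instead of all 96 input channels.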

Training the Model Using Spectrograms

SqueezeNet is trained on spectrograms paired with genre labels. A spectrogram is a visual representation of sound that shows how energy is distributed across frequencies over time. The model learns features from these spectrograms and uses them to recognize the sonic patterns that distinguish genres. Deep supervision, in which auxiliary classification outputs are attached to intermediate layers and contribute to the training loss, is used to further improve accuracy.

Data augmentation, which generates additional training spectrograms from the existing ones, improves the network’s ability to generalize. This prepares the model for variation in recording conditions and audio content.
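
The article does not say which augmentation is used. One common option, shown here purely as an assumption, is to zero out a random band of frequency bins and a random band of time frames in each spectrogram. The function name, parameters, and toy input below are hypothetical.

```python
import random


def mask_spectrogram(spec, max_freq_width=2, max_time_width=3, rng=None):
    """Return a copy of `spec` (a list of frequency rows, each a list of
    time frames) with one frequency band and one time band set to zero."""
    rng = rng or random.Random()
    n_freq, n_time = len(spec), len(spec[0])
    out = [row[:] for row in spec]  # copy so the original stays intact

    # Zero a random band of frequency bins (rows).
    f_width = rng.randint(0, min(max_freq_width, n_freq))
    f_start = rng.randint(0, n_freq - f_width)
    for f in range(f_start, f_start + f_width):
        out[f] = [0.0] * n_time

    # Zero a random band of time frames (columns).
    t_width = rng.randint(0, min(max_time_width, n_time))
    t_start = rng.randint(0, n_time - t_width)
    for row in out:
        for t in range(t_start, t_start + t_width):
            row[t] = 0.0
    return out


# Toy 4x6 "spectrogram" filled with ones; a real input would be
# something like a mel spectrogram computed from an audio clip.
spec = [[1.0] * 6 for _ in range(4)]
augmented = mask_spectrogram(spec, rng=random.Random(0))
```

Each call with a different random state yields a different masked copy, so a single clip can contribute several distinct training examples while keeping its genre label.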

Utilizing Performance Indices for Evaluation

Mean Squared Error (MSE) serves as the performance index for the classification model. MSE averages the squared differences between predicted and actual values, and it is the quantity minimized during training. Hyperparameter tuning matters here because the choice of hyperparameters strongly affects the model’s accuracy and robustness. Optimization algorithms, from gradient-based methods such as stochastic gradient descent (SGD) to metaheuristics, are used to drive the MSE toward a minimum.
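
As a minimal sketch of how MSE can be computed for a multi-class problem, the example below compares predicted class probabilities with one-hot encoded genre labels. The one-hot encoding and the sample predictions are assumptions made for illustration; the article does not specify the exact formulation.

```python
def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def mse(predictions, labels, num_classes):
    """Mean squared error between predicted class probabilities and
    one-hot targets, averaged over all samples and classes."""
    total, count = 0.0, 0
    for probs, label in zip(predictions, labels):
        target = one_hot(label, num_classes)
        for p, t in zip(probs, target):
            total += (p - t) ** 2
            count += 1
    return total / count


# Hypothetical model outputs for two clips over three genres.
preds = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
labels = [0, 2]  # true genre index of each clip

error = mse(preds, labels, num_classes=3)
print(round(error, 4))  # 0.0667
```

An optimizer, whether gradient-based or metaheuristic, would treat this value as the objective to minimize.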

Promoted Ideal Gas Molecular Motion (PIGMM)

The PIGMM optimizer tunes the SqueezeNet model using principles inspired by the behavior of gas molecules. Candidate solutions play the role of molecules, and a parameter called Molecule Collision Possibility (MCP) determines how often they collide, which shapes how the solutions adapt as they explore the problem space.

In PIGMM, the velocities and positions of the molecules are updated according to rules modeled on the physics of gas interactions. These updates keep the search exploring the solution space and help it escape local minima, a common difficulty when optimizing deep learning models for music genre classification.

Enhancements Through Chaos Maps and Opposition-Based Learning

To refine the PIGMM optimizer further, chaos maps and opposition-based learning are integrated. A chaos map is a deterministic sequence that behaves unpredictably yet covers its range well, which helps balance exploration and exploitation during optimization. By replacing random values with values drawn from a chaos map, PIGMM aims to improve convergence speed and robustness, which matters when handling high-dimensional audio data.
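
The article does not name the chaos map it uses. The sketch below assumes the logistic map, a widely used choice, to show how a chaotic sequence in [0, 1] can stand in for calls to a random number generator. The function name and starting value are hypothetical.

```python
def logistic_map(x0, n, r=4.0):
    """Generate n values of the logistic map x_{k+1} = r * x_k * (1 - x_k).

    With r = 4 and a start value in (0, 1), the sequence stays in [0, 1]
    and behaves chaotically for typical start values; special values such
    as 0.5 or 0.75 collapse to a fixed point and should be avoided.
    """
    values, x = [], x0
    for _ in range(n):
        x = r * x * (1.0 - x)
        values.append(x)
    return values


# Five chaotic values that could replace five uniform random draws.
seq = logistic_map(0.7, 5)
print([round(v, 4) for v in seq])
```

Inside an optimizer, each value of `seq` would be used wherever the update rule would otherwise call for a uniform random number.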

Opposition-based learning is applied in the initial stage of the search: for each original position, the opposite position within the search bounds is also generated and evaluated. Considering both widens the pool of candidates and improves the chance of starting near a good solution.
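
A minimal sketch of opposition-based initialization follows, using the standard definition of the opposite point, lower + upper - x, in each dimension. Keeping the fitter half of the combined pool is one common selection rule and is an assumption here, as are the toy objective and all function names.

```python
import random


def opposite(position, lower, upper):
    """Opposite point of `position` within per-dimension bounds."""
    return [lo + hi - x for x, lo, hi in zip(position, lower, upper)]


def obl_init(pop_size, lower, upper, fitness, rng=None):
    """Create a random population, add the opposite of every member,
    and keep the `pop_size` candidates with the lowest fitness."""
    rng = rng or random.Random()
    population = [
        [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        for _ in range(pop_size)
    ]
    candidates = population + [opposite(p, lower, upper) for p in population]
    candidates.sort(key=fitness)  # lower fitness is better (minimization)
    return candidates[:pop_size]


def sphere(x):
    """Toy objective standing in for the real training error."""
    return sum(v * v for v in x)


lower, upper = [-5.0, -5.0], [5.0, 5.0]
start = obl_init(4, lower, upper, sphere, rng=random.Random(1))
```

The returned population then serves as the starting point for the main optimization loop.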

Validation of the PIGMM Optimizer

Comparisons with other established optimization algorithms, including the Pelican Optimization Algorithm, the Tunicate Swarm Algorithm, and others, indicate that the PIGMM optimizer is competitive. Across a range of benchmark functions, it performs well and copes with typical optimization hurdles.

Combined with SqueezeNet, PIGMM achieves high classification accuracy and adapts well to the demands of music genre classification. The combination is a promising direction for future research and applications in music technology.

In summary, hybrid models that pair sound feature extraction with an optimized classifier are a promising direction for music genre classification. As methods like PIGMM and SqueezeNet evolve, they can continue to improve how music is analyzed and classified.