The Impact of Text Augmentation on Machine Learning Classification Models

Introduction

In the rapidly evolving field of machine learning, data classification plays an integral role, especially in processing textual information. Recent literature shows a growing interest in applying various machine learning models to data classification tasks. This article explores the effect of text augmentation on classification accuracy using traditional machine learning algorithms rather than more complex deep learning architectures.

Selecting Traditional Machine Learning Models

In our research, we focused on six traditional machine learning algorithms: Multi-Layer Perceptron (MLP), Random Forest (RF), Gradient-Boosted Trees (GBT), K-Nearest Neighbors (kNN), Decision Trees (DT), and Naive Bayes (NB). We chose these models because they let us test efficiently whether text data augmentation improves classification outcomes. Traditional models typically require less training time and less data than deep learning models, which makes them better suited to our investigation.

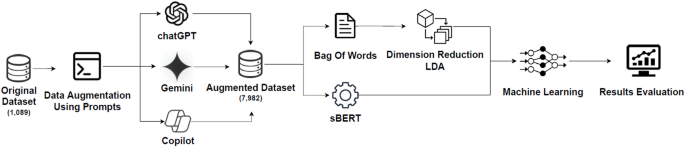

Methodological Framework

For our experiments, we utilized two well-established techniques for transforming text into vector representations: Bag of Words (BoW) and sBERT embeddings. Each model underwent hyperparameter optimization, ensuring a comprehensive approach to our analysis. For a clear overview of the range of hyperparameters optimized, see Table 3.
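To make the BoW representation concrete, the following minimal sketch builds count vectors with the Python standard library only. The function names and the toy corpus are illustrative assumptions, not the code or data used in the study, and tokens are split on whitespace for simplicity.

```python
from collections import Counter

def build_vocabulary(documents):
    """Map each distinct token to a column index, in sorted order."""
    tokens = {token for doc in documents for token in doc.split()}
    return {token: index for index, token in enumerate(sorted(tokens))}

def bag_of_words(document, vocabulary):
    """Return a count vector for one document; unknown tokens are ignored."""
    counts = Counter(document.split())
    vector = [0] * len(vocabulary)
    for token, count in counts.items():
        if token in vocabulary:
            vector[vocabulary[token]] = count
    return vector

# Toy corpus for illustration only, not taken from the study's datasets.
corpus = ["good service good price", "bad service"]
vocab = build_vocabulary(corpus)
vectors = [bag_of_words(doc, vocab) for doc in corpus]
```

Each document becomes a vector with one entry per vocabulary term, which is why the vector length grows with the size of the vocabulary.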

All algorithms were trained and tested using stratified k-fold cross-validation with k = 5, thereby ensuring consistent evaluation conditions. The models’ performance was assessed using four key metrics: accuracy, recall, precision, and F1 score.
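The evaluation setup can be sketched as follows. This is a simplified illustration under stated assumptions: folds are assigned round-robin per class without shuffling, the metrics are computed for a single positive class, and the labels are toy values. The source does not say which implementation was used; in practice a library routine such as scikit-learn's `StratifiedKFold` would be a typical choice.

```python
from collections import defaultdict

def stratified_folds(labels, k=5):
    """Assign each sample index to one of k folds, keeping class ratios similar."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal the indices of each class round-robin across the folds.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds

def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels and predictions for illustration only.
labels = [0] * 10 + [1] * 5
folds = stratified_folds(labels, k=5)
scores = binary_metrics([1, 1, 0, 0], [1, 0, 0, 0])
```

With these toy labels, every fold receives two samples of class 0 and one of class 1, so each test fold mirrors the overall 2:1 class ratio.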

Training Details

Throughout our experiments, a total of 15,296 models were trained and evaluated, with the aim of finding the highest accuracy for each algorithm. The datasets used are detailed in Table 2.

Preliminary Research: Dimensionality Reduction for BoW Models

A significant challenge when processing text data is the high dimensionality of the resulting vectors. Based on prior research by Stefanovic and Kurasova, we implemented various filters to preprocess our textual data. These included removing numbers and punctuation, converting tokens to lowercase, and eliminating stop words, with the minimum token length set to three characters.
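The filters described above can be sketched in a few lines. The stop-word list here is a tiny placeholder and the regular expression assumes ASCII English text; neither is taken from the study, which does not specify its stop-word list or tooling.

```python
import re

# Tiny illustrative stop-word list; the study's actual list is not given.
STOP_WORDS = {"the", "and", "was", "for", "with", "this", "that"}

def preprocess(text, min_length=3):
    """Lowercase, drop digits and punctuation, remove stop words and short tokens."""
    text = text.lower()
    text = re.sub(r"[^a-z\s]", " ", text)  # keep only ASCII letters and whitespace
    return [
        token for token in text.split()
        if len(token) >= min_length and token not in STOP_WORDS
    ]

# Made-up example sentence.
tokens = preprocess("The delivery was 2 days late, and the box arrived damaged!")
```

Every filter removes tokens, so the vocabulary, and with it the BoW vector length, shrinks before any further dimensionality reduction is applied.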

Initially, the BoW technique produced vectors with approximately 10,000 dimensions. Such high dimensionality can slow model training considerably, especially during hyperparameter optimization. Consequently, we conducted preliminary research using Latent Semantic Analysis (LSA) to reduce dimensionality, a common practice in high-dimensional data analysis.
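As a reminder of what LSA does, the reduction can be written as a truncated singular value decomposition. The notation below is our own shorthand, not the source's: X is the document-term matrix with n documents and d terms (d is roughly 10,000 here), and k is the target dimensionality, reported as 40 in the preliminary experiments.

```latex
X \in \mathbb{R}^{n \times d}, \qquad
X \approx U_k \Sigma_k V_k^{\top}, \qquad
Z = X V_k = U_k \Sigma_k \in \mathbb{R}^{n \times k}, \qquad k = 40
```

Here U_k and V_k hold the first k left and right singular vectors of X, Σ_k holds the k largest singular values, and each row of Z is the reduced representation of one document that is passed to the classifier.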

Experimenting with Dimensionality

In this preliminary phase, we trained MLP, RF, and kNN models both with and without dimensionality reduction. We found that reducing the vectors to 40 dimensions significantly improved accuracy for MLP and kNN, while RF accuracy remained stable. A paired t-test indicated statistically significant differences, particularly for MLP and kNN. We therefore adopted dimensionality reduction for the main research phase.
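The paired t-test mentioned above compares two sets of scores measured under matched conditions, for example per-fold accuracy with and without reduction. The sketch below computes only the test statistic and degrees of freedom with the standard library; the accuracy values are made up to show the calculation and are not results from the study.

```python
import math
import statistics

def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees_of_freedom) for paired samples of equal length."""
    if len(scores_a) != len(scores_b):
        raise ValueError("paired samples must have the same length")
    differences = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(differences)
    mean_diff = statistics.mean(differences)
    std_diff = statistics.stdev(differences)  # sample standard deviation
    return mean_diff / (std_diff / math.sqrt(n)), n - 1

# Made-up per-fold accuracies, only to show the calculation.
with_lsa = [0.86, 0.88, 0.85, 0.87, 0.89]
without_lsa = [0.81, 0.84, 0.80, 0.83, 0.82]
t_value, dof = paired_t_statistic(with_lsa, without_lsa)
```

With 4 degrees of freedom, the two-sided critical value at the 5% level is about 2.776, so a statistic whose absolute value exceeds it would be called significant at that level. A statistics library such as SciPy can also return the exact p-value.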

Main Research: Gen-AI Augmented Dataset Impact on Classification Accuracy

With the reduced-dimensionality datasets in hand, we ran the principal experiments with the six machine learning models and the BoW method. Results on the original datasets without augmentation showed accuracy ranging from 82.28% (kNN) to 87.60% (RF) for two-class tasks, and slightly lower scores for three-class tasks.

Subsequently, we employed Gen-AI tools for text augmentation. The findings indicated a noticeable increase in accuracy for four of the six algorithms: MLP, RF, GBT, and kNN. In contrast, DT and NB showed decreased accuracy after augmentation.

Comparative Analysis of Results

The results for the original dataset and the augmented subsets differ markedly. As shown in Figure 3, the highest accuracy was achieved when ChatGPT and Copilot were used together for augmentation. This combination yielded the largest performance gains across multiple model configurations. In contrast, augmentation with Gemini had the least impact.

Hyperparameter Insights

Subsets augmented with ChatGPT and Copilot often pushed accuracy above 90%. As Table 6 indicates, the best-performing hyperparameters varied: for MLP, the number of iterations and the number of neurons affected the results, while RF achieved its best results with 100 to 850 models, depending on dataset size.
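A hyperparameter search of this kind can be sketched as an exhaustive loop over a grid. The source does not state which search strategy was used, so grid search is an assumption here. The parameter names, the grid values, and the `toy_evaluate` scoring function are also hypothetical stand-ins. In a real run, the scoring function would return cross-validated accuracy.

```python
from itertools import product

def grid_search(grid, evaluate):
    """Try every combination in `grid` and return (best_params, best_score)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical grid; the ranges actually used are listed in the study's tables.
mlp_grid = {"max_iterations": [200, 500, 1000], "hidden_neurons": [50, 100]}

def toy_evaluate(params):
    """Stand-in for cross-validated accuracy; simply rewards larger settings."""
    return params["max_iterations"] / 1000 + params["hidden_neurons"] / 1000

best_params, best_score = grid_search(mlp_grid, toy_evaluate)
```

The number of trained models grows with the product of the grid sizes, the number of algorithms, datasets, and folds, which is consistent with a study that reports thousands of trained models.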

Exploring sBERT Representations

In parallel, we also examined sBERT embeddings, which produce vectors with 384 dimensions. Interestingly, the accuracy achieved with sBERT generally lagged behind that of the BoW technique, with notable losses for the DT and NB algorithms in particular.

Augmented Data Impact Observations

Augmentation produced the largest accuracy improvements for kNN. For subsets augmented with ChatGPT and Copilot, kNN recorded gains exceeding 20%. Conversely, certain NB configurations performed worse after augmentation, with accuracy often declining.

Final Insights on Accuracy Metrics

Tables 9 and 10 present the final accuracy results from our experiments and show that the highest values were predominantly achieved with kNN. MLP models also achieved high accuracy, especially when trained with hyperparameters optimized for multiple iterations.

Conclusion

Our exploration of text augmentation reveals a clear interplay between traditional machine learning models and Gen-AI augmentation tools. The findings suggest that such augmentation can improve classification results for several classical models, although not for all of them. As the research landscape continues to evolve, understanding these dynamics will be crucial for future developments in machine learning methodologies.