Experimental Evaluation of CSMCR and Hybrid Deep Learning Models for IDS

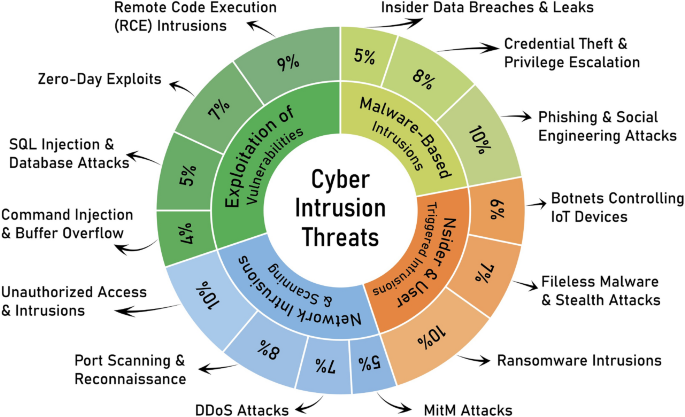

The performance of our proposed Cosine Similarity-based Majority Class Reduction (CSMCR) technique was evaluated on multiple Intrusion Detection System (IDS) datasets, namely IoTID20 [41], N-BaIoT [42], RT-IoT2022 [43], and UNSW Bot-IoT [44]. These datasets differ in their attack types and in their levels of class imbalance, which allows an in-depth analysis of how dataset balancing affects intrusion detection performance.

Impact of Class Ratio on Model Performance

A central focus of our evaluation was how model performance shifts across different minority-to-majority class ratios. We began with a perfectly balanced 1:1 ratio and then introduced progressively larger imbalances of 1:2, 1:3, and 1:4. Each subsection below examines the effect of the ratio on detection accuracy, F1-score, computational efficiency, and the generalization capability of the model.
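To make the ratio setup concrete, the following is a minimal sketch of how a 1:k minority-to-majority subset could be built. The function name `subsample_to_ratio` and the toy data are hypothetical, and random selection is used only as a stand-in: the study selects majority samples with CSMCR, not at random.

```python
import random

def subsample_to_ratio(majority, minority, k, seed=0):
    """Keep all minority samples and draw k * len(minority) majority samples.

    Random selection is a stand-in here (an assumption); the study selects
    majority samples with CSMCR rather than at random.
    """
    # Never request more majority samples than are available.
    target = min(len(majority), k * len(minority))
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    return rng.sample(list(majority), target), list(minority)

# Hypothetical toy data: 100 majority rows, 10 minority rows.
majority = [("normal", i) for i in range(100)]
minority = [("attack", i) for i in range(10)]

for k in (1, 2, 3, 4):
    maj_k, min_k = subsample_to_ratio(majority, minority, k)
    print(f"1:{k} -> {len(min_k)} minority, {len(maj_k)} majority")
```

The minority class is left untouched in every configuration, so only the amount of majority data changes between the 1:1 and 1:4 settings.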

Performance with a Minority-to-Majority Class Ratio of 1:1: Baseline Evaluation

At a 1:1 ratio, model training benefited from the equal representation of both classes. This balance removed bias toward the majority class and improved both recall and precision. Table 4 reports these findings: scores are near-perfect in some cases, with Accuracy and F1 both reported as 1, while Matthews correlation coefficient (MCC) values stay above 0.82 and Youden’s J scores exceed 0.85.
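For readers less familiar with MCC and Youden’s J, the sketch below computes both, together with accuracy and F1, from binary confusion-matrix counts using their standard definitions. The function name `binary_metrics` and the example counts are illustrative assumptions, not values taken from Table 4.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Standard binary metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # recall / true positive rate
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # true negative rate
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1_den = precision + sensitivity
    f1 = 2 * precision * sensitivity / f1_den if f1_den else 0.0
    # MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "f1": f1,
        "mcc": mcc,
        # Youden's J = sensitivity + specificity - 1
        "youden_j": sensitivity + specificity - 1.0,
    }

# Hypothetical counts for illustration only.
print(binary_metrics(tp=40, fp=10, tn=40, fn=10))
```

Unlike accuracy, MCC and Youden’s J both account for performance on the negative class, which is why they are informative when class proportions change.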

This setup prevented overfitting by ensuring that the model learned equally from both classes, and it also improved robustness and computational efficiency through faster convergence. The balanced dataset supported stable predictions across varying distributions and made model decisions easier to interpret.

Performance with a Minority-to-Majority Class Ratio of 1:2: Mild Imbalance

When the ratio was adjusted to 1:2, the mild imbalance began to challenge model performance. Table 5 indicates that accuracy and F1 scores initially remained stable, with N-BaIoT holding at near perfection (Accuracy = 0.9997, F1 = 0.9996), but subtle shifts suggested that a bias toward the majority class was beginning to emerge.

Processing time rose slightly, reflecting the early impact of imbalance on computational demands. Although underfitting was minimal at this stage, the model was already starting to favor majority instances, indicating that further imbalance may degrade performance.

Performance with a Minority-to-Majority Class Ratio of 1:3: Moderate Imbalance

Moving to a 1:3 ratio highlighted the challenges of moderate imbalance. Table 6 shows that accuracy and F1 scores remained commendable but declined noticeably, particularly for the IoTID20 dataset, where Accuracy fell from 0.9618 to 0.8980 and F1-score from 0.9430 to 0.8332. This shift indicated that the model was increasingly overfitting to the majority class, prioritizing its patterns while marginalizing the minority class.

The increased processing times further underscored the computational strain of handling skewed datasets. Concerns about classification reliability also grew, particularly given the rise in false negatives, which is indicative of stagnating model learning.

Performance with a Minority-to-Majority Class Ratio of 1:4: Significant Imbalance

At this most severe level of class imbalance, the model’s susceptibility to overfitting was pronounced. Table 7 shows a significant drop in sensitivity for IoTID20 (from 0.9522 to 0.8422), while precision improved marginally. This combination suggests that although fewer false positives occurred, critical instances were increasingly being overlooked.

This severe imbalance exposed the model’s diminishing generalization ability and reinforces why the 1:1 ratio is generally the most effective at mitigating the risks of large disparities in class representation.

Comparisons with Other Sampling Techniques

Our evaluation also compared the hybrid deep learning model, which integrates RegNet and FBNet, under several dataset balancing techniques: CSMCR, no balancing, SMOTE (Synthetic Minority Over-sampling Technique), and Random UnderSampling.

Table 8 presents the comparative performance metrics across datasets. SMOTE yielded robust results but incurred high computational cost and long processing times. In contrast, our proposed CSMCR technique balances efficiency and performance, with substantially lower execution time than SMOTE and superior classification metrics.

Performance of the Proposed Balancing Technique (CSMCR)

CSMCR is a balancing method that handles class imbalance by selectively reducing majority class samples based on cosine similarity. This strategy is designed to retain critical information and preserve the sample diversity needed for effective model training.
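The general idea of similarity-based majority reduction can be illustrated with the minimal sketch below. It is not the authors' implementation: the choice of the minority-class centroid as the reference vector, the `band` and `ratio` parameters, the preference for the most similar samples, and all function names are assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 0.0
    # Clamp to [-1, 1] to absorb floating-point rounding.
    return max(-1.0, min(1.0, dot / (nu * nv)))

def centroid(rows):
    """Column-wise mean of a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def reduce_majority(majority, minority, band=(0.0, 1.0), ratio=1):
    """Keep at most ratio * len(minority) majority samples inside a similarity band.

    Assumption: similarity is measured against the minority centroid, and the
    most similar in-band samples are kept first.
    """
    ref = centroid(minority)
    lo, hi = band
    scored = [(cosine(x, ref), i) for i, x in enumerate(majority)]
    in_band = [(s, i) for s, i in scored if lo <= s <= hi]
    in_band.sort(key=lambda t: (-t[0], t[1]))  # highest similarity first, ties by index
    keep = sorted(i for _, i in in_band[: ratio * len(minority)])
    return [majority[i] for i in keep]  # original order preserved

# Hypothetical toy vectors.
majority = [[1, 0], [0, 1], [1, 1], [2, 2]]
minority = [[1, 1]]
print(reduce_majority(majority, minority, band=(0.5, 0.9), ratio=2))
```

Because no synthetic samples are generated, the cost is one similarity computation per majority sample, which is consistent with the lower balancing time reported relative to oversampling.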

Results from various datasets, including RT-IoT2022 and UNSW Bot-IoT, demonstrated the advantages of CSMCR: it yields effective classification (Accuracy of 0.9836 and MCC of 0.9673 on RT-IoT2022) without the heavy computational burden often seen with traditional oversampling methods.

Sensitivity Analysis of Similarity Threshold Ranges

We also conducted a sensitivity analysis of how the cosine similarity threshold range influences model performance. The analysis revealed that lower similarity bands gave the best F1 scores but offered limited practical utility because they under-represented normal samples. As similarity ranges were widened to capture more samples, performance remained consistent but runtime increased markedly, highlighting the need for balanced parameter tuning.

Scalability and Feasibility in IoT Settings

CSMCR is engineered to be scalable and feasible for real-time applications, especially in resource-constrained IoT environments. It accomplishes this through several mechanisms:

- Reduced Runtime: Achieving up to 99% reduction in dataset balancing time compared to SMOTE.

- Memory Efficiency: Minimizing the memory footprint, which is essential for devices with limited resources.

- Low Computational Overhead: The cosine similarity computation scales linearly with the number of majority samples considered.

- Real-time Deployment Readiness: Faster convergence on CSMCR-processed data improves inference speed and reduces energy consumption during predictions.
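The linear-scaling and memory points above can be illustrated with a single-pass, streaming filter. This is a sketch under stated assumptions rather than the deployed method: the fixed reference vector, the band limits, and the name `stream_filter` are all hypothetical.

```python
import math

def stream_filter(samples, reference, lo, hi, limit):
    """Yield up to `limit` samples whose cosine similarity to `reference` is in [lo, hi].

    Each sample is visited once and only the current sample is held in memory,
    so work grows linearly with the number of majority samples seen.
    """
    ref_norm = math.sqrt(sum(r * r for r in reference))
    if ref_norm == 0.0:
        return  # no direction to compare against
    kept = 0
    for x in samples:
        if kept >= limit:
            return  # stop early once enough samples are kept
        x_norm = math.sqrt(sum(a * a for a in x))
        if x_norm == 0.0:
            continue  # cosine similarity is undefined for a zero vector
        sim = sum(a * r for a, r in zip(x, reference)) / (x_norm * ref_norm)
        if lo <= sim <= hi:
            kept += 1
            yield x

# Hypothetical stream of majority-class feature vectors.
stream = iter([[1, 0], [1, 1], [0, 1], [3, 3]])
print(list(stream_filter(stream, reference=[1, 1], lo=0.5, hi=0.9, limit=5)))
```

Because the input can be any iterable, the same function works on records read incrementally from a file or socket, which matters on devices that cannot hold the full majority class in memory.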

Comparative Review of Recent Studies

Finally, we situated our proposed method within the broader context of recent IoT intrusion detection research on imbalanced datasets. Performance metrics (accuracy, precision, recall, and F1-score) across the benchmark datasets show that our method is competitive and often surpasses competing models, notably on the precision-reliant N-BaIoT dataset.

Taken together, these evaluations support CSMCR as an effective solution to the challenges that class imbalance poses for intrusion detection systems.